Introduction

In this exciting meeting of technology and creative ability, artificial intelligence (AI) has brought new life to image production and is changing our notions of creativity. This blog, “Artificial Intelligence and the Aesthetics of Image Generation,” looks into the technical side of AI-powered artistic expression, such as Neural Style Transfer and Generative Adversarial Networks (GANs). As pixels and algorithms converge, the interplay between mathematical precision and aesthetic appeal becomes clear. Let’s explore this connection and rethink what it means to be an artist in an era when artificial intelligence and human vision collaborate to push the boundaries of creativity.

Learning Objectives

- You will learn about some methodologies used for image generation.

- You will understand how important the integration of creativity and technology is.

- We will examine the visual quality of AI-generated art.

- You will learn about the impact of AI on creativity.

This article was published as a part of the Data Science Blogathon.

Evolution of Image Generation

Human hands and creativity shaped the origins of image generation. Artists meticulously created visual representations with brushes, pencils, and other materials. With the arrival of the digital era, computers began to play a larger role in this arena. Early computer graphics were basic and pixelated, and they lacked the elegance of the human touch. The visuals improved as the algorithms did, but they were still bound by the fixed rules their programmers had written.

Artificial intelligence is now at its peak. The field advanced significantly with progress in deep learning and neural networks, especially with the development of Generative Adversarial Networks (GANs).

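AI has evolved from a tool into a partner. Because they train two competing networks (a generator that creates images and a discriminator that tries to tell them apart from real ones), GANs began to produce images that were sometimes indistinguishable from photographs.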
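For reference, the adversarial game in the original GAN formulation (Goodfellow et al., 2014) can be written as a minimax objective. A generator $G$ maps random noise $z \sim p_z$ to an image, and a discriminator $D$ outputs the probability that its input is a real image drawn from the data distribution $p_{\text{data}}$:

$$
\min_G \max_D \; V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
$$

$D$ is trained to increase $V$ by telling real images from generated ones, while $G$ is trained to decrease it by fooling $D$. In the idealised analysis of the original paper, the game reaches its optimum when the generator’s distribution equals the data distribution.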

Using Creative AI to Investigate Styles and Genres

Creative AI is a tool that can help us explore different styles and genres in art, music, and writing. Imagine having a computer program that can analyze famous paintings and create new artwork that integrates different styles.

In the world of visual arts, Creative AI is like a digital painter that can generate images in multiple styles. Think of a computer program that has looked at thousands of pictures, from classical portraits to modern abstract art. After learning from these, the AI can create new images that integrate different styles or even invent styles.

For example, you can generate images that combine realistic textures with imaginative characters. This lets artists and designers experiment with new ideas and develop characters and designs that might not otherwise have occurred to them.
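Neural Style Transfer, named in the introduction, is one concrete way to “integrate different styles.” In the approach of Gatys et al., the style of an image is summarised by Gram matrices of a convolutional network’s feature maps: which feature channels fire together, regardless of where. The minimal NumPy sketch below assumes the feature maps have already been computed; random arrays stand in for real CNN activations, and the normalisation used is one simple choice among several.

```python
import numpy as np


def gram_matrix(features: np.ndarray) -> np.ndarray:
    """Gram matrix of a (channels, height, width) feature map.

    Entry (i, j) is the correlation between channels i and j, summed over
    all spatial positions and divided by the number of positions.
    """
    c, h, w = features.shape
    flat = features.reshape(c, h * w)  # one row per channel
    return flat @ flat.T / (h * w)


def style_loss(generated: np.ndarray, style: np.ndarray) -> float:
    """Mean squared difference between the two images' Gram matrices."""
    diff = gram_matrix(generated) - gram_matrix(style)
    return float(np.mean(diff ** 2))


# Hypothetical stand-ins for CNN activations: 8 channels on a 16 x 16 grid.
rng = np.random.default_rng(0)
style_features = rng.standard_normal((8, 16, 16))
generated_features = rng.standard_normal((8, 16, 16))

print(style_loss(style_features, style_features))          # 0.0
print(style_loss(generated_features, style_features) > 0)  # True

# Shuffling spatial positions (same permutation for every channel) leaves the
# Gram matrix unchanged: it records which features co-occur, not where.
perm = rng.permutation(16 * 16)
shuffled = style_features.reshape(8, -1)[:, perm].reshape(8, 16, 16)
print(np.allclose(gram_matrix(shuffled), gram_matrix(style_features)))  # True
```

In a full style-transfer pipeline, this style loss is combined with a content loss and minimised with respect to the pixels of the generated image, so the result keeps one image’s layout while adopting another’s texture statistics.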

Considerations for Ethical Issues

- Giving Credit to Original Artists: A key consideration is crediting the artists whose work inspired the AI. If an AI creates something resembling a famous painting, the original artist should be credited.

- Ownership and Copyright: Who owns the art created by the AI? Is it the person who programmed the AI, or do the artists who inspired the AI share ownership? Clear answers to these questions are needed to avoid conflicts.

- Bias in AI: AI may prefer certain styles or cultures when creating art. This can be unfair and should be carefully considered to protect all art forms.

- Accessibility: If only a few people have access to new AI tools, that is unfair to others who could also use them productively.

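- Data Privacy: When an AI studies art to learn how to create its own, it typically needs large numbers of images and associated data, which may include personal or copyrighted material collected without the owner’s consent.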

- Emotional Impact: If an AI creates art similar to human-made art, the emotional value of the original work may be neglected.

Like many other intersections of technology and tradition, the intersection of AI and art is both exciting and challenging. Addressing these ethical concerns helps ensure that progress stays aligned with shared values and remains inclusive.

Methodologies for Creating Images

Image creation has changed dramatically, particularly with the development of computational approaches and deep learning. The following are some of the major techniques that have shaped this evolution:

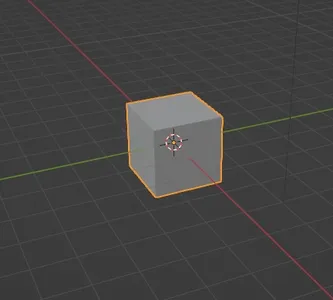

- Rendering and 3D Modeling: Three-dimensional objects and scenes are created digitally, and the models are then rendered as 2D images or animations. Software such as Blender, Maya, and ZBrush makes this possible.

```python
"""
This Blender script initializes a scene containing a cube, positions a virtual
camera and a sun light, and then renders the setup to a Full HD image.
"""
import math

import bpy

# Start from a clean, empty scene
bpy.ops.wm.read_factory_settings(use_empty=True)
scene = bpy.context.scene

# Set the render resolution (Full HD)
scene.render.resolution_x = 1920
scene.render.resolution_y = 1080

# Create a 2 x 2 x 2 cube resting on the ground plane
bpy.ops.mesh.primitive_cube_add(size=2, enter_editmode=False, align='WORLD', location=(0, 0, 1))

# Add a camera. A Blender camera looks along its local -Z axis, so tilt it
# about X (about 84 degrees) so that it faces the cube instead of the ground.
bpy.ops.object.camera_add(location=(0, -10, 2), rotation=(math.radians(84), 0, 0))
camera = bpy.context.active_object
camera.data.lens = 100  # 100 mm focal length (telephoto)
scene.camera = camera   # an empty scene has no active camera to render from

# Add a sun light above the cube
bpy.ops.object.light_add(type='SUN', align='WORLD', location=(0, 0, 5))

# Render the scene and write the result to disk
output_path = "/Users/ananya/Desktop/first.png"  # Replace with your desired path
scene.render.filepath = output_path
bpy.ops.render.render(write_still=True)
```

Blender Image:

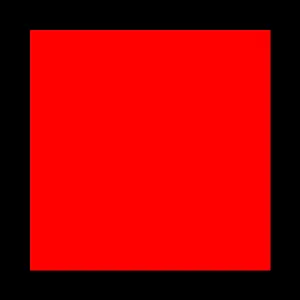

- Raster Images: A raster image is a grid (array) of pixels, each of which stores a color value. Adobe Photoshop, for example, works with raster graphics.

```python
"""
This script uses the Pillow (PIL) library to create a 500 x 500 pixel raster
image containing a red rectangle, then saves a cropped copy that shows only
the central part of the rectangle.
"""
from PIL import Image, ImageDraw

# Step 1: Create a new blank image (white background)
width, height = 500, 500
img = Image.new('RGB', (width, height), color="white")

# Step 2: Draw a red rectangle on the image
draw = ImageDraw.Draw(img)
draw.rectangle([50, 50, 450, 450], fill="red")

# Step 3: Save the image
img.save('raster_image.png')

# Step 4: Reopen the saved image and crop it to the box (left, top, right, bottom)
img_opened = Image.open('raster_image.png')
cropped_img = img_opened.crop((100, 100, 400, 400))
cropped_img.save('cropped_raster_image.png')

# This produces two images: one with a red rectangle and a 300 x 300 cropped version.
```

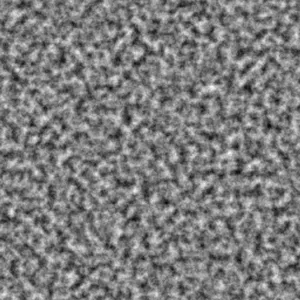

- Procedural Design: Procedural design creates images, backgrounds, or even whole scenes by following computational rules. The computer executes a set of instructions to generate different kinds of visuals. This is very useful in video games, for example for automatically creating mountains, forests, or skies in the background. Instead of crafting each part by hand, designers can build these scenes quickly and automatically.

```python
"""
This script creates a 512 x 512 grayscale texture from Perlin noise, a common
procedural-generation technique, and saves it as 'procedural_perlin_noise.png'.
"""
import numpy as np
from noise import pnoise2
from PIL import Image

# Constants
WIDTH, HEIGHT = 512, 512
OCTAVES = 6       # number of noise layers combined (more layers = finer detail)
FREQUENCY = 16.0  # coordinates are divided by this, so larger values give larger features


def generate_perlin_noise(width, height, frequency=16.0, octaves=6):
    """Generate a 2D texture of Perlin noise as an 8-bit grayscale array."""
    noise_data = np.zeros((height, width))
    for y in range(height):
        for x in range(width):
            noise_data[y][x] = pnoise2(x / frequency, y / frequency, octaves=octaves)

    # Normalize the noise values to the 0-255 range
    noise_data = ((noise_data - np.min(noise_data)) /
                  (np.max(noise_data) - np.min(noise_data))) * 255
    return noise_data.astype(np.uint8)


# Generate the Perlin noise
noise_data = generate_perlin_noise(WIDTH, HEIGHT, FREQUENCY, OCTAVES)

# Convert to an image and save it (a 2D uint8 array becomes a grayscale 'L' image)
image = Image.fromarray(noise_data)
image.save('procedural_perlin_noise.png')
```

The Value of Training Data

Machine learning and artificial intelligence models need training data. It is the foundation on which these systems build their understanding and capabilities. The quality, quantity, and variety of training data directly affect a model’s accuracy, dependability, and fairness. Poor or biased data can lead to incorrect, unexpected, or discriminatory outputs, while well-curated data helps the model generalize to real-world settings. Training data is critical both for an AI system’s technical performance and for its ethical and social implications. The adage “garbage in, garbage out” is especially relevant here: a model’s output can only be as sound as the data it was trained on.

Difficulties and Limitations

- Consistency and Quality: Data quality is critical because noisy or inconsistent data can jeopardize model accuracy. Furthermore, assembling a comprehensive and diverse dataset is an inherent challenge.

- Bias and Representation: Unintentional biases in the data can cause models to reinforce societal preconceptions, and imbalances in how groups are represented in a dataset make fair AI outputs harder to achieve.

- Privacy and Annotation: Data preparation and use raise privacy concerns. Furthermore, the time-consuming work of data annotation complicates the AI training process.

- Evolving Nature and Overfitting: Real-world data keeps changing, so older datasets can become outdated. Additionally, there is a persistent risk of models overfitting to specific datasets, which reduces their ability to generalize.
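Two of these problems, inconsistent labels and unbalanced representation, can be checked before training with a few lines of code. The sketch below is a minimal, hypothetical audit: the `(image_id, style label)` records and the 20% minimum-share threshold are made-up assumptions for illustration, not a standard rule.

```python
from collections import Counter, defaultdict


def find_label_conflicts(records):
    """Return items that appear more than once with different labels."""
    labels_by_item = defaultdict(set)
    for item_id, label in records:
        labels_by_item[item_id].add(label)
    return {item: sorted(labels)
            for item, labels in labels_by_item.items() if len(labels) > 1}


def find_underrepresented(records, min_share=0.2):
    """Return labels whose share of the dataset falls below min_share.

    The default threshold of 20% is an arbitrary, hypothetical choice.
    """
    counts = Counter(label for _, label in records)
    total = sum(counts.values())
    return {label: n / total for label, n in counts.items() if n / total < min_share}


# Hypothetical training records: (image_id, style label) pairs.
records = [
    ("img_001", "impressionism"),
    ("img_002", "impressionism"),
    ("img_003", "impressionism"),
    ("img_004", "cubism"),
    ("img_005", "impressionism"),
    ("img_006", "ukiyo-e"),
    ("img_003", "cubism"),  # same image, conflicting label
    ("img_007", "impressionism"),
]

print(find_label_conflicts(records))   # {'img_003': ['cubism', 'impressionism']}
print(find_underrepresented(records))  # {'ukiyo-e': 0.125}
```

Checks like these do not fix a dataset, but they surface problems (a mislabeled duplicate, a style with only one example) early, before they are baked into a model.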

Prospects for the Future

- Enhanced Performance and Transparency: AI models are likely to become more accurate and more transparent, making it easier for everyone to understand how they work. More models may also be released as open source, allowing users to inspect and improve them.

- Revolution in Quantum Computing: Quantum computing is still in its early stages of development, but it may eventually deliver significant speedups for certain data-processing tasks.

- Efficient Training Techniques: Transfer learning and few-shot learning methods continue to mature, and they could reduce the need for large training datasets.

- Ethical Evolution: Despite the ongoing debate about whether AI could threaten humanity, the number of AI-powered tools and technologies will likely keep growing.
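To make the few-shot idea more concrete, here is a minimal nearest-prototype classifier in the spirit of prototypical networks (Snell et al., 2017): each class is represented by the mean of a handful of labeled embeddings, and a new example receives the label of the closest mean. It assumes the embeddings come from a model pretrained on a large dataset (the transfer-learning part); the 2-D vectors and style names below are made-up toy values, not real model outputs.

```python
import numpy as np


def fit_prototypes(support_embeddings, support_labels):
    """Average the few labeled embeddings of each class into one prototype."""
    labels = sorted(set(support_labels))
    label_array = np.array(support_labels)
    prototypes = np.stack(
        [support_embeddings[label_array == lab].mean(axis=0) for lab in labels]
    )
    return labels, prototypes


def predict(query_embeddings, labels, prototypes):
    """Assign each query the label of its nearest prototype (Euclidean distance)."""
    # distances has shape (num_queries, num_classes)
    distances = np.linalg.norm(
        query_embeddings[:, None, :] - prototypes[None, :, :], axis=-1
    )
    return [labels[i] for i in distances.argmin(axis=1)]


# Hypothetical embeddings: three examples ("shots") per style, 2-D for readability.
support = np.array([
    [0.9, 0.1], [1.0, 0.2], [0.8, 0.0],  # "sketch"
    [0.1, 0.9], [0.0, 1.1], [0.2, 1.0],  # "watercolor"
])
support_labels = ["sketch"] * 3 + ["watercolor"] * 3

labels, prototypes = fit_prototypes(support, support_labels)
queries = np.array([[0.95, 0.05], [0.1, 1.2]])
print(predict(queries, labels, prototypes))  # ['sketch', 'watercolor']
```

Only three labeled examples per class are needed here because the heavy lifting (learning a useful embedding space) is assumed to have happened during pretraining.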

Conclusion

Today’s issues, such as data limitations and ethical concerns, drive tomorrow’s solutions. As algorithms become more complex and applications more widespread, a symbiotic relationship between technology and human oversight becomes increasingly important. The future promises smarter, more integrated AI systems that improve efficiency while respecting the complexities and values of human society. With careful management and collaborative effort, AI’s potential to transform our world is enormous.

Key Takeaways

- AI and machine learning are having a far-reaching impact on many industries, changing how we work and live.

- Ethical concerns and data challenges are central to the AI story.

- The future of artificial intelligence promises not only increased efficiency but also systems that are sensitive to human values and cultural differences.

- Collaboration between technology and human oversight is critical for realizing AI’s promise ethically and successfully.

Frequently Asked Questions

A. AI is changing industries such as healthcare and entertainment by automating tasks, generating insights, and improving user experiences.

A. Addressing ethical concerns helps ensure that AI systems are fair and unbiased and do not inadvertently harm or discriminate against specific individuals or groups.

A. AI systems will become more powerful and integrated in the future, allowing them to adapt to a broad spectrum of applications while emphasizing transparency, ethics, and human engagement.

A. Data is the underlying backbone of AI, providing the necessary knowledge for models to learn, adapt, and make intelligent decisions. Data quality and representation are critical for AI output success.

The media shown in this article is not owned by Analytics Vidhya and is used at the Author’s discretion.