Generating talking faces is one of the most notable recent advances in artificial intelligence (AI), a field that has improved enormously in recent years. AI algorithms are used to create realistic talking faces for a variety of applications, including virtual assistants, gaming, and social media. Generating a talking face is a challenging process that requires advanced algorithms to accurately reproduce the nuances of human speech and facial expressions.

The history of talking face generation can be traced back to the early days of computer animation, when researchers first began experimenting with computer graphics to create realistic human features. However, the technology only started to take off with the development of deep learning and neural networks. Today, researchers combine techniques such as machine learning, computer vision, and natural language processing to build more expressive and realistic talking faces.

Talking face generation technology is still in its infancy, with numerous limitations and pitfalls yet to be resolved.

Recent developments in AI research have begun to address some of these challenges, producing a variety of deep learning techniques that generate rich and expressive talking faces.

The most widely adopted architecture comprises two stages. In the first stage, an intermediate representation is predicted from the input audio, such as 2D facial landmarks or blendshape coefficients (weights used in computer graphics to control the shape and expression of 3D facial models). In the second stage, a renderer synthesizes the video portraits from this predicted representation.
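To make the idea of blendshape coefficients concrete, the sketch below assumes the common linear "delta" formulation: each vertex of the face mesh is its neutral position plus a weighted sum of offsets toward a set of target shapes (for example, "jaw open"). The mesh is stored as plain lists of (x, y, z) vertices, and the function name `apply_blendshapes` is hypothetical; the article does not specify which face model the summarized work uses.

```python
from typing import List, Sequence

Vertex = List[float]  # one (x, y, z) position


def apply_blendshapes(
    neutral: Sequence[Vertex],
    targets: Sequence[Sequence[Vertex]],
    weights: Sequence[float],
) -> List[Vertex]:
    """Deform a neutral mesh with a linear (delta) blendshape model.

    Each output vertex is: neutral + sum_i weights[i] * (targets[i] - neutral).
    """
    if len(targets) != len(weights):
        raise ValueError("one weight per blendshape target is required")
    result = []
    for v_idx, base in enumerate(neutral):
        vertex = list(base)  # start from the neutral position
        for target, w in zip(targets, weights):
            for axis in range(3):
                # Move the vertex toward the target shape by weight w.
                vertex[axis] += w * (target[v_idx][axis] - base[axis])
        result.append(vertex)
    return result


# Hypothetical two-vertex "mesh" with a single "jaw open" target.
neutral = [[0.0, 0.0, 0.0], [1.0, 0.0, 0.0]]
jaw_open = [[0.0, -1.0, 0.0], [1.0, 0.0, 0.0]]
print(apply_blendshapes(neutral, [jaw_open], [0.5]))
```

A weight of 0.5 moves the first vertex halfway toward the "jaw open" pose, while the second vertex, which the target does not move, stays where it is. Predicting such a small vector of weights from audio is much easier than predicting pixels directly, which is one reason intermediate representations like this are popular.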

Most of these techniques learn a deterministic one-to-one mapping from the input audio to a video, even though talking face generation is essentially a one-to-many mapping problem. Because of many context variables, such as phonetic context, emotion, and lighting conditions, a single input audio clip admits several plausible visual representations of the target person. Learning a deterministic mapping therefore introduces ambiguity during training, which makes it harder to produce realistic visual results.
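A toy example shows why a deterministic mapping struggles here. Assume one audio frame is compatible with two equally valid mouth openings, represented by hypothetical normalized values 0.0 and 1.0. A model trained with mean squared error (MSE), a common regression loss chosen here for illustration, is pushed toward the average of the valid targets, which matches neither of them.

```python
from statistics import mean
from typing import Sequence


def mse(prediction: float, targets: Sequence[float]) -> float:
    """Mean squared error of one prediction against several valid targets."""
    return mean((prediction - t) ** 2 for t in targets)


def best_deterministic_prediction(targets: Sequence[float]) -> float:
    """The single value that minimizes MSE over all targets is their mean."""
    return mean(targets)


# Hypothetical mouth-opening values, both plausible for the same audio
# frame (for example, a neutral and an emphatic delivery).
valid_openings = [0.0, 1.0]
prediction = best_deterministic_prediction(valid_openings)
print(prediction)                       # 0.5
print(mse(prediction, valid_openings))  # 0.25
print(mse(0.0, valid_openings))         # 0.5
```

The averaged prediction scores a lower loss (0.25) than either valid answer (0.5), yet it corresponds to a half-open mouth that never occurs in the data. In images, this averaging typically shows up as blurry or muted mouth regions.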

The objective of the work presented in this article is to generate talking faces while taking these context variables into account.

The architecture is presented in the following figure.

The inputs consist of audio features and a template video of the target person. For the template video, it is good practice to mask the face region.
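Masking the template frames can be sketched as blanking a rectangular region. The sketch assumes a grayscale frame stored as a list of pixel rows and a face (or mouth) bounding box already obtained from some face detector; the box format, the fill value, and the name `mask_face_region` are hypothetical, and the exact region masked in the summarized work may differ.

```python
from typing import List, Tuple

Frame = List[List[int]]          # grayscale image, indexed frame[row][col]
Box = Tuple[int, int, int, int]  # (top, left, bottom, right); bottom/right exclusive


def mask_face_region(frame: Frame, box: Box, fill: int = 0) -> Frame:
    """Return a copy of `frame` with every pixel inside `box` set to `fill`."""
    top, left, bottom, right = box
    masked = [row[:] for row in frame]  # copy so the original frame is untouched
    # Clamp the box to the frame so out-of-range coordinates are ignored.
    for r in range(max(top, 0), min(bottom, len(frame))):
        for c in range(max(left, 0), min(right, len(frame[r]))):
            masked[r][c] = fill
    return masked


# Hypothetical 4x4 frame; mask the lower half, where the mouth would be.
frame = [[9] * 4 for _ in range(4)]
masked = mask_face_region(frame, (2, 0, 4, 4))
```

Masking forces the renderer to take the mouth appearance from the audio-driven guidance rather than copying it from the template, while the unmasked pixels still provide identity, background, and lighting.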

First, the audio-to-expression model takes the extracted audio features and predicts the mouth-related expression coefficients. These coefficients are then merged with the original shape and pose coefficients extracted from the template video, and the result guides the generation of an image with the expected characteristics.
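The merging step can be sketched as overwriting only the mouth-related entries of the template's expression coefficients, while leaving its shape and pose coefficients untouched. This assumes a 3D morphable model style split into shape, expression, and pose vectors, and that the mouth-related expression coefficients can be identified by index; `FaceCoefficients`, `merge_coefficients`, and `mouth_indices` are hypothetical names, not the paper's API.

```python
from dataclasses import dataclass, replace
from typing import List, Sequence


@dataclass(frozen=True)
class FaceCoefficients:
    shape: List[float]       # identity coefficients from the template video
    expression: List[float]  # facial expression coefficients
    pose: List[float]        # head rotation and translation


def merge_coefficients(
    template: FaceCoefficients,
    predicted_mouth: Sequence[float],
    mouth_indices: Sequence[int],
) -> FaceCoefficients:
    """Replace the mouth-related expression entries with audio-driven predictions.

    Shape and pose are kept from the template, so identity and head motion
    follow the template video while the mouth follows the audio.
    """
    if len(predicted_mouth) != len(mouth_indices):
        raise ValueError("one predicted value per mouth-related index is required")
    expression = list(template.expression)  # copy; the template stays unchanged
    for idx, value in zip(mouth_indices, predicted_mouth):
        expression[idx] = value
    return replace(template, expression=expression)


# Hypothetical coefficients for one template frame.
template = FaceCoefficients(shape=[1.0], expression=[0.0, 0.0, 0.0], pose=[0.1])
merged = merge_coefficients(template, predicted_mouth=[0.7], mouth_indices=[1])
```

The merged coefficients would then be rendered into the guidance image that the next stage consumes.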

The neural rendering model then takes the generated image and the masked template video to produce the final results, whose mouth shape matches that of the generated image. In this way, the audio-to-expression model is responsible for lip-sync quality, while the neural rendering model is responsible for rendering quality.
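The division of labor between the two stages can be sketched as a frame-by-frame composition of interchangeable components. In this sketch every stage is passed in as a plain function (all names are hypothetical placeholders for trained models), which makes explicit that the first stage alone decides the mouth shape and the second stage alone decides the pixel appearance.

```python
from typing import Any, Callable, List, Sequence


def generate_talking_face(
    audio_features: Sequence[Any],
    template_coefficients: Sequence[Any],
    masked_frames: Sequence[Any],
    audio_to_expression: Callable[[Any], Any],
    merge: Callable[[Any, Any], Any],
    render_guide: Callable[[Any], Any],
    neural_renderer: Callable[[Any, Any], Any],
) -> List[Any]:
    """Compose the two stages frame by frame.

    Stage 1 (audio_to_expression) fixes the mouth shape, so it governs lip sync.
    Stage 2 (neural_renderer) fixes pixel appearance, so it governs rendering quality.
    """
    if not (len(audio_features) == len(template_coefficients) == len(masked_frames)):
        raise ValueError("inputs must have one entry per video frame")
    frames = []
    for audio, coeffs, masked in zip(audio_features, template_coefficients, masked_frames):
        expression = audio_to_expression(audio)        # stage 1: audio -> expression
        merged = merge(coeffs, expression)             # keep template shape and pose
        guide = render_guide(merged)                   # image with the expected mouth shape
        frames.append(neural_renderer(guide, masked))  # stage 2: final frame
    return frames


# Toy stand-ins on numbers, only to show the data flow.
result = generate_talking_face(
    [1, 2], [10, 20], [100, 200],
    audio_to_expression=lambda a: a * 2,
    merge=lambda c, e: c + e,
    render_guide=lambda m: m,
    neural_renderer=lambda g, m: g + m,
)
```

Because each stage is trained and swapped independently in this design, an error in one stage cannot be corrected by the other, which is the limitation the next paragraph addresses.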

However, this two-stage framework alone does not resolve the one-to-many mapping problem, because each stage is optimized separately and must predict information that is missing from its input, such as habits and wrinkles. To address this, the architecture uses two memories, an implicit memory and an explicit memory, with attention mechanisms that jointly complement the missing information. According to the authors, a single memory would have been too challenging to use, since the audio-to-expression model and the neural rendering model play different roles in generating talking faces: the audio-to-expression model produces semantically aligned expressions from the input audio, while the neural rendering model produces the pixel-level visual appearance from the estimated expressions.
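The article does not detail how the two memories are queried, so the following is only a generic sketch of an attention-based memory read, not the authors' implementation. It assumes a key-value memory read with scaled dot-product attention: a query (for example, features of the current input) is compared with every stored key, the softmax of the scores weights the stored values, and the retrieved vector is added back to the query as a residual update to "complement" it. The memory contents, the residual update, and all function names are hypothetical.

```python
import math
from typing import List, Sequence

Vector = List[float]


def softmax(scores: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def read_memory(query: Sequence[float],
                keys: Sequence[Sequence[float]],
                values: Sequence[Sequence[float]]) -> Vector:
    """Scaled dot-product attention over a key-value memory."""
    if not keys or len(keys) != len(values):
        raise ValueError("memory needs the same non-zero number of keys and values")
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    # Weighted sum of the stored values, one output component at a time.
    return [sum(w * v[j] for w, v in zip(weights, values)) for j in range(dim)]


def complement(query: Sequence[float],
               keys: Sequence[Sequence[float]],
               values: Sequence[Sequence[float]]) -> Vector:
    """Add the retrieved memory content to the query (assumes equal dimensions)."""
    retrieved = read_memory(query, keys, values)
    return [q + r for q, r in zip(query, retrieved)]


# Hypothetical two-slot memory.
keys = [[10.0, 0.0], [0.0, 10.0]]
values = [[1.0, 0.0], [0.0, 1.0]]
retrieved = read_memory([1.0, 0.0], keys, values)
updated = complement([1.0, 0.0], keys, values)
```

Here the query matches the first key far better than the second, so the read returns almost exactly the first stored value. Because the read is a soft weighted sum rather than a hard lookup, it is differentiable and can be trained end to end together with the model that produces the queries.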

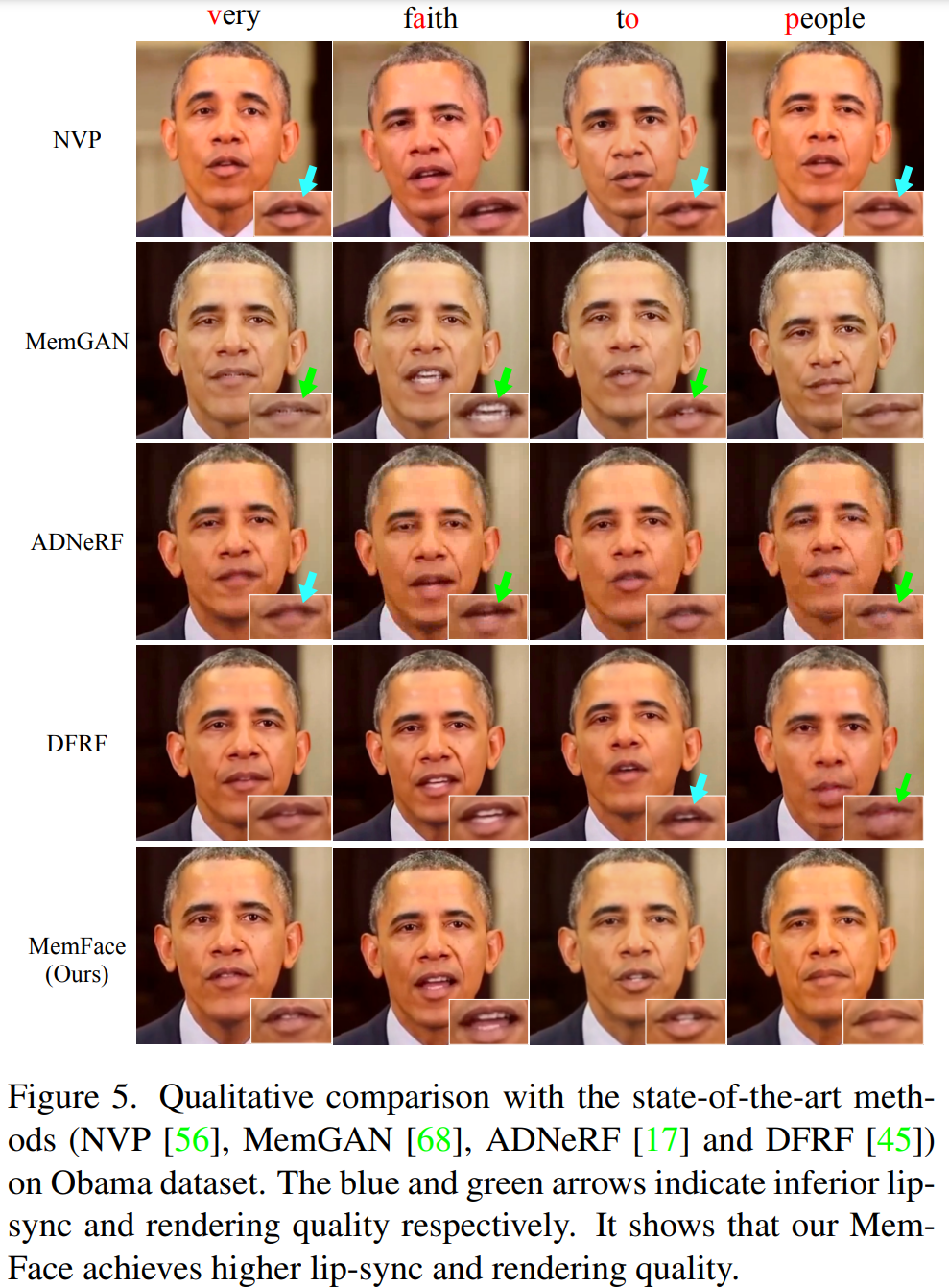

The results produced by the proposed framework are compared with state-of-the-art approaches, mainly in terms of lip-sync quality. Some samples are reported in the following figure.

This was a summary of a novel framework that uses memories to alleviate the one-to-many mapping problem in talking face generation. If you are interested, you can find more information in the following links.

Check out the Paper and Project. All credit for this research goes to the researchers of this project.

Daniele Lorenzi received his M.Sc. in ICT for Internet and Multimedia Engineering in 2021 from the University of Padua, Italy. He is a Ph.D. candidate at the Institute of Information Technology (ITEC) at the Alpen-Adria-Universität (AAU) Klagenfurt. He currently works at the Christian Doppler ATHENA Laboratory and his research interests include adaptive video streaming, immersive media, machine learning and QoS / QoE evaluation.
