Arcee AI has announced the launch of DistillKit, an open-source tool designed to simplify the creation and distribution of small language models (SLMs). This release aligns with Arcee AI's ongoing mission to make AI more accessible and efficient for researchers, users, and businesses looking for open-source, easy-to-use distillation tools.

Introduction to DistillKit

DistillKit is an open-source project focused on model distillation, a process that transfers knowledge from large, resource-intensive models to smaller, more efficient ones. The tool aims to make advanced AI capabilities available to a wider audience by significantly reducing the computational resources required to run these models.

The main objective of DistillKit is to create smaller models that retain much of the power and sophistication of their larger counterparts while being optimized for less powerful hardware, such as laptops and smartphones. This approach broadens access to advanced AI and promotes energy efficiency and cost savings in AI deployment.

Distillation Methods in DistillKit

DistillKit employs two main methods for knowledge transfer: logit-based distillation and hidden state-based distillation.

- Logit-based distillation: This method involves the teacher model (the larger model) providing its output logits, which encode a probability distribution over possible outputs, to the student model (the smaller model). The student model learns not only the correct answers but also the teacher model's confidence levels in its predictions. This technique improves the student model's ability to generalize by having it mimic the teacher model's output distribution.

- Hidden state-based distillation: In this approach, the student model is trained to replicate the intermediate representations (hidden states) of the teacher model. By aligning its internal processing with the teacher model, the student model gains a deeper understanding of the data. This method is useful for cross-architecture distillation, as it allows knowledge transfer between models that use different tokenizers.
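
The logit-based method described above can be illustrated with a minimal sketch. This is not DistillKit's actual code: the function names, the temperature of 2.0, and the `alpha` weighting are assumptions chosen for illustration, and the example assumes the teacher and student share a vocabulary so their logits line up position by position. It blends a standard cross-entropy term on the correct answer with a KL-divergence term that pulls the student's softened distribution toward the teacher's.

```python
import math

def softmax(logits, temperature=1.0):
    """Convert raw logits to probabilities, softened by a temperature."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def kl_divergence(p, q):
    """KL(p || q) for two discrete distributions over the same vocabulary."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

def logit_distillation_loss(student_logits, teacher_logits, target_index,
                            temperature=2.0, alpha=0.5):
    """Blend hard-label cross-entropy with a soft-label KL term.

    temperature and alpha are illustrative hyperparameters, not values
    taken from DistillKit.
    """
    # Hard-label term: cross-entropy against the correct token.
    student_probs = softmax(student_logits)
    ce = -math.log(student_probs[target_index])

    # Soft-label term: match the teacher's temperature-softened distribution.
    teacher_soft = softmax(teacher_logits, temperature)
    student_soft = softmax(student_logits, temperature)
    # Scaling by temperature**2 keeps the soft term's gradients comparable
    # in magnitude to the hard term, a common convention.
    kd = kl_divergence(teacher_soft, student_soft) * temperature ** 2

    return alpha * kd + (1.0 - alpha) * ce

# Toy example: a three-token vocabulary where token 0 is correct.
teacher = [4.0, 1.0, 0.5]
student = [2.0, 1.5, 0.2]
print(f"{logit_distillation_loss(student, teacher, target_index=0):.4f}")
```

When the student's logits already match the teacher's, the KL term is zero and only the cross-entropy term remains, which is why the soft term acts purely as a signal about how the teacher distributes its confidence.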

Key Takeaways from DistillKit

DistillKit's experiments and performance evaluations provide key insights into its effectiveness and potential applications:

- General-purpose performance boost: DistillKit demonstrated consistent performance improvements across multiple datasets and training conditions. Models trained on subsets of OpenHermes, WebInstruct-Sub, and FineTome showed encouraging gains on benchmarks such as MMLU and MMLU-Pro. These results indicate significant improvements in knowledge absorption for SLMs.

- Improved performance in specific domains: The targeted distillation approach yielded notable improvements on domain-specific tasks. For example, distilling Arcee-Agent into Qwen2-1.5B-Instruct using the same training data as the teacher model resulted in substantial performance improvements. This suggests that using identical training datasets for the teacher and student models can yield larger performance gains.

- Flexibility and versatility: DistillKit's support for both logit-based and hidden state-based distillation provides flexibility in model architecture choices. This versatility allows researchers and developers to tailor the distillation process to specific requirements.

- Efficiency and resource optimization: DistillKit reduces the computational resources and energy required for AI deployment by enabling the creation of smaller, more efficient models. This makes advanced AI capabilities more accessible and promotes sustainable AI research and development practices.

- Open-source collaboration: DistillKit's open-source nature invites the community to contribute to its ongoing development. This collaborative approach encourages researchers and developers to explore new distillation methods, optimize training routines, and improve memory efficiency.

Performance results

The effectiveness of DistillKit has been tested through a series of experiments evaluating its impact on model performance and efficiency. These experiments focused on three aspects: a comparison of distillation techniques, the performance of distilled models relative to their teacher models, and domain-specific distillation applications.

- Comparison of distillation techniques

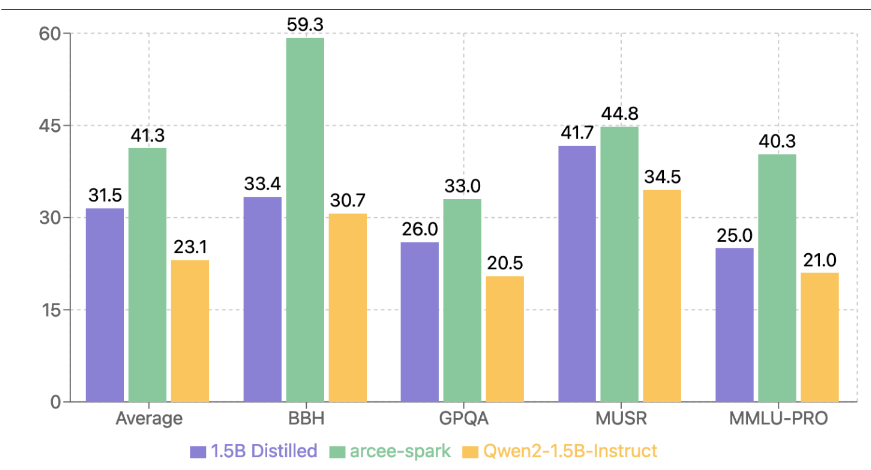

The first set of experiments compared models trained using logit-based and hidden state-based distillation against a standard supervised fine-tuning (SFT) approach. Using Arcee-Spark as the teacher model, knowledge was distilled into Qwen2-1.5B-Base models. The distilled models showed significant performance improvements over the SFT-only baseline on major benchmarks such as BBH, MuSR, and MMLU-Pro.

- Logit-based distillation: The logit-based approach outperformed the hidden state-based method on most benchmarks, suggesting that it transfers knowledge to the student model more effectively.

- Distillation based on hidden states: While slightly behind the logit-based method in terms of overall performance, this technique still provided substantial gains compared to the SFT-only variant, especially in scenarios requiring cross-architecture distillation.

These findings underline the robustness of the distillation methods implemented in DistillKit and highlight its potential to significantly increase the efficiency and accuracy of smaller models.
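
For the hidden state-based variant compared above, one way the alignment objective might look is sketched below. This is an illustrative simplification, not DistillKit's implementation: the function names, the `layer_map` pairing of student and teacher layers, and the fixed projection matrix are all hypothetical. Because teacher and student hidden sizes can differ, the sketch assumes a linear projection (learned during training in practice, fixed here) that maps student vectors into the teacher's hidden size before a mean squared error is computed. Each layer is represented by a single vector for one token.

```python
def project(hidden, weight):
    """Map a student hidden vector into the teacher's hidden size.

    weight has one row per teacher dimension and one column per
    student dimension (hypothetical, normally a learned parameter).
    """
    return [sum(w * h for w, h in zip(row, hidden)) for row in weight]

def mse(a, b):
    """Mean squared error between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)

def hidden_state_loss(student_layers, teacher_layers, layer_map, weight):
    """Average MSE over the mapped (student_layer, teacher_layer) pairs."""
    total = 0.0
    for s_idx, t_idx in layer_map:
        projected = project(student_layers[s_idx], weight)
        total += mse(projected, teacher_layers[t_idx])
    return total / len(layer_map)

# Toy example: a 2-layer student (hidden size 2) and a 4-layer teacher
# (hidden size 3). Student layer 0 is paired with teacher layer 1, and
# student layer 1 with teacher layer 3.
student_layers = [[1.0, 2.0], [0.5, -1.0]]
teacher_layers = [[0.0, 0.0, 0.0], [1.0, 2.0, 3.5],
                  [0.0, 0.0, 0.0], [0.5, -1.0, 0.0]]
weight = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
layer_map = [(0, 1), (1, 3)]
print(hidden_state_loss(student_layers, teacher_layers, layer_map, weight))
```

Since this objective compares internal vectors rather than distributions over a vocabulary, it does not require the two models to share a tokenizer's output space, which is consistent with the article's point about cross-architecture distillation.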

- Effectiveness in general domains: Further experiments evaluated the effectiveness of logit-based distillation in a general-domain setting. A 1.5B distilled model, trained on a subset of WebInstruct-Sub, was compared to its teacher model, Arcee-Spark, and the Qwen2-1.5B-Instruct baseline model. The distilled model improved on the baseline across all metrics and achieved results comparable to the teacher model, particularly on the MuSR and GPQA benchmarks. This experiment supported DistillKit's ability to produce efficient models that retain much of the teacher model's performance while being significantly smaller and less resource intensive.

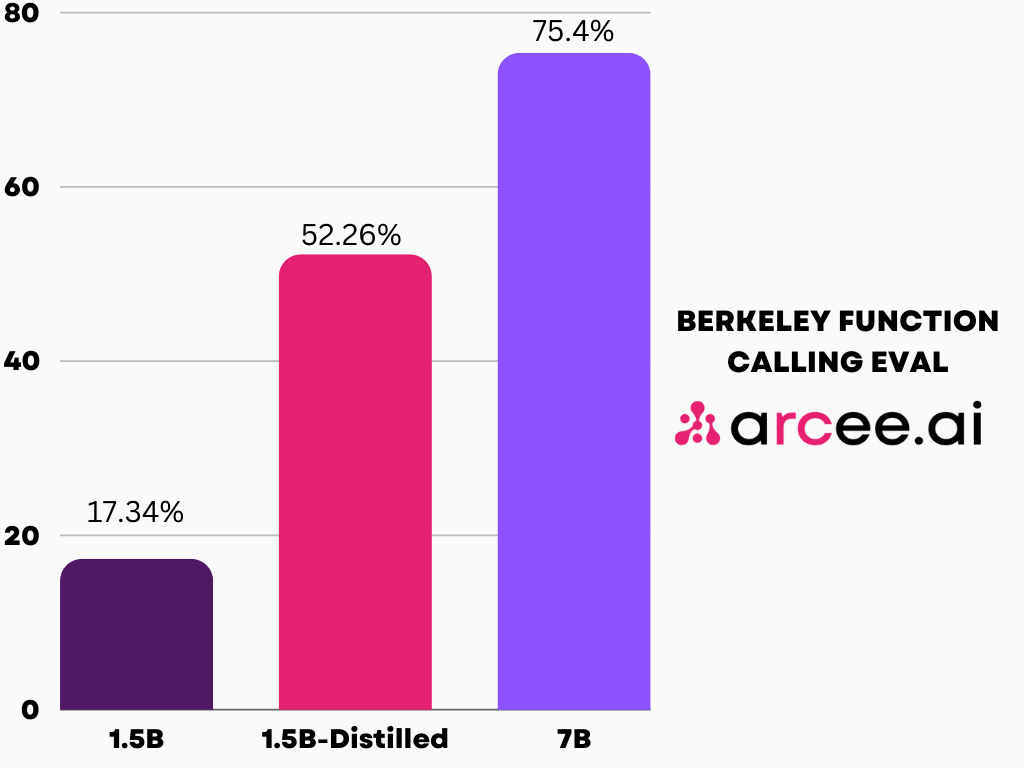

- Domain-specific distillation: The potential of DistillKit for domain-specific tasks was also explored by distilling Arcee-Agent into Qwen2-1.5B-Instruct models. Arcee-Agent, a model specialized for function calling and tool use, acted as the teacher. The results revealed substantial performance improvements and highlighted the effectiveness of using the same training data for both the teacher and student models. This approach improved the general-purpose capabilities of the distilled models and optimized them for specific tasks.

Impact and future directions

The release of DistillKit is intended to enable the creation of smaller, more efficient models that make advanced AI accessible to a wide range of users and applications. This accessibility is crucial for businesses and individuals who may not have the resources to deploy AI models at scale. Smaller models produced with DistillKit offer several advantages, including lower power consumption and lower operational costs. These models can be deployed directly on local devices, improving privacy and security by minimizing the need to transmit data to cloud servers.

Arcee AI plans to continue improving DistillKit with additional features and capabilities. Future updates will include advanced distillation techniques such as continued pre-training (CPT) and direct preference optimization (DPO).

Conclusion

DistillKit by Arcee AI marks a major milestone in model distillation, offering a robust, flexible, and efficient tool for creating SLMs. The experimental results and key takeaways highlight DistillKit's potential to make advanced models more accessible and practical to deploy. Arcee AI's commitment to open-source research and community collaboration means that DistillKit is expected to keep evolving, incorporating new techniques and optimizations as AI technology changes. Arcee AI also invites the community to contribute to the project by developing new distillation methods, improving training routines, and optimizing memory usage.

Asif Razzaq is the CEO of Marktechpost Media Inc. As an engineer and entrepreneur, Asif is committed to harnessing the potential of AI for social good. His most recent initiative is the launch of an AI media platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is technically sound and easily understandable to a wide audience. The platform has over 2 million monthly views.
