Large language models (LLMs) have made significant progress in handling multiple modalities and tasks, but still need to improve their ability to process diverse inputs and perform a wide range of tasks effectively. The main challenge lies in developing a single neural network capable of handling a wide spectrum of tasks and modalities while maintaining high performance in all domains. Current models, such as 4M and UnifiedIO, are promising, but are limited by the limited number of modalities and tasks they are trained on. This limitation hinders its practical application in scenarios that require truly versatile and adaptable ai systems.

Recent attempts to solve multitask learning challenges in vision have evolved from combining dense vision tasks to integrating numerous tasks into unified multimodal models. Methods such as Gato, OFA, Pix2Seq, UnifiedIO, and 4M transform multiple modalities into discrete tokens and train Transformers using masked modeling sequences or targets. Some approaches enable a wide range of tasks by jointly training on separate data sets, while others, such as 4M, use pseudo-labeling for predictions of any modality on aligned data sets. Masked modeling has been shown to be effective in learning cross-modal representations, crucial for multimodal learning, and enables generative applications when combined with tokenization.

Researchers from Apple and the Swiss Federal Institute of technology in Lausanne (EPFL) base their method on the multimodal masking pre-training scheme, significantly expanding its capabilities by training on a diverse set of modalities. The approach incorporates more than 20 modalities, including SAM segments, 3D human poses, Canny edges, color palettes, and various metadata and embeddings. Using discrete, modality-specific tokenizers, the method encodes multiple inputs into a unified format, allowing a single model to be trained across multiple modalities without performance degradation. This unified approach expands existing capabilities across several key axes, including greater modality support, greater diversity in data types, effective tokenization techniques, and scaled model size. The resulting model demonstrates new possibilities for multimodal interaction, such as cross-modal retrieval and highly steerable generation across training modalities.

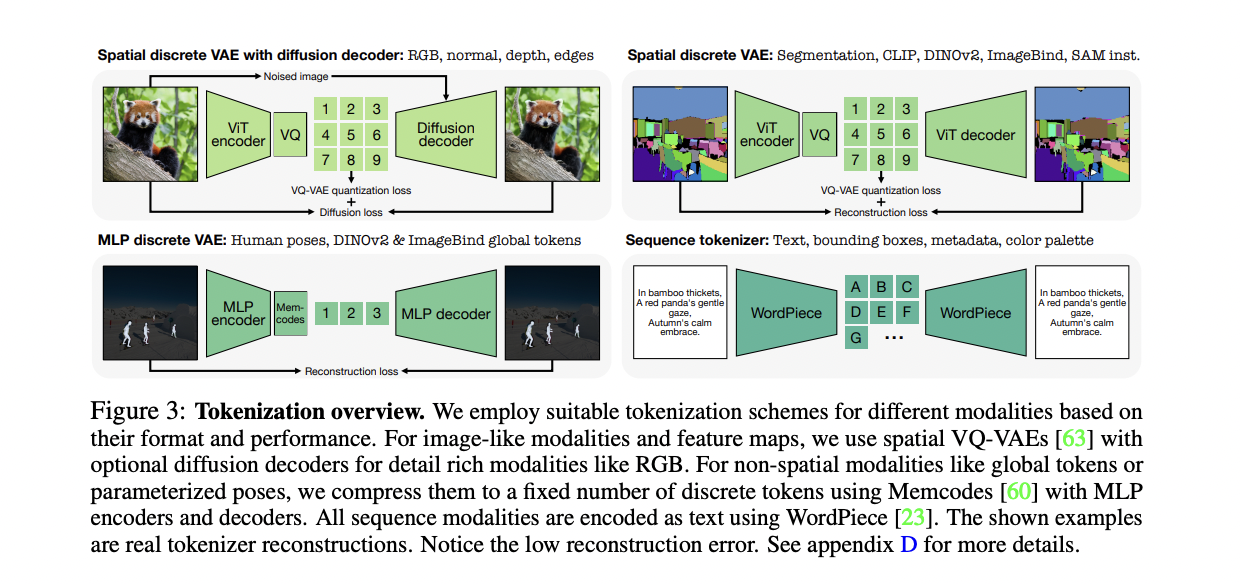

This method adopts the 4M pretraining scheme, expanding it to handle a diverse set of modalities. Transforms all modalities into sequences of discrete tokens using modality-specific tokenizers. The goal of training involves predicting one subset of tokens from another, using random selections from all modalities as inputs and targets. It uses pseudo-labeling to create a large pre-training data set with multiple aligned modalities. The method incorporates a wide range of modalities, including RGB, geometric, semantic, edges, feature maps, metadata, and text. Tokenization plays a crucial role in unifying the space of representation across these various modalities. This unification enables training with a single pre-training objective, improves training stability, allows full parameter sharing, and eliminates the need for task-specific components. Three main types of tokenizers are employed: ViT-based tokenizers for image-like modalities, MLP tokenizers for human poses and global embeddings, and a WordPieza tokenizer for text and other structured data. This comprehensive tokenization approach allows the model to handle a wide range of modalities efficiently, reducing computational complexity and enabling generative tasks across multiple domains.

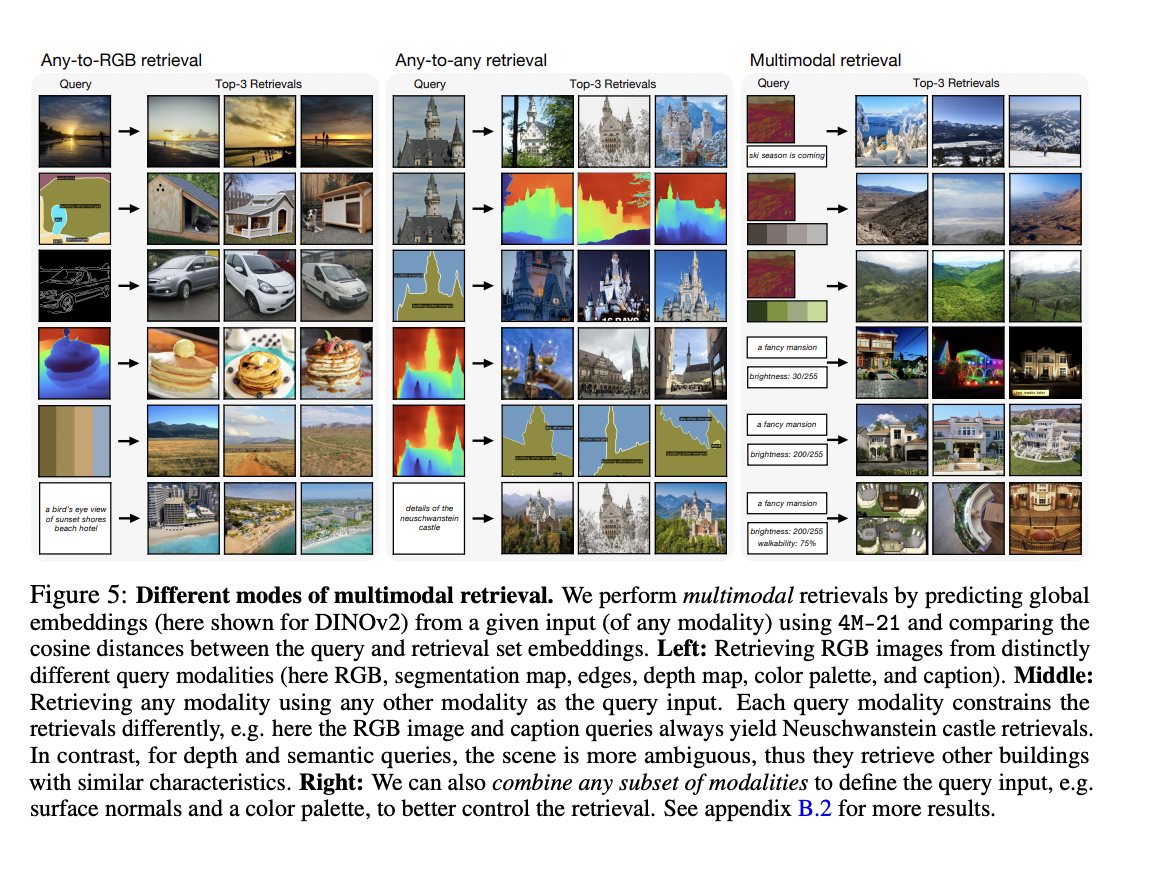

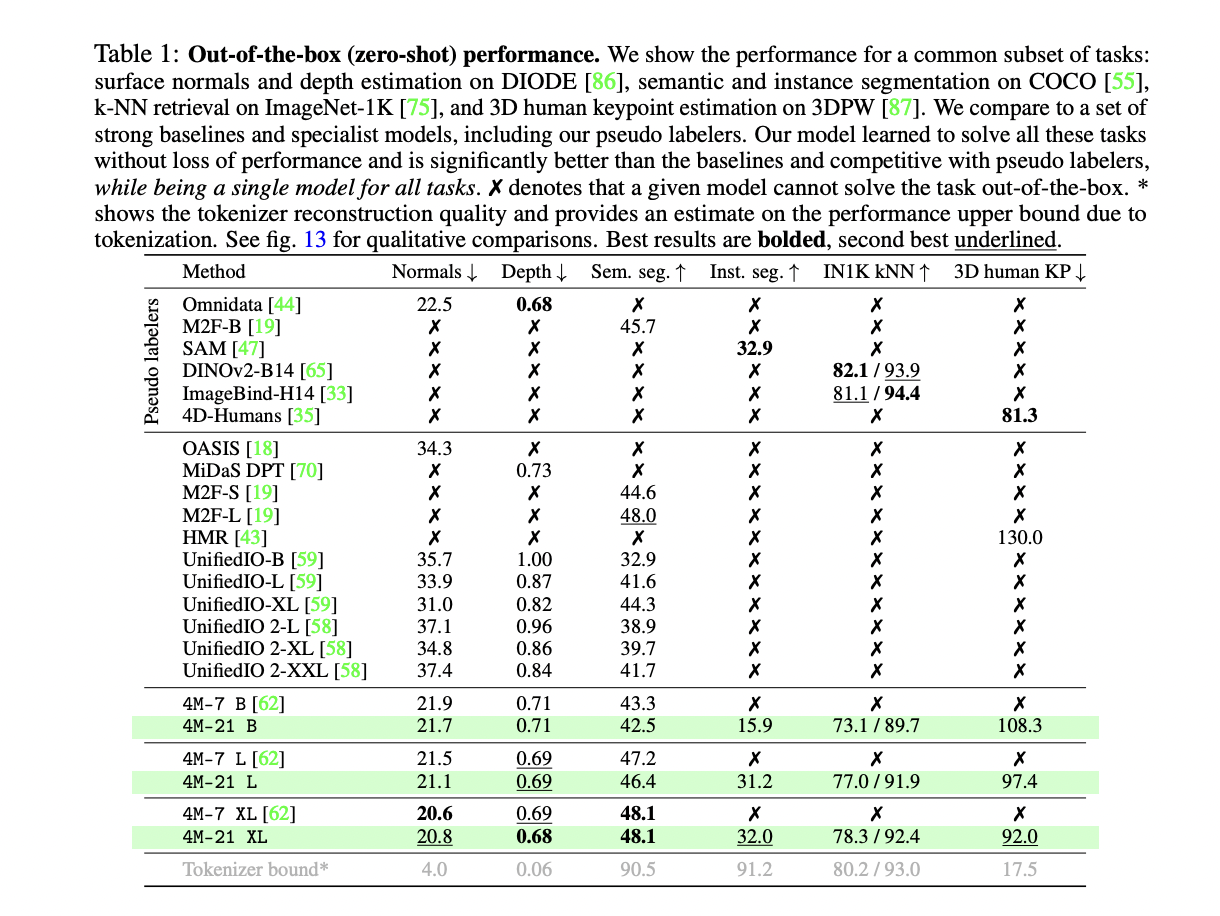

The 4M-21 model demonstrates a wide range of capabilities, including steerable multimodal generation, multimodal recovery, and strong out-of-the-box performance in various vision tasks. It can predict any training modality through iterative token decoding, enabling fine-grained, multi-modal generation with better text understanding. The model performs multimodal retrievals by predicting global embeddings from any input modality, enabling versatile retrieval capabilities. In out-of-the-box evaluations, 4M-21 achieves competitive performance on tasks such as surface normal estimation, depth estimation, semantic segmentation, instance segmentation, 3D human pose estimation, and image retrieval. It often equals or outperforms specialized models and pseudo-taggers while being a one-size-fits-all model. The 4M-21 XL variant, in particular, demonstrates strong performance in multiple modalities without sacrificing capability in any single domain.

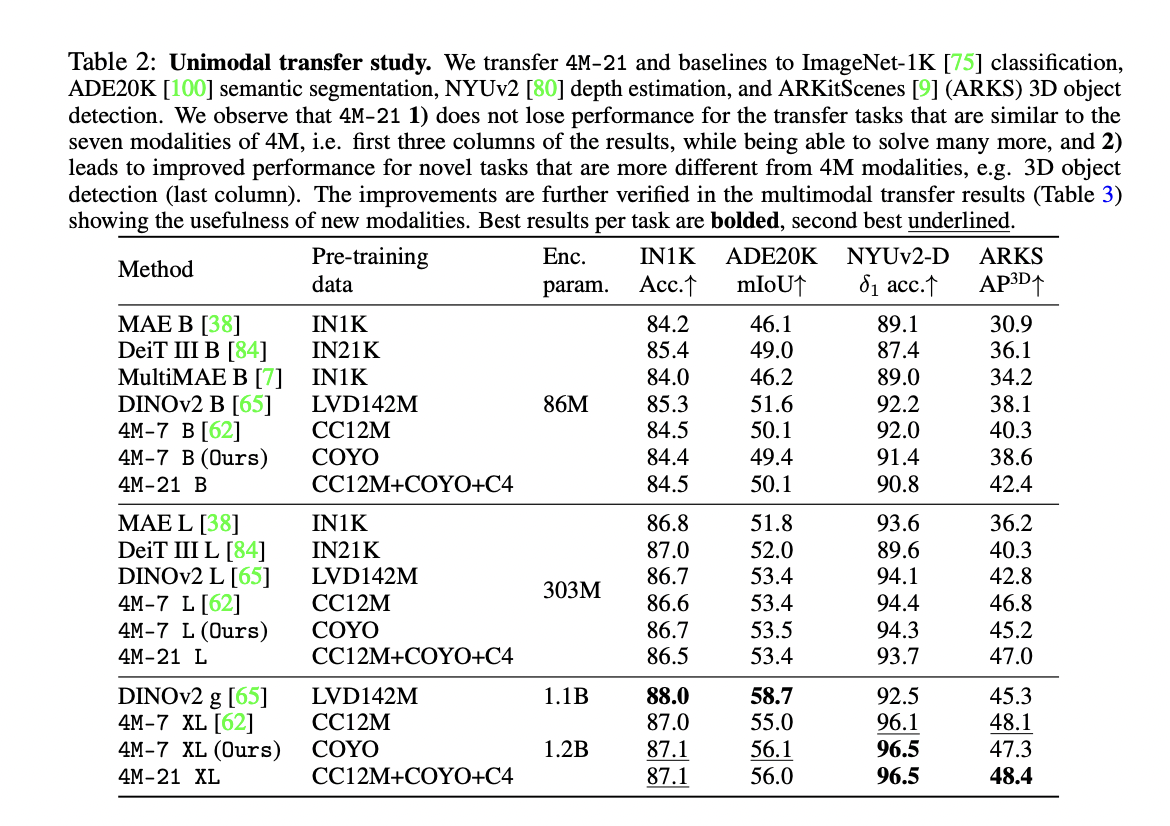

The researchers examine the pre-training scaling characteristics of any models across a large set of modalities, comparing three model sizes: B, L, and XL. Evaluation of unimodal (RGB) and multimodal (RGB + Depth) transfer learning scenarios. In unimodal transfers, 4M-21 maintains performance on tasks similar to the original seven modalities while showing improved results on complex tasks such as 3D object detection. The model demonstrates better performance with larger size, indicating promising scaling trends. For multimodal transfers, 4M-21 effectively uses optional depth inputs, significantly outperforming baselines. The study reveals that training on a broader set of modalities does not compromise performance on familiar tasks and can improve abilities on new ones, especially as model size increases.

This research demonstrates successful training of any model on a diverse set of 21 modalities and tasks. This achievement is possible by employing modality-specific tokenizers to map all modalities to discrete sets of tokens, along with a multimodal masked training objective. The model scales to three billion parameters across multiple data sets without compromising performance compared to more specialized models. The resulting unified model exhibits robust out-of-the-box capabilities and opens new avenues for multimodal interaction, generation, and retrieval. However, the study recognizes certain limitations and areas for future work. These include the need to further explore emergent and transfer capabilities, which remain largely untapped compared to linguistic models.

Review the Paper, Project, and GitHub. All credit for this research goes to the researchers of this project. Also, don't forget to follow us on twitter.com/Marktechpost”>twitter.

Join our Telegram channel and LinkedIn Grabove.

If you like our work, you will love our Newsletter..

Don't forget to join our 44k+ ML SubReddit

<figure class="wp-block-embed is-type-rich is-provider-twitter wp-block-embed-twitter“>

![]()

Asjad is an internal consultant at Marktechpost. He is pursuing B.tech in Mechanical Engineering at Indian Institute of technology, Kharagpur. Asjad is a machine learning and deep learning enthusiast who is always researching applications of machine learning in healthcare.

<script async src="//platform.twitter.com/widgets.js” charset=”utf-8″>

NEWSLETTER

NEWSLETTER