Introduction

When I first started using Apache Spark, I was amazed at how easily it handled massive datasets. Now, with the release of Apache Spark 4.0 right around the corner, I’m more excited than ever. This latest update promises to be a game-changer, packed with powerful new features, noticeable performance improvements, and enhancements that make it easier to use than ever. Whether you’re a seasoned data engineer or just starting out in the world of big data, Spark 4.0 has something for everyone. Let’s dive deeper into what makes this new release so groundbreaking and how it’s set to redefine the way we process big data.

Overview

- Apache Spark 4.0:A major update that introduces transformative features, performance improvements, and enhanced usability for large-scale data processing.

- Spark plug connection:Revolutionizes the way users interact with Spark clusters through a thin client architecture, enabling cross-language development and simplified deployments.

- ANSI mode:Improves data integrity and SQL support in Spark 4.0, making migrations and debugging easier with improved error reporting.

- Arbitrary processing with V2 state:Introduces advanced flexibility for streaming applications, supporting complex event processing and stateful machine learning models.

- Collation support:Improves text processing and classification for multilingual applications, improving compatibility with traditional databases.

- Variant data type:Provides a flexible and high-performance way to handle semi-structured data like JSON, perfect for IoT data processing and web log analysis.

Apache Spark: An Overview

Apache Spark is a powerful open-source distributed computing system for processing and analyzing big data. It provides an interface for programming entire clusters with implicit data parallelism and fault tolerance. Spark is known for its speed, ease of use, and versatility. It is a popular choice for data processing tasks ranging from batch processing to real-time data streaming, machine learning, and interactive queries.

Download here:

Also Read: Complete Introduction to Apache Spark, RDD and Dataframes (Using PySpark)

What does Apache Spark 4.0 offer?

Here's what's new in Apache Spark 4.0:

1. Spark Connect: revolutionizing connectivity

Spark Connect is one of the most transformative additions to Spark 4.0, fundamentally changing how users interact with Spark clusters.

| Main Features | Technical details | Use cases |

|---|---|---|

| Thin Client Architecture | PySpark Connection Package | Creating interactive data applications |

| Language Agnostic | API Consistency | Cross-language development (e.g. Go client for Spark) |

| Interactive development | Performance | Simplified deployment in container environments |

2. ANSI Mode: Improved data integrity and SQL compatibility

ANSI mode becomes the default setting in Spark 4.0, bringing Spark SQL closer to standard SQL behavior and improving data integrity.

| Key improvements | Technical details | Impact |

|---|---|---|

| Silent prevention of data corruption | Call pickup error | Improved data quality and consistency across data pipelines |

| Improved error reporting | Configurable | Improved debugging experience for SQL and DataFrame operations |

| SQL standard compliance | – | Easier migration from traditional SQL databases to Spark |

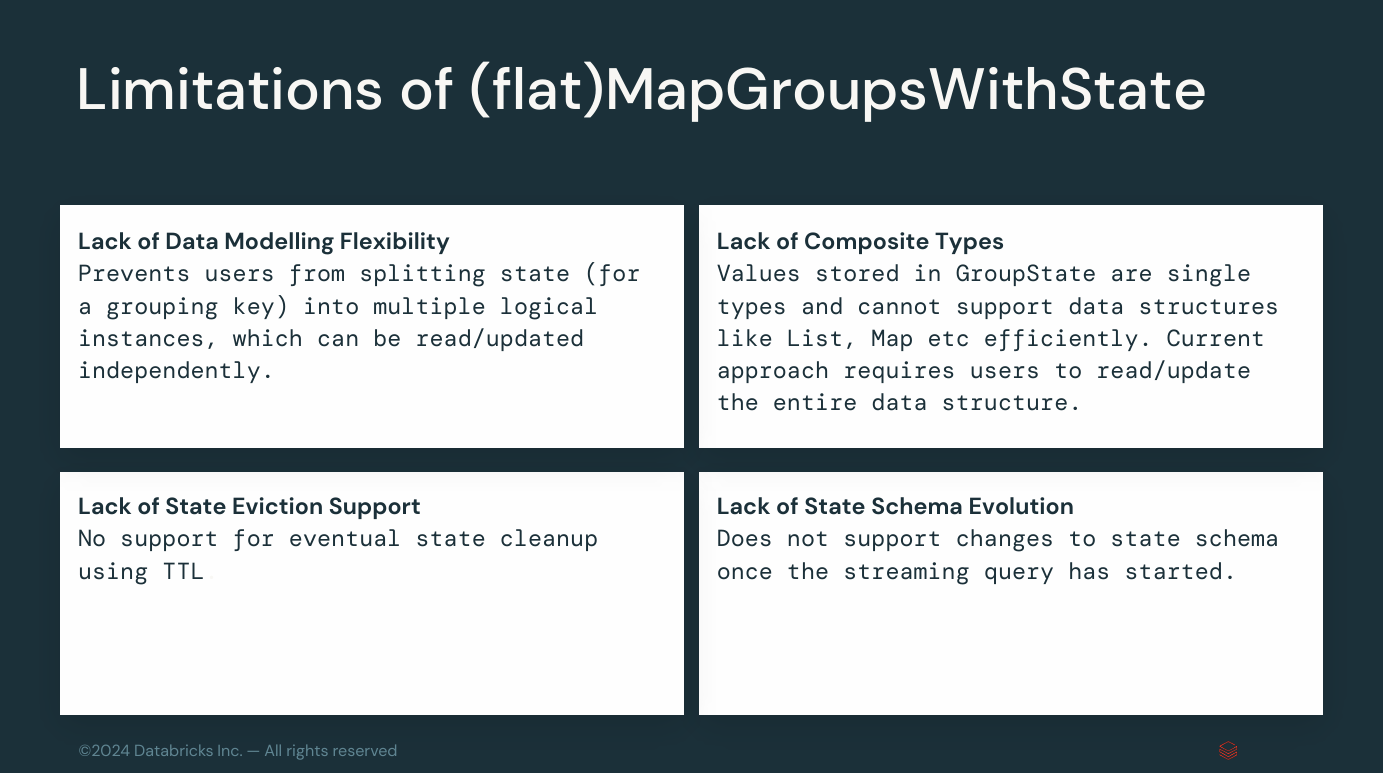

3. Arbitrary processing with V2 state

The second version of Arbitrary Stateful Processing introduces more flexibility and power for streaming applications.

Key improvements:

- Composite Types in GroupState

- Flexibility in data modeling

- State support for evictions

- Evolution of the state scheme

Technical example:

@udf(returnType="STRUCT")

class CountAndMax:

def __init__(self):

self._count = 0

self._max = 0

def eval(self, value: int):

self._count += 1

self._max = max(self._max, value)

def terminate(self):

return (self._count, self._max)

# Usage in a streaming query

df.groupBy("id").agg(CountAndMax("value"))Use cases:

- Complex event processing

- Real-time analytics with custom state management

- Stateful machine learning model that works in streaming contexts

4. Interleaving support

Spark 4.0 introduces comprehensive support for string collation, allowing for more nuanced string comparisons and sortings.

Main features:

- Case-insensitive comparisons

- Comparisons that do not take into account the accent

- Sorting by locale

Technical details:

- SQL Integration

- Optimized performance

Example:

SELECT name

FROM names

WHERE startswith(name COLLATE unicode_ci_ai, 'a')

ORDER BY name COLLATE unicode_ci_ai;Impact:

- Improved text processing for multilingual applications

- More accurate sorting and searching in text-heavy datasets

- Improved compatibility with traditional database systems

5. Variant data type for semi-structured data

The new Variant data type offers a flexible and high-performance way to handle semi-structured data such as JSON.

Key Benefits:

- Flexibility

- Performance

- Compliance with standards

Technical details:

- Internal representation

- Query Optimization

Example of use:

CREATE TABLE events (

id INT,

data VARIANT

);

INSERT INTO events VALUES (1, PARSE_JSON('{"level": "warning", "message": "Invalid request"}'));

SELECT * FROM events WHERE data:level="warning";Use cases:

- IoT data processing

- Web log analysis

- Flexible schema evolution in data lakes

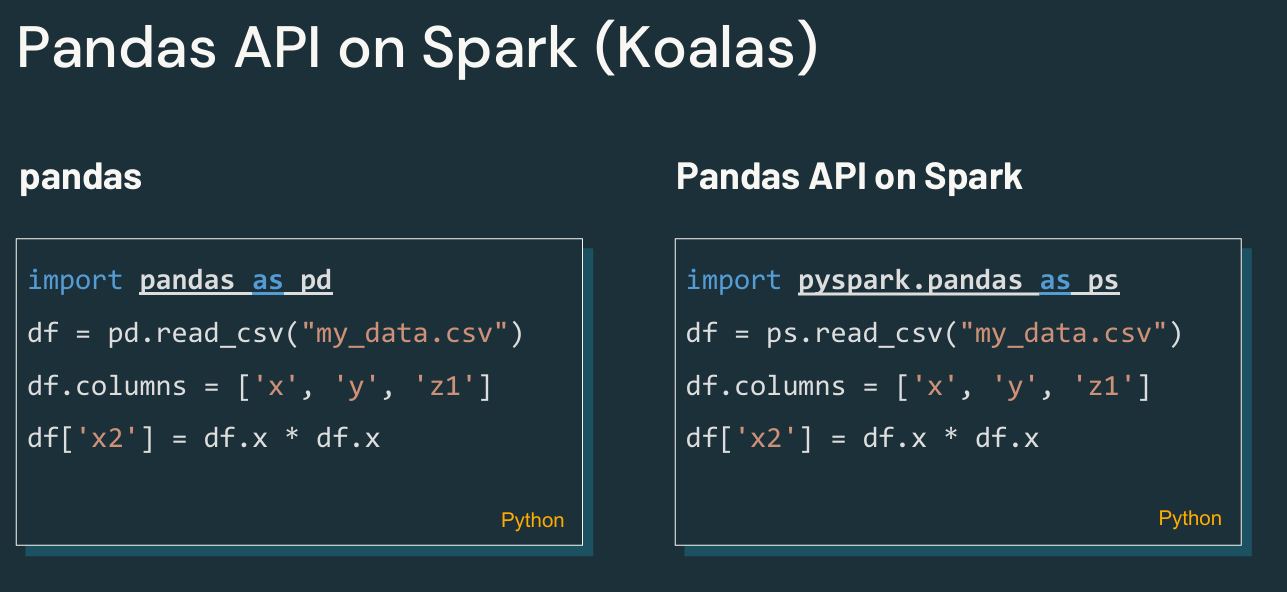

6. Python improvements

PySpark receives significant attention in this release, with several important improvements.

Key improvements:

- Pandas 2.x support

- Python Data Sources API

- Python UDFs optimized for Arrow

- Python User Defined Table Functions (UDTFs)

- Creating unified profiles for PySpark UDFs

Technical example (Python UDTF):

@udtf(returnType="num: int, squared: int")

class SquareNumbers:

def eval(self, start: int, end: int):

for num in range(start, end + 1):

yield (num, num * num)

# Usage

spark.sql("SELECT * FROM SquareNumbers(1, 5)").show()Performance improvements:

- Arrow-optimized UDFs show up to 2x performance improvement for certain operations.

- Python data source APIs reduce the overhead of custom data ingestion.

7. SQL and scripting improvements

Spark 4.0 brings several improvements to its SQL capabilities, making it more powerful and flexible.

Main features:

- SQL User Defined Functions (UDFs) and Table Functions (UDTFs)

- SQL Scripts

- Stored Procedures

Technical example (SQL scripting):

BEGIN

DECLARE c INT = 10;

WHILE c > 0 DO

INSERT INTO t VALUES (c);

SET c = c - 1;

END WHILE;

ENDUse cases:

- Complex ETL processes implemented entirely in SQL

- Migrating Legacy Stored Procedures to Spark

- Creating reusable SQL components for data pipelines

Also Read: A Complete Guide on Apache Spark RDD and PySpark

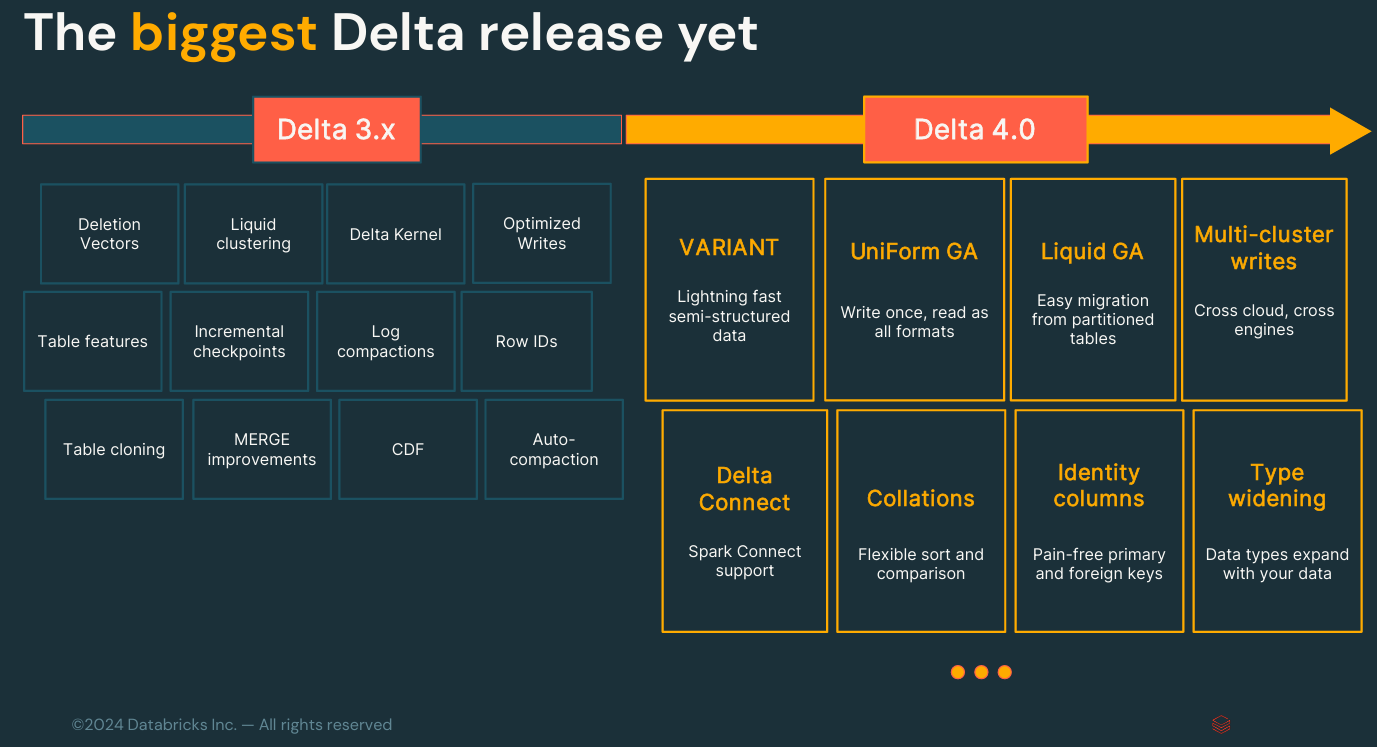

8. Delta Lake 4.0 Integration

Apache Spark 4.0 integrates seamlessly with Delta Lake 4.0bringing advanced features to lake house architecture.

Main features:

- Liquid grouping

- VARIANT type support

- Collation support

- Columns of identity

Technical details:

- Liquid grouping

- Implementation of VARIANT

Impact on performance:

- Liquid clustering can provide up to 12x faster reads for certain query patterns.

- The VARIANT type offers up to 2x better compression compared to JSON stored as strings.

9. Usability improvements

Spark 4.0 introduces several features to improve developer experience and ease of use.

Key improvements:

- Structured Logging Framework

- Message framework and error conditions

- Improved documentation

- Behavior change process

Technical example (structured logging):

{

"ts": "2023-03-12T12:02:46.661-0700",

"level": "ERROR",

"msg": "Fail to know the executor 289 is alive or not",

"context": {

"executor_id": "289"

},

"exception": {

"class": "org.apache.spark.SparkException",

"msg": "Exception thrown in awaitResult",

"stackTrace": "..."

},

"source": "BlockManagerMasterEndpoint"

}Impact:

- Improved troubleshooting and debugging capabilities

- Improved observability for Spark applications

- Smoother upgrade path between Spark versions

10. Performance optimizations

Throughout Spark 4.0, numerous performance improvements enhance overall system efficiency.

Key areas for improvement:

- Improved catalyst optimizer

- Improvements in adaptive query execution

- Improved Arrow Integration

Technical details:

- Join Reorder Optimization

- Dynamic partition pruning

- Running Vectorized Python UDFs

Reference points:

- Up to 30% improvement in TPC-DS benchmark performance compared to Spark 3.x.

- Python UDF performance improvements of up to 100% for certain workloads.

Conclusion

Apache Spark 4.0 represents a monumental advancement in big data processing capabilities. With its focus on connectivity (Spark Connect), data integrity (ANSI mode), advanced streaming (Arbitrary Stateful Processing V2), and enhanced support for semi-structured data (Variant type), this release addresses the evolving needs of data engineers, data scientists, and analysts working with large-scale data.

Improvements to Python integration, SQL capabilities, and overall ease of use make Spark 4.0 more accessible and powerful than ever. With performance optimizations and seamless integration with modern data lake technologies like Delta Lake, Apache Spark 4.0 reaffirms its position as the go-to platform for big data processing and analytics.

As organizations face ever-increasing data volumes and increasing complexity, Apache Spark 4.0 provides the tools and capabilities needed to build scalable, efficient, and innovative data solutions. Whether you’re working on real-time analytics, large-scale ETL processes, or advanced machine learning pipelines, Spark 4.0 delivers the features and performance needed to meet the challenges of modern data processing.

Frequently Asked Questions

Answer: An open source engine for large-scale data processing and analysis, offering in-memory computing for faster processing.

Answer: Spark uses in-memory processing, is easier to use, and integrates batch, streaming, and machine learning into a single framework, unlike Hadoop's disk-based processing.

Answer: Spark Core, Spark SQL, Spark Streaming, MLlib (machine learning), and GraphX (graph processing).

Answer: Resilient distributed data sets are immutable, fault-tolerant data structures processed in parallel.

Answer: Processes data in real time by dividing it into micro-batches to perform low-latency analysis.

NEWSLETTER

NEWSLETTER