This post is co-written with NVIDIA's Abhishek Sawarkar, Eliuth Triana, Jiahong Liu, and Kshitiz Gupta.

At AWS re:Invent 2024, we're excited to introduce Amazon Bedrock Marketplace, a new capability within Amazon Bedrock that serves as a centralized hub for discovering, testing, and deploying foundation models (FMs). It gives developers and organizations access to a catalog of over 100 popular, emerging, and specialized FMs, complementing the existing selection of industry-leading models on Amazon Bedrock. Bedrock Marketplace lets you subscribe to and deploy models through managed endpoints while keeping the simplicity of the Amazon Bedrock unified APIs.

The NVIDIA Nemotron family, available as [NVIDIA NIM microservices](https://www.nvidia.com/en-us/ai/), offers a set of state-of-the-art language models that are now available through Amazon Bedrock Marketplace, a significant milestone in AI model accessibility and deployment.

In this post, we look at the benefits and capabilities of the Bedrock Marketplace and Nemotron models, and how to get started.

About Amazon Bedrock Marketplace

Bedrock Marketplace plays a key role in democratizing access to advanced AI capabilities through several benefits:

- Comprehensive model selection – Bedrock Marketplace offers a broad range of models, from proprietary to publicly available options, so organizations can find the best fit for their specific use cases.

- Unified and secure experience – By providing a single point of access to all models through the Amazon Bedrock APIs, Bedrock Marketplace significantly simplifies integration. Organizations can use these models securely, and for models that support the Amazon Bedrock Converse API, you can use the Amazon Bedrock toolset, including Amazon Bedrock Agents, Amazon Bedrock Knowledge Bases, Amazon Bedrock Guardrails, and Amazon Bedrock Flows.

- Scalable infrastructure – Bedrock Marketplace offers configurable scalability through managed endpoints. Organizations can select the number of instances, choose appropriate instance types, and define custom auto scaling policies that adjust to workload demands, optimizing costs while maintaining performance.
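
As a minimal sketch of what the unified API looks like in practice, the following Python code builds a request for the Amazon Bedrock Converse API and sends it with the boto3 `bedrock-runtime` client. It assumes you have already deployed a Marketplace model whose endpoint supports the Converse API; for Bedrock Marketplace deployments, the endpoint ARN is passed as `modelId`. The ARN, Region, and inference settings are placeholders, and the helper names are hypothetical.

```python
"""Sketch: calling a Bedrock Marketplace endpoint through the Converse API.

Assumptions (not from the original post): the endpoint ARN is a placeholder,
the deployed model supports the Converse API, and the helper names are
hypothetical.
"""


def build_converse_request(endpoint_arn: str, prompt: str,
                           max_tokens: int = 512,
                           temperature: float = 0.2) -> dict:
    """Build keyword arguments for bedrock-runtime's converse() call.

    For Marketplace models, the deployed endpoint's ARN takes the place of
    a foundation model ID in modelId.
    """
    if not endpoint_arn.startswith("arn:"):
        raise ValueError("expected an endpoint ARN")
    return {
        "modelId": endpoint_arn,
        "messages": [{"role": "user", "content": [{"text": prompt}]}],
        "inferenceConfig": {"maxTokens": max_tokens, "temperature": temperature},
    }


def extract_text(response: dict) -> str:
    """Concatenate the text blocks from a Converse API response."""
    blocks = response["output"]["message"]["content"]
    return "".join(block.get("text", "") for block in blocks)


def converse(endpoint_arn: str, prompt: str, region: str = "us-east-1") -> str:
    """Send the prompt to the endpoint (requires boto3 and AWS credentials)."""
    import boto3  # imported lazily so the helpers above work without boto3

    client = boto3.client("bedrock-runtime", region_name=region)
    response = client.converse(**build_converse_request(endpoint_arn, prompt))
    return extract_text(response)
```

Keeping request construction separate from the network call makes the payload easy to inspect and unit test before you point it at a real endpoint.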

About the NVIDIA Nemotron family of models

At the forefront of the NVIDIA Nemotron family is Nemotron-4. According to NVIDIA, it is a powerful multilingual large language model (LLM) trained on 8 trillion text tokens and optimized for English, multilingual, and coding tasks. Key capabilities include:

- Synthetic data generation – Capable of creating high-quality, domain-specific training data at scale

- Multilingual support – Trained on large text corpora, supporting multiple languages and tasks.

- High performance inference – Optimized for efficient deployment on GPU-accelerated infrastructure

- Versatile model sizes – Includes variants such as the Nemotron-4 15B with 15 billion parameters

- Open license – Offers a permissive open model license that gives companies a scalable way to generate and own synthetic data that can help build powerful LLMs.
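
To make the synthetic data generation use case more concrete, here is a minimal, hypothetical sketch of a generation loop in Python. The `generate` callable stands in for a call to a deployed Nemotron endpoint (for example, through the Amazon Bedrock Converse API), and the prompt template, JSON record format, and deduplication rule are illustrative assumptions rather than NVIDIA's recommended pipeline.

```python
"""Sketch: a minimal synthetic-data generation loop.

`generate` is a hypothetical stand-in for a call to a deployed Nemotron
endpoint; the prompt template and record fields are illustrative
assumptions, not NVIDIA's recipe.
"""
import json
from typing import Callable, Iterable

PROMPT_TEMPLATE = (
    "Write one question a customer might ask about {topic} in the {domain} "
    "domain, followed by a concise answer. Reply as JSON with keys "
    '"question" and "answer".'
)


def synthesize(domain: str, topics: Iterable[str],
               generate: Callable[[str], str]) -> list:
    """Return deduplicated question/answer records, one attempt per topic."""
    records, seen = [], set()
    for topic in topics:
        raw = generate(PROMPT_TEMPLATE.format(topic=topic, domain=domain))
        try:
            item = json.loads(raw)
        except json.JSONDecodeError:
            continue  # skip malformed model output instead of failing the batch
        if not isinstance(item, dict):
            continue  # expect a single JSON object per response
        question = str(item.get("question", "")).strip()
        answer = str(item.get("answer", "")).strip()
        if not question or not answer or question.lower() in seen:
            continue  # drop empty or duplicate samples
        seen.add(question.lower())
        records.append({"domain": domain, "topic": topic,
                        "question": question, "answer": answer})
    return records


def to_jsonl(records: list) -> str:
    """Serialize records as JSON Lines, a common training-data format."""
    return "\n".join(json.dumps(record) for record in records)
```

Validating and deduplicating each sample before it enters the dataset is what keeps generated data useful at scale; a production pipeline would typically add stronger quality filters on top of this.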

Nemotron models offer transformative potential for AI developers by addressing critical challenges in AI development:

- Data augmentation – Address data scarcity by generating high-quality synthetic training datasets.

- Cost efficiency – Reduce the cost of manual data annotation and time-consuming data collection

- Improved model training – Boost AI model performance with high-quality synthetic data.

- Flexible integration – Supports seamless integration with existing AWS services and workflows, helping developers build sophisticated AI solutions faster.

These capabilities make Nemotron models particularly suitable for organizations looking to accelerate their AI initiatives while maintaining high standards of performance and security.

Getting started with Bedrock Marketplace and Nemotron

To get started with Amazon Bedrock Marketplace, open the Amazon Bedrock console. From there, you can explore the Bedrock Marketplace interface, which offers a comprehensive catalog of FMs from various providers. Browse the available options to discover different AI capabilities and specializations; this exploration leads you to the models offered by NVIDIA, including Nemotron-4.

We guide you through these steps in the following sections.

Open Amazon Bedrock Marketplace

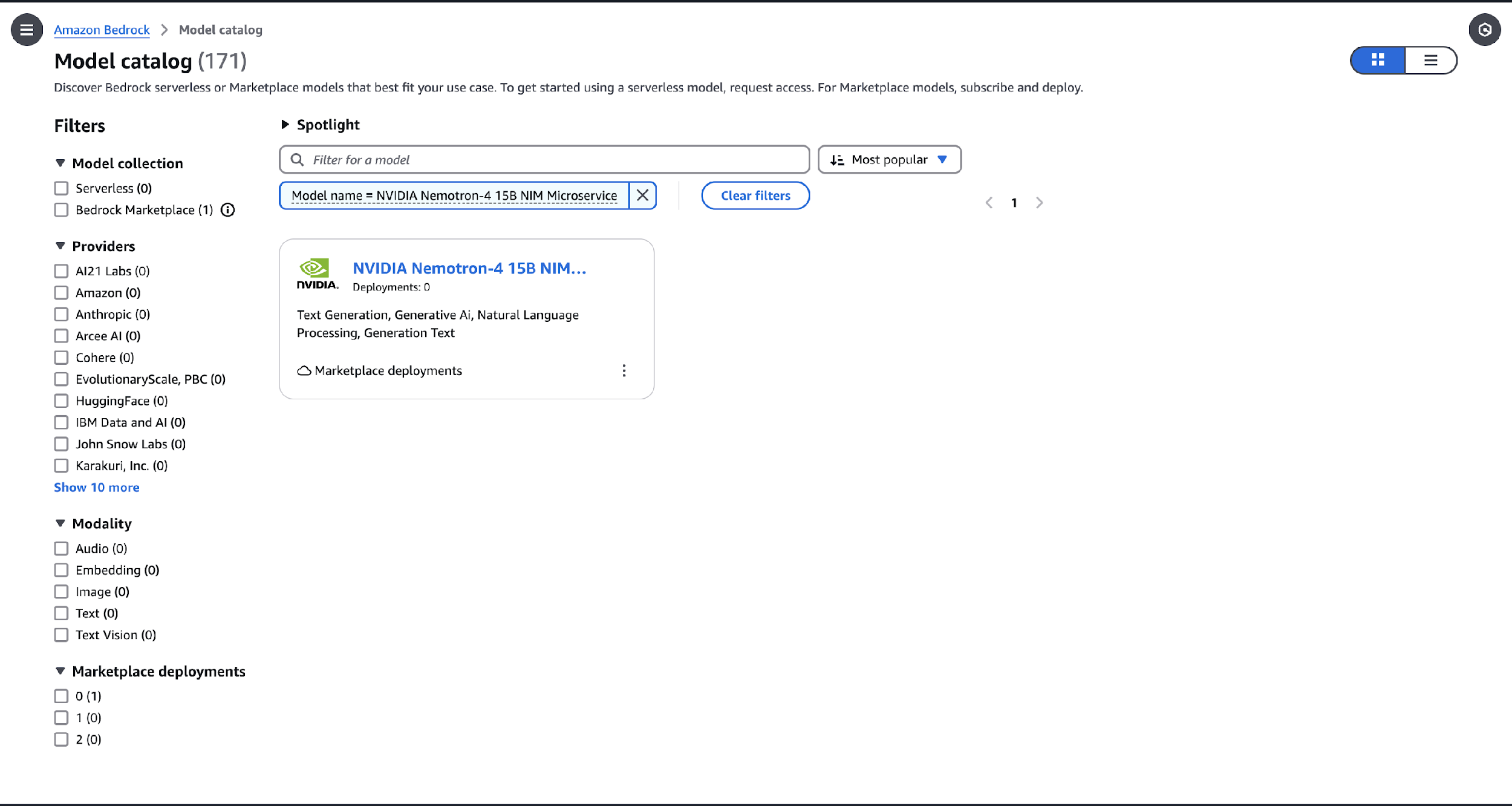

Navigating to Amazon Bedrock Marketplace is straightforward:

- In the Amazon Bedrock console, choose Model catalog in the navigation pane.

- Under Filters, select Bedrock Marketplace.

Upon entering Bedrock Marketplace, you will find a well-organized interface with several categories and filters to help you find the right model for your needs. You can browse by providers and modality.

- Use the search function to quickly locate specific vendors and explore models listed on Bedrock Marketplace.

Deploy NVIDIA Nemotron models

Once you've located the NVIDIA model offerings in Bedrock Marketplace, you can narrow your search to the Nemotron models. To subscribe to and deploy Nemotron-4, complete the following steps:

- Filter for Nemotron under Providers, or search by model name.

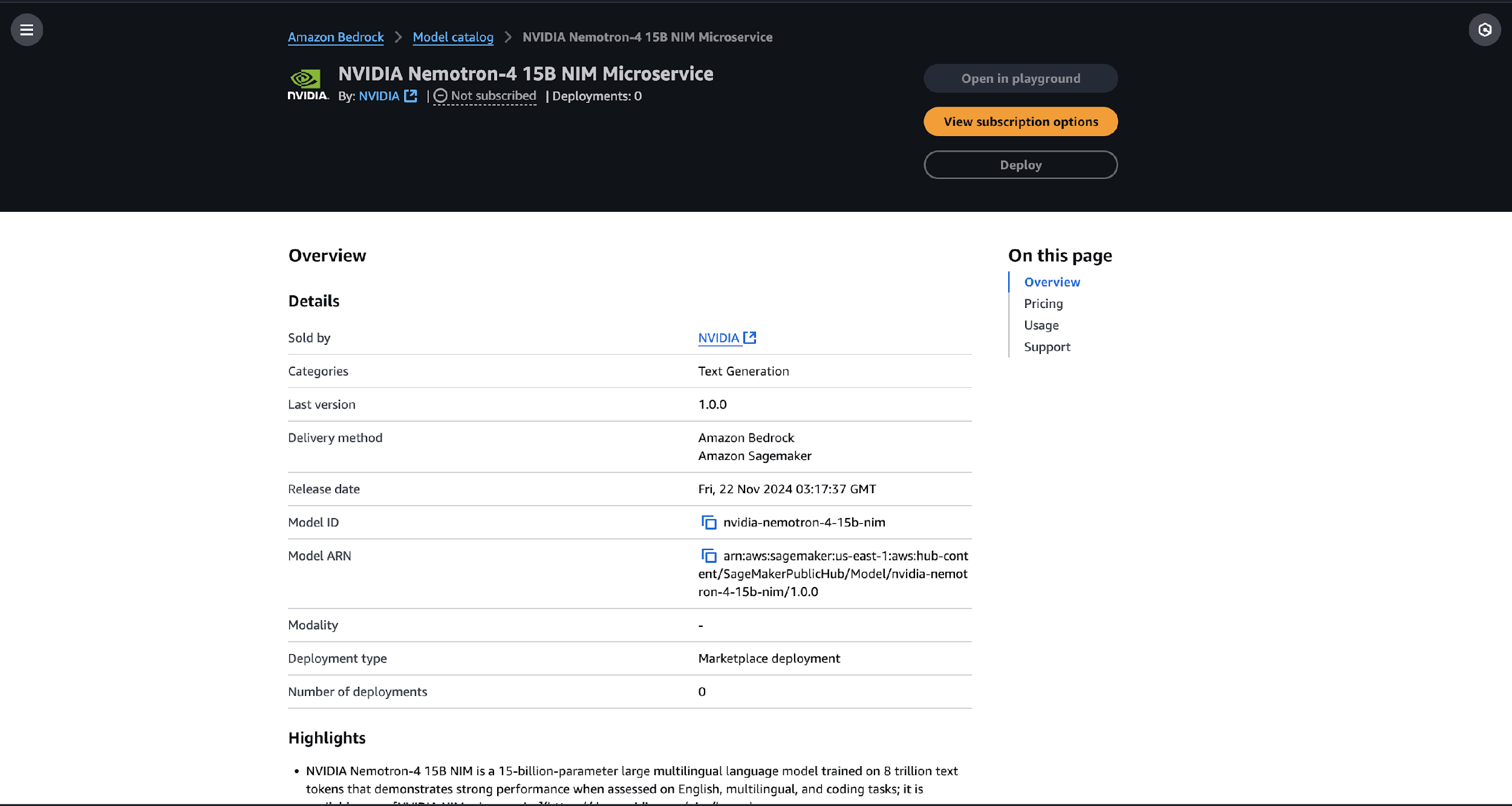

- Choose from the available models, such as Nemotron-4 15B.

On the model details page, you can examine its specifications, capabilities, and pricing details. The Nemotron-4 model offers impressive coding and multilingual capabilities.

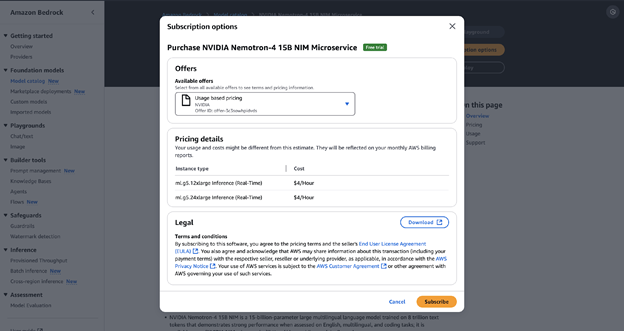

- Choose See subscription options to subscribe to the model.

- Review the available options and choose Subscribe.

- Choose Deploy and follow the instructions to configure your deployment options, including instance types and scaling policies.
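
If you prefer to script the deployment instead of using the console, the following Python sketch assembles a request for the Amazon Bedrock `CreateMarketplaceModelEndpoint` operation and sends it through the boto3 `bedrock` client. Treat it as an outline under assumptions: verify parameter names against the current Amazon Bedrock API reference, and replace the model source ARN, endpoint name, instance type, instance count, and IAM role with your own values. The helper names are hypothetical.

```python
"""Sketch: deploying a subscribed Marketplace model programmatically.

Assumes the CreateMarketplaceModelEndpoint operation as exposed by the boto3
"bedrock" client; check parameter names against the current API reference.
All ARNs, names, and instance types are placeholders.
"""


def build_endpoint_request(model_source_arn: str, endpoint_name: str,
                           instance_type: str, instance_count: int,
                           execution_role_arn: str,
                           accept_eula: bool = False) -> dict:
    """Assemble kwargs for create_marketplace_model_endpoint()."""
    if instance_count < 1:
        raise ValueError("instance_count must be at least 1")
    return {
        "modelSourceIdentifier": model_source_arn,
        "endpointName": endpoint_name,
        # Set to True only after reviewing the model's end user license agreement.
        "acceptEula": accept_eula,
        "endpointConfig": {
            "sageMaker": {
                "initialInstanceCount": instance_count,
                "instanceType": instance_type,
                "executionRole": execution_role_arn,
            }
        },
    }


def deploy(request: dict, region: str = "us-east-1") -> str:
    """Create the endpoint and return its ARN (requires boto3 and credentials)."""
    import boto3  # lazy import keeps build_endpoint_request usable offline

    client = boto3.client("bedrock", region_name=region)
    response = client.create_marketplace_model_endpoint(**request)
    return response["marketplaceModelEndpoint"]["endpointArn"]
```

The returned endpoint ARN is the identifier you then pass as `modelId` when invoking the model through the Amazon Bedrock runtime APIs.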

The process is straightforward, so you can quickly integrate these AI capabilities into your projects using the Amazon Bedrock APIs.

Conclusion

The launch of NVIDIA Nemotron models on Amazon Bedrock Marketplace marks an important milestone in making advanced AI capabilities more accessible to developers and organizations. Nemotron-4 15B, with its 15-billion-parameter architecture trained on 8 trillion text tokens, brings powerful multilingual and coding capabilities to Amazon Bedrock.

Through Bedrock Marketplace, organizations can use Nemotron's advanced capabilities while benefiting from the scalable infrastructure of AWS and NVIDIA's robust technologies. We encourage you to start exploring NVIDIA Nemotron models today through Amazon Bedrock Marketplace and experience firsthand how this language model can transform your AI applications.

About the authors

James Park is a Solutions Architect at Amazon Web Services. He works with Amazon.com to design, build, and deploy technology solutions on AWS, and has a particular interest in AI and machine learning. In his spare time, he enjoys seeking out new cultures and experiences and staying up to date with the latest technology trends. You can find him on LinkedIn.

Saurabh Trikande is a Senior Product Manager for Amazon Bedrock and SageMaker Inference. He is passionate about working with customers and partners, motivated by the goal of democratizing AI. He focuses on core challenges related to deploying complex AI applications, multi-tenant model inference, cost optimization, and making the deployment of generative AI models more accessible. In his spare time, Saurabh enjoys hiking, learning about innovative technologies, following TechCrunch, and spending time with his family.

Melanie Li, PhD, is a Senior Solutions Architect specializing in generative AI at AWS, based in Sydney, Australia, where she focuses on working with customers to build solutions that use state-of-the-art AI and machine learning tools. She has been actively involved in multiple generative AI initiatives across APJ, harnessing the power of large language models (LLMs). Prior to joining AWS, Dr. Li held data science roles in the financial and retail industries.

Marc Karp is a Machine Learning Architect with the Amazon SageMaker service team. He focuses on helping customers design, deploy, and manage machine learning workloads at scale. In his spare time, he enjoys traveling and exploring new places.

Abhishek Sawarkar is a product manager on the NVIDIA AI Enterprise team, working on integrating NVIDIA AI software into cloud MLOps platforms. He focuses on integrating the NVIDIA AI stack end to end within cloud platforms and improving the user experience on accelerated computing.

Eliuth Triana is a Developer Relations Manager at NVIDIA, empowering Amazon's AI MLOps, DevOps, scientists, and AWS technical experts to master the NVIDIA computing stack for accelerating and optimizing generative AI foundation models, spanning data curation, GPU training, model inference, and production deployment on AWS GPU instances. In addition, Eliuth is an avid mountain biker, skier, and tennis and poker player.

Jiahong Liu is a Solutions Architect on the Cloud Service Provider team at NVIDIA. He helps customers adopt AI and machine learning solutions that use NVIDIA-accelerated computing to address their training and inference challenges. In his spare time, he enjoys origami, DIY projects, and playing basketball.

Kshitiz Gupta is a Solutions Architect at NVIDIA. He enjoys educating cloud customers about the GPU AI technologies NVIDIA has to offer and helping them accelerate their machine learning and deep learning applications. Outside of work, he enjoys running, hiking, and wildlife watching.