Agentic AI systems have revolutionized industries by enabling complex workflows in which specialized agents work collaboratively. These systems streamline operations, automate decision-making, and improve efficiency in domains such as market research, healthcare, and business management. However, optimizing them remains a persistent challenge: traditional methods rely heavily on manual adjustments, which limits scalability and adaptability.

A critical challenge in optimizing agentic AI systems is their reliance on manual configuration, which introduces inefficiencies and inconsistencies. These systems must continually evolve to align with dynamic goals and to handle complex agent interactions. Current approaches often lack mechanisms for autonomous improvement, creating bottlenecks that hinder performance and scalability. This highlights the need for robust frameworks capable of iterative refinement without human intervention.

Existing tools for optimizing agentic AI systems focus primarily on performance benchmarks or modular designs. While frameworks like MLAgentBench evaluate agent performance across tasks, they do not address the broader need for continuous, end-to-end optimization. Similarly, modular approaches enhance individual components but lack the holistic adaptability that dynamic industries require. These limitations underscore the demand for systems that autonomously improve workflows through iterative feedback and refinement.

Researchers at aiXplain Inc. introduced a framework that uses large language models (LLMs), particularly Llama 3.2-3B, to autonomously optimize agentic AI systems. The framework integrates specialized agents for evaluation, hypothesis generation, modification, and execution. It employs iterative feedback loops to ensure continuous improvement, significantly reducing reliance on human supervision. The system is designed for broad applicability across industries, addressing domain-specific challenges while remaining adaptable and scalable.

The framework operates through a structured cycle of synthesis and evaluation. First, a baseline agentic AI configuration is deployed, with specific tasks and workflows assigned to agents. Evaluation metrics, both qualitative (clarity, relevance) and quantitative (execution time, success rates), guide the refinement process. Specialized agents, such as the hypothesis and modification agents, iteratively propose and implement changes to improve performance. The system keeps refining the configuration until predefined goals are achieved or performance improvements plateau.
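
The refinement cycle described above can be sketched as a simple loop. This is a minimal illustration under stated assumptions, not aiXplain's implementation: the LLM-backed hypothesis, modification, execution, and evaluation agents are replaced by plain Python callables, and the names (`refine`, `target`, `min_gain`, `max_iters`), the 0.9 target, and the toy stand-in agents are all hypothetical.

```python
from dataclasses import dataclass, field
from statistics import mean
from typing import Callable, Dict, List

# Hypothetical model: a configuration maps each agent role to its instructions.
Config = Dict[str, str]
Scores = Dict[str, float]  # metric name -> score in [0, 1]


@dataclass
class RefinementResult:
    config: Config
    scores: Scores
    history: List[float] = field(default_factory=list)  # best mean score per step


def refine(
    config: Config,
    execute: Callable[[Config], str],               # runs the system, returns its output
    evaluate: Callable[[str], Scores],              # evaluation agent
    hypothesize: Callable[[Config, Scores], str],   # hypothesis agent
    modify: Callable[[Config, str], Config],        # modification agent
    target: float = 0.9,     # assumed goal: every metric >= target
    min_gain: float = 0.01,  # assumed plateau threshold on the mean score
    max_iters: int = 10,
) -> RefinementResult:
    best_config = config
    best_scores = evaluate(execute(config))
    history = [mean(best_scores.values())]
    for _ in range(max_iters):
        if min(best_scores.values()) >= target:
            break  # predefined goal reached on every metric
        hypothesis = hypothesize(best_config, best_scores)
        candidate = modify(best_config, hypothesis)
        scores = evaluate(execute(candidate))
        gain = mean(scores.values()) - mean(best_scores.values())
        if gain > 0:
            best_config, best_scores = candidate, scores  # keep improvements only
        history.append(mean(best_scores.values()))
        if gain < min_gain:
            break  # improvements have stabilized
    return RefinementResult(best_config, best_scores, history)


# Toy stand-ins for the LLM-backed agents (purely illustrative).
def toy_execute(cfg: Config) -> str:
    return " | ".join(sorted(cfg))


def toy_evaluate(output: str) -> Scores:
    roles = output.split(" | ")
    clarity = round(min(1.0, 0.5 + 0.1 * len(roles)), 2)  # more roles -> higher toy clarity
    relevance = 0.95 if "Domain Specialist" in roles else 0.6
    return {"clarity": clarity, "relevance": relevance}


def toy_hypothesize(cfg: Config, scores: Scores) -> str:
    return min(scores, key=scores.get)  # target the weakest metric


def toy_modify(cfg: Config, weakest: str) -> Config:
    new = dict(cfg)
    if weakest == "relevance":
        new["Domain Specialist"] = "Align outputs with industry standards."
    else:
        new[f"Reviewer {len(new)}"] = "Rewrite the output for clarity."
    return new


result = refine({"Planner": "Draft a plan."}, toy_execute, toy_evaluate,
                toy_hypothesize, toy_modify)
print(sorted(result.config), result.scores, result.history)
```

Accepting only candidates that raise the mean score keeps the loop monotonic; a production system would likely also need to account for noisy LLM-based evaluations, for example by re-scoring a candidate before accepting it.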

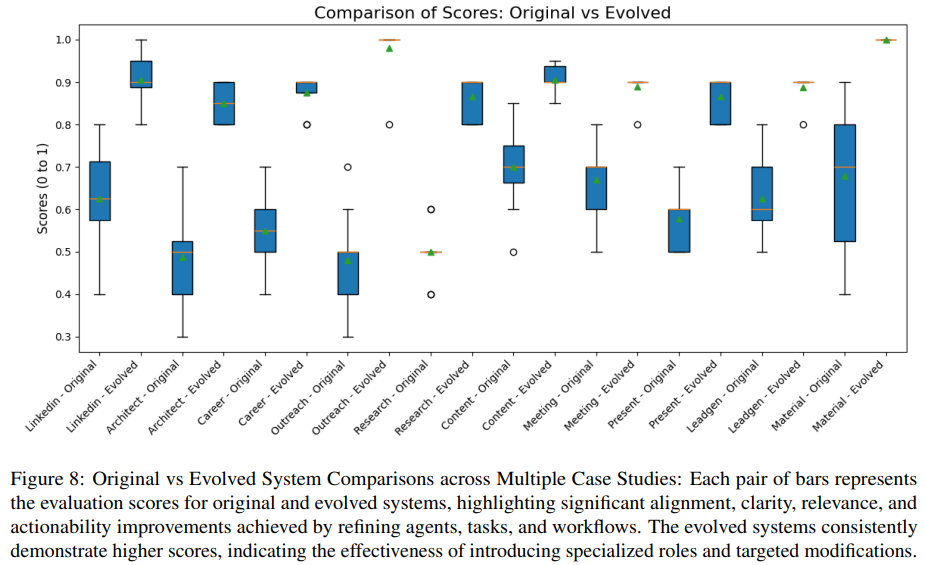

The framework's potential is demonstrated through several case studies across different domains. Each case highlights the challenges the original system faced, the modifications introduced, and the resulting improvements in performance metrics:

- Market Research Agent: The initial system suffered from shallow market analysis and poor alignment with user needs, scoring 0.6 for clarity and relevance. Refinement focused on specialized agents such as the Market Research Analyst and Data Analyst, strengthening data-driven decision-making and prioritizing user-centered design. After refinement, the system achieved alignment and relevance scores of 0.9, significantly improving its ability to deliver useful insights.

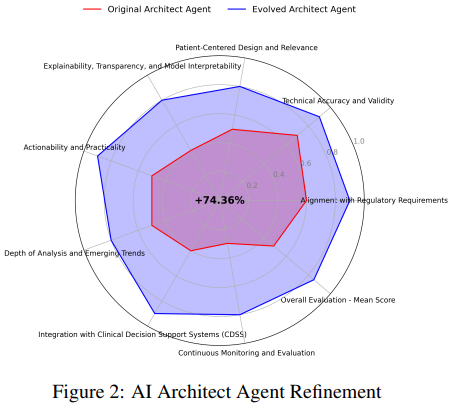

- Medical Imaging Architect Agent: This system faced challenges in regulatory compliance, patient engagement, and explainability. Specialized agents such as a Compliance Specialist and a Patient Advocate were added, along with transparency frameworks to improve explainability. The refined system scored 0.9 in regulatory compliance and 0.8 in patient-centered design, effectively addressing critical healthcare demands.

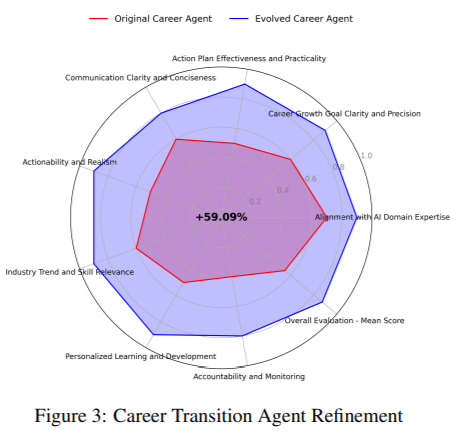

- Career Transition Agent: The initial system, designed to help software engineers move into AI roles, lacked clarity and alignment with industry standards. By incorporating agents such as a Domain Specialist and a Skill Developer, the refined system provided detailed timelines and structured outputs, raising communication clarity scores from 0.6 to 0.9 and making it more effective at supporting career transitions.

- Supply Chain Extension Agent: Initially limited in scope, the extension agent system for supply chain management struggled to address operational complexities. Five specialized roles were introduced to sharpen its focus on supply chain analysis, optimization, and sustainability. These modifications significantly improved clarity, accuracy, and actionability, positioning the system as a valuable tool for e-commerce businesses.

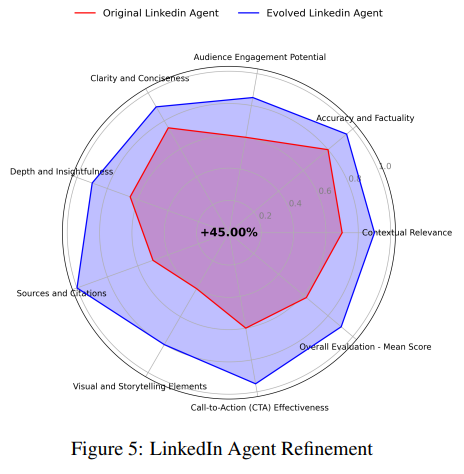

- LinkedIn Content Agent: The original system, designed to generate LinkedIn posts about generative AI trends, struggled with engagement and credibility. Specialized roles such as an Audience Engagement Specialist were introduced, with an emphasis on metrics and adaptability. After refinement, the system showed marked improvements in audience engagement and relevance, increasing its usefulness as a content creation tool.

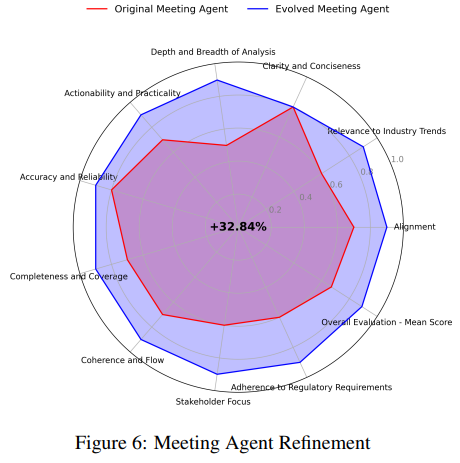

- Meeting Facilitator Agent: Developed for AI-driven drug discovery, this system initially lacked alignment with industry trends and sufficient analytical depth. By integrating roles such as an AI Industry Expert and a Compliance Leader, the refined system achieved scores of 0.9 or higher across all assessment categories, making it more relevant and actionable for pharmaceutical stakeholders.

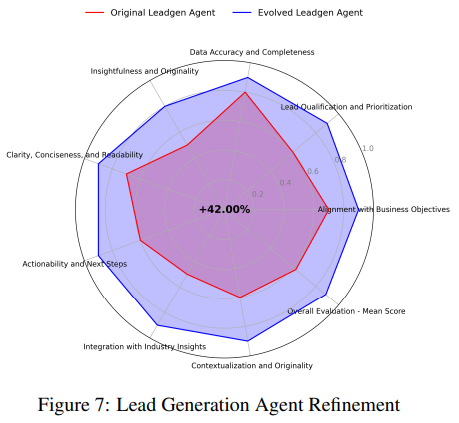

- Lead Generation Agent: Centered on the “AI for Personalized Learning” platform, this system initially struggled with data accuracy and business alignment. Specialized agents such as Market Analysts and Business Development Specialists were introduced, improving lead qualification. After refinement, the system scored 0.91 for alignment with business objectives and 0.90 for data accuracy, highlighting the impact of targeted modifications.

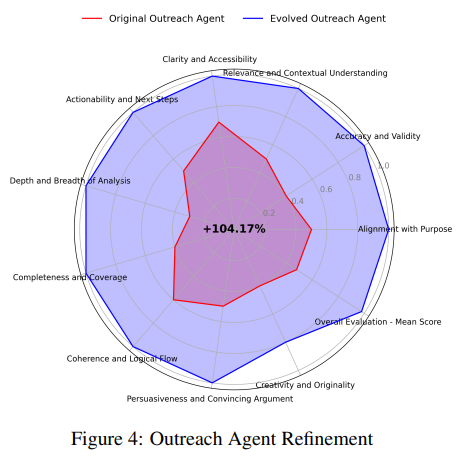

Across all of these cases, the iterative feedback loop was instrumental in improving clarity, relevance, and actionability. For example, the market research and medical imaging systems saw their performance metrics rise by more than 30% after refinement. Variability in results was also significantly reduced, yielding consistent and reliable performance.
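
The article does not say whether "more than 30%" refers to an absolute gain (percentage points on the 0 to 1 scale) or a relative one. As a small worked check, the sketch below computes both readings for the two case studies that report a before/after pair (0.6 to 0.9); the helper names are illustrative, not from the paper.

```python
def absolute_gain(before: float, after: float) -> float:
    """Score difference on the 0-1 scale (0.3 == 30 percentage points)."""
    return after - before


def relative_gain(before: float, after: float) -> float:
    """Change expressed as a fraction of the baseline score."""
    if before <= 0:
        raise ValueError("baseline score must be positive")
    return (after - before) / before


# Before/after pairs stated in the case studies.
reported = {
    "Market Research (clarity/relevance)": (0.6, 0.9),
    "Career Transition (communication clarity)": (0.6, 0.9),
}

for name, (before, after) in reported.items():
    print(f"{name}: +{absolute_gain(before, after):.2f} points, "
          f"{relative_gain(before, after):+.0%} relative")
```

Both pairs print `+0.30 points, +50% relative`, so a 0.6 to 0.9 change amounts to 30 points in absolute terms and 50% relative to the baseline.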

The research provides several key conclusions:

- The framework adapts effectively to diverse industries while still meeting domain-specific requirements.

- Key metrics such as execution time, clarity, and relevance improved by an average of 30% across all case studies.

- The introduction of domain-specific roles effectively addressed unique challenges, as seen in the cases of market research and medical imaging.

- The iterative feedback loop mechanism minimized human intervention, improving operational efficiency and reducing refinement cycles.

- Variability in results was significantly reduced, ensuring reliable performance in dynamic environments.

- The improved results aligned with user needs and industry goals, providing actionable insights across domains.

In conclusion, aiXplain Inc.'s framework optimizes agentic AI systems by addressing the limitations of traditional manual refinement. By integrating LLM-driven agents and iterative feedback loops, it achieves continuous, autonomous improvements across domains. The case studies demonstrate its scalability, adaptability, and consistent gains in metrics such as clarity, relevance, and actionability, with scores of 0.9 or higher in many cases. This approach reduces variability and aligns outputs with specific industry demands.

Check out the Paper. All credit for this research goes to the researchers of this project.


Aswin AK is a consulting intern at MarkTechPost. He is pursuing a dual degree at the Indian Institute of Technology Kharagpur. He is passionate about data science and machine learning, and brings a strong academic background and hands-on experience in solving real-life, cross-domain challenges.

