Introduction

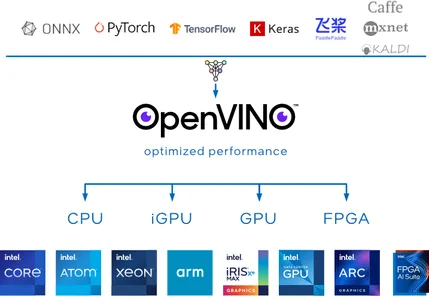

We talk about ai almost daily due to its growing impact in replacing manual labor of humans. The creation of ai-enabled software has grown rapidly in a short time. Enterprises and businesses believe in integrating trustworthy and responsible ai into their applications to generate more revenue. The most challenging part of integrating ai into an application is the model inference and the computational resources used to train the model. There are already many techniques that improve performance by optimizing the model during inference with fewer computing resources. With this problem statement, Intel introduced the OpenVINO Toolkit, an absolute game changer. OpenVINO is an open source toolkit for optimizing and implementing ai inference.

Learning objectives

In this article, we will:

- Understand what the OpenVINO Toolkit is and its purpose when optimizing and deploying ai inference models.

- Explore practical use cases for OpenVINO, especially its importance in the future of ai at the edge.

- Learn how to implement a text detection project in an image using OpenVINO in Google Colab.

- Discover the key features and benefits of using OpenVINO, including its model compatibility and support for hardware accelerators, and how it can impact various industries and applications.

This article was published as part of the Data Science Blogathon.

What is OpenVINO?

OpenVINO, what does it mean Open visual inference and neural network optimization, is a set of open source tools developed by the Intel team to facilitate the optimization of deep learning models. The vision of the OpenVINO toolkit is to power your ai deep learning models and deploy the application on-premises, on-device or in the cloud more efficiently and effectively.

The OpenVINO Toolkit is particularly valuable because it supports many deep learning frameworks, including popular ones like TensorFlow, PyTorch, Onnx, and Caffe. You can train your models using your preferred framework and then use OpenVINO to convert and optimize them for deployment on Intel hardware accelerators such as CPUs, GPUs, FPGAs, and VPUs.

Regarding inference, the OpenVINO Toolkit offers several tools for model quantization and compression, which can significantly reduce the size of deep learning models without losing inference accuracy.

Why use OpenVINO?

Currently, the ai craze is unwilling to slow down. With this popularity, it is evident that more and more applications will be developed to run ai applications on premises and on the device. Some of the challenging areas in which OpenVINO excels make it an ideal choice which is why it is crucial to use OpenVINO:

OpenVINO Model Zoo

OpenVINO offers a model zoo with pre-trained deep learning models for tasks like stable broadcast, speech, object detection and more. These models can serve as a starting point for your projects, saving you time and resources.

Model Compatibility

OpenVINO supports many deep learning frameworks, including TensorFlow, PyTorch, ONNx, and Caffe. This means you can use your preferred framework to train your models and then convert and optimize them for deployment using the OpenVINO Toolkit.

High performance

OpenVINO is optimized for fast inference, making it suitable for real-time applications such as computer vision, robotics, and IoT devices. It takes advantage of hardware acceleration such as FPGA, GPU and TPU to achieve high performance and low latency.

<h2 class="wp-block-heading" id="h-ai-in-edge-future-using-intel-openvino”>ai in the edge future with Intel OpenVINO

ai at the Edge is the most difficult area to address. Creating an optimized solution to solve hardware limitations is no longer impossible with the help of OpenVINO. The future of ai at the Edge with this toolkit has the potential to revolutionize various industries and applications.

Let’s find out how OpenVINO works to make it suitable for ai at Edge.

- The main step is to create a model using your favorite deep learning frameworks and convert it to an OpenVINO core model. Another alternative is to use pre-trained models using the OpenVINO model zoo.

- Once the model is trained, the next step is compression. OpenVINO Toolkit provides a Neural Network Compression Framework (NNCF).

- Model Optimizer converts the pre-trained model to a suitable format. The optimizer consists of IR data. The IR data refers to the Intermediate Representation of a deep learning model, which is already optimized and transformed for implementation with OpenVINO. The model weights are in .XML and .bin file formats.

- During model deployment, the OpenVINO Inference Engine can load and use IR data on the target hardware, enabling fast and efficient inference for various applications.

With this approach, OpenVINO can play a vital role in ai at the Edge. Let’s get our hands dirty with a code project to implement text detection in an image using the OpenVINO Toolkit.

Detecting text in an image using OpenVINO Toolkit

In implementing this project, we will use Google Colab as a means to run the application successfully. In this project we will use the horizontal-text-detection-0001 zoo model OpenVINO model. This pre-trained model detects horizontal text in input images and returns a mass of data in the form (100.5). This answer seems (x_min, y_min, x_max, y_max, conf) Format.

Step-by-step code implementation

Facility

!pip install openvinoImport required libraries

Let’s import the necessary modules to run this application. OpenVINO supports a utility helper function to download pre-trained weights from the provided source code URL.

import urllib.request

base = "https://raw.githubusercontent.com/openvinotoolkit/openvino_notebooks"

utils_file = "/main/notebooks/utils/notebook_utils.py"

urllib.request.urlretrieve(

url= base + utils_file,

filename="notebook_utils.py"

)

from notebook_utils import download_fileYou can verify that notebook_utils has now been downloaded successfully; Let’s quickly import the remaining modules.

import openvino

import cv2

import matplotlib.pyplot as plt

import numpy as np

from pathlib import PathDownload Weights

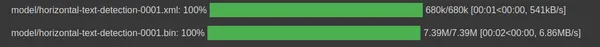

Initialize the path to download horizontal text detection IR data model weight files in .xml and .bin format.

base_model_dir = Path("./model").expanduser()

model_name = "horizontal-text-detection-0001"model_xml_name = f'{model_name}.xml'

model_bin_name = f'{model_name}.bin'

model_xml_path = base_model_dir / model_xml_name

model_bin_path = base_model_dir / model_bin_nameIn the following code snippet, we use three variables to simplify the path where the pre-trained model weights exist.

model_zoo = "https://storage.openvinotoolkit.org/repositories/open_model_zoo/2022.3/models_bin/1/"

algo = "horizontal-text-detection-0001/FP32/"

xml_url = "horizontal-text-detection-0001.xml"

bin_url = "horizontal-text-detection-0001.bin"

model_xml_url = model_zoo+algo+xml_url

model_bin_url = model_zoo+algo+bin_url

download_file(model_xml_url, model_xml_name, base_model_dir)

download_file(model_bin_url, model_bin_name, base_model_dir)

Loading model

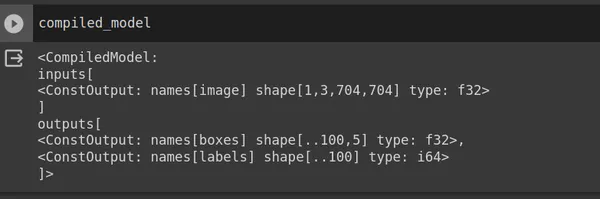

OpenVINO provides a Core class to interact with the OpenVINO toolkit. The Core class provides several methods and functions for working with models and performing inference. Use read_model and pass model_xml_path. After reading the model, compile it for a specific target device.

core = Core()

model = core.read_model(model=model_xml_path)

compiled_model = core.compile_model(model=model, device_name="CPU")

input_layer_ir = compiled_model.input(0)

output_layer_ir = compiled_model.output("boxes")In the code snippet above, the compiled model returns the shape of the input image (704,704,3), an RGB image but in PyTorch format (1,3,704,704) where 1 is the batch size, 3 is the number of channels , 704 is the height and weight. The output returns (x_min, y_min, x_max, y_max, conf). Let’s load an input image now.

Upload image

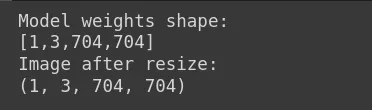

The weight of the model is (1,3,704,704). Consequently, you must resize the input image to match this shape. In Google Colab or your code editor, you can upload your input image and in our case the image file is called sample_image.jpg.

image = cv2.imread("sample_image.jpg")

# N,C,H,W = batch size, number of channels, height, width.

N, C, H, W = input_layer_ir.shape

# Resize the image to meet network expected input sizes.

resized_image = cv2.resize(image, (W, H))

# Reshape to the network input shape.

input_image = np.expand_dims(resized_image.transpose(2, 0, 1), 0)

print("Model weights shape:")

print(input_layer_ir.shape)

print("Image after resize:")

print(input_image.shape)

Displays the input image.

plt.imshow(cv2.cvtColor(image, cv2.COLOR_BGR2RGB))

plt.axis("off")

inference machine

Previously, we used model weights to compile the model. Use build the model in context with the input image.

# Create an inference request.

boxes = compiled_model((input_image))(output_layer_ir)

# Remove zero only boxes.

boxes = boxes(~np.all(boxes == 0, axis=1))Prediction

The compiled_model returns boxes with the coordinates of the bounding box. We use the cv2 module to create a rectangle and putText to add the confidence score over the detected text.

def detect_text(bgr_image, resized_image, boxes, threshold=0.3, conf_labels=True):

# Fetch the image shapes to calculate a ratio.

(real_y, real_x), (resized_y, resized_x) = bgr_image.shape(:2), resized_image.shape(:2)

ratio_x, ratio_y = real_x / resized_x, real_y / resized_y

# Convert image from BGR to RGB format.

rgb_image = cv2.cvtColor(bgr_image, cv2.COLOR_BGR2RGB)

# Iterate through non-zero boxes.

for box in boxes:

# Pick a confidence factor from the last place in an array.

conf = box(-1)

if conf > threshold:

(x_min, y_min, x_max, y_max) = (

int(max(corner_position * ratio_y, 10)) if idx % 2

else int(corner_position * ratio_x)

for idx, corner_position in enumerate(box(:-1))

)

# Draw a box based on the position, parameters in rectangle function are:

# image, start_point, end_point, color, thickness.

rgb_image = cv2.rectangle(rgb_image, (x_min, y_min), (x_max, y_max),(0,255, 0), 10)

# Add text to the image based on position and confidence.

if conf_labels:

rgb_image = cv2.putText(

rgb_image,

f"{conf:.2f}",

(x_min, y_min - 10),

cv2.FONT_HERSHEY_SIMPLEX,

4,

(255, 0, 0),

8,

cv2.LINE_AA,

)

return rgb_imageShow output image

plt.imshow(detect_text(image, resized_image, boxes));

plt.axis("off")

Conclusion

To conclude, we successfully created text detection in an image project using OpenVINO Toolkit. The Intel team continually improves the toolkit. OpenVINO also supports pre-trained generative ai models such as stable broadcast, ControlNet, speech-to-text, and more.

Key takeaways

- OpenVINO is an innovative open source tool to power your ai deep learning models and deploy the application on-premise, on-device, or in the cloud.

- The main goal of OpenVINO is to optimize deep models with various model quantizations and compressions, which can significantly reduce the size of deep learning models without losing inference accuracy.

- This toolkit also supports deploying ai applications on hardware accelerators such as GPU, FPGA, ASIC, TPU, and more.

- Various industries can adopt OpenVINO and harness its potential to impact ai at the edge.

- Using the zoo pre-trained model is simple as we implement text detection in images with just a few lines of code.

Frequent questions

A. Intel OpenVINO provides a model zoo with Pre-trained deep learning models for tasks like stable broadcast, speech, and more. OpenVINO runs pre-trained zoo models on-premise, on-device, and in the cloud more efficiently and effectively.

A. Both OpenVINO and TensorFlow are free and open source. Developers use TensorFlow, a deep learning framework, for model development, while OpenVINO, a toolkit, optimizes deep learning models and deploys them on Intel hardware accelerators.

A. OpenVINO’s versatility and ability to optimize deep learning models for Intel hardware make it a valuable tool for ai and computer vision applications in various industries, such as military defense, healthcare, smart cities, and many more.

A. Yes, Intel’s OpenVINO toolkit is free to use. The Intel team developed this open source toolset to facilitate the optimization of deep learning models.

The media shown in this article is not the property of Analytics Vidhya and is used at the author’s discretion.

NEWSLETTER

NEWSLETTER