Artificial intelligence (AI) agents have become a cornerstone of advances in numerous fields, ranging from natural language processing and computer vision to autonomous systems and reinforcement learning. AI agents are systems capable of perceiving their environment, reasoning, learning, and taking actions to achieve predefined goals. Over the years, significant research has focused on building intelligent agents that can adapt, collaborate, and make decisions in complex and dynamic environments. This article highlights the top 10 research papers that have shaped the field of AI agents, showcasing key breakthroughs, methodologies, and their implications.

These AI agent research papers cover a wide spectrum of topics, including multi-agent systems, reinforcement learning, generative models, and ethical considerations, providing a comprehensive view of the AI agent research landscape. By understanding these influential works, readers can gain insights into the evolution of AI agents and their transformative potential across industries.

The Importance of Research Papers on AI Agents

Research papers on AI agents are crucial for advancing the understanding and capabilities of intelligent systems. They serve as the foundation for innovation, offering insights into how machines can perceive, learn, and interact with their environments to perform complex tasks. These papers document cutting-edge methodologies, breakthroughs, and lessons learned, helping researchers and practitioners build upon prior work and push the boundaries of what AI agents can achieve.

- Knowledge Dissemination: Research papers facilitate the sharing of ideas and findings across the AI community, fostering collaboration and enabling cumulative progress. They provide a structured way to communicate novel concepts, algorithms, and experimental results.

- Driving Innovation: The challenges outlined in these papers inspire the development of new techniques and technologies. From game-playing agents like AlphaZero to cooperative systems in multi-agent environments, research papers have paved the way for groundbreaking applications.

- Establishing Standards: Papers often propose benchmarks and evaluation metrics, helping standardize the assessment of AI agents. This enables consistent and fair comparisons and drives the adoption of best practices.

- Practical Applications: Many papers bridge theory and practice, demonstrating how AI agents can solve real-world problems in areas like robotics, healthcare, finance, and climate modeling.

- Ethical and Social Impact: As AI agents increasingly influence society, research papers also address critical issues like fairness, accountability, and the ethical use of AI. They guide the development of systems that align with human values and priorities.

Top 10 Research Papers on AI Agents

Here are our top 10 picks from the hundreds of research papers published on AI agents.

Paper 1: Modelling Social Action for AI Agents

Paper Summary

In this paper, Cristiano Castelfranchi explores foundational concepts of social action, structure, and intelligence in artificial agents. He emphasizes that sociality in AI emerges from individual agents’ actions and intelligence within a shared environment. The paper introduces a framework for understanding how individual and emergent collective phenomena shape the minds and behaviors of AI agents. It examines dependencies, coordination, and goal dynamics as key drivers of social interaction among cognitive agents, offering nuanced insights into goal delegation, adoption, and social commitment.

Key Insights of the Paper

Ontology of Social Action

The paper classifies social action into “weak” (based on beliefs about others’ mental states) and “strong” (guided by goals related to others’ minds or actions). Social action is distinguished from mere interaction, emphasizing that it involves treating others as cognitive agents with goals and beliefs.

Dependencies and Coordination

Dependence relationships among agents are foundational to sociality. The paper identifies two types of coordination:

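- Reactive coordination: an agent adjusts its behavior in response to interference or opportunities it actually perceives in others’ actions.

- Anticipatory coordination: an agent adjusts its plans in advance, based on its beliefs or predictions about what others will do.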

Goal Delegation and Adoption

Delegation involves one agent incorporating another’s action into its plans, while goal adoption occurs when an agent aligns its goals with another’s objectives, fostering cooperation. The paper elaborates on levels of delegation (e.g., open vs. closed) and forms of adoption (e.g., instrumental, terminal, or cooperative).

Social Commitment and Group Dynamics

Social commitment—a relational obligation between agents—is highlighted as the glue for collaborative efforts. The paper critiques oversimplified views of group action, stressing that shared goals and reciprocal commitments are vital for stable organizations.

Emergent Social Structures

The paper underscores the importance of emergent dependence networks in shaping agent behaviors and collective dynamics. These structures arise independently of individual intentions but feed back into agents’ decision-making, influencing their goals and actions.

Reconciling Cognition and Emergence

Castelfranchi argues for integrating cognitive deliberation with emergent, pre-cognitive phenomena, such as self-organizing cooperation, to model realistic social behaviors in AI systems.

Paper 2: Visibility into AI Agents

Paper Summary

The paper discusses the growing societal risks posed by autonomous AI agents that can perform complex tasks with minimal human oversight. As these systems spread across domains, a lack of transparency about how and where they are deployed and used could magnify risks, including malicious misuse, systemic vulnerabilities, and overreliance. The authors propose three measures to enhance visibility into AI agents: agent identifiers, real-time monitoring, and activity logs. These measures aim to give stakeholders tools for governance, accountability, and risk mitigation. The paper also explores challenges related to decentralized deployments and emphasizes the importance of balancing transparency with privacy and power dynamics.

Key Insights of the Paper

Agent Identifiers: Enhancing Traceability and Accountability

Agent identifiers are proposed as a foundational tool for visibility, allowing stakeholders to trace interactions involving AI agents. These identifiers can include embedded information about the agent, such as its goals, permissions, or its developers and deployers. The paper introduces the concept of “agent cards,” which encapsulate this additional information to provide context for each agent’s activities. Identifiers can be implemented in various ways, such as watermarks for visual outputs or metadata in API requests. This approach facilitates incident investigations and governance by linking specific actions to the agents responsible.
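As a rough illustration of identifiers and agent cards, the sketch below attaches an agent card to the metadata of an outgoing API request. The `AgentCard` fields, the `X-Agent-ID` and `X-Agent-Card` header names, and the `tag_request` helper are hypothetical; the paper does not prescribe a concrete format.

```python
import json
import uuid
from dataclasses import asdict, dataclass, field


@dataclass
class AgentCard:
    """Hypothetical agent card: context attached to an agent identifier."""
    developer: str
    deployer: str
    goal: str
    permissions: list[str]
    # Random identifier unique to this agent instance.
    agent_id: str = field(default_factory=lambda: str(uuid.uuid4()))


def tag_request(headers: dict[str, str], card: AgentCard) -> dict[str, str]:
    """Return a copy of the request headers with the agent's identity attached.

    The header names are illustrative assumptions, not an established standard.
    """
    tagged = dict(headers)
    tagged["X-Agent-ID"] = card.agent_id
    tagged["X-Agent-Card"] = json.dumps(asdict(card))
    return tagged


card = AgentCard(
    developer="ExampleLab",
    deployer="ExampleCorp",
    goal="Book travel for employees",
    permissions=["read_calendar", "purchase_tickets"],
)
headers = tag_request({"Content-Type": "application/json"}, card)
```

A tool or service provider receiving such a request could log the identifier or decline requests that arrive without one, which matches the enforcement role the paper assigns to such providers.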

Real-Time Monitoring: Oversight of Agent Activities

Real-time monitoring is emphasized as a proactive measure to flag problematic behavior as it occurs. This mechanism allows for oversight of an agent’s activities, such as unauthorized access to tools, excessive resource usage, or violations of operational boundaries. By automating the detection of anomalies and rule breaches, real-time monitoring can help deployers mitigate risks before they escalate. However, the paper acknowledges its limitations, particularly in addressing delayed or diffuse impacts that may emerge over time or across multiple interactions.
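A real-time monitor can be sketched as a check that runs before each action executes. The allow-list, the per-action `cost`, and the budget below are hypothetical stand-ins for the kinds of rules the paper mentions (unauthorized tool access, excessive resource usage); this is not the authors' design.

```python
from dataclasses import dataclass


@dataclass
class Action:
    tool: str
    cost: float  # e.g., compute or API spend attributed to this action


class RealTimeMonitor:
    """Flags rule breaches as actions occur (a minimal sketch)."""

    def __init__(self, allowed_tools: set[str], budget: float):
        self.allowed_tools = allowed_tools
        self.budget = budget
        self.spent = 0.0
        self.alerts: list[str] = []

    def check(self, action: Action) -> bool:
        """Return True if the action may proceed; otherwise record an alert and block it."""
        if action.tool not in self.allowed_tools:
            self.alerts.append(f"unauthorized tool: {action.tool}")
            return False
        if self.spent + action.cost > self.budget:
            self.alerts.append(f"budget exceeded by {action.tool}")
            return False
        self.spent += action.cost
        return True


monitor = RealTimeMonitor(allowed_tools={"search", "calculator"}, budget=10.0)
results = [monitor.check(a) for a in [
    Action("search", 4.0),
    Action("shell", 1.0),       # not on the allow-list
    Action("calculator", 7.0),  # would push total spend to 11.0, over the 10.0 budget
]]
```

Because the monitor sees one action at a time, it would not catch the delayed or diffuse impacts the paper warns about; that gap is what activity logs are meant to cover.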

Activity Logs: Facilitating Retrospective Analysis

Activity logs are presented as a complementary measure to real-time monitoring. They record an agent’s inputs, outputs, and state changes, enabling in-depth post-incident analysis. Logs are particularly useful for identifying patterns or impacts that unfold over longer timeframes, such as systemic biases or cascading failures in multi-agent systems. While logs can provide detailed insights, the paper highlights challenges in managing privacy concerns, data storage costs, and ensuring the relevance of logged information.
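Activity logs can be as simple as an append-only JSON Lines stream. In this minimal sketch, the entry schema (`ts`, `agent_id`, `kind`, `payload`) and the example payloads are assumptions chosen for illustration.

```python
import json
import time
from io import StringIO
from typing import Optional, TextIO


class ActivityLog:
    """Append-only JSON Lines log of an agent's inputs, outputs, and state changes."""

    def __init__(self, sink: TextIO):
        self.sink = sink  # any writable text stream, e.g. an open file

    def record(self, agent_id: str, kind: str, payload: dict) -> None:
        entry = {"ts": time.time(), "agent_id": agent_id, "kind": kind, "payload": payload}
        self.sink.write(json.dumps(entry) + "\n")


def load_entries(text: str, kind: Optional[str] = None) -> list[dict]:
    """Parse a log for post-incident analysis, optionally keeping one kind of entry."""
    entries = [json.loads(line) for line in text.splitlines() if line]
    return [e for e in entries if kind is None or e["kind"] == kind]


buffer = StringIO()
log = ActivityLog(buffer)
log.record("agent-42", "input", {"prompt": "Summarize Q3 sales"})
log.record("agent-42", "state_change", {"memory_items": 3})
log.record("agent-42", "output", {"text": "Q3 summary draft"})
outputs = load_entries(buffer.getvalue(), kind="output")
```

Deciding which payload fields to keep, and for how long, is where the privacy and storage trade-offs the paper raises come into play.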

Risks of AI Agents: Understanding the Threat Landscape

The paper explores several risks associated with AI agents. Malicious use, such as automating harmful tasks or conducting large-scale influence campaigns, could be amplified by these systems’ autonomy. Overreliance on agents for high-stakes decisions could lead to catastrophic failures if these systems malfunction or are attacked. Multi-agent systems introduce additional risks, including feedback loops and emergent behaviors that could destabilize broader systems. These risks underline the need for robust visibility mechanisms to ensure accountability and mitigate harm.

Challenges of Decentralized Deployments

Decentralized deployments pose unique obstacles to visibility. Users can independently deploy agents, bypassing centralized oversight. To address this, the authors propose leveraging compute providers and tool or service providers as enforcement points. These entities could condition access to resources on the implementation of visibility measures like agent identifiers. The paper also suggests voluntary standards and open-source frameworks as pathways to promote transparency in decentralized contexts without overly restrictive regulation.

Privacy and Power Concerns: Balancing Transparency with Ethics

While visibility measures are essential, they come with significant privacy risks. The extensive data collection needed for monitoring and logging could lead to surveillance concerns and erode user trust. Additionally, a reliance on centralized deployers for visibility measures may consolidate power among a few entities, potentially exacerbating systemic vulnerabilities. The authors advocate for decentralized approaches, transparency frameworks, and data protection safeguards to balance the need for visibility with ethical considerations.

Paper 3: Artificial Intelligence and Virtual Worlds – Toward Human-Level AI Agents

Paper Summary

The paper explores the intersection of AI and virtual worlds, focusing on AI agents as a means of advancing toward human-level intelligence. It highlights how modern virtual worlds, such as interactive computer games and multi-user virtual environments (MUVEs), serve as testbeds for developing and understanding autonomous intelligent agents. These agents play an integral role in enhancing user immersion and interaction within virtual worlds while also offering opportunities to research complex AI behaviors. The author emphasizes that despite advances in AI, human-level intelligence remains a long-term challenge that requires integrated approaches combining traditional and advanced AI methods.

Key Insights of the Paper

The Role of AI in Virtual Worlds

Virtual worlds provide fertile ground for AI development because they allow controlled experimentation with intelligent agents. While earlier game development focused heavily on 3D graphics, true immersion and interaction now depend on more believable, lifelike agent behaviors.

Game Agents (NPCs)

Non-Player Characters (NPCs) are central to enhancing user experience in virtual worlds. The author discusses how AI for NPCs often prioritizes the illusion of intelligence over actual complexity, balancing realism against game performance constraints.

AI Techniques Shaping NPC Behavior

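- Traditional Methods: hand-authored techniques such as finite state machines, scripted behaviors, and pathfinding.

- Advanced Techniques: approaches such as automated planning and machine learning, including neural networks.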

- The paper highlights notable case studies, such as F.E.A.R., which utilized planning algorithms, and Creatures, which employed neural networks for learning and adaptation.
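F.E.A.R. is widely documented as using Goal-Oriented Action Planning (GOAP), in which an NPC searches for a sequence of actions whose effects satisfy a goal. The toy breadth-first planner below illustrates the idea; the actions and world-state keys are hypothetical, and production GOAP systems typically run A* over weighted actions.

```python
from collections import deque
from typing import Optional

# Hypothetical NPC actions: name -> (preconditions, effects) over world-state facts.
ACTIONS = {
    "draw_weapon": ({"weapon_drawn": False}, {"weapon_drawn": True}),
    "reload": ({"weapon_drawn": True, "loaded": False}, {"loaded": True}),
    "attack": ({"weapon_drawn": True, "loaded": True}, {"target_down": True}),
}


def satisfied(state: dict, conditions: dict) -> bool:
    return all(state.get(key) == value for key, value in conditions.items())


def plan(start: dict, goal: dict) -> Optional[list[str]]:
    """Breadth-first search for the shortest action sequence that reaches the goal."""
    frontier = deque([(start, [])])
    seen = {frozenset(start.items())}
    while frontier:
        state, steps = frontier.popleft()
        if satisfied(state, goal):
            return steps
        for name, (pre, effects) in ACTIONS.items():
            if satisfied(state, pre):
                nxt = {**state, **effects}
                key = frozenset(nxt.items())
                if key not in seen:
                    seen.add(key)
                    frontier.append((nxt, steps + [name]))
    return None  # goal unreachable with the available actions


start = {"weapon_drawn": False, "loaded": False, "target_down": False}
print(plan(start, {"target_down": True}))  # ['draw_weapon', 'reload', 'attack']
```

Because the NPC derives its behavior from goals and action definitions rather than a fixed script, it can replan when the world state changes.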

Human-Level Intelligence and Virtual Worlds

The paper connects virtual agents to broader AI theories, including:

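- Embodiment Theory: the view that intelligence develops through a body that senses and acts in an environment.

- Situatedness: the view that intelligent behavior arises from an agent’s ongoing interaction with a concrete environment rather than from abstract reasoning alone.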

Challenges and Technical Limitations

Despite their potential, virtual worlds face limitations:

- Simplified embodiment of NPCs (mostly graphical).

- Computational costs of implementing advanced AI techniques in real time.

- Static nature of virtual worlds, which hinders accurate modeling of real-world physics and interactions.

Potential of Virtual Worlds as Testbeds

Virtual worlds are increasingly seen as ideal platforms for AI research. Their ability to simulate real-time decision-making, social interactions, and dynamic environments aligns well with the requirements for advancing toward human-level AI. AI competitions built on games such as StarCraft exemplify how virtual worlds push the boundaries of AI development.

Paper 4: Intelligent Agents: Theory and Practice

Paper Summary

The paper explores the fundamental concepts, design, and challenges of intelligent agents. Agents are defined as autonomous, interactive systems capable of perceiving and responding to their environments while pursuing goal-directed behaviors. The authors categorize the field into three main areas: agent theories (formal properties of agents), agent architectures (practical design frameworks), and agent programming languages (tools for implementing agents). The paper discusses both theoretical foundations and practical applications, highlighting ongoing challenges in balancing formal precision with real-world constraints.

Key Insights of the Paper

Definitions of Agents: Weak and Strong Perspectives

Agents are defined in two ways. A weak notion of agency views agents as autonomous systems that operate without direct human intervention, interact socially, perceive their surroundings, and pursue goals. A strong notion of agency goes further, describing agents in terms of human-like attributes such as beliefs, desires, and intentions to model more sophisticated, intelligent behaviors.

Agent Theories: Formalizing Agent Properties

The paper discusses formal frameworks for representing agents’ properties, focusing on modal logics and possible worlds semantics. These methods model agents’ knowledge, beliefs, and reasoning abilities. However, challenges arise with logical omniscience, where agents are unrealistically assumed to know all logical consequences of their beliefs, an issue that limits practical applicability.
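The logical-omniscience problem falls directly out of possible-worlds semantics. In the minimal sketch below (an illustration, not code from the paper), an agent "knows" a formula when it holds in every world the agent considers possible, so the agent automatically knows every consequence of what it knows, including every tautology.

```python
from itertools import product
from typing import Callable

World = dict[str, bool]
Formula = Callable[[World], bool]

# Every truth assignment to the atoms p and q is a possible world.
WORLDS: list[World] = [dict(zip("pq", values)) for values in product([True, False], repeat=2)]


def knows(accessible: list[World], phi: Formula) -> bool:
    """Possible-worlds semantics: the agent knows phi iff phi holds in every
    world it considers possible (its accessible worlds)."""
    return all(phi(world) for world in accessible)


# The agent has ruled out every world where p, or "p implies q", is false.
accessible = [w for w in WORLDS if w["p"] and (not w["p"] or w["q"])]

p = lambda w: w["p"]
q = lambda w: w["q"]
p_implies_q = lambda w: not w["p"] or w["q"]
tautology = lambda w: w["p"] or not w["p"]

print(knows(accessible, p), knows(accessible, p_implies_q))  # True True
print(knows(accessible, q))      # True: the consequence is known automatically
print(knows(WORLDS, tautology))  # True: even an agent with no information "knows" it
```

Real agents cannot compute every consequence of their beliefs, which is why this idealization limits the practical applicability of such logics.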

Agent Architectures: Deliberative and Reactive Approaches

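- Deliberative (Symbolic) Architectures: agents maintain an explicit symbolic model of the world and decide what to do through logical reasoning or planning.

- Reactive Architectures: agents map perceptions directly to actions, without a symbolic world model or complex symbolic reasoning.

- Hybrid Architectures: layered designs that combine a reactive component with a deliberative one.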
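For contrast with deliberative designs, here is a reactive agent sketched as a prioritized list of condition-action rules, loosely in the spirit of Brooks's subsumption architecture, a classic reactive design. The rover percepts and actions are hypothetical.

```python
from typing import Callable

Percept = dict[str, bool]

# Condition-action rules ordered from highest to lowest priority:
# an earlier rule that fires suppresses every rule below it.
BEHAVIORS: list[tuple[Callable[[Percept], bool], str]] = [
    (lambda p: p["obstacle_ahead"], "turn_left"),
    (lambda p: p["carrying_sample"] and p["at_base"], "drop_sample"),
    (lambda p: p["sample_visible"], "pick_up_sample"),
    (lambda p: True, "wander"),  # default behavior
]


def react(percept: Percept) -> str:
    """Pick an action straight from the current percept: no world model, no planning."""
    for condition, action in BEHAVIORS:
        if condition(percept):
            return action
    raise RuntimeError("unreachable: the default behavior always fires")


percept = {"obstacle_ahead": False, "carrying_sample": False, "at_base": False, "sample_visible": True}
print(react(percept))  # pick_up_sample
```

A deliberative agent facing the same percepts would instead update a symbolic world model and plan; the reactive version trades that flexibility for speed and simplicity.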

Agent Programming Languages: Communication Mechanisms

Among the languages the authors survey is KQML (Knowledge Query and Manipulation Language), an agent communication language that standardizes how agents exchange messages. Inspired by speech act theory, such languages treat messages as actions intended to change the recipient agent’s state, enabling more effective collaboration.
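The speech-act idea is visible in the shape of a KQML message: a performative (such as `ask-one` or `tell`) names the communicative act, and keyword parameters carry the sender, receiver, content language, ontology, and content. The sketch below only renders a message string; the agent names and content are illustrative values, and no KQML library is assumed.

```python
def kqml_message(performative: str, **params: str) -> str:
    """Render a KQML-style message: a performative followed by :keyword value pairs.

    Python keyword arguments cannot contain hyphens, so underscores in parameter
    names are converted (e.g. reply_with -> :reply-with).
    """
    fields = " ".join(f":{name.replace('_', '-')} {value}" for name, value in params.items())
    return f"({performative} {fields})"


msg = kqml_message(
    "ask-one",
    sender="joe",
    receiver="stock-server",
    reply_with="ibm-stock",
    language="LPROLOG",
    ontology="NYSE-TICKS",
    content="(PRICE IBM ?price)",
)
print(msg)
```

The receiving agent interprets the performative, not just the content: the same content wrapped in `tell` instead of `ask-one` would be an assertion rather than a query.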

Applications of Agent Technology

Intelligent agents are applied in diverse fields, including air-traffic control, robotics, and software automation. Examples include softbots that autonomously perform tasks in software environments, as well as multi-agent systems that manage resource allocation and solve dynamic, real-world problems.

Open Challenges in Agent Development

The paper highlights key challenges that remain unresolved:

- Computational Limits: Addressing resource-bounded reasoning in agents remains a major hurdle.

- Scalability: Formal reasoning frameworks often fail to scale to real-world problems due to their complexity.

- Theory vs. Practice: Bridging the gap between theoretical precision and practical implementation continues to challenge researchers.

Paper 5: TPTU: Task Planning and Tool Usage of Large Language Model-based AI Agents

Paper Summary

The paper evaluates the challenges faced by Large Language Models (LLMs) in solving real-world tasks that require external tool usage and structured task planning. While LLMs excel at text generation, they often fail to handle complex tasks requiring logical reasoning, dynamic planning, and precise execution. The authors propose a framework for evaluating Task Planning and Tool Usage (TPTU) abilities, designing two agent types:

- One-Step Agent (TPTU-OA): Plans all subtasks in a single pass before executing them.

- Sequential Agent (TPTU-SA): Solves tasks incrementally, breaking them into steps and refining plans as it progresses.

The authors evaluate popular LLMs like ChatGPT and InternLM using SQL and Python tools for solving a variety of tasks, analyzing their strengths, weaknesses, and overall performance.

Key Insights of the Paper

Agent Framework and Abilities

The proposed framework for LLM-based AI agents includes task instructions, prompts, toolsets, intermediate results, and final answers. The abilities needed for effective task execution are perception, task planning, tool usage, memory/feedback learning, and summarization.

Design of One-Step and Sequential Agents

The One-Step Agent plans globally, generating all subtasks and tool usage steps upfront. This approach relies on the model’s ability to map out the entire solution in one go but struggles with flexibility for complex tasks. The Sequential Agent, on the other hand, focuses on solving one subtask at a time. It integrates previous feedback, dynamically adapting its plan. This incremental approach improves performance by enabling the model to adjust based on context and intermediate results.
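The difference between the two agent designs can be sketched as two control loops. The planners and tools below are scripted, hypothetical stand-ins for LLM calls and for the paper's SQL and Python tools; only the loop structure reflects the TPTU-OA and TPTU-SA descriptions.

```python
from typing import Callable, Optional

Tool = Callable[[str], str]
Pair = tuple[str, str]  # (tool name, subtask description)


def one_step_agent(plan_all: Callable[[str], list[Pair]], tools: dict[str, Tool], task: str) -> list[str]:
    """TPTU-OA style: get every (tool, subtask) pair up front, then execute them in order."""
    pairs = plan_all(task)  # a single global plan; it is never revised
    return [tools[tool](subtask) for tool, subtask in pairs]


def sequential_agent(
    plan_next: Callable[[str, list[str]], Optional[Pair]],
    tools: dict[str, Tool],
    task: str,
    max_steps: int = 10,
) -> list[str]:
    """TPTU-SA style: plan one pair at a time, showing the planner all results so far."""
    results: list[str] = []
    for _ in range(max_steps):
        step = plan_next(task, results)
        if step is None:  # the planner signals that the task is finished
            break
        tool, subtask = step
        results.append(tools[tool](subtask))
    return results


# Scripted stand-ins for LLM planners and tools (all hypothetical).
tools: dict[str, Tool] = {
    "SQL": lambda query: "3",  # pretends every query returns a count of 3
    "Python": lambda expr: str(sum(int(t) for t in expr.split("+"))),  # tiny adder
}


def scripted_plan_all(task: str) -> list[Pair]:
    # The whole plan is fixed before any tool runs, so step 2 cannot use step 1's output.
    return [("SQL", "SELECT COUNT(*) FROM orders"), ("Python", "2+2")]


def scripted_plan_next(task: str, results: list[str]) -> Optional[Pair]:
    if not results:
        return ("SQL", "SELECT COUNT(*) FROM orders")
    if len(results) == 1:
        return ("Python", f"{results[0]}+4")  # builds on the previous result
    return None


print(one_step_agent(scripted_plan_all, tools, "demo"))     # ['3', '4']
print(sequential_agent(scripted_plan_next, tools, "demo"))  # ['3', '7']
```

The one-step planner must commit to its second subtask before the SQL result exists, while the sequential planner builds its second subtask from the first result.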

Task Planning Evaluation

The evaluation tested the agents’ ability to generate tool-subtask pairs, which link a tool with a relevant subtask description. Sequential agents outperformed one-step agents, especially for complex problems, because they mimic human-like step-by-step problem-solving.

- One-Step Agent (TPTU-OA): Global task planning but limited adaptability.

- Sequential Agent (TPTU-SA): Incremental problem-solving, benefiting from richer contextual understanding and error correction between steps.

Tool Usage Challenges

LLM-based agents struggled with effectively using multiple tools:

- Output Formatting Errors: Difficulty adhering to structured formats (e.g., tool-subtask lists).

- Task Misinterpretation: Incorrectly breaking tasks into subtasks or selecting inappropriate tools.

- Overutilization of Tools: Repeatedly applying tools unnecessarily.

- Poor Summarization: Relying on internal knowledge instead of integrating subtask outputs.

For instance, agents often misused SQL generators for purely mathematical problems or failed to summarize subtask responses accurately.
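A cheap guard against the output-formatting and tool-selection errors above is to validate a planned tool-subtask list before running anything. The sketch assumes a JSON format and tool names of our own choosing; the paper's prompts may specify a different structure.

```python
import json

# Illustrative tool names; the paper's actual tool set and output format may differ.
KNOWN_TOOLS = {"SQL Generator", "Python Generator"}


def parse_tool_subtask_pairs(raw: str) -> list[tuple[str, str]]:
    """Validate a planned tool-subtask list before executing anything.

    Assumes a hypothetical JSON format: a list of {"tool": ..., "subtask": ...} objects.
    Raises ValueError describing the first problem found.
    """
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"output is not valid JSON: {exc.msg}") from exc
    if not isinstance(data, list) or not data:
        raise ValueError("expected a non-empty list of tool-subtask pairs")
    pairs = []
    for i, item in enumerate(data):
        if not isinstance(item, dict) or set(item) != {"tool", "subtask"}:
            raise ValueError(f"item {i} must have exactly the keys 'tool' and 'subtask'")
        if item["tool"] not in KNOWN_TOOLS:
            raise ValueError(f"item {i} uses unknown tool {item['tool']!r}")
        pairs.append((item["tool"], item["subtask"]))
    return pairs


good = '[{"tool": "SQL Generator", "subtask": "Count orders placed in 2023"}]'
print(parse_tool_subtask_pairs(good))
# [('SQL Generator', 'Count orders placed in 2023')]
```

Rejecting a malformed plan early lets the agent re-prompt the model instead of executing a half-parsed plan.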

Performance of LLMs

ChatGPT achieved the best overall performance, particularly with sequential agents, where it scored 55% accuracy. InternLM showed moderate improvement, while Ziya and Chinese-Alpaca struggled to complete tasks involving external tools. The results highlight the gap in tool-usage capabilities across LLMs and the value of sequential task planning for improving accuracy.

Observations on Agent Behavior

The experiments revealed specific weaknesses in LLM-based agents:

- Misunderstanding task requirements, leading to poor subtask breakdowns.

- Errors in output formatting, which create inconsistencies.

- Over-reliance on particular tools, which causes redundant or inefficient solutions.

These behaviors provide critical insights into areas where LLMs need refinement, particularly for complex, multi-tool tasks.

Paper 6: A Survey on Context-Aware Multi-Agent Systems: Techniques, Challenges and Future Directions

Paper Summary

The paper examines the integration of Context-Aware Systems (CAS) with Multi-Agent Systems (MAS) to improve the adaptability, learning, and reasoning capabilities of autonomous agents in dynamic environments. It identifies context awareness as a critical feature that enables agents to perceive, comprehend, and act based on both internal (e.g., goals, behavior) and external (e.g., environmental changes, agent interactions) information. The survey provides a unified Sense-Learn-Reason-Predict-Act framework for context-aware multi-agent systems (CA-MAS) and explores relevant techniques, challenges, and future directions in this emerging field.

Key Insights of the Paper

Context-Aware Multi-Agent Systems (CA-MAS)

CA-MAS combines the autonomy and coordination capabilities of MAS with the adaptability of CAS to handle uncertain, dynamic environments. Agents rely on both intrinsic (goals, prior knowledge) and extrinsic (environmental or social) context to make decisions. This integration is vital for applications like autonomous driving, disaster relief management, utility optimization, and human-AI collaboration.

The authors propose a five-phase framework (Sense, Learn, Reason, Predict, and Act) to describe the CA-MAS process.

Sense: Agents gather contextual information through direct observations, communication with other agents, or sensing from the environment. Context graphs are often used to map relationships between contexts in dynamic environments.

Learn: Agents process the acquired context into meaningful representations. Techniques like key-value models, object-oriented models, and ontology-based models allow agents to structure and understand contextual data. To handle high-dimensional data and dynamic changes, deep learning methods such as LSTM, CNN, and reinforcement learning (RL) are applied. These models allow agents to adapt their behavior to shifting situations effectively.

Reason: Agents analyze context to make decisions or plan actions. Various reasoning approaches are discussed: rule-based reasoning for predefined responses, fuzzy logic for handling uncertainty, graph-based reasoning to analyze contextual relationships, and goal-oriented reasoning, where agents optimize actions based on cost functions or reinforcement learning feedback.

Predict: Agents anticipate future events using predictive models that minimize errors through cost-based or reward-based optimization. These predictions allow agents to proactively respond to changes in the environment.

Act: Agents execute actions guided by deterministic rules or stochastic policies that aim to optimize outcomes. Actions are continuously refined based on environmental feedback to enhance performance.
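The five phases can be sketched as a single agent step. Everything below is a hypothetical toy (a thermostat-like agent with a key-value context model, a rule-based reasoner, and a linear-trend predictor), meant only to show how each phase feeds the next; the survey does not prescribe these components.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ContextAwareAgent:
    """Toy agent running Sense -> Learn -> Reason -> Predict -> Act once per step."""
    threshold: float = 30.0
    context: dict[str, float] = field(default_factory=dict)  # key-value context model
    history: list[float] = field(default_factory=list)

    def sense(self, observation: dict[str, float], shared: dict[str, float]) -> dict[str, float]:
        # Merge context shared by other agents with direct observation (observation wins).
        return {**shared, **observation}

    def learn(self, raw: dict[str, float]) -> None:
        # Update the key-value context model and keep a history for prediction.
        self.context.update(raw)
        self.history.append(raw["temperature"])

    def reason(self) -> str:
        # Rule-based reasoning over the current context.
        return "cool" if self.context["temperature"] > self.threshold else "idle"

    def predict(self) -> float:
        # Naive linear-trend forecast of the next temperature reading.
        if len(self.history) < 2:
            return self.history[-1]
        return self.history[-1] + (self.history[-1] - self.history[-2])

    def act(self, decision: str, forecast: float) -> str:
        # Act proactively if the forecast crosses the threshold before the reading does.
        if decision == "idle" and forecast > self.threshold:
            return "pre-cool"
        return decision

    def step(self, observation: dict[str, float], shared: Optional[dict[str, float]] = None) -> str:
        self.learn(self.sense(observation, shared or {}))
        return self.act(self.reason(), self.predict())


agent = ContextAwareAgent()
actions = [agent.step({"temperature": t}, {"occupancy": 1.0}) for t in (24.0, 28.0, 31.0)]
print(actions)  # ['idle', 'pre-cool', 'cool']
```

The second step shows the point of the Predict phase: the current reading alone says "idle", but the forecast lets the agent respond before the threshold is actually crossed.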

Techniques and Challenges

The paper extensively discusses techniques for context modeling and reasoning. Context modeling techniques, including key-value pairs, object-oriented structures, and ontology-based models, are used to represent information, while reasoning models such as case-based reasoning, graph-based reasoning, and reinforcement learning enable agents to derive decisions and handle uncertainty.

Despite advancements, CA-MAS faces significant challenges. One major issue is the lack of organizational structures for effective context sharing, which can introduce inefficiencies, security risks, and privacy concerns. Without structured coordination, agents may share irrelevant or sensitive context, reducing trust and system performance.

Another key challenge is the ambiguity and inconsistency in agent consensus when operating in uncertain environments. Agents often encounter incomplete or mismatched information, leading to conflicts during collaboration. Robust consensus techniques and conflict-resolution strategies are essential for improving communication and decision-making in CA-MAS.

The reliance on predefined rules and patterns limits agent adaptability in highly dynamic environments. While deep reinforcement learning (DRL) has emerged as a promising solution, integrating agent ontologies with DRL techniques remains underexplored. The authors highlight opportunities for leveraging graph-based neural networks (GNNs) and variational autoencoders (VAEs) to bridge this gap and enhance the contextual reasoning of agents.

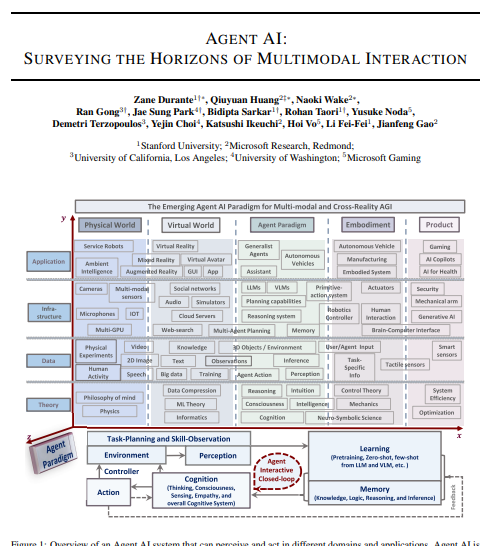

Paper 7: Agent AI: Surveying the Horizons of Multimodal Interaction

Paper Summary

The paper explores the emerging field of multimodal AI agents capable of processing and interacting through various sensory inputs such as visual, audio, and textual data. These systems are positioned as a critical step toward Artificial General Intelligence (AGI) because they enable agents to act within physical and virtual environments. The authors present “Agent AI” as a new class of interactive systems that combine external knowledge, multi-sensory inputs, and human feedback to improve action prediction and decision-making. The paper provides a framework for training and developing multimodal agents while addressing the challenges of hallucinations, generalization across environments, and ethical considerations in deployment.

Key Insights of the Paper

Agent AI Framework and Capabilities

The paper defines Agent AI as systems designed to perceive environmental stimuli, understand language, and produce embodied actions. To achieve this, the framework integrates multiple modalities such as vision, speech, and environmental context. By leveraging large foundation models, including Large Language Models (LLMs) and Vision-Language Models (VLMs), Agent AI improves its ability to interpret visual and linguistic cues, enabling effective task execution. These systems are applied across domains like gaming, robotics, and healthcare, demonstrating their versatility.

Training Paradigm and Embodied Learning

The authors propose a new paradigm for Agent AI training that incorporates several essential components: perception, memory, task planning, and cognitive reasoning. Using pre-trained models as a base, the agents are fine-tuned to learn domain-specific tasks while interacting with their environments. Reinforcement Learning (RL), imitation learning, and human feedback mechanisms are emphasized to refine agents’ decision-making and enable adaptive learning. This method improves long-term task planning and action execution, particularly in dynamic or unfamiliar scenarios.

Applications and Use Cases

The paper highlights significant applications of Agent AI in interactive domains:

- Robotics: Agents perform physical tasks by combining vision, action prediction, and task planning, particularly in tasks requiring human-like movement.

- Gaming and Virtual Reality: Interactive agents in gaming environments are used for natural communication, action planning, and immersive VR/AR experiences.

- Healthcare: Agent AI supports medical tasks such as interpreting clinical data or assisting in patient care by integrating visual and contextual information.

Challenges and Emerging Trends

While multimodal agents demonstrate promising results, several challenges remain:

- Hallucinations: Large foundation models often generate incorrect outputs, especially in unseen environments. The authors address this by combining multiple inputs (e.g., audio and video) to minimize errors.

- Generalization: Training agents to adapt to new domains requires extensive data and robust learning frameworks. Techniques such as task decomposition, environmental feedback, and data augmentation are proposed to improve adaptability.

- Ethics and Privacy: The integration of AI agents into real-world systems raises concerns regarding data privacy, bias, and accountability. The paper emphasizes the need for ethical guidelines, transparency, and user trust.

Paper 8: Large Language Model-Based Multi-Agents: A Survey of Progress and Challenges

Paper Summary

The paper provides a comprehensive overview of the development, applications, and challenges of multi-agent systems powered by Large Language Models (LLMs). These systems harness the collective intelligence of multiple LLM-based agents to solve complex problems and simulate real-world environments. The survey categorizes the research into problem-solving applications, such as software development and multi-robot collaboration, and world simulation scenarios, including societal, economic, and psychological simulations. It further dissects critical components of LLM-based multi-agent systems, including agents’ communication, capabilities, and interaction with environments. The authors highlight existing limitations like hallucinations, scalability issues, and the lack of multi-modal integration while outlining opportunities for future research.

Key Insights of the Paper

LLM-Based Multi-Agent Systems

The paper explains that LLM-based multi-agent systems (LLM-MA) extend the capabilities of single-agent systems by enabling agents to specialize, interact, and collaborate. These agents are tailored for distinct roles, allowing them to collectively solve tasks or simulate diverse real-world phenomena. LLM-MA systems leverage LLMs’ reasoning, planning, and communication abilities to act autonomously and adaptively.

Agents-Environment Interface

The agents’ interaction with their environment is classified into three categories: sandbox (virtual/simulated), physical (real-world environments), and none (communication-based systems with no external interface). Agents perceive feedback from these environments to refine their strategies over time, particularly in tasks like robotics, gaming, and decision-making simulations.

Agents Communication and Profiling

Communication between agents is pivotal for collaboration. The paper identifies three communication paradigms: cooperative, where agents share information to achieve a common goal; debate, where agents argue to converge on a solution; and competitive, where agents work toward individual objectives. Communication structures, including centralized, decentralized, and shared message pools, are analyzed for their efficiency in coordinating tasks. Agents are profiled through pre-defined roles, model-generated traits, or data-derived features, enabling them to behave in context-specific ways.
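Of the communication structures listed, the shared message pool is the least familiar. A minimal sketch, with hypothetical agent roles and topics: instead of addressing each other directly, agents publish to one pool, and each reads only messages on topics it subscribes to.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Message:
    sender: str
    topic: str
    content: str


class MessagePool:
    """Shared message pool: agents post to one pool and read only subscribed topics."""

    def __init__(self) -> None:
        self.messages: list[Message] = []
        self.subscriptions: dict[str, set[str]] = defaultdict(set)

    def subscribe(self, agent: str, topic: str) -> None:
        self.subscriptions[agent].add(topic)

    def publish(self, message: Message) -> None:
        self.messages.append(message)

    def read(self, agent: str) -> list[Message]:
        """Return messages on the agent's topics, excluding the agent's own posts."""
        topics = self.subscriptions[agent]
        return [m for m in self.messages if m.topic in topics and m.sender != agent]


pool = MessagePool()
pool.subscribe("engineer", "design")
pool.subscribe("tester", "code")
pool.publish(Message("architect", "design", "Use a REST API with two endpoints."))
pool.publish(Message("engineer", "code", "Implemented both endpoints."))
```

A centralized structure would instead route every message through one coordinating agent, and a decentralized one would have agents message their peers directly; the pool decouples senders from receivers at the cost of a shared store that every agent depends on.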

Capabilities Acquisition

Agents in LLM-MA systems acquire capabilities through feedback and adjustment mechanisms:

- Memory: Agents use short-term and long-term memory to store and retrieve historical interactions.

- Self-Evolution: Agents dynamically update their goals and strategies based on feedback, ensuring adaptability.

- Dynamic Generation: Systems generate new agents on the fly to address emerging tasks, scaling efficiently in complex settings.
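Short-term and long-term memory can be sketched as two stores: a bounded window of recent events and an archive searched on demand. This is a minimal, hypothetical version; real LLM agents usually retrieve by embedding similarity rather than the word overlap used here.

```python
from collections import deque


class AgentMemory:
    """Short-term memory as a bounded window; long-term memory as an archive
    searched by word overlap (a crude stand-in for embedding-based retrieval)."""

    def __init__(self, window: int = 3):
        self.short_term: deque[str] = deque(maxlen=window)
        self.long_term: list[str] = []

    def add(self, event: str) -> None:
        self.short_term.append(event)  # the oldest event drops out when the window is full
        self.long_term.append(event)   # every event is archived

    def retrieve(self, query: str, k: int = 2) -> list[str]:
        """Return up to k archived events sharing the most words with the query."""
        words = set(query.lower().split())
        scored = [(len(words & set(e.lower().split())), e) for e in self.long_term]
        ranked = sorted(scored, key=lambda pair: pair[0], reverse=True)
        return [e for score, e in ranked if score > 0][:k]


mem = AgentMemory(window=2)
for event in ["user prefers window seats", "flight booked to Paris", "hotel booked near Louvre"]:
    mem.add(event)
print(list(mem.short_term))  # ['flight booked to Paris', 'hotel booked near Louvre']
print(mem.retrieve("which seats does the user like"))  # ['user prefers window seats']
```

The seat preference has already left the short-term window but is still recoverable from long-term memory, which is the division of labor the two stores are meant to provide.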

Applications of LLM-Based Multi-Agent Systems

The paper categorizes applications into two primary streams:

- Problem Solving: LLM-MA systems are applied in software development (role-based collaboration), embodied robotics (multi-robot systems), and scientific experimentation (collaborative automation). These applications rely on agents specializing in different tasks and refining solutions through iterative feedback.

- World Simulation: Multi-agent systems are used for societal simulations (social behavior modeling), gaming (interactive role-playing scenarios), economic simulations (market trading and policy-making), and psychology (replicating human behaviors). Agents simulate realistic environments to test theories, evaluate behaviors, and explore emergent patterns.

Challenges of LLM-Based Multi-Agent Systems

The authors identify several challenges:

- Hallucinations: An incorrect output from one agent can propagate to the agents that build on it, leading to cascading errors.

- Scalability: Scaling multi-agent systems increases computational demands and coordination complexities.

- Multi-Modal Integration: Most current systems rely on textual communication, lacking integration of visual, audio, and other sensory data.

- Evaluation Metrics: Standardized benchmarks and datasets are still limited, particularly for simulations in domains like science, economics, and policy-making.
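A back-of-the-envelope model shows why hallucination propagation matters. Assume, purely for illustration, a sequential pipeline of n agents in which each agent independently hallucinates with probability p and any error is passed downstream uncorrected. The chance that the final output is error-free is then (1 − p)^n. The `catch_rate` parameter is a hypothetical reviewer that intercepts a fraction of each agent's errors. The numbers below are illustrative, not results reported in the survey.

```python
def pipeline_success(p_error: float, n_agents: int, catch_rate: float = 0.0) -> float:
    """Probability that a chain of n agents yields an error-free result,
    assuming independent per-agent errors that propagate unless caught.

    catch_rate is a hypothetical probability that a reviewer intercepts
    an agent's error before it is passed downstream.
    """
    if not (0.0 <= p_error <= 1.0 and 0.0 <= catch_rate <= 1.0):
        raise ValueError("probabilities must lie in [0, 1]")
    effective_error = p_error * (1.0 - catch_rate)
    return (1.0 - effective_error) ** n_agents


for n in (1, 5, 10):
    print(n, round(pipeline_success(0.05, n), 3))
# 1 0.95
# 5 0.774
# 10 0.599
print(round(pipeline_success(0.05, 5, catch_rate=0.8), 3))  # 0.951
```

Under these assumptions, a 5% per-agent error rate already leaves roughly one in four five-agent runs with a corrupted result, which is why verification steps between agents are worth their cost.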

Paper 9: The Rise and Potential of Large Language Model-Based Agents: A Survey

Link: Read this AI Agent Research Paper Here

Paper Summary

The paper explores the evolution and transformative potential of large language models (LLMs) as the foundation for advanced AI agents. Tracing the origins of AI agents from philosophy to modern AI, the authors present LLMs as a breakthrough in achieving autonomy, reasoning, and adaptability. A conceptual framework is introduced, consisting of three core components (brain, perception, and action) that together enable agents to function effectively in diverse environments. Practical applications of LLM-based agents are explored, spanning single-agent scenarios, multi-agent interactions, and human-agent collaboration. The paper also addresses challenges related to ethics, scalability, and the trustworthiness of these systems.
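The brain, perception, and action decomposition maps naturally onto a simple agent loop. The Python sketch below is a hypothetical skeleton, assuming each module is a plain function: perception turns a raw observation into text, the brain chooses an action from that text and a memory list, and the action module executes it and returns feedback. In a real system the brain would call an LLM and perception might use multimodal encoders; here a rule-based stub stands in so the loop runs on its own. All names are illustrative.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Agent:
    """Hypothetical skeleton of the brain / perception / action framework."""
    perceive: Callable[[dict], str]    # raw observation -> text percept
    brain: Callable[[str, list], str]  # (percept, memory) -> action
    act: Callable[[str], str]          # action -> environment feedback
    memory: list = field(default_factory=list)

    def step(self, observation: dict) -> str:
        percept = self.perceive(observation)
        action = self.brain(percept, self.memory)
        feedback = self.act(action)
        # Record the interaction so later decisions can condition on it.
        self.memory.append(f"{percept} -> {action} -> {feedback}")
        return action


def describe_temperature(obs: dict) -> str:
    return f"temperature is {obs['temp_c']} C"


def rule_based_brain(percept: str, memory: list) -> str:
    # Stand-in for an LLM call: read the number from the percept and apply
    # a fixed rule (this stub ignores memory).
    temp = float(percept.split()[2])
    return "turn_on_heater" if temp < 18 else "do_nothing"


def execute(action: str) -> str:
    return f"executed {action}"


agent = Agent(describe_temperature, rule_based_brain, execute)
print(agent.step({"temp_c": 15}))  # turn_on_heater
print(agent.step({"temp_c": 22}))  # do_nothing
```

Keeping the three modules as swappable callables mirrors the framework's separation of concerns: a multimodal perception module or an LLM-backed brain can replace the stubs without changing the loop.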

Key Insights of the Paper

LLMs as the Brain of AI Agents

LLMs serve as the cognitive core of AI agents, enabling capabilities such as reasoning, memory, planning, and dynamic learning. Unlike earlier symbolic or reactive systems, LLM-based agents can adapt to unseen tasks and execute goal-driven actions autonomously. Memory mechanisms such as summarization and automated retrieval help agents handle long interaction histories efficiently. Techniques like Chain-of-Thought (CoT) reasoning and task decomposition further enhance problem-solving and planning.
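Task decomposition can be sketched as recursively expanding a goal into subtasks until only directly executable steps remain. In the Python sketch below, a hand-written lookup table (`DECOMPOSITIONS`, hypothetical) plays the role an LLM would play in a real agent, and the result is the ordered list of primitive steps.

```python
# Hypothetical decomposition table standing in for LLM-generated subtasks.
DECOMPOSITIONS: dict[str, list[str]] = {
    "write report": ["gather data", "draft text", "review draft"],
    "gather data": ["search sources", "extract figures"],
    "review draft": ["check facts", "fix wording"],
}


def decompose(task: str, depth: int = 0, max_depth: int = 5) -> list[str]:
    """Expand a task depth-first into primitive steps (tasks with no entry
    in the table). max_depth guards against cyclic decompositions."""
    if depth > max_depth:
        raise RecursionError(f"decomposition of {task!r} exceeded max_depth")
    subtasks = DECOMPOSITIONS.get(task)
    if subtasks is None:
        return [task]  # primitive step: execute directly
    steps: list[str] = []
    for sub in subtasks:
        steps.extend(decompose(sub, depth + 1, max_depth))
    return steps


print(decompose("write report"))
# ['search sources', 'extract figures', 'draft text', 'check facts', 'fix wording']
```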

Perception: Multimodal Inputs for Enhanced Understanding

The perception module equips LLM-based agents to process multimodal inputs such as text, images, and audio. This expands their perceptual space, allowing them to interpret and interact with their environment more effectively. Techniques like visual-text alignment and auditory transfer models integrate non-textual data with the language model, enabling a richer understanding of the environment.

Action: Adaptability and Real-World Execution

The action module enables LLM-based agents to operate beyond textual outputs by incorporating embodied actions and tool usage. This allows agents to adapt dynamically to real-world environments. Hierarchical planning and reflection mechanisms ensure agents can modify their strategies in response to evolving circumstances, improving their effectiveness in complex tasks.
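A plan, execute, and reflect loop illustrates how reflection lets an agent revise its strategy after a failure. In the Python sketch below, the environment (a locked door), the `execute` function, and the rule inside `reflect` are all hypothetical; in an LLM-based agent, `reflect` is where the model would be prompted with the failed step and its feedback.

```python
def execute(plan: list[str], state: dict) -> tuple[bool, str]:
    """Run steps in order; stop at the first failing step and report why."""
    for step in plan:
        if step == "find_key":
            state["has_key"] = True
        elif step == "open_door" and not state["has_key"]:
            return False, "open_door failed: door is locked"
    return True, "all steps succeeded"


def reflect(plan: list[str], feedback: str) -> list[str]:
    # Rule-based stand-in for LLM reflection: diagnose the failure message
    # and insert a corrective step before the failing one.
    if "door is locked" in feedback:
        i = plan.index("open_door")
        return plan[:i] + ["find_key"] + plan[i:]
    return plan


def run_with_reflection(plan: list[str], max_attempts: int = 3) -> tuple[list[str], list[str]]:
    log = []
    for _ in range(max_attempts):
        state = {"has_key": False}  # reset the environment for each attempt
        ok, feedback = execute(plan, state)
        log.append(feedback)
        if ok:
            break
        plan = reflect(plan, feedback)
    return plan, log


final_plan, log = run_with_reflection(["walk_to_door", "open_door", "enter_room"])
print(final_plan)  # ['walk_to_door', 'find_key', 'open_door', 'enter_room']
print(log)         # ['open_door failed: door is locked', 'all steps succeeded']
```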

Multi-Agent and Human-Agent Interaction

LLM-based agents facilitate both collaboration and competition in multi-agent systems, leading to the emergence of social phenomena such as coordination, negotiation, and division of labor. In human-agent collaborations, the paper discusses two key paradigms: the instructor-executor model, where agents assist users by following explicit instructions, and the equal partnership model, which promotes shared decision-making. Emphasis is placed on ensuring interactions remain interpretable and trustworthy.

Ethical and Practical Challenges

The paper highlights several challenges, including the risks of misuse, biases in decision-making, privacy concerns, and potential overreliance on AI systems. It also addresses the complexities of scaling agent societies while maintaining fairness and inclusivity. The authors propose adopting decentralized governance frameworks, transparency measures, and safeguards to mitigate these risks while ensuring ethical deployment and use of AI agents.

Paper 10: A Survey of Progress on Cooperative Multi-Agent Reinforcement Learning in Open Environment

Link: Read this AI Agent Research Paper Here

Paper Summary

The paper reviews advancements in cooperative Multi-Agent Reinforcement Learning (MARL), particularly focusing on the shift from traditional closed settings to dynamic open environments. Cooperative MARL enables teams of agents to collaborate on complex tasks that are infeasible for a single agent, with applications in path planning, autonomous driving, and intelligent control. While classical MARL has achieved success in static environments, real-world tasks require adaptive strategies for evolving scenarios. The survey identifies key challenges, reviews existing approaches, and outlines future directions for advancing cooperative MARL in open settings.

Key Insights of the Paper

Background and Motivation

Reinforcement Learning (RL) trains agents to optimize sequential decisions based on feedback from the environment, and MARL extends this to multi-agent systems (MAS). Cooperative MARL focuses on shared goals and coordination, offering significant potential for solving large-scale, dynamic problems. Challenges in MARL include scalability, credit assignment, and partial observability. Despite progress in classical MARL, open environments, where factors such as the set of agents, states, and interactions evolve dynamically, remain underexplored.
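For readers who want the formal setting, cooperative MARL is commonly modeled as a decentralized partially observable Markov decision process (Dec-POMDP). The notation below is a standard formulation, not necessarily the survey's own: each agent i acts from its local action-observation history τ_t^i rather than the full state, γ is the discount factor, and all agents receive one shared reward.

```latex
\begin{aligned}
&\text{Dec-POMDP: } \big\langle \mathcal{N}, \mathcal{S}, \{\mathcal{A}^i\}_{i\in\mathcal{N}}, P, R, \{\mathcal{O}^i\}_{i\in\mathcal{N}}, \gamma \big\rangle, \quad \mathcal{N} = \{1, \dots, n\} \\
&a_t^i \sim \pi^i(\cdot \mid \tau_t^i), \qquad \mathbf{a}_t = (a_t^1, \dots, a_t^n) \\
&s_{t+1} \sim P(\cdot \mid s_t, \mathbf{a}_t), \qquad r_t = R(s_t, \mathbf{a}_t) \quad \text{(a single reward shared by all agents)} \\
&\max_{\boldsymbol{\pi}} \; J(\boldsymbol{\pi}) = \mathbb{E}_{\boldsymbol{\pi}}\Big[\textstyle\sum_{t=0}^{\infty} \gamma^{t} r_t\Big], \qquad \boldsymbol{\pi} = (\pi^1, \dots, \pi^n)
\end{aligned}
```

Because every agent receives the same r_t, the reward alone does not reveal which agent's action helped or hurt; this is the credit-assignment problem, and policies conditioning on τ_t^i instead of s_t is what partial observability means in practice.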

Cooperative MARL in Classical Environments

The paper discusses key frameworks and methods in cooperative MARL, such as value decomposition algorithms (VDN, QMIX, and QPLEX), which simplify credit assignment and improve coordination. Policy-gradient-based approaches, like MADDPG, facilitate efficient learning through centralized training and decentralized execution. Hybrid techniques like DOP integrate value decomposition with policy gradients for better scalability. Research directions include multi-agent communication, hierarchical task learning, and efficient exploration methods like MAVEN and EMC to address challenges in sparse-reward settings. Cooperative MARL has achieved strong results on benchmarks such as StarCraft II and has been applied to autonomous robotics.
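The idea behind value decomposition can be checked numerically. VDN represents the joint action value as a sum of per-agent utilities, Q_tot = Σ_i Q_i(τ^i, a^i). Because the sum is monotonic in every Q_i, each agent greedily maximizing its own utility also maximizes Q_tot, which is what makes decentralized execution consistent with centralized training; QMIX generalizes the sum to a learned mixing network constrained to be monotonic. The sketch below uses hypothetical, hand-picked utility tables for two agents with three actions each, in place of learned networks, and verifies by brute force that the decentralized and joint greedy actions agree.

```python
import itertools

# Hypothetical per-agent utilities Q_i(a_i) in a single state:
# 2 agents, 3 actions each. In VDN these would come from learned networks.
Q_AGENTS = [
    [1.0, 3.0, 0.5],   # agent 0
    [2.0, -1.0, 4.0],  # agent 1
]


def q_total_vdn(joint_action):
    """VDN: joint value = sum of each agent's utility for its own action."""
    return sum(Q_AGENTS[i][a] for i, a in enumerate(joint_action))


def decentralized_greedy():
    # Each agent maximizes its own utility using only its own table.
    return tuple(max(range(len(q)), key=q.__getitem__) for q in Q_AGENTS)


def centralized_greedy():
    # Brute force over all joint actions (exponential in the number of agents).
    n_actions = len(Q_AGENTS[0])
    joint_actions = itertools.product(range(n_actions), repeat=len(Q_AGENTS))
    return max(joint_actions, key=q_total_vdn)


print(decentralized_greedy(), centralized_greedy())  # (1, 2) (1, 2)
print(q_total_vdn((1, 2)))                           # 7.0
```

The flip side is expressiveness: an additive or monotonic factorization cannot represent joint values in which one agent's best action depends on what the others do, which is the limitation that more expressive factorizations such as QPLEX aim to address.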

Cooperative MARL in Open Environments

Open environments introduce dynamic challenges where agents, goals, and environmental conditions evolve. These settings demand robustness, adaptability, and real-time decision-making. The survey highlights difficulties such as decentralized deployments, zero/few-shot learning, and balancing transparency with privacy concerns. Emerging research explores trustworthy MARL, advanced communication protocols for selective information sharing, and efficient policy transfer mechanisms to adapt to unseen scenarios.

Applications and Benchmarks

Cooperative MARL is applied in domains such as autonomous driving, intelligent control systems, and multi-robot coordination. Evaluation frameworks for classical environments include StarCraft II and Google Research Football (GRF), while open-environment benchmarks emphasize adaptability to dynamic and uncertain conditions.

Conclusion

The field of AI agents is advancing at an unprecedented pace, driven by groundbreaking research that continues to push the boundaries of innovation. The Top 10 Research Papers on AI Agents highlighted in this article underscore the diverse applications and transformative potential of these technologies, from enhancing decision-making processes to powering autonomous systems and revolutionizing human-machine collaboration.

By exploring these seminal works, researchers, developers, and enthusiasts can gain valuable insights into the underlying principles and emerging trends that shape the AI agent landscape. As we look ahead, it is clear that AI agents will play a pivotal role in tackling complex global challenges and unlocking new opportunities across industries.

The future of AI agents is not just about smarter algorithms but about building systems that align with ethical considerations and societal needs. Continued exploration and collaboration will be key to ensuring that these intelligent agents contribute positively to humanity’s progress. Whether you’re a seasoned AI professional or a curious learner, diving into these papers is a step toward understanding and shaping the future of AI agents.
