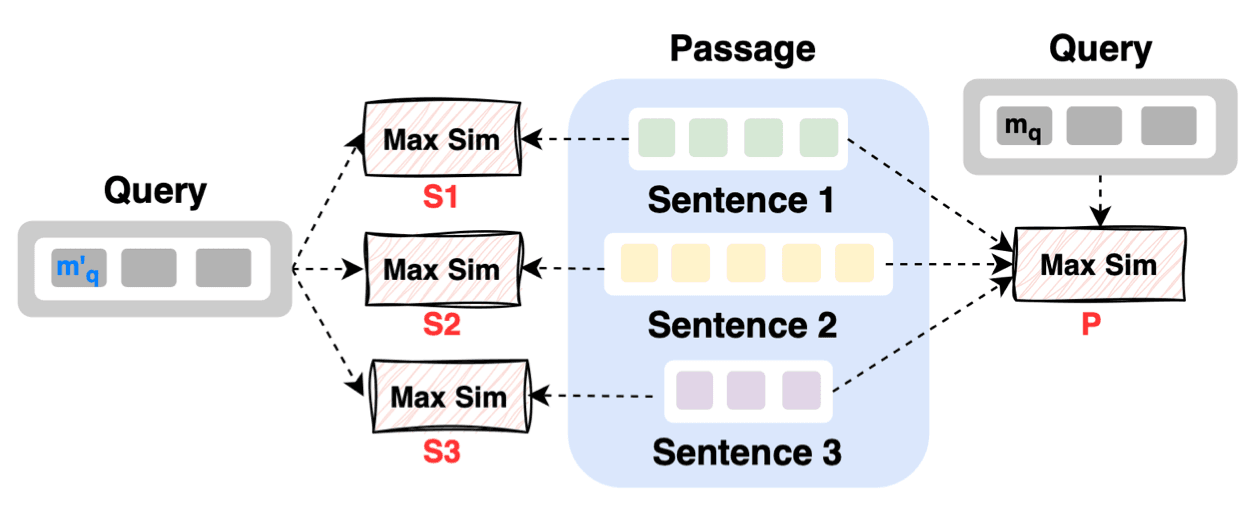

Ranking is a fundamental and popular problem in search. However, existing ranking algorithms typically restrict the ranking granularity to entire passages or require a specific dense index for each desired level of granularity. This lack of flexibility negatively impacts many applications that can benefit from more granular ranking, such as sentence-level ranking for open-domain question answering or proposition-level ranking for attribution. In this work, we introduce the idea of any-granularity ranking, which leverages multi-vector approaches to rank at different levels of granularity while keeping the encoding at a single (coarser) level of granularity. We propose a multi-granular contrastive loss to train multi-vector approaches and validate its usefulness with both sentences and propositions as ranking units. Finally, we demonstrate the application of proposition-level ranking to post-hoc citation addition in retrieval-augmented generation, outperforming prompt-based citation generation.
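To make the core idea concrete, the following is a minimal sketch of how per-token vectors from a single passage-level encoding could be reused to score finer units such as sentences. It assumes a late-interaction (MaxSim-style) scoring rule in which each query vector is matched against only the token vectors that fall inside a unit's span. The function names (`maxsim_score`, `order_units`), the span representation, and the toy 2-dimensional vectors are hypothetical illustrations, not the authors' implementation, and the multi-granular contrastive loss is not shown.

```python
from typing import List, Sequence, Tuple

Vector = Sequence[float]
Span = Tuple[int, int]  # [start, end) token indices within the passage


def dot(a: Vector, b: Vector) -> float:
    """Plain dot product between two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def maxsim_score(query_vecs: List[Vector],
                 passage_vecs: List[Vector],
                 span: Span) -> float:
    """Assumed scoring rule: for each query vector, take the best-matching
    token vector inside the span, then sum those maxima over the query."""
    start, end = span
    unit_vecs = passage_vecs[start:end]
    return sum(max(dot(q, t) for t in unit_vecs) for q in query_vecs)


def order_units(query_vecs: List[Vector],
                passage_vecs: List[Vector],
                spans: List[Span]) -> List[int]:
    """Return unit indices sorted from highest to lowest score."""
    scores = [maxsim_score(query_vecs, passage_vecs, s) for s in spans]
    return sorted(range(len(spans)), key=lambda i: scores[i], reverse=True)


# Toy data: one passage encoded once into four token vectors.
passage_vecs = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
sentence_spans = [(0, 2), (2, 4)]   # two sentences inside the passage
query_vecs = [[0.0, 1.0]]           # a single query token vector

best_first = order_units(query_vecs, passage_vecs, sentence_spans)
```

The passage is encoded only once, and changing the granularity amounts to changing the spans: passing `(0, 4)` scores the whole passage, while finer spans could score propositions in the same way.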
