The intersection of artificial intelligence and human understanding has always been a fascinating domain, especially when leveraging large language models (LLMs) to function as agents that interact, reason, and make decisions like humans. The drive to improve these digital entities has led to notable innovations, with each step aimed at making machines more useful and intuitive in real-world applications, from automated assistance to complex analytical tasks in various fields.

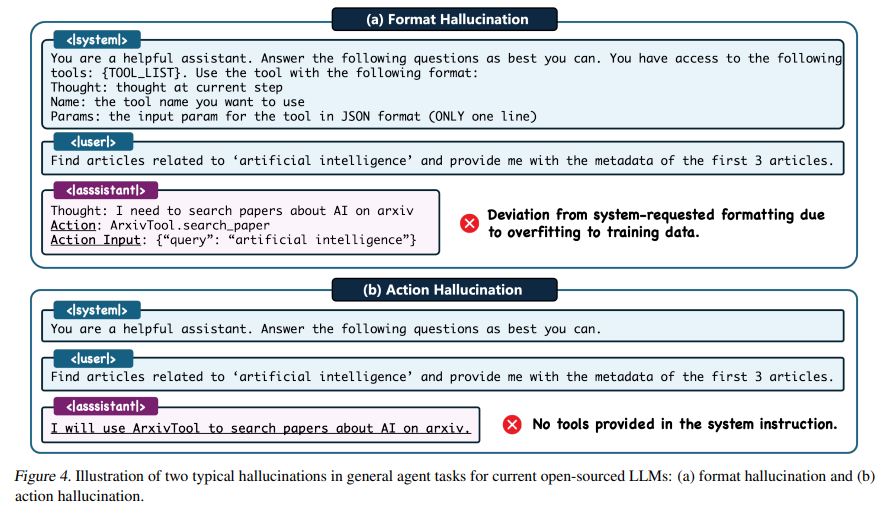

Central to this effort is the challenge of equipping LLMs with robust agent capabilities without diluting their overall intelligence and versatility. The crux is refining how these models are trained, going beyond traditional methods that often intertwine the format of the training data with the agent's reasoning process. Such entanglement can distort the model's learning curve, making it adept at certain tasks while failing at others, or worse, leading it to generate unreliable results, what researchers call hallucinations.

Agent tuning has revolved around rapid engineering or framework programming for closed source LLM like GPT-4. Despite their flexibility and remarkable results, these methods face substantial barriers, including prohibitive costs and data security concerns. Open source LLMs emerge as promising alternatives, but their performance as agents lags behind API-based models, highlighting a gap in effectiveness and implementation readiness.

Researchers from the University of Science and technology of China and the Shanghai ai Laboratory present Agent-FLAN, a unique and innovative approach designed to overcome the above challenges. Agent-FLAN revolutionizes the training process by meticulously redesigning the training corpus. This novel method aligns the training process with the model's original data, allowing for a more natural and efficient learning path. The key to Agent-FLAN's success lies in its ability to dissect and reassemble training material, focusing on improving essential agent capabilities such as reasoning, following instructions and, most importantly, reducing errors. hallucinations.

Agent-FLAN ensures that models learn optimally and is designed to improve the skills of your agents by addressing the interweaving of data formats and reasoning within the training process. This tuning method outperforms previous models, showing a substantial 3.5% improvement across various agent evaluation benchmarks. Furthermore, Agent-FLAN effectively mitigates the hallucination problem, improving the reliability of LLMs in practical applications.

The method allows LLMs, specifically the Llama2-7B model, to outperform previous best works on several evaluation data sets. This isn't just a jump in agent tuning; is a step towards realizing the full potential of open source LLMs across a broad spectrum of applications. Furthermore, Agent-FLAN's approach to mitigating hallucinations by comprehensively constructing negative samples is commendable as it significantly reduces such errors and paves the way for more reliable and accurate agent responses.

In conclusion, research on Agent-FLAN represents an important milestone in the evolution of large language models as agents. This method sets a new standard for integrating effective agent capabilities into LLMs by unraveling the complexities of agent fit. The meticulous design and execution of the training corpus, along with a strategic approach to addressing learning discrepancies and hallucinations, allows LLMs to operate with unprecedented precision and efficiency. Agent-FLAN not only bridges the gap between open source LLMs and API-based models, but also enriches the ai landscape with models that are more versatile, reliable, and ready for real-world challenges.

Review the Paper and GitHub. All credit for this research goes to the researchers of this project. Also, don't forget to follow us on Twitter. Join our Telegram channel, Discord channeland LinkedIn Grabove.

If you like our work, you will love our Newsletter..

Don't forget to join our 38k+ ML SubReddit

![]()

Sana Hassan, a consulting intern at Marktechpost and a dual degree student at IIT Madras, is passionate about applying technology and artificial intelligence to address real-world challenges. With a strong interest in solving practical problems, she brings a new perspective to the intersection of ai and real-life solutions.

<!– ai CONTENT END 2 –>