In software engineering, there is a direct correlation between team performance and building robust, stable applications. The data community aims to bring the rigorous engineering principles commonly used in software development into its own practices, including systematic approaches to design, development, testing, and maintenance. This requires carefully combining applications and metrics to provide complete visibility, accuracy, and control. It means evaluating every aspect of a team's performance with a focus on continuous improvement, and it applies just as much to mainframe environments as it does to distributed and cloud environments, perhaps more so.

This is achieved through practices such as infrastructure as code (IaC) for deployments, automated testing, application observability, and complete ownership of the application lifecycle. Through years of research, the DevOps Research and Assessment (DORA) team has identified four key metrics that indicate the performance of a software development team:

- Deployment frequency – How often an organization successfully releases to production.

- Lead time for changes – The amount of time it takes for a commit to get into production.

- Change failure rate – The percentage of deployments that cause a failure in production.

- Time to restore service – How long it takes an organization to recover from a failure in production.
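To make the definitions concrete, here is a minimal Python sketch that computes two of the four metrics from a list of production deployment records. The `Deployment` record, its field names, and the seven-day reporting window are hypothetical; the post does not describe how zAdviser stores or computes these values.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class Deployment:
    """Hypothetical record of one production deployment."""
    deployed_at: datetime
    caused_failure: bool  # True if this deployment led to a production failure


def deployment_frequency(deployments: list[Deployment], period_days: int) -> float:
    """Average number of production deployments per day over the period."""
    if period_days <= 0:
        raise ValueError("period_days must be positive")
    return len(deployments) / period_days


def change_failure_rate(deployments: list[Deployment]) -> float:
    """Percentage of deployments that caused a failure in production."""
    if not deployments:
        return 0.0
    failed = sum(1 for d in deployments if d.caused_failure)
    return 100.0 * failed / len(deployments)


# Example: four deployments in one week, one of which caused a failure.
week = [
    Deployment(datetime(2024, 1, 1, 9), caused_failure=False),
    Deployment(datetime(2024, 1, 3, 14), caused_failure=True),
    Deployment(datetime(2024, 1, 4, 11), caused_failure=False),
    Deployment(datetime(2024, 1, 6, 16), caused_failure=False),
]
print(f"{deployment_frequency(week, 7):.2f} deployments/day")  # prints 0.57 deployments/day
print(f"{change_failure_rate(week):.1f}% change failure rate")  # prints 25.0% change failure rate
```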

These metrics provide a quantitative way to measure the effectiveness and efficiency of DevOps practices. Although much of DevOps analysis focuses on distributed and cloud technologies, the mainframe still holds a unique and powerful position, and it can use the DORA 4 metrics to further its reputation as an engine of commerce.

This blog post discusses how BMC Software added AWS generative AI capabilities to its product, BMC AMI zAdviser Enterprise. zAdviser uses Amazon Bedrock to provide summaries, analysis, and improvement recommendations based on DORA metrics data.

Challenges of Tracking DORA 4 Metrics

Tracking DORA 4 metrics means compiling the numbers and placing them on a dashboard. However, measuring productivity is essentially measuring the performance of individuals, which can make them feel scrutinized. This might require a shift in organizational culture toward collective achievements, along with an emphasis on how automation tools improve the developer experience.

It is also vital to avoid focusing on irrelevant metrics or excessively tracking data. The essence of DORA metrics is to summarize information into a core set of key performance indicators (KPIs) for evaluation. Mean time to restore (MTTR) is typically the easiest KPI to track: most organizations use tools like BMC Helix ITSM or others that log events and track issues.
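Once incident open and restore timestamps are available, MTTR is a simple average. The sketch below assumes those timestamps have already been exported from an ITSM tool as `(opened_at, restored_at)` pairs; that export format is an assumption, not something the post specifies.

```python
from datetime import datetime, timedelta
from statistics import mean


def mean_time_to_restore(incidents: list[tuple[datetime, datetime]]) -> timedelta:
    """Average time from when a production incident opened until service was restored.

    Each incident is an (opened_at, restored_at) pair, for example exported from an ITSM tool.
    """
    if not incidents:
        raise ValueError("at least one incident is required")
    durations = []
    for opened_at, restored_at in incidents:
        if restored_at < opened_at:
            raise ValueError("restored_at must not precede opened_at")
        durations.append((restored_at - opened_at).total_seconds())
    return timedelta(seconds=mean(durations))


incidents = [
    (datetime(2024, 3, 1, 8, 0), datetime(2024, 3, 1, 9, 0)),   # 1 hour outage
    (datetime(2024, 3, 5, 22, 0), datetime(2024, 3, 6, 1, 0)),  # 3 hour outage
]
print(mean_time_to_restore(incidents))  # prints 2:00:00
```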

Capturing lead time for changes and change failure rate can be more challenging, especially on mainframes. Both KPIs aggregate data from code commits, log files, and automated test results. Using a Git-based SCM brings these insights together seamlessly. Mainframe teams using BMC's Git-based DevOps platform, AMI DevX, can collect this data as easily as distributed teams can.
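Lead time for changes joins two data sources: when a change was committed and when it reached production. The sketch below assumes each commit has already been matched to its production deployment (for example, by commit hash); that matching step and the `(committed_at, deployed_at)` input shape are hypothetical. It reports the median, which is less sensitive than the mean to a few unusually long-running changes.

```python
from datetime import datetime, timedelta
from statistics import median


def lead_time_for_changes(changes: list[tuple[datetime, datetime]]) -> timedelta:
    """Median time from commit to production deployment.

    Each change is a (committed_at, deployed_at) pair.
    """
    if not changes:
        raise ValueError("at least one change is required")
    durations = []
    for committed_at, deployed_at in changes:
        if deployed_at < committed_at:
            raise ValueError("deployed_at must not precede committed_at")
        durations.append((deployed_at - committed_at).total_seconds())
    return timedelta(seconds=median(durations))


changes = [
    (datetime(2024, 4, 1, 9), datetime(2024, 4, 1, 11)),  # 2 hours
    (datetime(2024, 4, 2, 9), datetime(2024, 4, 2, 13)),  # 4 hours
    (datetime(2024, 4, 3, 9), datetime(2024, 4, 3, 19)),  # 10 hours
]
print(lead_time_for_changes(changes))  # prints 4:00:00
```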

Solution Overview

Amazon Bedrock is a fully managed service that offers a selection of high-performance foundation models (FMs) from leading AI companies such as AI21 Labs, Anthropic, Cohere, Meta, Stability AI, and Amazon through a single API, along with a broad set of capabilities you need to build generative AI applications with security, privacy, and responsible AI.

BMC AMI zAdviser Enterprise provides a wide range of DevOps KPIs to optimize mainframe development and enable teams to proactively identify and resolve issues. Using machine learning, AMI zAdviser monitors mainframe build, test, and deploy functions across DevOps toolchains and then provides AI-based recommendations for continuous improvement. In addition to capturing and reporting on development KPIs, zAdviser captures data on how BMC DevX products are adopted and used. This includes the number of programs that were debugged, the outcome of testing efforts using DevX testing tools, and many other data points. These additional data points can provide deeper insight into development KPIs, including DORA metrics, and can be used in future generative AI efforts with Amazon Bedrock.

The following architecture diagram shows the final implementation of zAdviser Enterprise using generative AI to provide summaries, analysis, and improvement recommendations based on KPI data from DORA metrics.

The solution workflow includes the following steps:

- Create the aggregation query to retrieve the metrics from Elasticsearch.

- Extract stored mainframe metrics data from zAdviser, which is hosted on Amazon Elastic Compute Cloud (Amazon EC2) and deployed on AWS.

- Combine the data retrieved from Elasticsearch and form the prompt for the generative AI call to the Amazon Bedrock API.

- Pass the prompt to Amazon Bedrock (using Anthropic's Claude 2 model on Amazon Bedrock).

- Store the Amazon Bedrock response (an HTML-formatted document) in Amazon Simple Storage Service (Amazon S3).

- Trigger the KPI email process through AWS Lambda:

  - The HTML-formatted summary is pulled from Amazon S3 and added to the body of the email.

  - The PDF of the client's KPIs is extracted from zAdviser and attached to the email.

  - The email is sent to subscribers.
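The prompt, model invocation, and storage steps above can be sketched in Python as follows. This is a minimal illustration, not BMC's implementation: it assumes the KPIs arrive as a dictionary of numeric values and uses the Claude 2 text-completions request format on Amazon Bedrock (model ID `anthropic.claude-v2`, a prompt framed by `\n\nHuman:` and `\n\nAssistant:`, and the `max_tokens_to_sample` parameter). The boto3 `bedrock-runtime` and `s3` clients are passed in as parameters so the code can be exercised without AWS credentials. The prompt wording, KPI names, bucket, and key are hypothetical.

```python
import json

# Amazon Bedrock model ID for Anthropic Claude 2 (text-completions request format).
CLAUDE_2_MODEL_ID = "anthropic.claude-v2"


def build_prompt(kpis: dict[str, float]) -> str:
    """Turn numeric DORA KPIs into a Claude 2 prompt (the wording is hypothetical)."""
    kpi_lines = "\n".join(f"- {name}: {value}" for name, value in sorted(kpis.items()))
    return (
        "\n\nHuman: You are a DevOps analyst. Using only the DORA metric KPIs below, "
        "write an HTML document that summarizes them, analyzes trends, and recommends "
        "improvements.\n"
        f"{kpi_lines}"
        "\n\nAssistant:"
    )


def summarize_kpis(bedrock_runtime, kpis: dict[str, float], max_tokens: int = 2048) -> str:
    """Invoke Claude 2 on Amazon Bedrock and return the generated completion text."""
    response = bedrock_runtime.invoke_model(
        modelId=CLAUDE_2_MODEL_ID,
        contentType="application/json",
        accept="application/json",
        body=json.dumps({
            "prompt": build_prompt(kpis),
            "max_tokens_to_sample": max_tokens,
            "temperature": 0.2,
        }),
    )
    # The response body is a stream; Claude 2 returns its text under "completion".
    return json.loads(response["body"].read())["completion"]


def store_summary(s3, bucket: str, key: str, html: str) -> None:
    """Save the HTML-formatted summary to Amazon S3 for the email step to pick up."""
    s3.put_object(Bucket=bucket, Key=key, Body=html.encode("utf-8"), ContentType="text/html")
```

With real clients, the calls might look like `html = summarize_kpis(boto3.client("bedrock-runtime"), {"change_failure_rate_pct": 25.0})` followed by `store_summary(boto3.client("s3"), "example-kpi-bucket", "summaries/latest.html", html)`. Passing the clients in, rather than creating them inside the functions, keeps the prompt and request formatting testable offline.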

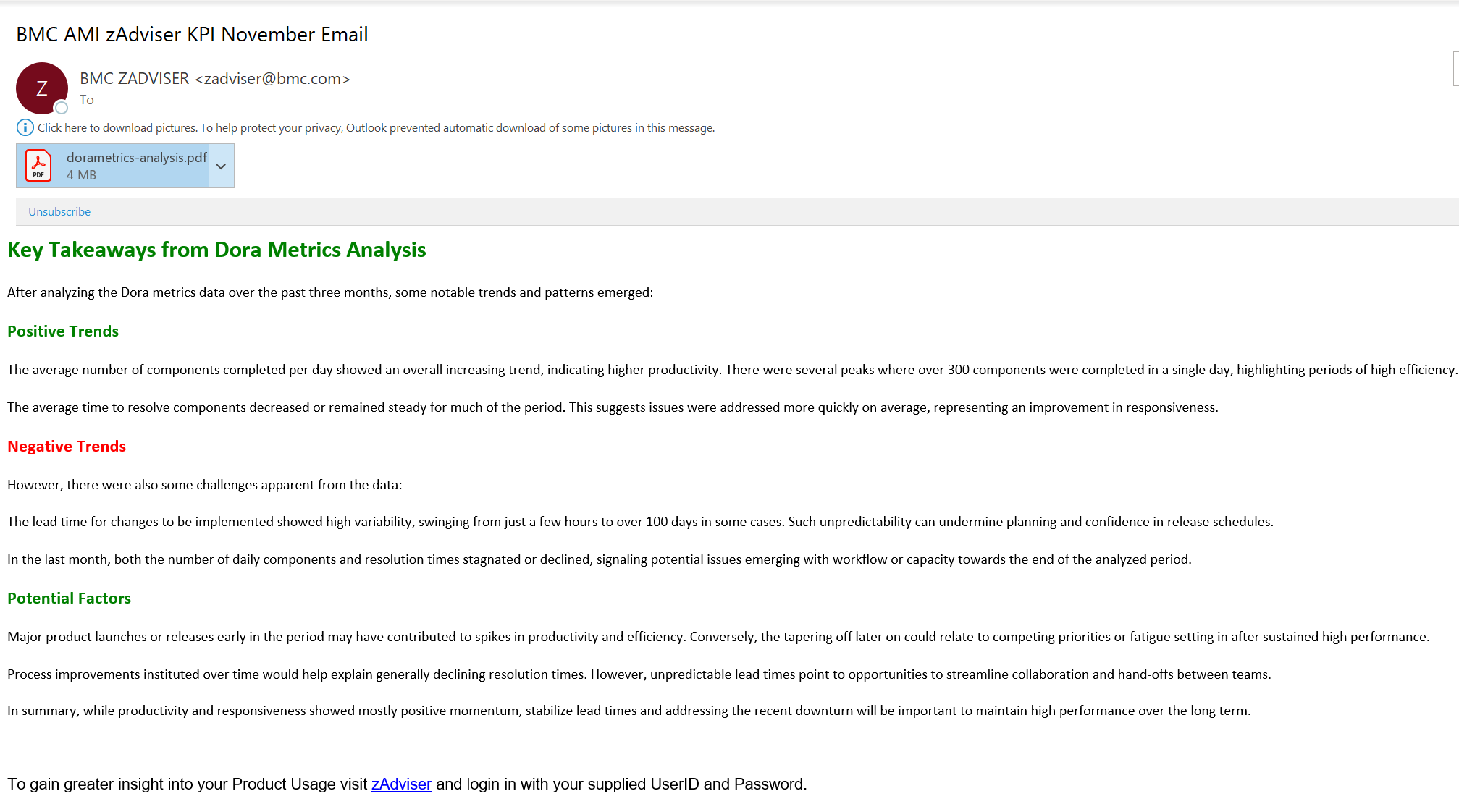

The following screenshot shows the LLM summary of DORA metrics generated with Amazon Bedrock and sent as an email to the customer, with a PDF attachment containing the zAdviser DORA Metrics KPI Dashboard report.
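The email-assembly part of the Lambda step can be sketched with Python's standard `email` package: the HTML summary becomes the message body and the KPI report PDF becomes an attachment. The sender, recipients, subject, and attachment file name are placeholders, and the post does not say which service the Lambda function uses to deliver the message.

```python
from email.message import EmailMessage


def build_kpi_email(
    sender: str,
    recipients: list[str],
    subject: str,
    html_summary: str,
    pdf_report: bytes,
    pdf_filename: str = "dora-kpi-report.pdf",
) -> EmailMessage:
    """Build a multipart email with an HTML body and the KPI report PDF attached."""
    msg = EmailMessage()
    msg["From"] = sender
    msg["To"] = ", ".join(recipients)
    msg["Subject"] = subject
    # Plain-text fallback for mail clients that do not render HTML.
    msg.set_content("Your DORA metrics summary is included as HTML in this message.")
    msg.add_alternative(html_summary, subtype="html")
    # Adding an attachment converts the message to multipart/mixed.
    msg.add_attachment(pdf_report, maintype="application", subtype="pdf", filename=pdf_filename)
    return msg
```

If Amazon SES were used for delivery (an assumption), the finished message could be passed as raw bytes, for example `ses.send_raw_email(RawMessage={"Data": msg.as_bytes()})` with a boto3 `ses` client.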

Key takeaways

With this solution, you don't need to worry about your data being exposed on the internet when it is sent to an AI client. The API call to Amazon Bedrock does not contain any personally identifiable information (PII) or any data that could identify a customer. The only data transmitted consists of numerical values in the form of DORA metric KPIs and instructions for the generative AI's operations. Importantly, the generative AI client does not retain, learn from, or cache this data.
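One way to enforce this property in code is an allow-list check that runs before the prompt is built: only known KPI names with finite numeric values pass through, so free text such as customer or program names cannot slip into the request. This is a hypothetical safeguard with illustrative KPI names, not a description of zAdviser's code.

```python
import math
from numbers import Real

# Hypothetical allow-list of KPI names that may be sent to the model.
ALLOWED_KPIS = frozenset({
    "deployments_per_day",
    "lead_time_hours",
    "change_failure_rate_pct",
    "mttr_hours",
})


def sanitize_kpis(kpis: dict[str, object]) -> dict[str, float]:
    """Return only allow-listed, finite numeric KPIs; raise on anything else."""
    clean: dict[str, float] = {}
    for name, value in kpis.items():
        if name not in ALLOWED_KPIS:
            raise ValueError(f"KPI name not allow-listed: {name!r}")
        # bool is a subclass of int, so reject it explicitly.
        if isinstance(value, bool) or not isinstance(value, Real):
            raise TypeError(f"KPI {name!r} must be numeric, got {type(value).__name__}")
        if not math.isfinite(value):
            raise ValueError(f"KPI {name!r} must be finite")
        clean[name] = float(value)
    return clean
```

Raising on unexpected input, rather than silently dropping it, makes a data-handling mistake visible before anything leaves the account.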

The zAdviser engineering team was able to implement this feature in a short period of time. This rapid progress was made possible by zAdviser's significant investment in AWS services and, more importantly, by how easy Amazon Bedrock is to use through API calls. This underscores the transformative power of the generative AI technology available through the Amazon Bedrock API. Equipped with the zAdviser Enterprise industry-specific knowledge repository and customized with continuously collected, organization-specific DevOps metrics, this API demonstrates the potential of AI in this field.

Generative AI has the potential to lower the barrier to entry for building AI-driven organizations. Large language models (LLMs), in particular, can bring tremendous value to companies looking to explore and use unstructured data. Beyond chatbots, LLMs can be used for a variety of tasks, such as classification, editing, and summarization.

Conclusion

This post explored the transformative impact of generative AI technology in the form of Amazon Bedrock APIs equipped with BMC zAdviser's industry-specific knowledge and tailored with organization-specific DevOps metrics collected on an ongoing basis.

Review the BMC website to learn more and set up a demo.

About the authors

Unmarked Sunil is a senior partner solutions architect at Amazon Web Services. He works with various independent software vendors (ISVs) and strategic clients across industries to accelerate their digital transformation journey and cloud adoption.

Vijay Balakrishna is a senior manager of partner development at Amazon Web Services. She helps independent software vendors (ISVs) across industries accelerate their digital transformation journey.

Spencer Hallman is the lead product manager for BMC AMI zAdviser Enterprise. Previously, he was product manager for BMC AMI Strobe and BMC AMI Ops Automation for Batch Thruput. Before moving into product management, Spencer was the subject matter expert for mainframe performance. His diverse experience over the years also includes programming across multiple platforms and languages, as well as work in the field of operations research. He holds a Master of Business Administration with a concentration in Operations Research from Temple University and a Bachelor of Science in Computer Science from the University of Vermont. He lives in Devon, Pennsylvania, and when he's not attending virtual meetings, he enjoys walking his dogs, riding his bike, and spending time with his family.
