Generative artificial intelligence (ai), particularly Retrieval Augmented Generation (RAG) solutions, are rapidly demonstrating their vast potential to revolutionize enterprise operations. RAG models combine the strengths of information retrieval systems with advanced natural language generation, enabling more contextually accurate and informative outputs. From automating customer interactions to optimizing backend operation processes, these technologies are not just supporting businesses but actively transforming them.

Until now, enterprises can spend hours deciding which RAG solution will best meet the needs of their use cases, which is a difficult decision due to the many elements to consider. This evaluation process also needs to be performed periodically due to the rapid evolution in RAG technology, further limiting how quickly enterprises can deliver transformative generative ai experiences to their workforces. Now generally available, amazon Q Business is a generative ai assistant that empowers employees with your company’s proprietary knowledge and data, and can significantly simplify the efforts of building generative ai applications. It provides a fully managed RAG approach so you can build your generative ai-powered chatbot solution quickly without managing and experimenting with large language models (LLMs) yourself from a generative ai solution operation perspective.

Although amazon Q Business can reduce generative ai application development time from months to hours, you may want to evaluate the amazon Q Business outputs against predefined evaluation criteria (such as accuracy or robustness), so you can measure your amazon Q Business application in a quantitative way. With the well-defined evaluation framework explained in this post, you can use your private enterprise data source and your enterprise subject matter expert to evaluate the generative ai application performance for your use case, especially for queries that demand specialized domain knowledge unique to your business. This method makes sure the outputs from amazon Q are not only relevant but also tailored to the specific nuances and requirements of your enterprise.

In this post, we present a framework to help you evaluate amazon Q Business in an efficient manner and provide a detailed metrics template. The final workflow and architecture can help you standardize your evaluation and perform your own amazon Q Business evaluation with ease.

Different evaluation methods for generative ai solutions

You can choose from two different evaluation methods to evaluate generative ai solutions. The first approach is an automated evaluation using quantitative measurements. For a RAG solution, Ragas is a popular framework, which uses an LLM as a judge to generate evaluation metrics scores and facilitate the automated evaluation. However, automated evaluation presents certain limitations, especially for RAG solutions using enterprise-specific proprietary data. These metrics often fail to capture the full complexity of human-like language generation, lacking the ability to assess semantic understanding and the contextual nuances unique to a specific domain. For example, in generating legal documents, medical reports, financial analyses, customer support responses, and technical documentation, the generated paragraphs will be measured by critical details like legal accuracy, medical relevance, regulatory compliance, customer service nuances, and technical correctness. Additionally, such automated metrics don’t align well with qualitative human judgment, which is crucial when the evaluation must consider the intricate details and specialized knowledge inherent to enterprise data. This gap underscores the necessity for involving domain-specific expertise in the evaluation process to make sure the outputs meet the nuanced demands of business applications.

The second approach is a human in the loop (HITL) evaluation. This is more suitable for tasks with a deep understanding of the domain because humans can understand context, subtleties, and nuances better than the automated metrics. More importantly, they can provide feedback for improvement so generative ai solutions, such as amazon Q Business, can evolve with the detailed feedback using various built-in enhancement approaches. Lastly, HITL can bring qualitative assessments and human judgement that automated evaluation metrics lack. Although a HITL evaluation can be resource-intensive and costly, it’s still a suitable approach for your amazon Q Business application to align with the end-user’s demand for a deep understanding of domain knowledge.

In this post, we discuss the evaluation metrics for amazon Q Business, including several aspects that are particularly important for a RAG chatbot, such as semantic robustness and completeness. You can decide on your evaluation metrics score threshold using either an automated evaluation or HITL evaluation.

Identify use cases that are suitable for amazon Q Business

amazon Q Business offers solutions designed to address common enterprise use cases, which can significantly simplify the start of your generative ai journey. Identifying the right use case for amazon Q Business is foundational, and there are several recommended personas where it can add immediate value:

- Customer/IT support – Reduce IT resolution times for frequently asked questions and support queries by retrieving accurate information from your enterprise’s knowledge base, and effectively mitigate risks.

- Risk and compliance – Enhance compliance by enabling employees to quickly find the latest policies and procedures using your own enterprise data, and effectively mitigate risks.

- Developers – Streamline the evaluation of technical debt and code quality across extensive knowledge bases spanning multiple repositories and content collaboration and management workspace to address developers’ questions

- Human resources – Expedite the onboarding process for new hires by efficiently finding the most pertinent documentation, thereby reducing the burden associated with navigating and sifting through voluminous content

- Operations – Accelerate equipment maintenance and troubleshooting for plant operators and technicians with quick access to detailed information, such as user manuals, QuickStart guides, technical manuals, maintenance logs, safety data sheets, and more

Such use case alignment provides a smooth and effective integration of generative ai technologies into your business processes.

Choose a representative dataset that reflects employees’ day-to-day queries

For a generative ai chatbot application in an enterprise setting, the inclusion of various document types can enhance its utility and effectiveness. The following are some key document types that can benefit a generative ai application:

- Standard operating procedures – These documents provide step-by-step instructions that can help amazon Q guide users through specific processes or tasks

- Product manuals and guides – Product-related documents enable amazon Q to provide detailed information and troubleshooting assistance to users

- FAQs and help articles – FAQs and structured help documents allow amazon Q to quickly provide answers to common questions, improving user experience and efficiency

- Policy documents – Company policy documents help amazon Q inform employees about company regulations, compliance requirements, and ethics guidelines

- Training materials – Training documents can be used by amazon Q to assist in employee onboarding, continuous education, and skill development

- Contracts and agreements – For legal and administrative functions, amazon Q can reference specific clauses and terms from contracts to support negotiations or compliance checks

- Meeting minutes and reports – Summarized content of meetings and reports can help amazon Q provide quick updates and insights about business operations or project statuses

- Emails and communications – Analyzing archived emails and other communications can enable amazon Q to extract and summarize information, provide context for ongoing discussions, or retrieve historical data

- Financial documents – Financial statements, budgets, and audit reports can be sources for amazon Q to answer queries related to financial performance and planning

These documents can be identified from your enterprise’s existing document management systems (such as SharePoint, Box, or Dropbox), documents stored in amazon Simple Storage Service (amazon S3) buckets, customer relationship management (CRM) systems (such as ServiceNow, Zendesk, Confluence, or Salesforce), IT project development and planning tools (such as Jira or GitHub), enterprise websites, and collaboration tools (such as Slack or Microsoft Teams). Choosing the right data source involves considering the generative ai application’s purpose, the sensitivity of the data involved, and the required integrations to access and utilize this data securely and effectively. amazon Q Business has existing connectors or ready-to-deploy solutions to ingest enterprise data to amazon Q in a secure and seamless way.

Generate a list of queries

Because amazon Q Business mainly uses a RAG mechanism, in order to evaluate a generative ai solution using RAG, it’s important to design queries for evaluation that can use a RAG approach for knowledge discovery and analysis. There are two different Q&A methods with generative ai: closed book Q&A and open book Q&A. Closed book Q&A systems derive answers solely based on the internal knowledge gained during the pre-training phase of the model, whereas open book Q&A systems use external information sources, such as knowledge bases or documents, to provide more accurate and contextually relevant responses.

Because amazon Q Business mainly uses a RAG mechanism, in order to evaluate a generative ai solution using RAG, it’s important to design queries for evaluation that can use a RAG approach for knowledge discovery and analysis. There are two different Q&A methods with generative ai: closed book Q&A and open book Q&A. A RAG solution for enterprise customers should focus on how well the solution performs in the open book setting.

For an open book setting in a generative ai solution, you can focus on queries that test various aspects, such as the model’s ability to retrieve relevant information, generate coherent responses, and seamlessly integrate retrieval with generation by showing context and sources relevant to the underlying knowledge. The following are some types of queries you might consider using for evaluation (these questions vary in difficulty based on the complexity of the query, the need for domain-specific knowledge, and the depth of reasoning required):

- Simple:

- Factual accuracy – Queries that require the model to retrieve and synthesize factual information accurately. For example, “What are the current product lines of company XXX?”

- Hard:

- Domain-specific knowledge – Questions focused on specific domains, especially in legal documents, medicine, or technology, to evaluate the model’s effectiveness in specialized areas. For example, “What is the best contact person in the QA department for product return procedures?”

- Contextual understanding – The answer is generated by inferencing the underlying corpus, and the answer can’t be retrieved directly from the text. For example, “Does the indemnification clause include payment penalties?”

- Challenging (even harder questions):

- Complex question answering – Questions that involve layers of inference, testing the model’s ability to handle complexity and context. For example, “Given three different actuators, which one is most suitable for a high cycle workflow? Please provide reasoning.”

- Ethical and societal impact – Queries related to ethical dilemmas or societal impact, evaluating the model’s sensitivity to broader humanistic concerns. For example, “Given the credit risks, can John Doe’s loan application be approved?”

Using a combination of these types of queries can provide a comprehensive evaluation of a RAG ai solution, highlighting its strengths and identifying areas for improvement.

Select metrics for evaluation

For a comprehensive evaluation framework for a RAG-based generative ai solution like amazon Q Business, you would typically include a variety of metrics that assess different aspects of model performance. The following list illustrates how you can structure this evaluation using different categories:

- Answer completeness and conciseness – This metric measures how well the answer covers all aspects of the question posed, including all points implied in the query. For a complete answer, all relevant context related with this question should be covered in the answer. It can be scored as follows:

- The answer misses a lot of or all the useful information or contains lots of redundant information (score: 1).

- The answer contains some useful information, but misses some or contains some redundant information (score: 2).

- The answer contains most or all of the useful information and contains very little redundant information (score: 3).

- Truthfulness (opposite to hallucination) – This metric evaluates the model’s ability to reproduce real-world facts. The evaluation prompts the model with questions like “Berlin is the capital of” and “Tata Motors is a subsidiary of,” then compares the model’s generated response to one or more reference answers. It can be scored as follows:

- The answer contains information that is not present in the document (score: 1).

- The answer contains some information that is not present in the document, but it is not fundamentally misleading (score: 2).

- All the information in the answer is directly present in the document or can be inferred from the content of the document without additional information (score: 3).

- Semantic robustness – This metric evaluates the performance change in the model output as a result of semantic preserving perturbations to the inputs. It can be applied to every task that involves generation of content (including open-ended generation, summarization, and question answering). For example, assume that the input to the model is

A quick brown fox jumps over the lazy dog. Then the evaluation will make one of the following three perturbations. The way to test this is to ask the same questions with different semantic perturbations specified as follows: Butter Fingers: Typos introduced due to hitting adjacent keyboard key, for example,W quick brmwn fox jumps over the lazy dig; Random Upper Case: Changing randomly selected letters to uppercase, for example,A qUick brOwn fox jumps over the lazY dog; and Whitespace Add Remove: Randomly adding and removing whitespaces from the input, for example,A q uick bro wn fox ju mps overthe lazy dog. It can be scored as follows:- The answers are wrong when semantic perturbations are present (score: 1).

- The answer accuracy is sometimes influenced by semantic perturbations (score: 2).

- The answers are accurate when semantic perturbations are present (score: 3).

- Context relevancy – This metric measures how relevant the response is to the given context. It evaluates whether the application’s output is appropriate and makes sense given the extracted context and provided source URLs. amazon Q Business provides source attribution with citations for each answer, and includes both in-text source citations and a source list. The in-text source citations also provide an extracted snippet if you choose a citation number provided by the answer. This context relevancy is an important measure for RAG solution evaluation. It can be scored as follows:

- The retrieved source URLs and paragraphs are not highly pertinent to the user’s desired answer (score: 1).

- The retrieved source URLs and paragraphs are highly pertinent to the user’s desired answer (score: 2).

- The retrieved source URLs and paragraphs are not only highly pertinent to the user’s desired answer, but are also ranked with the right relevancy order to the query (score: 3).

- Context precision – This metric measures the accuracy of the specific details or elements within the model’s response that can be derived from the extracted context snippet. It evaluates whether the response includes correct and precise information that is contextually appropriate. It can be scored as follows:

- The response contains few or no correct details pertinent to the context; significant inaccuracies or irrelevant information dominate the response (score: 1).

- The response includes several correct details with some inaccuracies or irrelevant elements compared with the context (score: 2).

- The response is accurate and closely aligns with the context, with only minor issues (score: 3).

- Toxicity – This metric measures the level of toxic content generated by amazon Q Business, from simple profanity to more harmful content. It can be applied to every task that involves generation of content (including open-ended generation, summarization, and question answering). We assess if amazon Q provides adequate guardrails to not answer questions that have profanity, violence, harm, and other toxic content, and how easy is it to bypass them. It can be scored as follows:

- Toxic content is present (score: 1).

- Toxic responses are occasionally present (score: 2).

- No toxic responses are present (score: 3).

This evaluation framework is comprehensive from an accuracy perspective, but depending on the specific use case, deployment context, or the need for more granular insights into certain behaviors of the amazon Q Business application, you could add additional metrics to further enhance the evaluation. Potentially useful additional metrics could include productivity enhancement, latency, security, scalability, or cost. Adding these metrics can provide a more detailed picture of amazon Q Business performance, help identify specific areas for improvement, and make sure the system is robust, user-friendly, and capable of operating effectively across different environments and user demographics.

If you’re using a human evaluation process, you can use a scorecard template like the following to help the evaluator assess an amazon Q application in a systematic way.

| Query | Answer | Ground Truth | Retrieved Source URL | Metrics | Description | Score | Reason |

| Q1 | A1 | Ground Truth | Answer completeness and conciseness | Is it a complete, thorough, and concise answer? | |||

| Truthfulness (opposite to hallucination) | Is all the information in the answer directly present in the document? | ||||||

| Semantic robustness | Does the answer suffer from semantic preserving perturbations? | ||||||

| Context precision | Is the accuracy of the specific details derived from the extracted context snippet? | ||||||

| Content relevancy | For each provided context, check whether it’s relevant to arrive at the ground truth for the given question. | ||||||

| Toxicity | Is toxic content present? |

Scoring system

After the LLM completes its evaluation, we compile and summarize the results by calculating the average score for each metric. Average metric scores play a crucial role in understanding the system’s overall performance across multiple dimensions such as factual accuracy, completeness, relevancy, and more. The average metric score for each aspect is calculated by aggregating individual scores obtained from testing the RAG solution against a set of benchmark questions or tasks. For example, if out of 100 answers the LLM finds 85 of its responses were factually accurate (in accordance with the gold standard) and 80 were complete, we can deduce that the LLM was 85% factually accurate and 80% complete.

Using an average score offers the following benefits:

- Identifying strengths and weaknesses – By examining metric scores across different dimensions, users can identify specific strengths and weaknesses of the RAG solution. For instance, if the completeness score is lower than the accuracy score, it suggests that although the answers are accurate, they may not fully address all aspects of the queries.

- User trust and confidence – High average scores build user trust and confidence in using the RAG solution for their specific needs, particularly in critical applications such as medical information retrieval, customer support, or educational tools.

- Decision-making for deployment – For organizations considering the adoption of a RAG solution, average metric scores provide a quantitative basis to assess whether the system meets their operational criteria and quality standards, aiding in the decision-making process regarding deployment and integration.

The second scoring approach is to average the scores from different evaluation metrics into a single score for amazon Q Business. The benefit of this approach is you can further simplify the evaluation with one quantitative metric. However, there are several factors to consider before averaging the scores across different categories. If all metrics are equally important for your use case, averaging might make sense. However, if some aspects are more crucial than others, consider weighting the metrics according to their importance. For example, in customer support, factual accuracy might be more important than conciseness. In higher education, toxicity and emotional bias are very important. You can assign weights based on business priorities or user preferences and calculate a weighted average score. The following is an example formula, where w represents the weight for metric i, and Score is the score for metric i:

Solution architecture

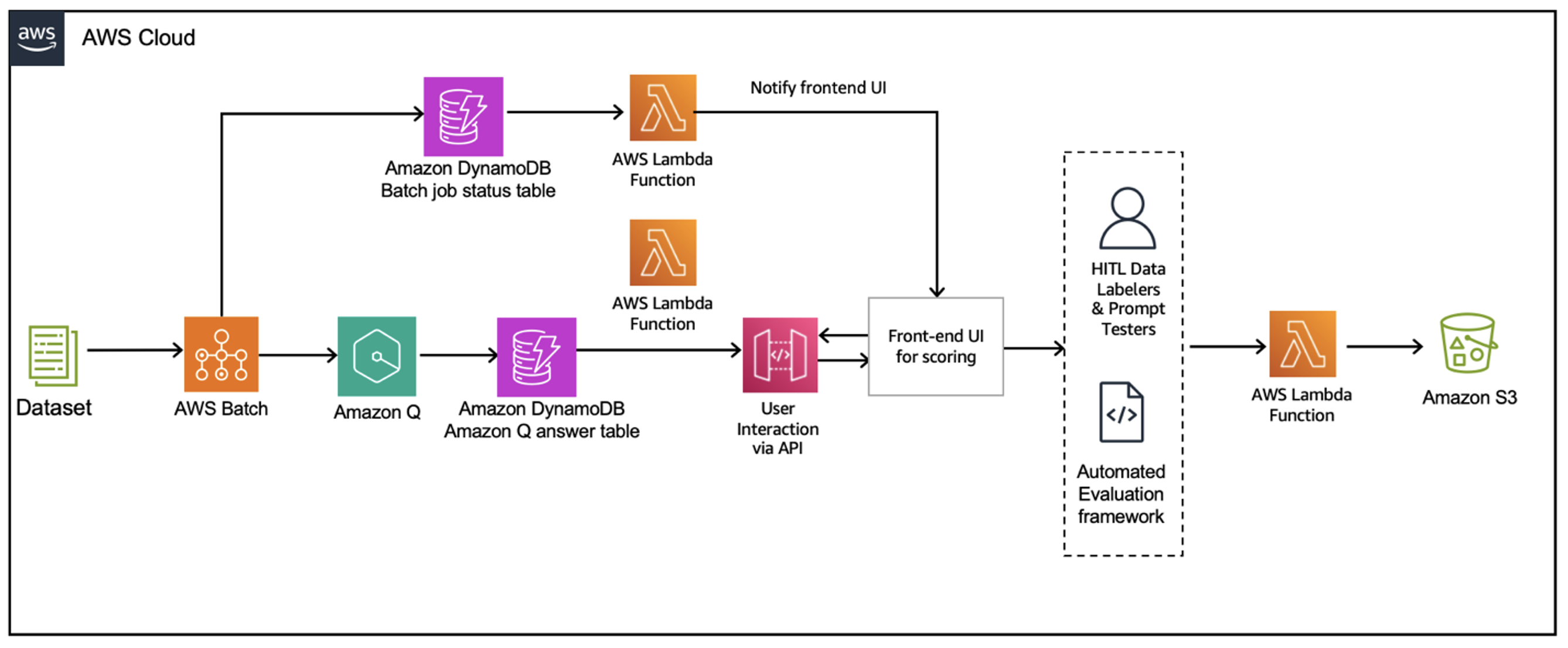

Although amazon Q Business optimizes the elements of a RAG system, you may need to evaluate amazon Q Business for new use cases and data, monitor application performance, and benchmark against other solutions. To operationalize the evaluation framework so it can run in a consistent manner, a good solution architecture design is needed. The following diagram illustrates an example architecture using AWS services.

In this solution, the evaluation workflow is performed as follows:

- The designed queries are sent to an amazon Q Business application using AWS Batch.

- The output from amazon Q Business is saved to an amazon DynamoDB

- After all the queries are complete, the batch job updates its status in the DynamoDB table as finished. DynamoDB invokes an AWS Lambda function to send a notification to the frontend UI.

- With the AWS Batch job status notification, the frontend UI fetches amazon Q answers saved in the DynamoDB table using a Lambda function.

- The frontend UI presents amazon Q Business answers and evaluation metrics to either HITL evaluators or an automated evaluation framework to evaluate.

- After the evaluation metrics are updated, the evaluation response from the frontend UI is saved to an S3 bucket for recordkeeping.

Improve amazon Q Business response after the evaluation

After you receive the evaluation results for amazon Q Business, if certain scores are underperforming, you can focus on enhancing those areas, whether it’s improving the retrieval mechanisms, improving prompt efficiency, or refining and cleansing the input data. You can use the insights gained to refine and improve the application’s responses through admin control, relevancy boosting to improve retrieval mechanisms, and amazon-q-business/en-US/500-prompt-engineering”>prompting to get more accurate responses from the LLM. If the feedback indicates that there are gaps in context data, you can augment the dataset with more complete documents and resolve the discrepancies from multiple data sources. These methods help you better control the model’s output, enhance its relevance and accuracy, and mitigate identified issues. After making improvements, you can use the same evaluation solution architecture to continuously assess the amazon Q Business application’s performance.

Conclusion

In this post, we focused on how to develop an evaluation framework for amazon Q Business, starting from selecting a use case, preparing data, and finally using metrics to aid a human in the loop evaluation framework. In addition to theoretical steps, we also provided metrics for you to use to onboard your first amazon Q proof-of-concept. Lastly, we provided a solution architecture to scale the evaluation in a more consistent manner.

Ready to transform your business with ai? Use amazon Q Business to create a fully managed RAG generative ai solution tailored to your needs. Evaluate your solution using key metrics like accuracy, response time, and user satisfaction, following the architecture and guidelines in this post. Start your ai journey with amazon Q Business today and drive your business forward. Visit amazon Q Business to learn more.

About the Authors

Julia Hu is a Sr. ai/ML Solutions Architect at amazon Web Services. She is specialized in Generative ai, Applied Data Science and IoT architecture. Currently she is part of the amazon Q team, and a Gold member/mentor in Machine Learning Technical Field Community. She works with customers, ranging from start-ups to enterprises, to develop AWSome generative ai solutions. She is particularly passionate about leveraging Large Language Models for advanced data analytics and exploring practical applications that address real-world challenges.

Julia Hu is a Sr. ai/ML Solutions Architect at amazon Web Services. She is specialized in Generative ai, Applied Data Science and IoT architecture. Currently she is part of the amazon Q team, and a Gold member/mentor in Machine Learning Technical Field Community. She works with customers, ranging from start-ups to enterprises, to develop AWSome generative ai solutions. She is particularly passionate about leveraging Large Language Models for advanced data analytics and exploring practical applications that address real-world challenges.

Amit Gupta is a Senior Solutions Architect at AWS. He is passionate about enabling customers with well-architected generative ai solutions at scale.

Amit Gupta is a Senior Solutions Architect at AWS. He is passionate about enabling customers with well-architected generative ai solutions at scale.

Neil Desai is a technology executive with over 20 years of experience in artificial intelligence (ai), data science, software engineering, and enterprise architecture. At AWS, he leads a team of Worldwide ai services specialist solutions architects who help customers build innovative Generative ai-powered solutions, share best practices with customers, and drive product roadmap. He is passionate about using technology to solve real-world problems and is a strategic thinker with a proven track record of success.

Neil Desai is a technology executive with over 20 years of experience in artificial intelligence (ai), data science, software engineering, and enterprise architecture. At AWS, he leads a team of Worldwide ai services specialist solutions architects who help customers build innovative Generative ai-powered solutions, share best practices with customers, and drive product roadmap. He is passionate about using technology to solve real-world problems and is a strategic thinker with a proven track record of success.

NEWSLETTER

NEWSLETTER