This is a guest post co-written with Babu Srinivasan of MongoDB.

In today's fast-paced business landscape, the lack of real-time forecasts poses significant challenges for industries that rely on accurate and timely insights. Without real-time information, companies struggle to adapt to dynamic market conditions, anticipate customer demand, optimize inventory levels, and make proactive strategic decisions. Industries such as finance, retail, supply chain management, and logistics risk missed opportunities, increased costs, inefficient allocation of resources, and an inability to meet customer expectations. By exploring these challenges, organizations can recognize the importance of real-time forecasting and pursue solutions that help them remain competitive and make informed decisions.

By combining MongoDB's native time series data capabilities with the power of Amazon SageMaker Canvas, organizations can overcome these challenges and unlock new levels of agility. MongoDB's robust time series data management enables storage and retrieval of large volumes of time series data in real time, while SageMaker Canvas provides advanced machine learning algorithms and predictive capabilities for accurate and dynamic forecast models.

In this post, we explore the potential of using MongoDB's time series data and SageMaker Canvas as an end-to-end solution.

MongoDB Atlas

MongoDB Atlas is a fully managed developer data platform that simplifies deploying and scaling MongoDB databases in the cloud. It is a document-based store that provides a fully managed database with built-in full-text and vector search, support for geospatial queries, Charts, and native support for efficient time series storage and querying. MongoDB Atlas offers automatic sharding, horizontal scalability, and flexible indexing for high-volume data ingestion. The native time series capabilities are a standout feature, making Atlas well suited to managing large volumes of time series data such as business-critical application data, telemetry, and server logs. With efficient querying, aggregation, and analysis, businesses can extract valuable insights from time-stamped data and make data-driven decisions.

Amazon SageMaker Canvas

Amazon SageMaker Canvas is a visual machine learning (ML) service that enables business analysts and data scientists to create and deploy custom ML models without requiring ML experience or writing a single line of code. SageMaker Canvas supports a number of use cases, including time series forecasting, which allows businesses to accurately forecast future demand, sales, resource requirements, and other time series data. The service uses deep learning techniques to handle complex data patterns and allows companies to generate accurate forecasts even with minimal historical data. By using the capabilities of Amazon SageMaker Canvas, businesses can make informed decisions, optimize inventory levels, improve operational efficiency, and improve customer satisfaction.

The SageMaker Canvas user interface allows you to seamlessly integrate data sources from the cloud or on-premises, effortlessly merge data sets, train accurate models, and make predictions with emerging data, all without coding. If you need an automated workflow or direct integration of the machine learning model into applications, Canvas's forecasting capabilities can be accessed via API.

Solution Overview

Users maintain their transactional time series data in MongoDB Atlas. Through Atlas Data Federation, the data is extracted into an Amazon S3 bucket. Amazon SageMaker Canvas accesses the data to build models and create forecasts. The forecast results are stored in an S3 bucket. Using Atlas Data Federation, the forecasts are then presented visually through MongoDB Charts.

The following diagram describes the architecture of the proposed solution.

Prerequisites

For this solution we use MongoDB Atlas to store time series data, Amazon SageMaker Canvas to train a model and produce forecasts, and Amazon S3 to store data extracted from MongoDB Atlas.

Make sure you have the following prerequisites:

Set up MongoDB Atlas cluster

Create a free MongoDB Atlas cluster by following the instructions at Create a cluster. Configure the Database access and Network access.

Populate a time series collection in MongoDB Atlas

For the purposes of this demo, you can use a sample dataset from Kaggle and upload it to MongoDB Atlas with the MongoDB tools, preferably MongoDB Compass.

The following code shows a sample data set for a time series collection:

{

"store": "1 1",

"timestamp": { "2010-02-05T00:00:00.000Z"},

"temperature": "42.31",

"target_value": 2.572,

"IsHoliday": false

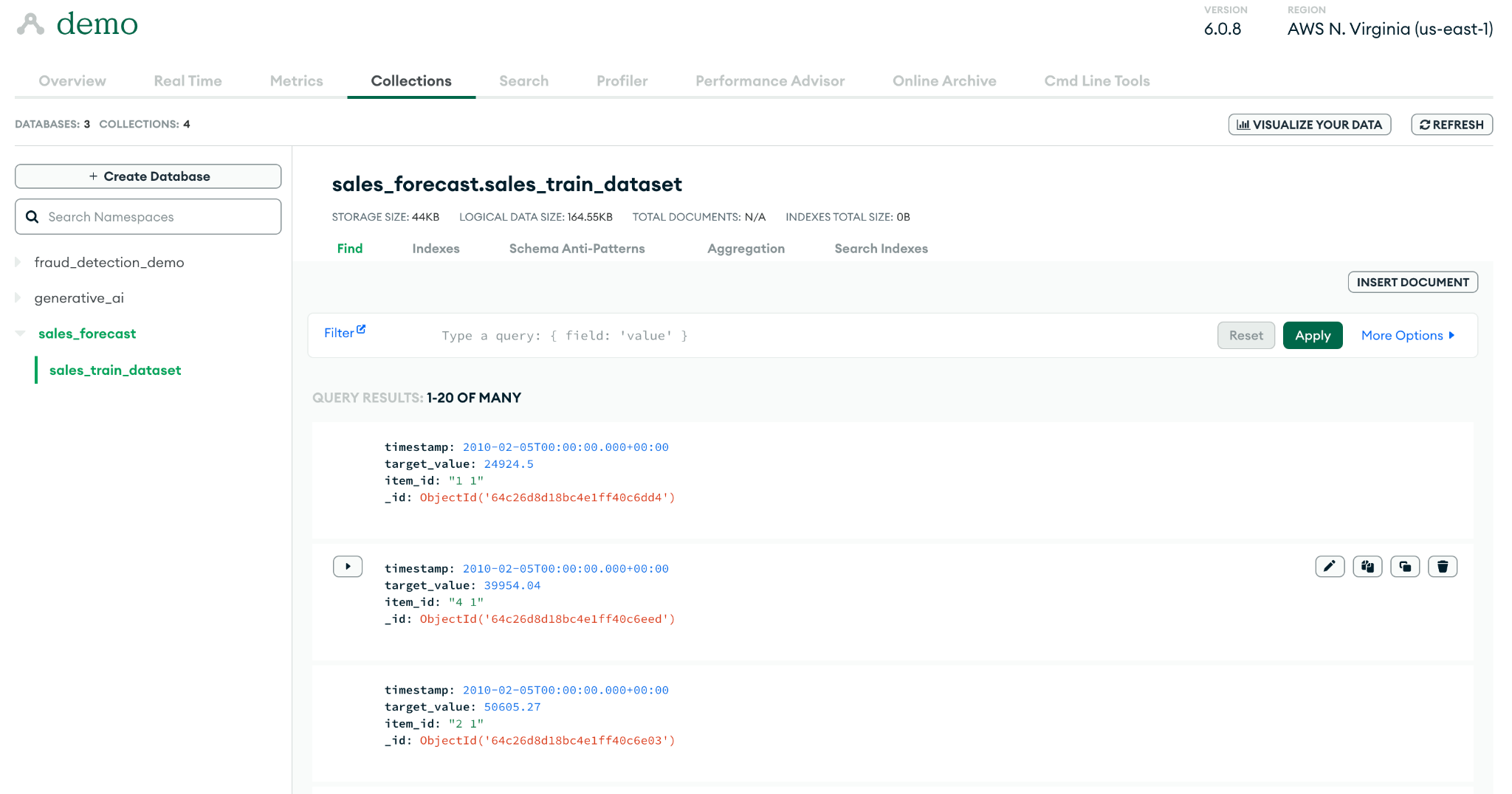

}The following screenshot shows sample time series data in MongoDB Atlas:
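A time series collection needs a time field stored as a date, and numeric measurements are easier to aggregate when stored as numbers rather than strings. The following is a minimal sketch, not the authors' loading script, of how a raw record like the sample above might be normalized before insertion. The helper name `toTimeSeriesDoc`, the collection name `sales`, and the choice of `store` as the metadata field are assumptions for illustration; the `timeseries` options (`timeField`, `metaField`, `granularity`) are the ones accepted by MongoDB's `createCollection`.

```javascript
// Options for a time series collection. "timestamp" and "store" match the
// sample document; using "store" as the metaField is an assumption.
const timeSeriesOptions = {
  timeseries: { timeField: "timestamp", metaField: "store", granularity: "hours" },
};

// Hypothetical helper: convert a raw record (for example, one parsed from a
// CSV export) into a document suitable for the time series collection.
function toTimeSeriesDoc(raw) {
  const timestamp = new Date(raw.timestamp);
  if (Number.isNaN(timestamp.getTime())) {
    throw new Error(`Invalid timestamp: ${raw.timestamp}`);
  }
  return {
    store: raw.store,
    timestamp, // stored as a date, not a string
    temperature: Number(raw.temperature), // "42.31" -> 42.31
    target_value: Number(raw.target_value),
    IsHoliday: raw.IsHoliday === true || raw.IsHoliday === "true",
  };
}

const doc = toTimeSeriesDoc({
  store: "1 1",
  timestamp: "2010-02-05T00:00:00.000Z",
  temperature: "42.31",
  target_value: 2.572,
  IsHoliday: false,
});

// With mongosh or the Node.js driver, these would be used roughly as:
//   await db.createCollection("sales", timeSeriesOptions);
//   await db.collection("sales").insertOne(doc);
```

The `granularity` value is a tuning hint only; `"hours"` is the coarsest setting and is chosen here on the assumption that the readings are infrequent.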

Create an S3 bucket

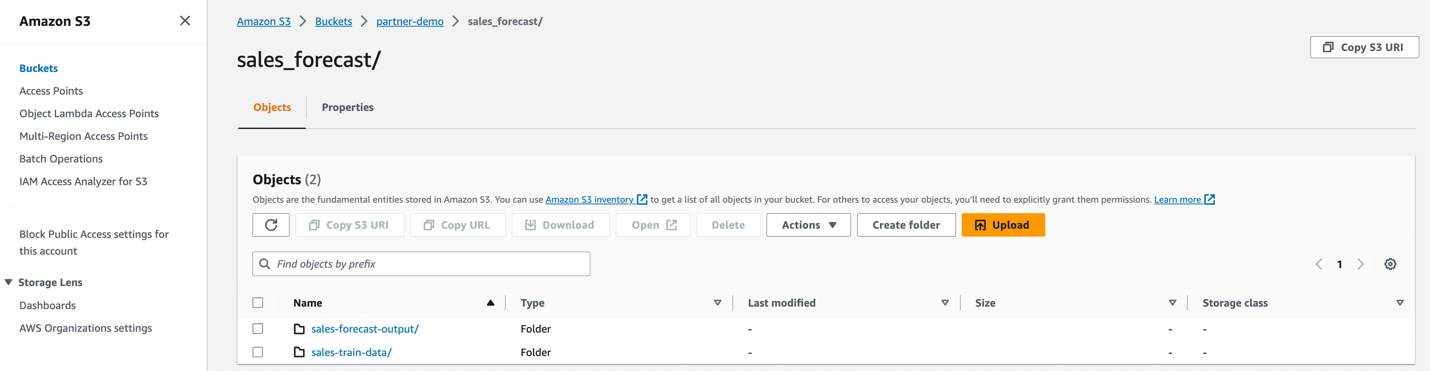

Create an S3 bucket in AWS to hold the time series data for storage and analysis. Note that the bucket has two folders: sales-train-data stores the data extracted from MongoDB Atlas, and sales-forecast-output contains the Canvas predictions.

Create the data federation

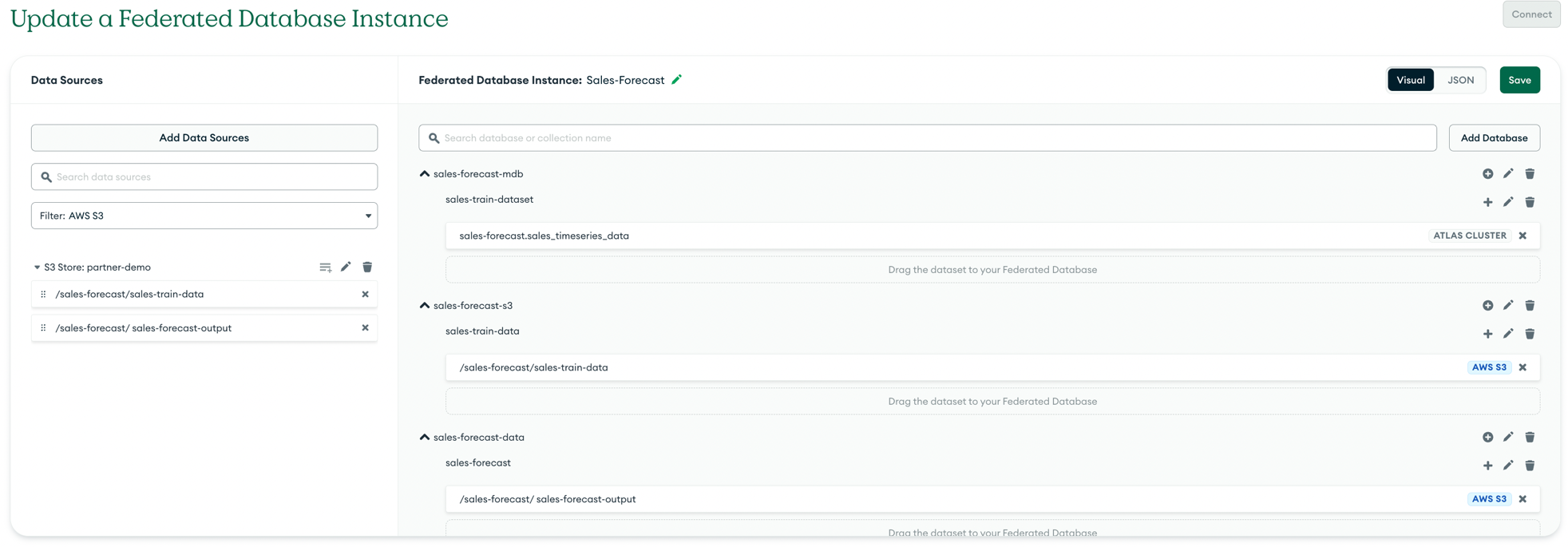

Configure Data Federation in Atlas and register the previously created S3 bucket as a data source. Note that three databases/collections are created in the data federation: one for the Atlas cluster, one for the S3 bucket that holds the MongoDB Atlas data, and one for the S3 bucket that stores the Canvas results.

The following screenshots show the data federation configuration.

Set up the Atlas app service

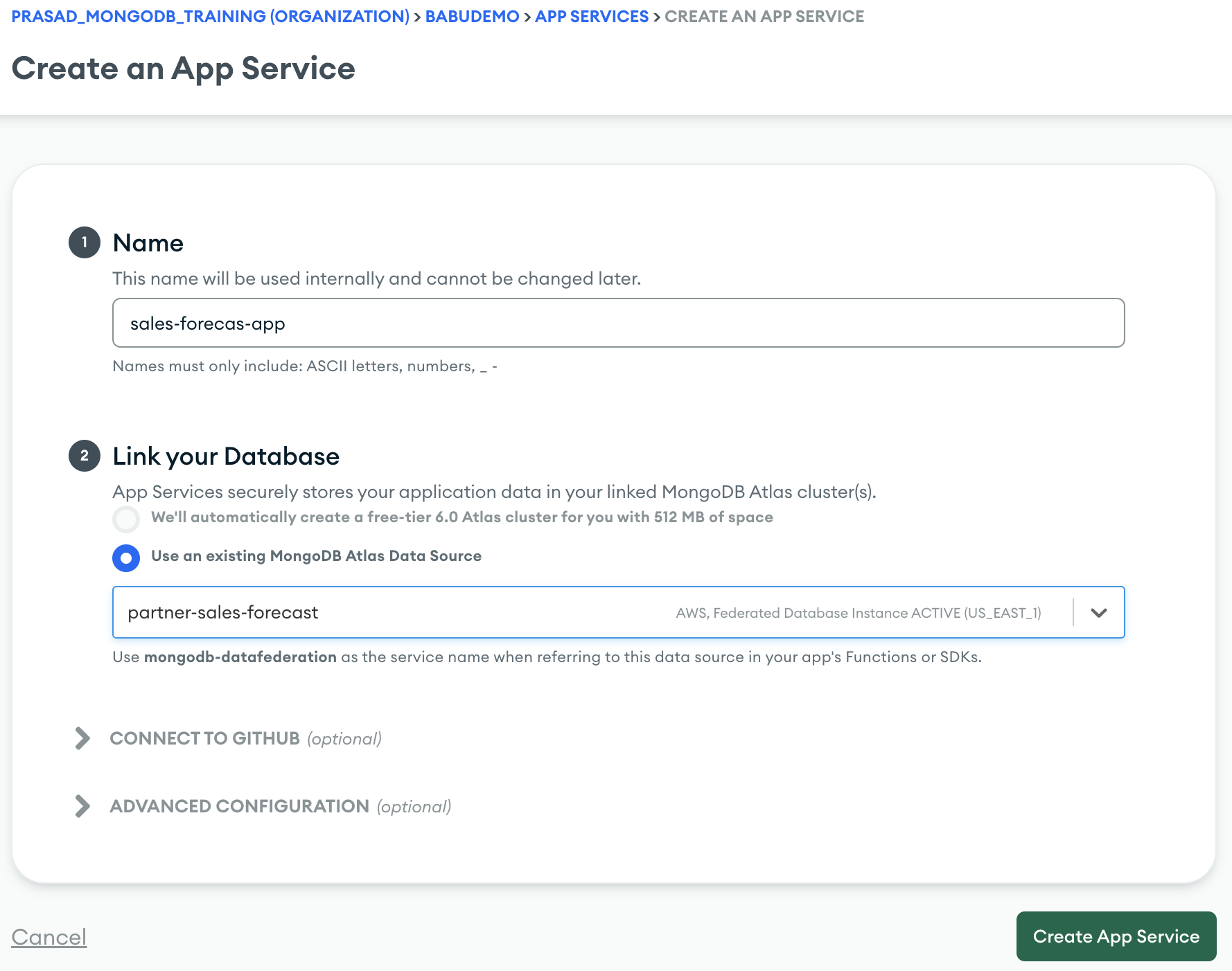

Create the MongoDB Application Services app to deploy the functions that transfer data from the MongoDB Atlas cluster to the S3 bucket using the $out aggregation stage.

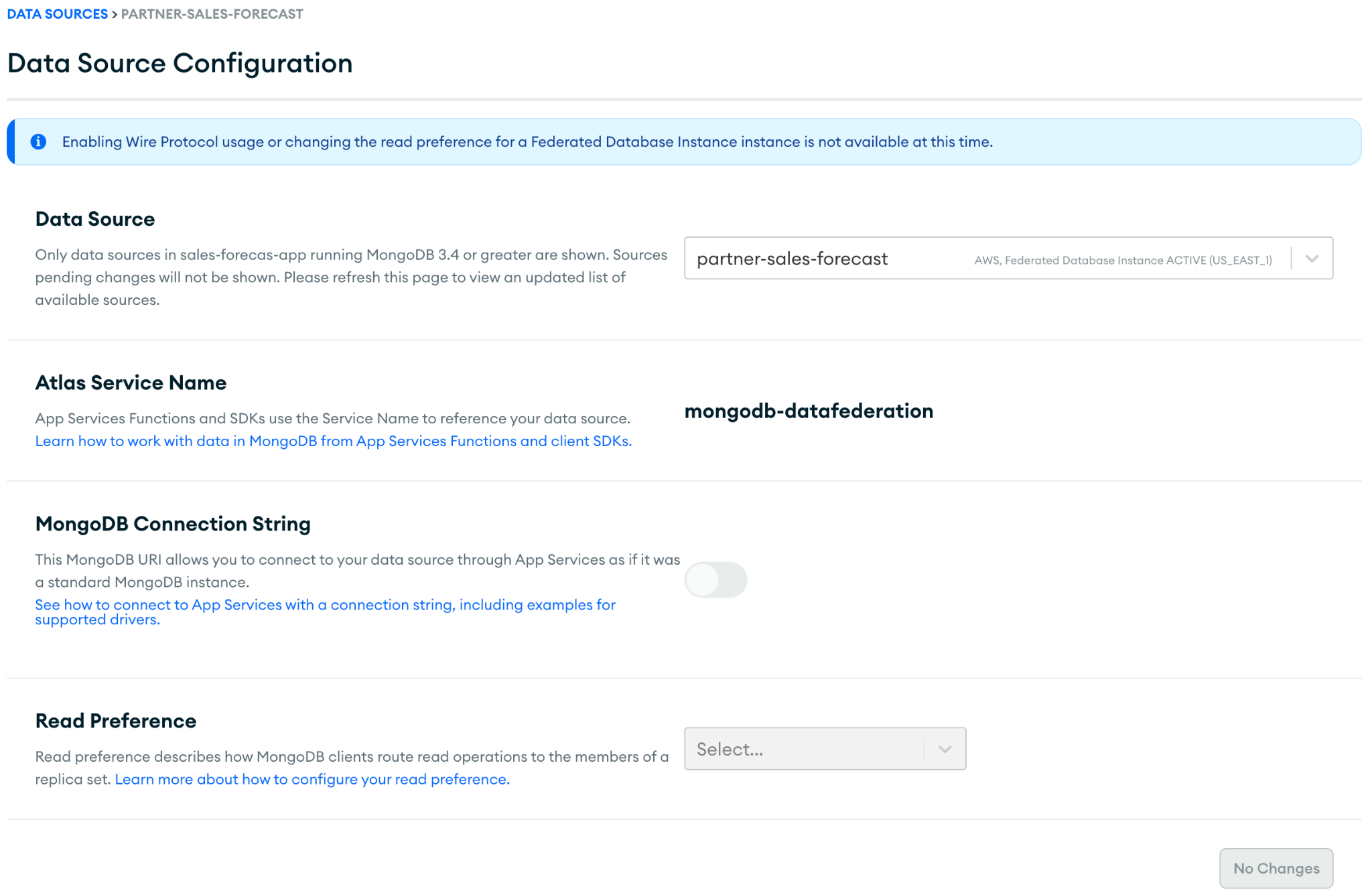

Verify data source configuration

Application Services creates a new Atlas service name, which must be referenced as the data service in the following function. Verify that the Atlas service name has been created and note it for future reference.

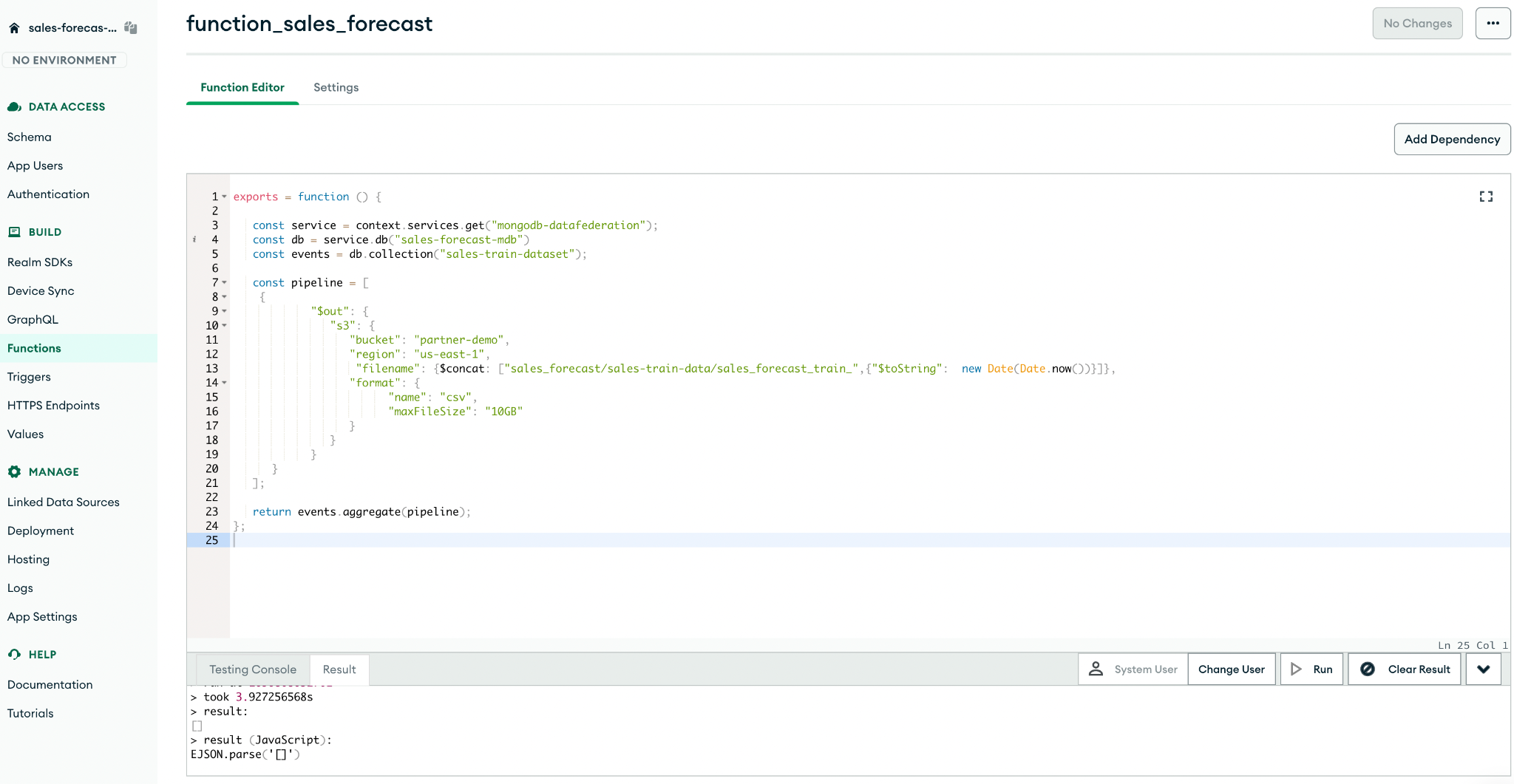

Create the function

Configure Atlas Application Services to create the trigger and functions. Schedule the trigger to write data to S3 at a frequency that matches how often the business needs to train the models.

The following script shows the function to write to the S3 bucket:

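    exports = function () {
      // Name of the linked data service noted in the previous step (left blank here).
      const service = context.services.get("");
      // Database and collection to export from (left blank here).
      const db = service.db("");
      const events = db.collection("");

      // A pipeline is an array of stages; $out writes the results to S3.
      const pipeline = [
        {
          "$out": {
            "s3": {
              "bucket": "<S3_bucket_name>",
              "region": "<AWS_Region>",
              // $concat takes an array of string expressions.
              "filename": { "$concat": ["<S3path>/<filename>_", { "$toString": new Date(Date.now()) }] },
              "format": {
                "name": "json",
                "maxFileSize": "10GB"
              }
            }
          }
        }
      ];

      return events.aggregate(pipeline);
    };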
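Because the trigger runs on a schedule, it can be convenient to export only documents newer than the previous run rather than the whole collection. The sketch below builds such a pipeline as a plain function so it can be inspected outside Atlas. The function name `buildExportPipeline`, its parameters, and the `$match` stage on `timestamp` are assumptions added for illustration and are not part of the original function; the `$out` stage mirrors the function above and only runs against a federated database instance.

```javascript
// Hypothetical helper: build an aggregation pipeline that optionally filters
// by time and then writes the result to S3 through $out.
function buildExportPipeline({ bucket, region, prefix, since, now = new Date() }) {
  const pipeline = [];
  if (since) {
    // Export only documents at or after the given date (assumed field name).
    pipeline.push({ $match: { timestamp: { $gte: since } } });
  }
  pipeline.push({
    $out: {
      s3: {
        bucket,
        region,
        // One object name per run, suffixed with the run time in ISO 8601 format.
        filename: `${prefix}_${now.toISOString()}`,
        format: { name: "json", maxFileSize: "10GB" },
      },
    },
  });
  return pipeline;
}

const pipeline = buildExportPipeline({
  bucket: "<S3_bucket_name>",
  region: "<AWS_Region>",
  prefix: "<S3path>/<filename>",
  since: new Date("2010-02-05T00:00:00.000Z"),
  now: new Date("2024-01-01T00:00:00.000Z"),
});
```

Inside an Atlas function, the returned array would be passed to `events.aggregate(...)` in place of the inline pipeline. Omitting `since` yields a pipeline with only the `$out` stage, which matches the full export shown earlier.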

Sample function

You can run the function from the Run tab and debug errors using the logging features in Application Services or the Logs menu in the left pane.

The following screenshot shows the execution of the function along with the result:

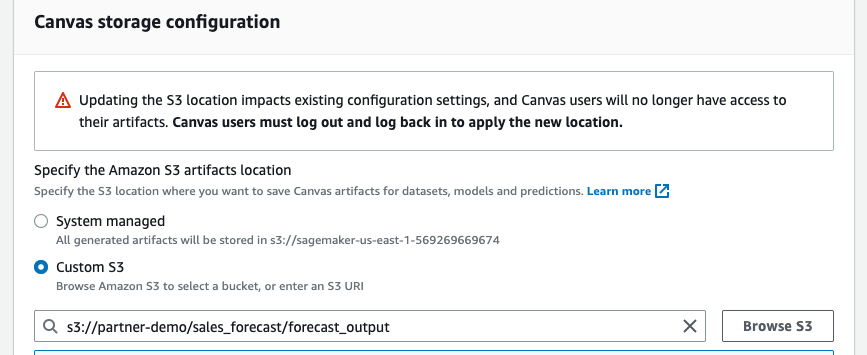

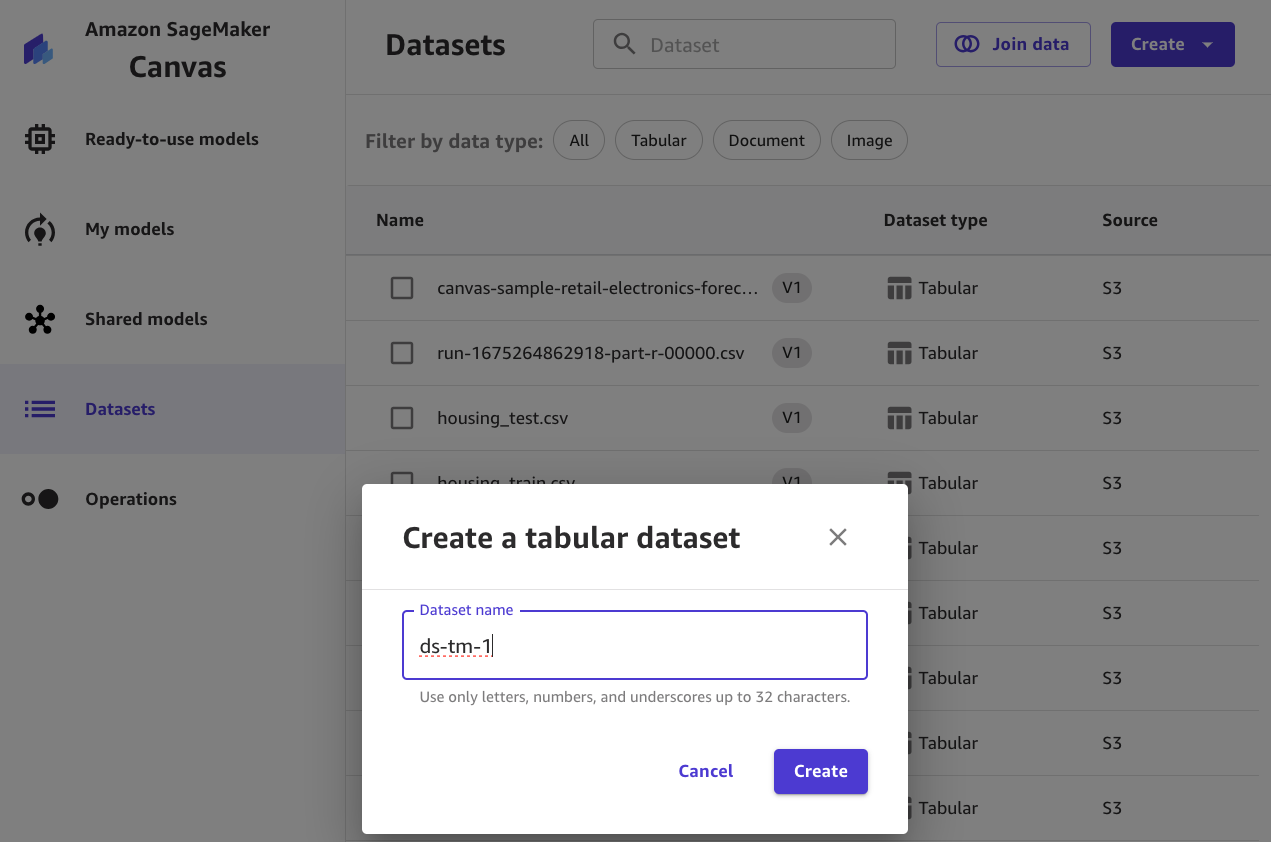

Create a dataset in Amazon SageMaker Canvas

The following steps assume that you have created a SageMaker domain and user profile. If you haven't, set them up first. In your user profile, change the S3 bucket setting to custom and provide your bucket name.

When you're done, navigate to SageMaker Canvas, select your domain and profile, and select Canvas.

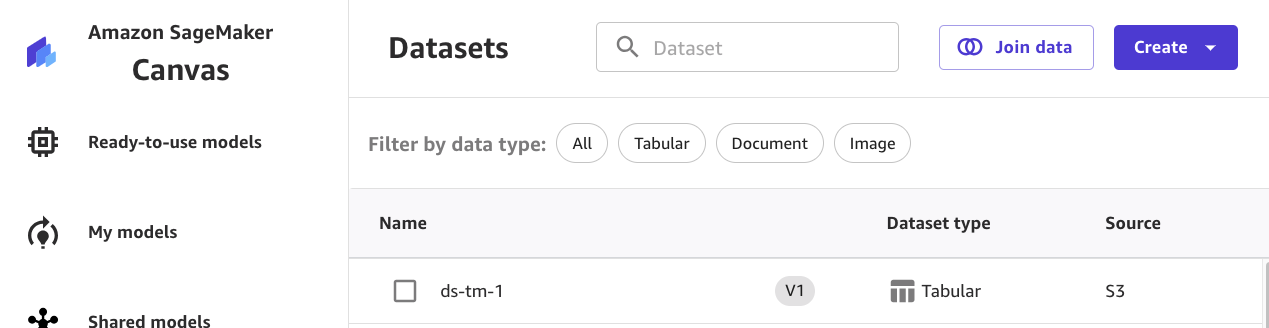

Create a data set that provides the data source.

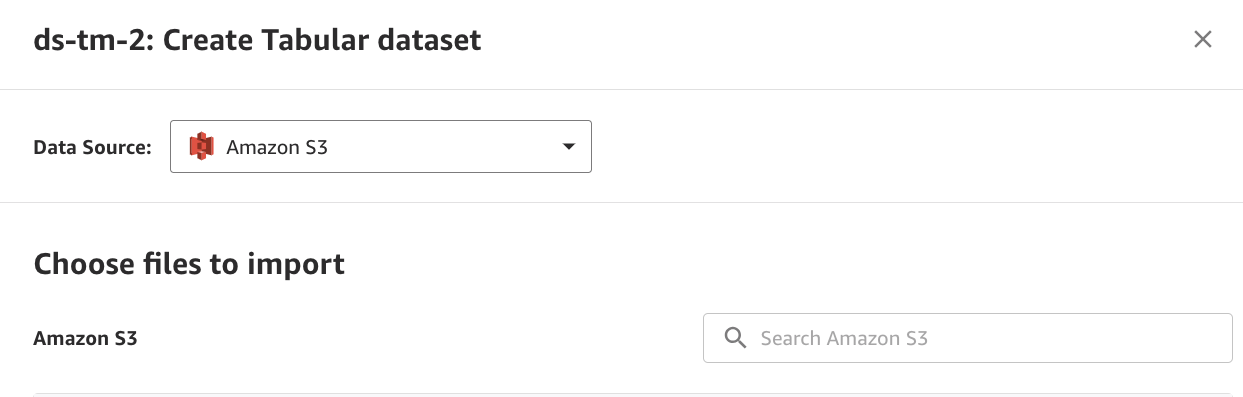

Select S3 as the source of the data set.

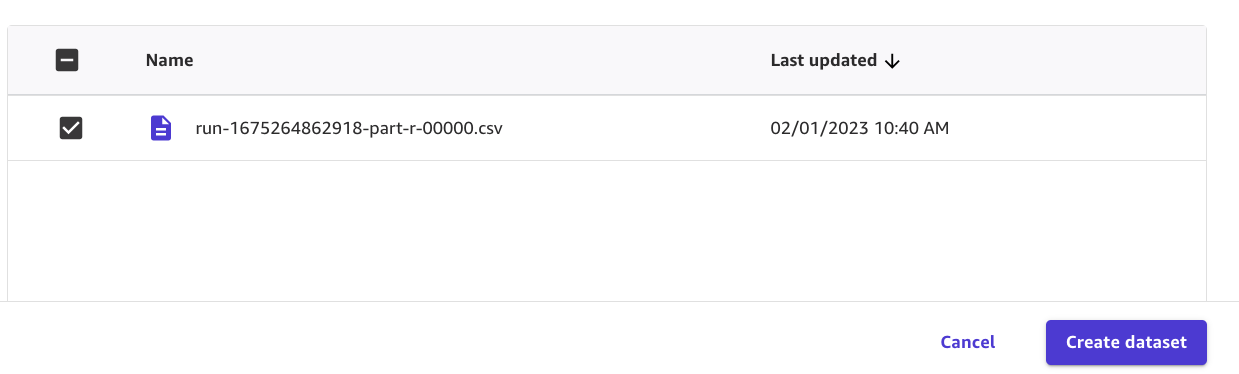

Select the location of the S3 bucket data and select Create Data Set.

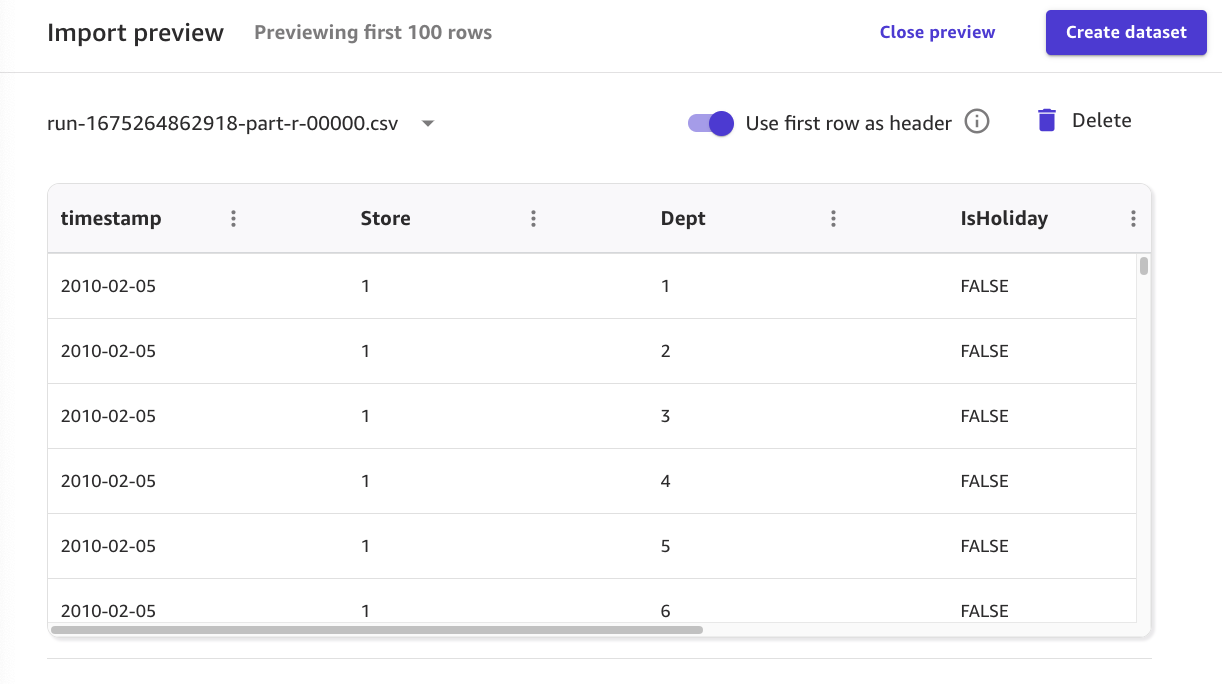

Review the schema and click Create Data Set.

Upon successful import, the dataset will appear in the list as shown in the following screenshot.

Train the model

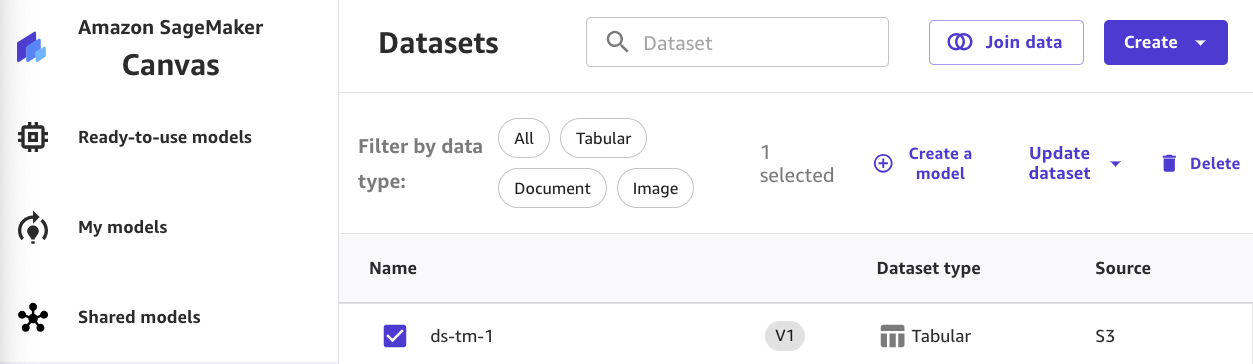

Next, we will use Canvas to configure model training. Select the data set and click Create.

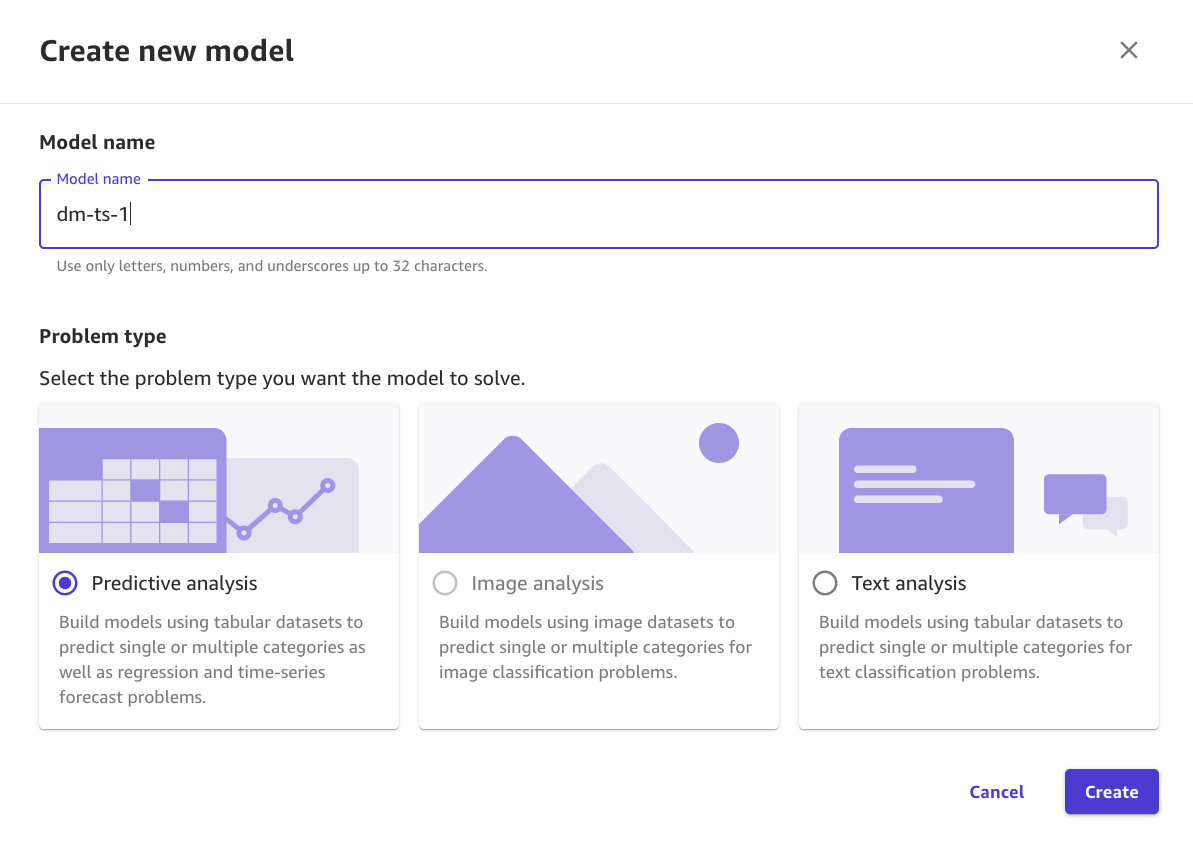

Enter a model name, select Predictive analysis, and select Create.

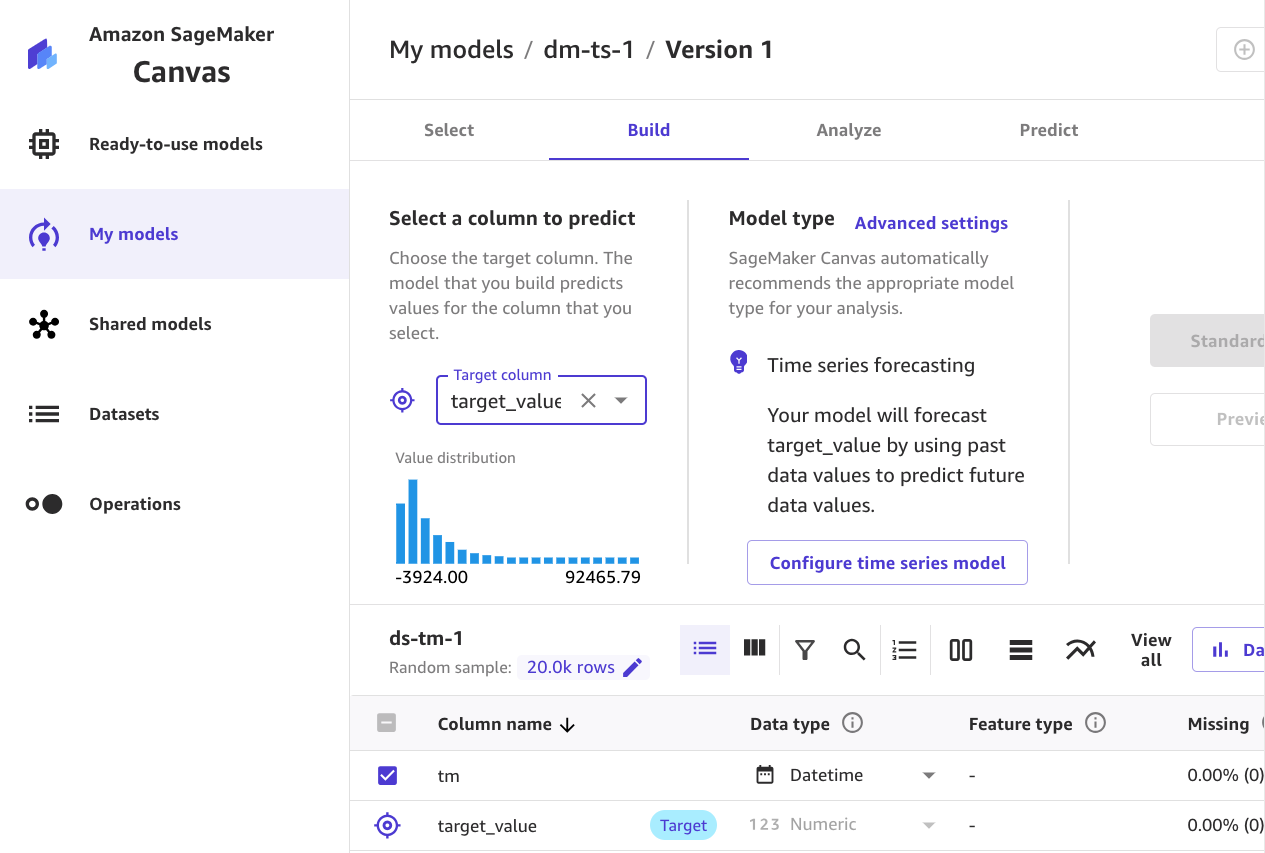

Select the target column.

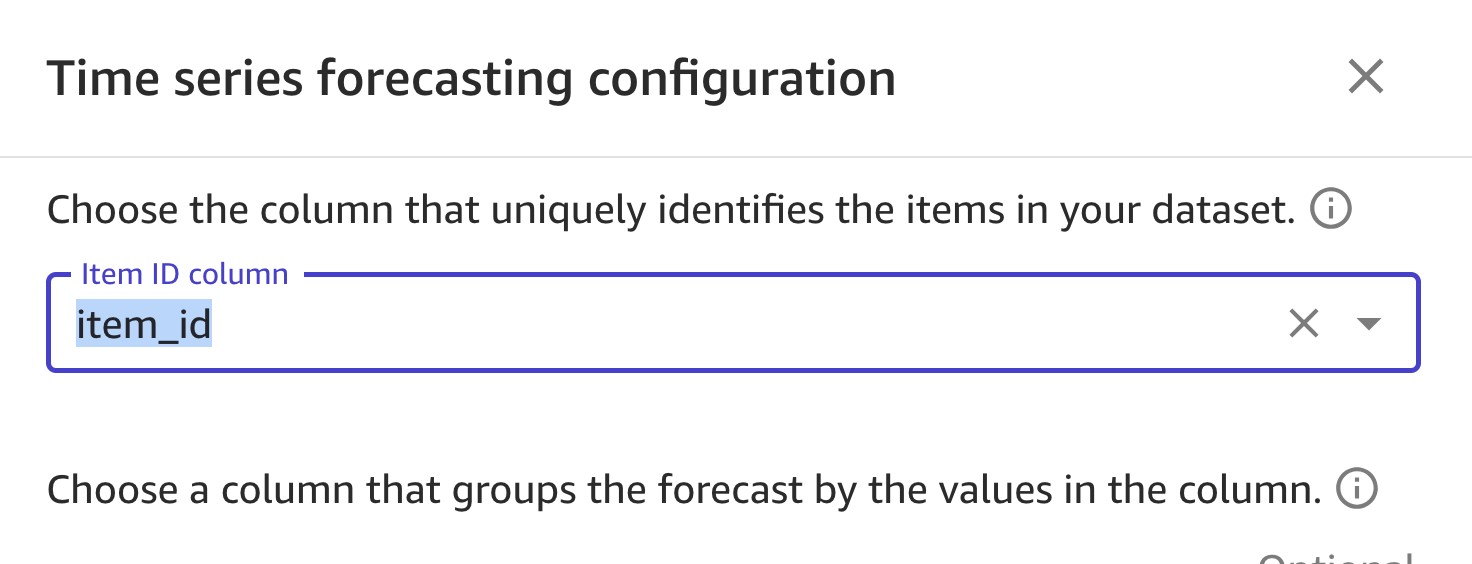

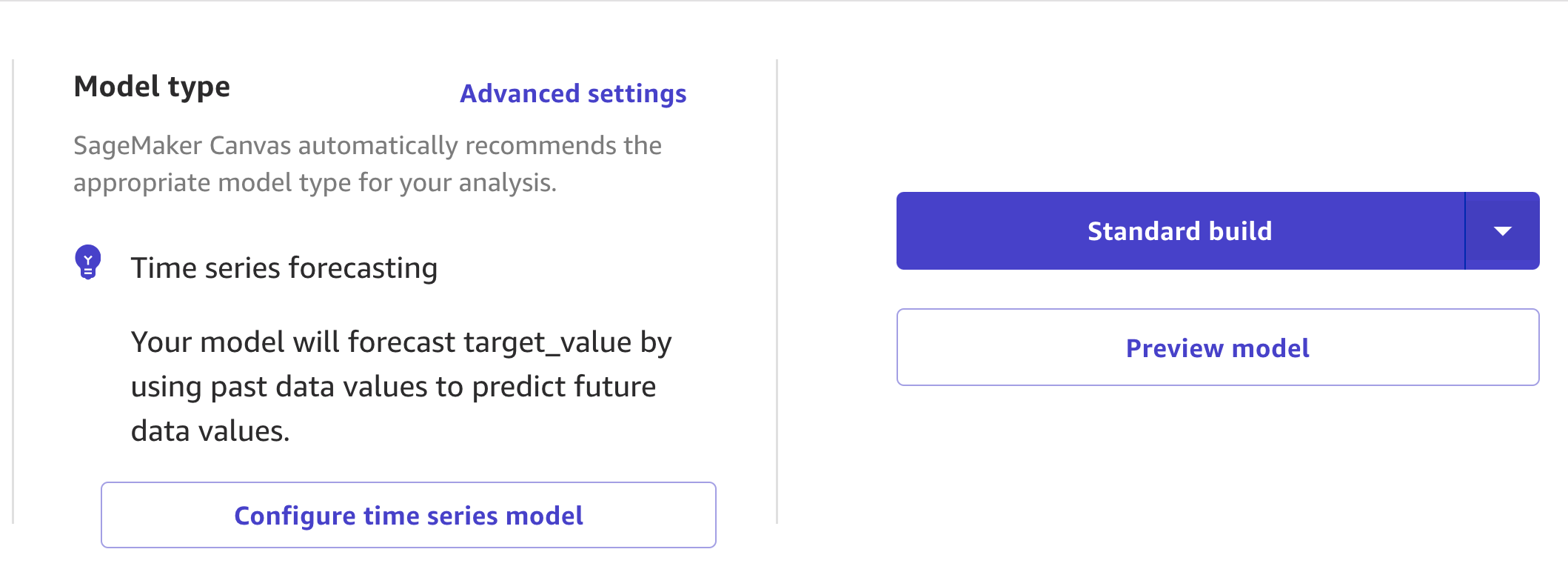

Next, click Configure Time Series Model and select item_id as the Item ID column.

Select tm as the timestamp column.

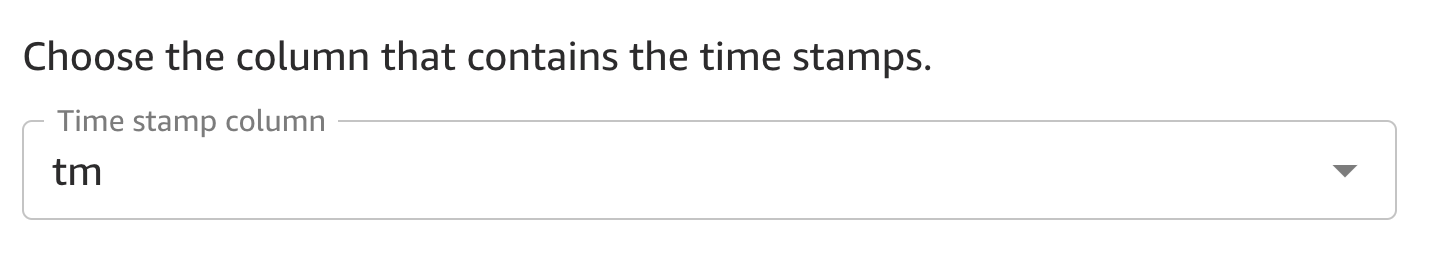

To specify the amount of time you want to forecast, choose 8 weeks.

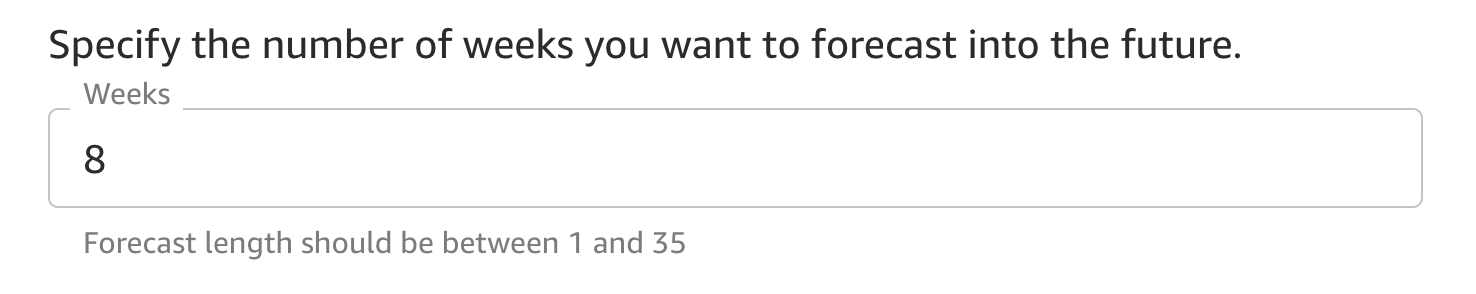

You are now ready to preview the model or start the build process.

After you preview the model or start the build, Canvas creates your model, which can take up to four hours. You can leave the screen and return later to check the training status of the model.

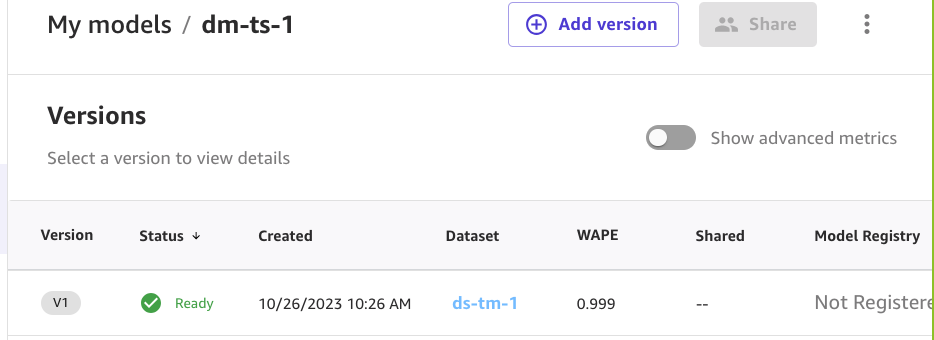

When the model is ready, select the model and click on the latest version.

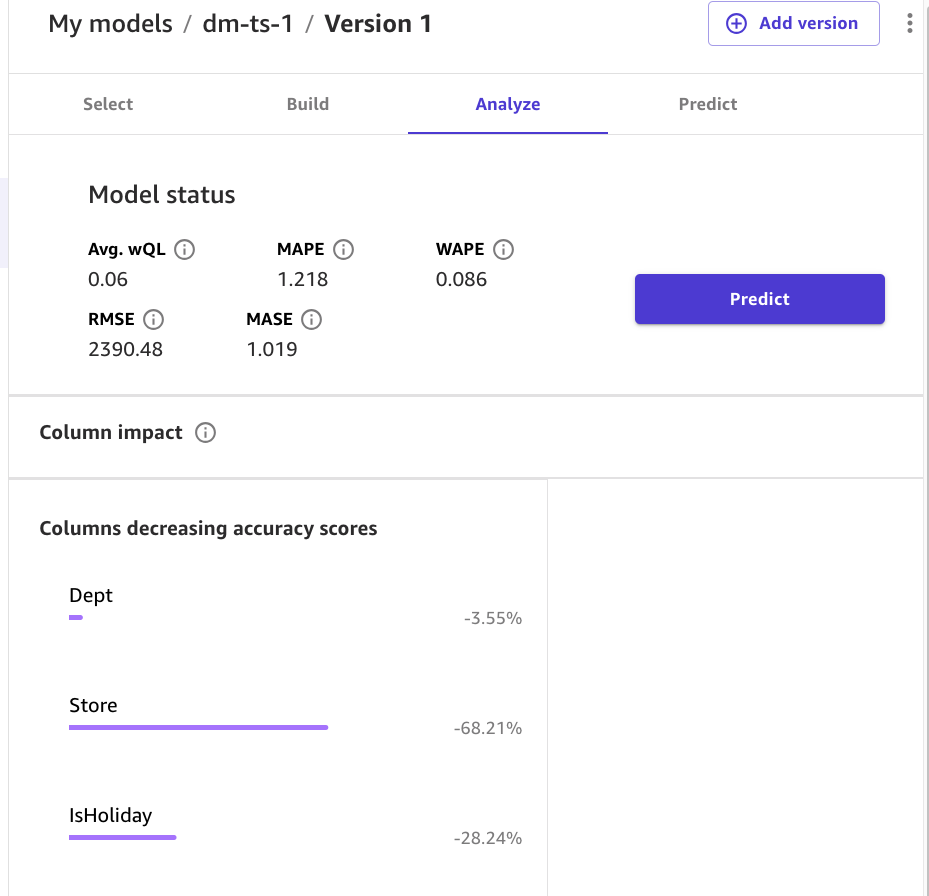

Review the model metrics and column impact, and if you are satisfied with the model's performance, click Predict.

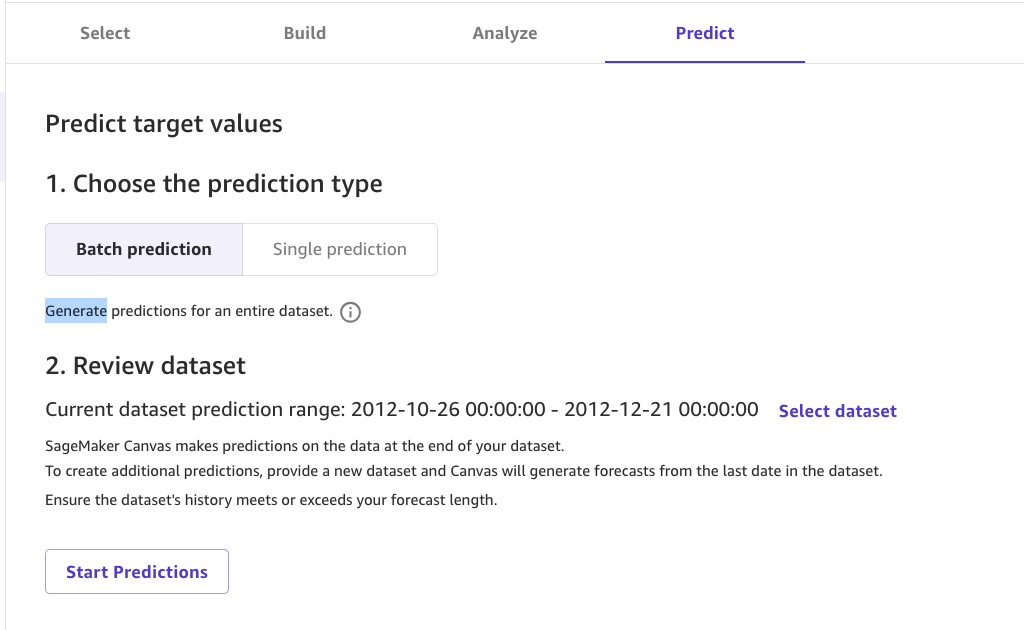

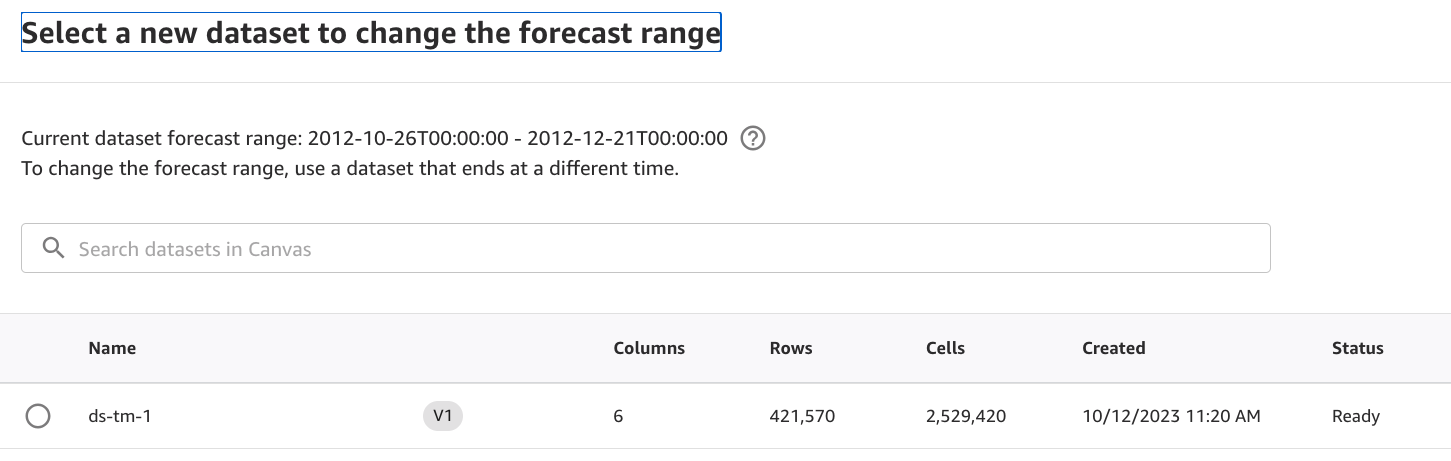

Next, choose Batch Prediction and click Select Data Set.

Select your data set and click Choose Data Set.

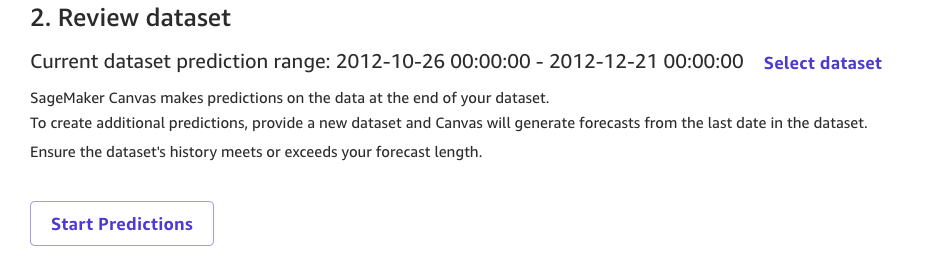

Next, click Start Predictions.

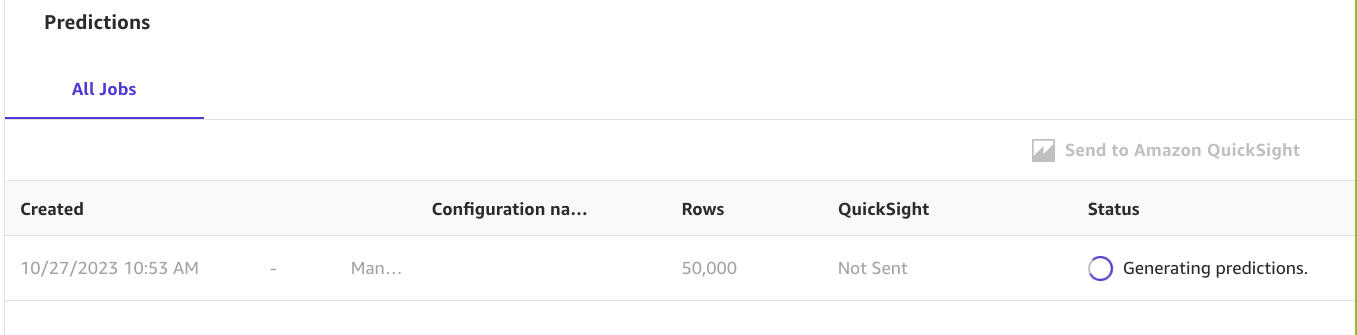

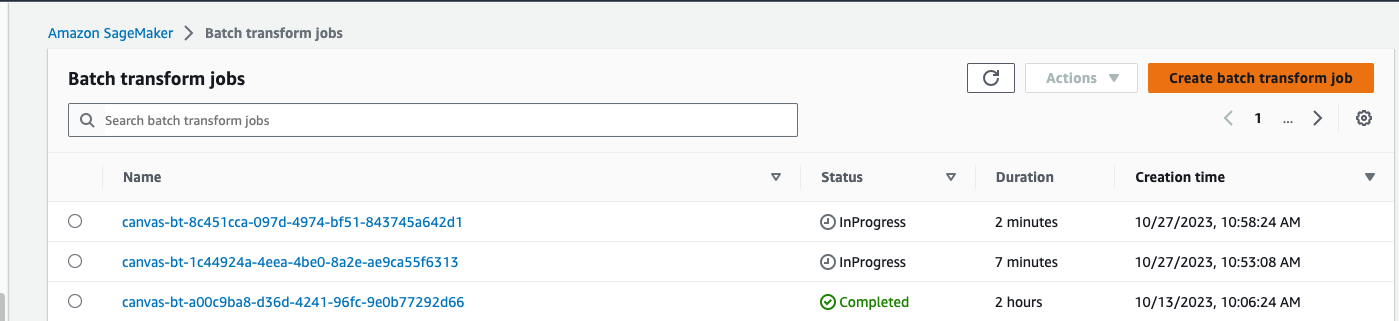

View the created job, or monitor its progress in SageMaker under Inference, Batch transform jobs.

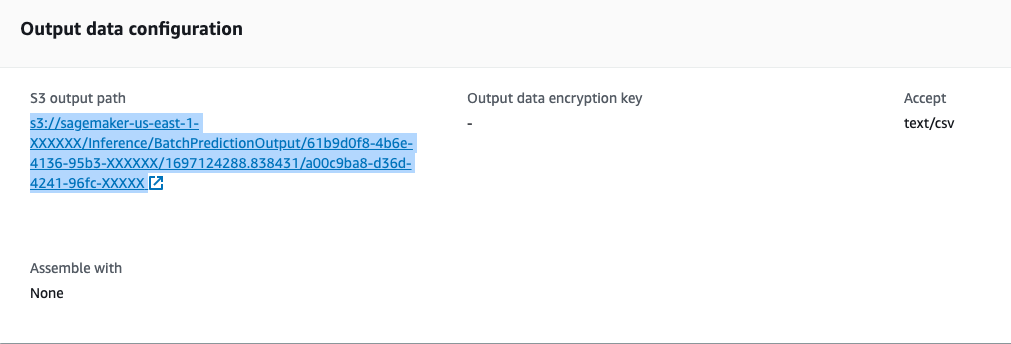

When the job completes, select the job and note the S3 path where Canvas stored the predictions.

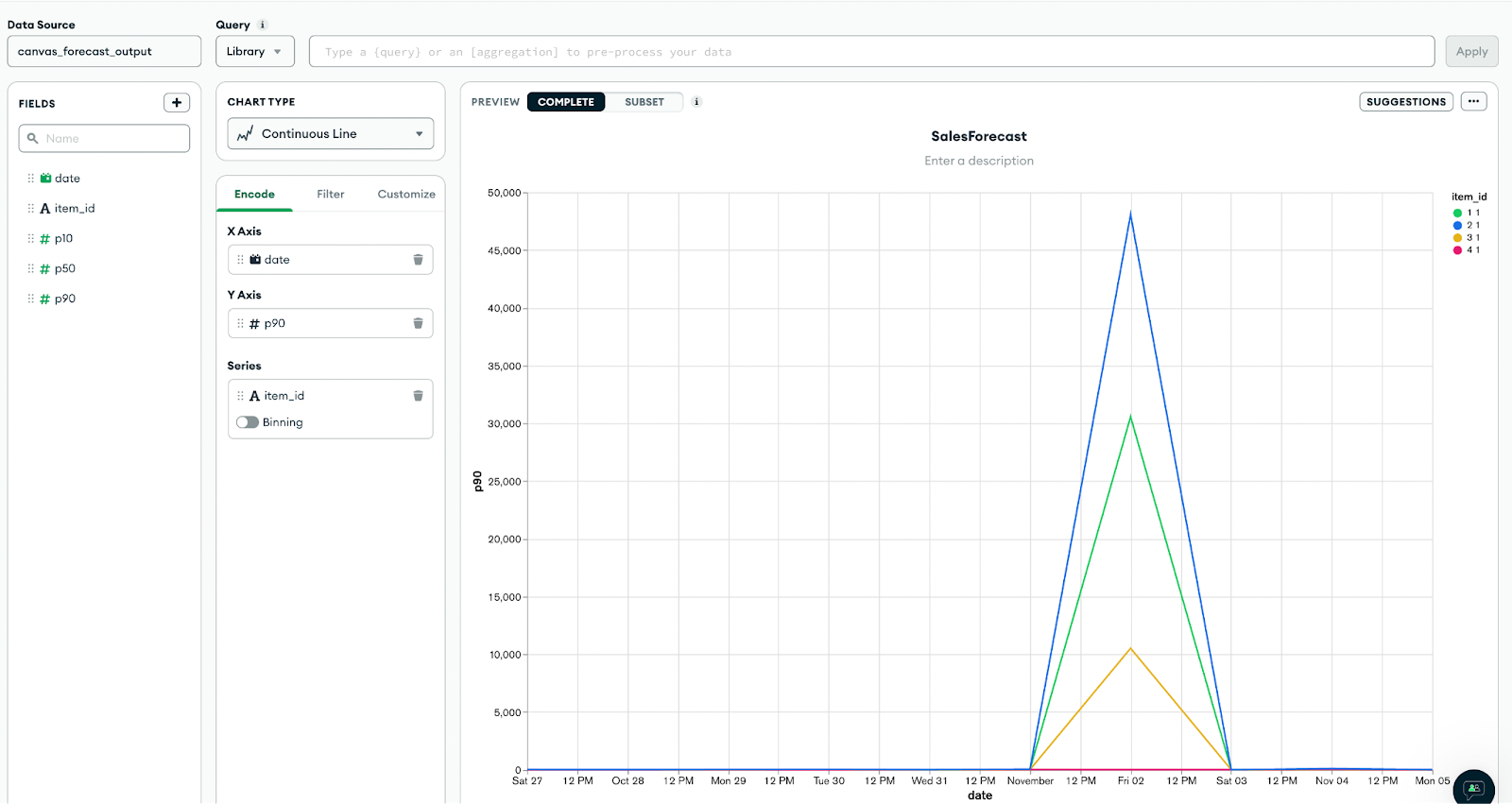

View forecast data in Atlas Charts

To display the forecast data, create MongoDB Atlas Charts based on the federated data (amazon-forecast-data) for the P10, P50, and P90 forecasts, as shown in the table below.
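The P10, P50, and P90 values are quantile forecasts: P50 is the median prediction, and the range from P10 to P90 forms an 80% prediction interval. As a rough sketch of how such rows could be shaped before charting, the following helper computes the interval width for each forecast point. The field names `item_id`, `tm`, `p10`, `p50`, and `p90`, the helper name `withIntervalWidth`, and the sample values are all assumptions for illustration; check the actual column names in the Canvas output before relying on them.

```javascript
// Hypothetical helper: add the width of the P10-P90 interval to each row.
function withIntervalWidth(rows) {
  return rows.map((row) => {
    // Quantiles should be ordered; anything else suggests a mapping mistake.
    if (!(row.p10 <= row.p50 && row.p50 <= row.p90)) {
      throw new Error(`Quantiles out of order for ${row.item_id} at ${row.tm}`);
    }
    return { ...row, intervalWidth: row.p90 - row.p10 };
  });
}

// Made-up rows, only to show the shape of the data.
const rows = withIntervalWidth([
  { item_id: "1 1", tm: "2012-11-02", p10: 10, p50: 15, p90: 25 },
  { item_id: "1 1", tm: "2012-11-09", p10: 12, p50: 16, p90: 22 },
]);
```

A wider interval signals more uncertainty in the forecast for that period, which is useful context when plotting the P50 line alongside the P10 and P90 bounds.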

Clean up

- Delete the MongoDB Atlas cluster

- Delete Atlas Data Federation configuration

- Delete the Atlas Application Service application

- Delete S3 bucket

- Delete the dataset and models from Amazon SageMaker Canvas

- Delete Atlas Charts

- Sign out of Amazon SageMaker Canvas

Conclusion

In this post, we extracted time series data from a MongoDB time series collection, a special collection type optimized for storing and querying time series data. We then used Amazon SageMaker Canvas to train models and generate predictions, and visualized the predictions in Atlas Charts.

For more information, see the following resources.

About the authors

Igor Alekseev is a Senior Partner Solutions Architect at AWS in the data and analytics domain. In his role, Igor works with strategic partners, helping them build complex architectures optimized for AWS. Before joining AWS, as a Data/Solutions Architect, he implemented many projects in the big data domain, including several data lakes in the Hadoop ecosystem. As a data engineer, he was involved in applying AI/ML to fraud detection and office automation.

Babu Srinivasan is a Senior Partner Solutions Architect at MongoDB. In his current role, he works with AWS to create technical integrations and reference architectures for AWS and MongoDB solutions. He has more than two decades of experience in Database and Cloud technologies. He is passionate about providing technical solutions to clients working with multiple global systems integrators (GSIs) across multiple geographies.
