In training large language models (LLMs), effective orchestration and management of compute resources pose a significant challenge. Automating resource provisioning, scaling, and workflow management is vital for optimizing resource usage and streamlining complex workflows, and therefore for achieving efficient deep learning training. Simplified orchestration lets researchers and practitioners focus on model experimentation, hyperparameter tuning, and data analysis rather than on cumbersome infrastructure management. Streamlined orchestration also accelerates innovation, shortens time to market for new models and applications, and ultimately improves the overall efficiency and effectiveness of LLM research and development.

This post explores the integration of AWS Trainium with AWS Batch. It shows how you can combine the machine learning (ML) acceleration of Trainium with the orchestration capabilities of AWS Batch. Trainium provides massive scalability, letting you scale training jobs from small models to LLMs. It also offers cost-effective access to computational power, which makes LLM training more affordable and accessible. AWS Batch is a managed service for batch computing workloads in the AWS Cloud. It handles tasks such as infrastructure management and job scheduling so that you can focus on application development and on analyzing results. AWS Batch provides comprehensive features, including managed batch computing, containerized workloads, custom compute environments, and prioritized job queues, along with integration with other AWS services.

Solution overview

The following diagram illustrates the architecture of the solution.

The training process proceeds as follows:

- The user creates a Docker image configured to fit the demands of the underlying training task.

- The image is pushed to Amazon Elastic Container Registry (Amazon ECR) to prepare it for deployment.

- The user submits the training job to AWS Batch with the Docker image.

Let's dive into this solution to see how you can integrate Trainium with AWS Batch. The following example demonstrates how to train the Llama 2-7B model using AWS Batch with Trainium.

Prerequisites

We recommend against running the following scripts on your local machine. Instead, clone the GitHub repository and run the provided scripts on an x86_64-based instance, preferably a c5.xlarge instance running Linux (for example, Ubuntu). For this post, we ran the example on an Amazon Linux 2023 instance.
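You may want to confirm that the machine you're about to use matches this recommendation. The following minimal Python sketch checks the architecture and operating system with the standard platform module. The function name check_build_host is hypothetical and not part of the repository. One likely reason for the recommendation is that Trn1 instances are x86_64. A Docker image built on an Arm-based machine would therefore not run on them without cross-building.

```python
import platform
from typing import List, Optional


def check_build_host(machine: Optional[str] = None, system: Optional[str] = None) -> List[str]:
    """Return warnings if the host differs from the recommended x86_64 Linux setup.

    By default the current host is inspected; pass values explicitly to test.
    """
    machine = (machine or platform.machine()).lower()
    system = system or platform.system()
    warnings = []
    # Linux reports "x86_64"; Windows reports "AMD64" for the same architecture.
    if machine not in ("x86_64", "amd64"):
        warnings.append(f"expected an x86_64 host, found {machine!r}")
    if system != "Linux":
        warnings.append(f"expected a Linux host, found {system!r}")
    return warnings


if __name__ == "__main__":
    for warning in check_build_host():
        print("warning:", warning)
```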

You should have the following resources and tools before you begin AWS Batch training:

Clone the repository

Clone the GitHub repository and navigate to the required directory:

Update settings

First, update the config.txt file to specify values for the following variables:

After providing these values, your config.txt file should look similar to the following code:
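Several of the later scripts read settings from config.txt, such as the S3 locations and ECR_REPO. A quick validation pass can catch typos before any AWS resources are created. The following Python is a minimal sketch and is not part of the repository. It assumes config.txt holds shell-style KEY=value lines, possibly prefixed with export, and it checks only the two variables this post names: ECR_REPO and CHECKPOINT_SAVE_URI. The function names parse_config and validate_config are hypothetical.

```python
from __future__ import annotations

from pathlib import Path

# Only the variables named elsewhere in this post; the repository's
# config.txt defines the full set, which is not reproduced here.
KNOWN_REQUIRED_KEYS = ("ECR_REPO", "CHECKPOINT_SAVE_URI")


def parse_config(text: str) -> dict[str, str]:
    """Parse shell-style KEY=value lines, skipping blank lines and # comments."""
    config: dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        if line.startswith("export "):
            line = line[len("export "):].strip()
        key, sep, value = line.partition("=")
        if not sep:
            raise ValueError(f"not a KEY=value line: {raw!r}")
        value = value.strip()
        # Remove one pair of matching surrounding quotes, as a shell would.
        if len(value) >= 2 and value[0] == value[-1] and value[0] in "'\"":
            value = value[1:-1]
        config[key.strip()] = value
    return config


def validate_config(config: dict[str, str]) -> list[str]:
    """Return a list of human-readable problems (empty if none were found)."""
    problems = [f"missing or empty {key}" for key in KNOWN_REQUIRED_KEYS if not config.get(key)]
    uri = config.get("CHECKPOINT_SAVE_URI", "")
    if uri and not uri.startswith("s3://"):
        problems.append("CHECKPOINT_SAVE_URI should be an s3:// URI")
    return problems


if __name__ == "__main__":
    path = Path("config.txt")
    if path.is_file():
        for problem in validate_config(parse_config(path.read_text())):
            print("config problem:", problem)
```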

Get the Llama tokenizer

To tokenize the dataset, you need the Llama tokenizer from Hugging Face. Follow the instructions to request access to the Llama tokenizer (you must acknowledge and accept the license terms). After you have been granted access, you can download the tokenizer from Hugging Face. After a successful download, place the tokenizer.model file in the root directory (llama2).
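Before you run setup.sh, a quick check that the expected files are in the llama2 root directory can save a failed run later. The following Python is a minimal sketch. Its file list is assembled from names mentioned in this post, and it assumes the scripts sit next to config.txt. The actual repository layout may include more files or place them differently. missing_files is a hypothetical helper.

```python
from __future__ import annotations

from pathlib import Path

# File names taken from this post; assumed (not confirmed) to live in the
# llama2 root directory alongside tokenizer.model.
EXPECTED_FILES = (
    "config.txt",
    "tokenizer.model",
    "setup.sh",
    "download_and_tokenize_data.sh",
    "create_resources.sh",
    "build_and_push_docker_image.sh",
    "submit_batch_job.sh",
    "cleanup.sh",
)


def missing_files(root: str | Path) -> list[str]:
    """Return the expected file names that are not regular files under root."""
    root = Path(root)
    return [name for name in EXPECTED_FILES if not (root / name).is_file()]


if __name__ == "__main__":
    missing = missing_files(".")
    print("all expected files present" if not missing else f"missing: {missing}")
```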

Set up Llama training

Run the setup.sh script, which streamlines the preliminary steps for starting AWS Batch training. This script downloads the Python files needed to train the Llama 2-7B model. It also performs environment variable substitution in the provided templates and scripts that create the AWS Batch resources. After it runs, your directory structure should match the following:

Tokenize the dataset

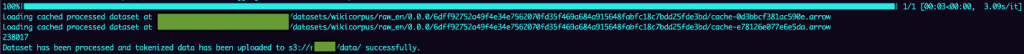

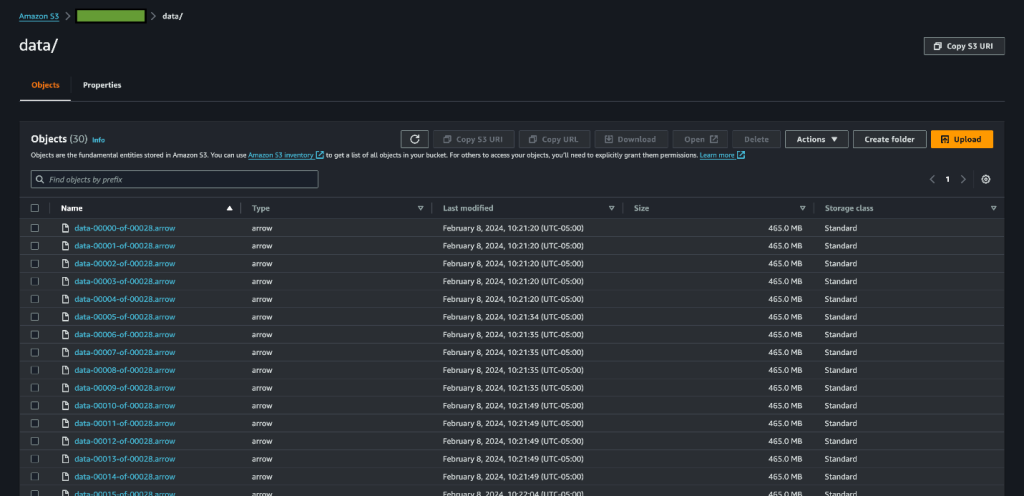

Next, run the download_and_tokenize_data.sh script to complete the data preprocessing steps for training Llama 2-7B. In this case, we use the wikicorpus dataset from Hugging Face. After retrieving the dataset, the script tokenizes it and uploads the tokenized dataset to the S3 location specified in the config.txt configuration file. The following screenshots show the preprocessing results.
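Preprocessing for causal language model pretraining commonly concatenates tokenized documents and cuts the resulting stream into fixed-length training sequences. The following Python sketches that idea under stated assumptions. It is not the code in download_and_tokenize_data.sh, which may handle document boundaries, padding, or leftover tokens differently. The toy whitespace tokenizer stands in for the Llama tokenizer, and pack_into_blocks is a hypothetical name.

```python
from __future__ import annotations

from typing import Callable, Iterable


def pack_into_blocks(
    texts: Iterable[str],
    tokenize: Callable[[str], list[int]],
    block_size: int,
    eos_id: int | None = None,
) -> list[list[int]]:
    """Concatenate tokenized documents and split the stream into equal-length blocks.

    Trailing tokens that do not fill a whole block are dropped.
    """
    if block_size <= 0:
        raise ValueError("block_size must be positive")
    stream: list[int] = []
    for text in texts:
        stream.extend(tokenize(text))
        if eos_id is not None:
            stream.append(eos_id)  # mark the document boundary
    usable = len(stream) - len(stream) % block_size
    return [stream[i:i + block_size] for i in range(0, usable, block_size)]


def toy_tokenizer() -> Callable[[str], list[int]]:
    """Illustration only: assign each new whitespace-separated word the next integer id."""
    vocab: dict[str, int] = {}

    def tokenize(text: str) -> list[int]:
        return [vocab.setdefault(word, len(vocab)) for word in text.split()]

    return tokenize


blocks = pack_into_blocks(["a b c", "d e", "f g h i"], toy_tokenizer(), block_size=4, eos_id=99)
```

Dropping the partial final block keeps every sequence the same length and avoids padding. The cost is that at most block_size - 1 tokens are discarded.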

Provision resources

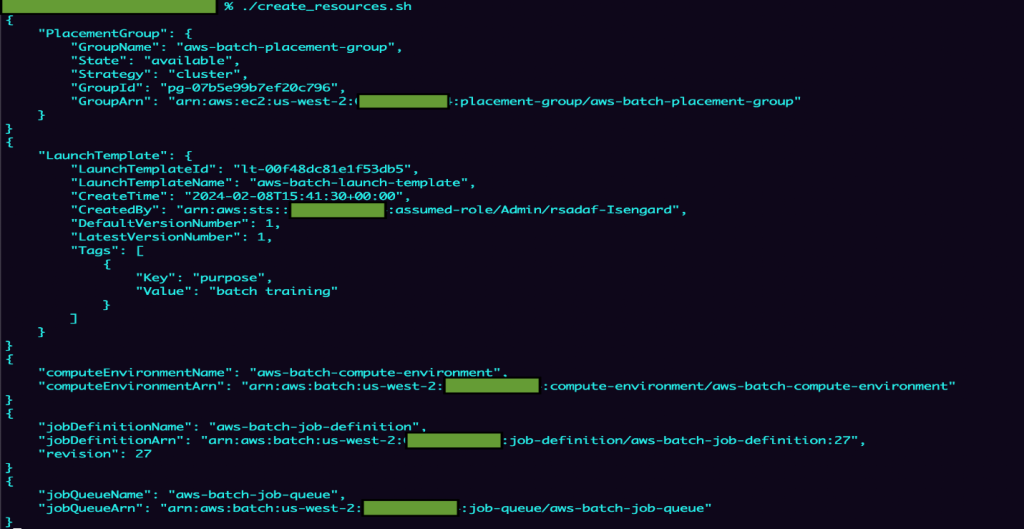

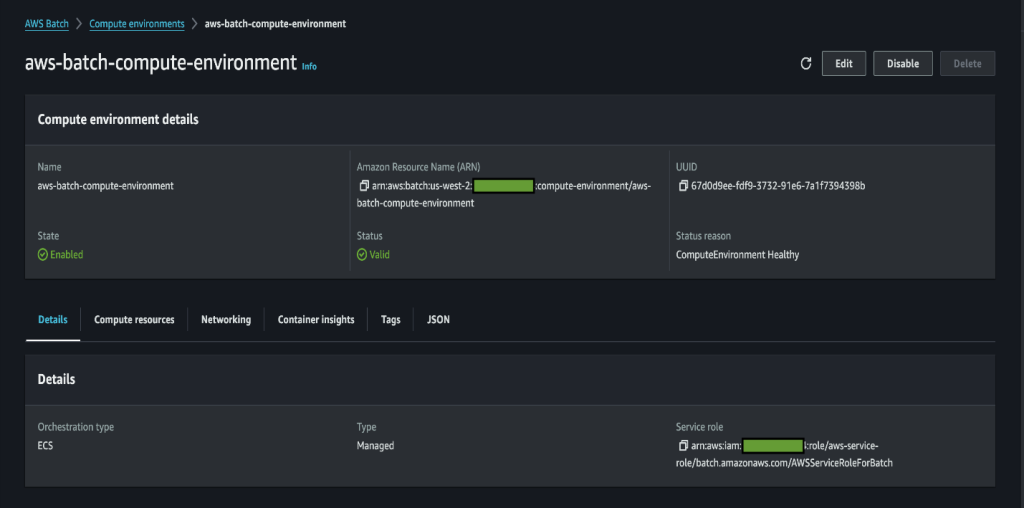

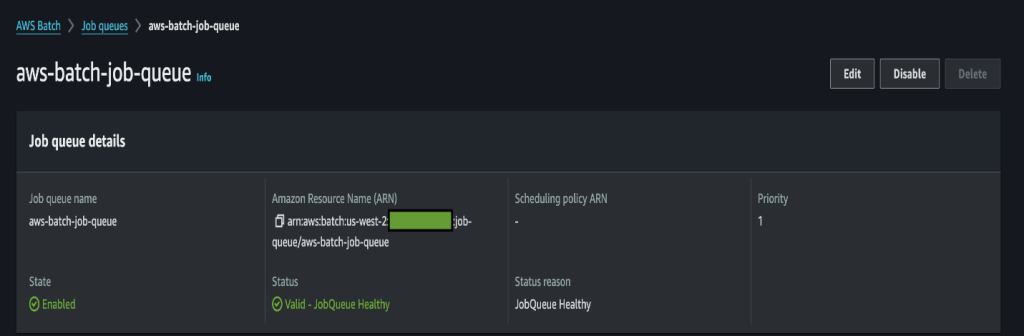

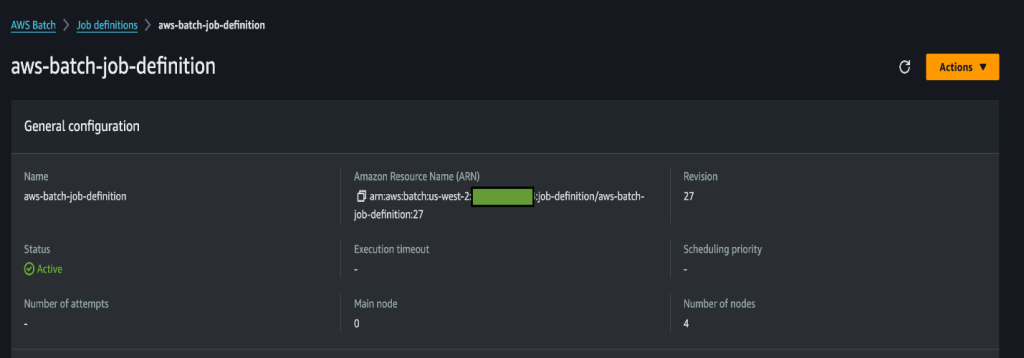

Next, run the create_resources.sh script, which orchestrates the provisioning of the resources needed for the training job. This includes creating a placement group, a launch template, a compute environment, a job queue, and a job definition. The following screenshots illustrate this process.

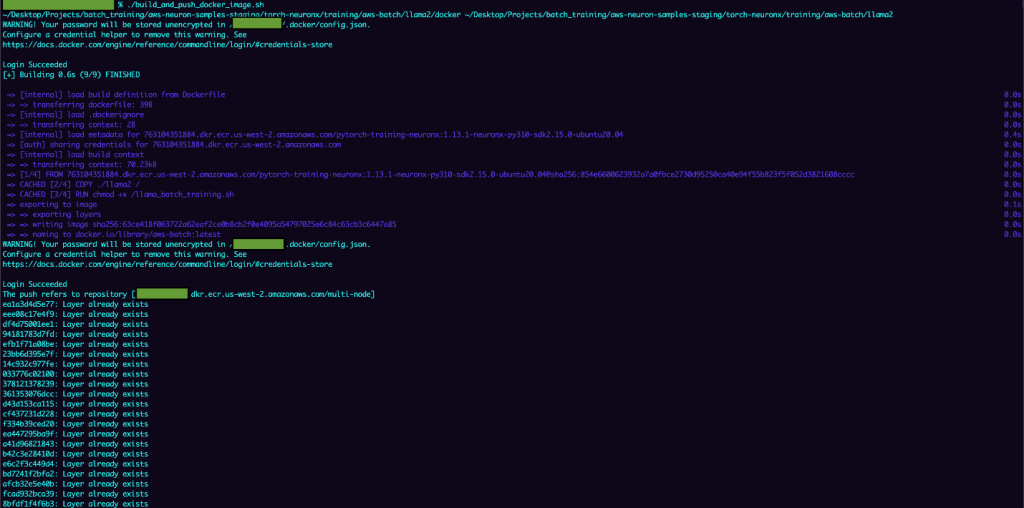

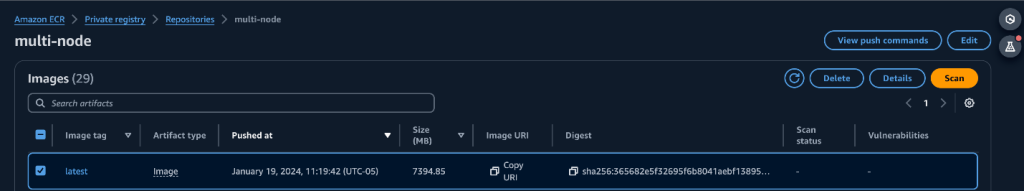
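The order in which these resources can be created follows from how they reference one another. A compute environment can point to a placement group and a launch template, and a job queue points to a compute environment. The following Python sketch encodes those references as a dependency graph and derives a valid creation order with the standard graphlib module (Python 3.9 or later). The graph reflects our reading of the AWS Batch resource model, not the actual logic of create_resources.sh.

```python
from graphlib import TopologicalSorter

# Each resource maps to the set of resources it references and therefore must
# be created after. This reflects the AWS Batch resource model as we read it.
DEPENDENCIES: dict[str, set[str]] = {
    "placement_group": set(),
    "launch_template": set(),
    # A managed compute environment's compute resources can name a placement
    # group and a launch template.
    "compute_environment": {"placement_group", "launch_template"},
    # A job queue routes jobs to one or more compute environments.
    "job_queue": {"compute_environment"},
    # A job definition names a container image, not a queue or environment.
    "job_definition": set(),
}


def creation_order(dependencies: dict[str, set[str]]) -> list[str]:
    """Return an order in which every resource follows everything it references."""
    return list(TopologicalSorter(dependencies).static_order())


order = creation_order(DEPENDENCIES)
print(" -> ".join(order))
```

Reversing any valid creation order gives a deletion order that removes dependents before the resources they reference. That is the same concern a cleanup script has to handle.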

Build and push the Docker image

Now you can run the build_and_push_docker_image.sh script, which builds a custom Docker container image for your training job. The script starts from a Deep Learning Container image published by the Neuron team, which contains the required software stack. It then adds instructions for running Llama 2-7B training on top. The training script uses the neuronx_distributed library with tensor parallelism together with the ZeRO-1 optimizer. Finally, the new Docker image is pushed to the ECR repository specified by the ECR_REPO variable in the config.txt configuration file.

If you want to modify any of the Llama training hyperparameters, make the changes in ./docker/llama_batch_training.sh before running build_and_push_docker_image.sh.
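Running the numbers for the default cluster shows how tensor parallelism and ZeRO-1 divide the work. A trn1.32xlarge instance has 16 Trainium chips with 2 NeuronCores each, so 4 instances provide 128 NeuronCores. The following Python sketch makes four assumptions:

- one training process runs per NeuronCore
- the tensor-parallel degree is 8
- the model has a nominal 7 billion parameters
- optimizer state takes 8 bytes per parameter (two fp32 Adam moments)

These values are illustrative and are not taken from llama_batch_training.sh. The estimate also ignores weights, gradients, activations, and fp32 master copies.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ParallelLayout:
    world_size: int       # total workers, assumed to be one per NeuronCore
    tensor_parallel: int  # workers that jointly hold one copy of the model
    data_parallel: int    # number of model replicas


def make_layout(num_nodes: int, cores_per_node: int, tensor_parallel: int) -> ParallelLayout:
    world_size = num_nodes * cores_per_node
    if world_size % tensor_parallel:
        raise ValueError("world size must be divisible by the tensor-parallel degree")
    return ParallelLayout(world_size, tensor_parallel, world_size // tensor_parallel)


def zero1_optimizer_bytes_per_worker(num_params: int, layout: ParallelLayout, bytes_per_param: int = 8) -> int:
    """Rough optimizer-state bytes per worker under tensor parallelism plus ZeRO-1.

    Tensor parallelism splits parameters across `tensor_parallel` workers, and
    ZeRO-1 further partitions each shard's optimizer state across the
    data-parallel replicas. Parameters that are replicated rather than
    tensor-parallel sharded (for example, norms) are ignored.
    """
    shards = layout.tensor_parallel * layout.data_parallel
    return (num_params // shards) * bytes_per_param


# Assumed values: 4 trn1.32xlarge nodes x 32 NeuronCores, TP degree 8, 7e9 params.
layout = make_layout(num_nodes=4, cores_per_node=32, tensor_parallel=8)
per_worker = zero1_optimizer_bytes_per_worker(7_000_000_000, layout)
print(layout, f"{per_worker / 1e9:.2f} GB of optimizer state per worker")
```

In this layout the data-parallel degree is 16. ZeRO-1 therefore cuts each worker's optimizer state to one sixteenth of what it would be if every replica kept a full copy for its tensor-parallel shard.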

The following screenshots illustrate the process of building and pushing the Docker image.

Submit the training job

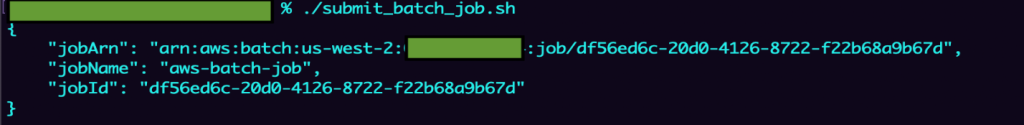

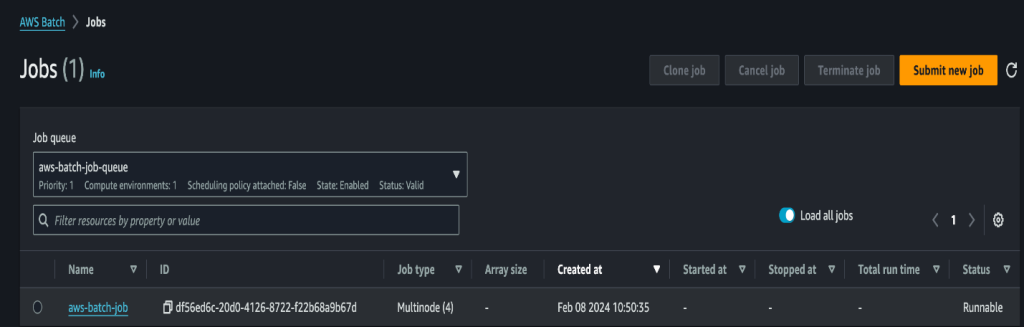

Run the submit_batch_job.sh script to submit the AWS Batch job and start training the Llama 2 model, as shown in the following screenshots.

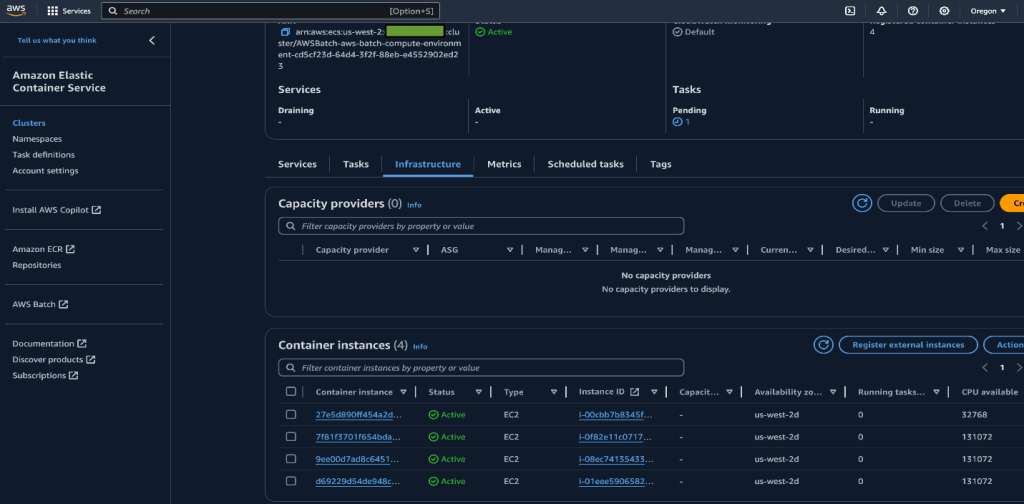

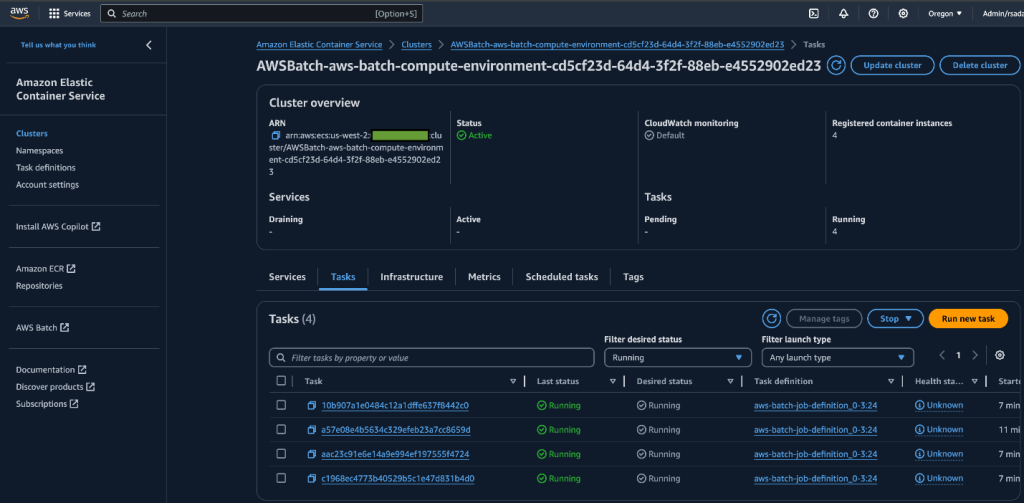

After the batch job is submitted, AWS Batch dynamically provisions an Amazon Elastic Container Service (Amazon ECS) cluster. When the cluster is operational, you can navigate to it to monitor all tasks actively running on the trn1.32xlarge instances launched for this job. By default, this example is configured to use 4 trn1.32xlarge instances. To customize this setting, modify the numNodes parameter in the submit_batch_job.sh script.

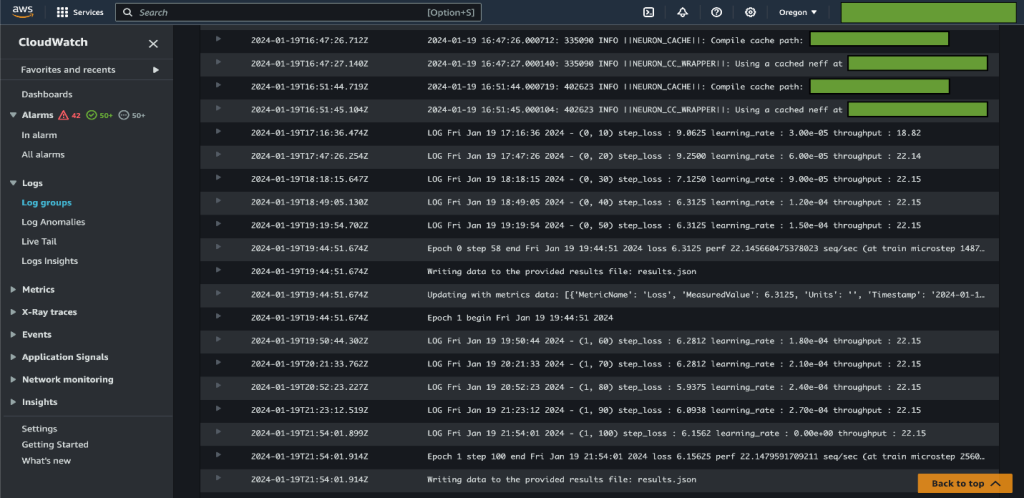
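The numNodes setting presumably maps to the node count of an AWS Batch multi-node parallel job. The following Python sketches what a submission with a different node count might look like through the AWS Batch SubmitJob API. It builds the request parameters, using nodeOverrides.numNodes to override the count from the job definition, and computes the resulting accelerator totals. The job, queue, and job definition names are hypothetical placeholders. This is not how submit_batch_job.sh is implemented.

```python
from __future__ import annotations

TRAINIUM_CHIPS_PER_TRN1_32XLARGE = 16
NEURON_CORES_PER_TRAINIUM_CHIP = 2


def build_submit_job_request(job_name: str, job_queue: str, job_definition: str, num_nodes: int) -> dict:
    """Build keyword arguments for the AWS Batch SubmitJob API.

    nodeOverrides.numNodes overrides the node count defined in a multi-node
    parallel job definition for this submission only.
    """
    if num_nodes < 1:
        raise ValueError("num_nodes must be at least 1")
    return {
        "jobName": job_name,
        "jobQueue": job_queue,
        "jobDefinition": job_definition,
        "nodeOverrides": {"numNodes": num_nodes},
    }


def accelerator_totals(num_nodes: int) -> tuple[int, int]:
    """Return (Trainium chips, NeuronCores) for num_nodes trn1.32xlarge instances."""
    chips = num_nodes * TRAINIUM_CHIPS_PER_TRN1_32XLARGE
    return chips, chips * NEURON_CORES_PER_TRAINIUM_CHIP


# Hypothetical names; real values come from your own resources.
request = build_submit_job_request("llama2-7b-train", "trn1-job-queue", "llama2-7b-jobdef", num_nodes=4)
# With boto3 installed and credentials configured, this could be sent as:
#   boto3.client("batch").submit_job(**request)
```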

Logging and monitoring

After job submission, you can use Amazon CloudWatch Logs for comprehensive monitoring, storage, and visualization of all logs generated by AWS Batch. Complete the following steps to access the logs:

- In the CloudWatch console, choose Log groups under Logs in the navigation pane.

- Choose /aws/batch/job to view batch job logs.

- Search for log groups that match AWS Batch job names or definitions.

- Choose the job to view its details.

The following screenshot shows an example.

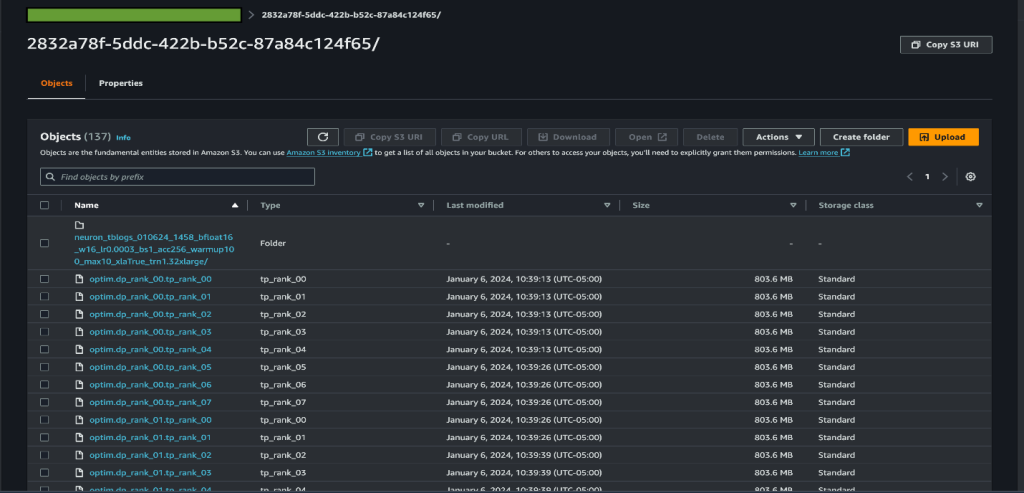
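By default, AWS Batch writes job output to the /aws/batch/job log group. Log stream names there take the form jobDefinitionName/default/ecsTaskId. Check the AWS Batch documentation if your job definition configures a custom log driver or log group. The following Python sketch filters a list of stream names down to one job definition. streams_for_job_definition and the example names are hypothetical. The commented boto3 call shows how the same prefix could be passed to the CloudWatch Logs DescribeLogStreams API.

```python
from __future__ import annotations

DEFAULT_LOG_GROUP = "/aws/batch/job"


def streams_for_job_definition(stream_names: list[str], job_definition_name: str) -> list[str]:
    """Keep only streams named <jobDefinitionName>/default/<taskId>, sorted."""
    prefix = f"{job_definition_name}/default/"
    return sorted(name for name in stream_names if name.startswith(prefix))


# With boto3 installed and credentials configured, CloudWatch Logs can apply
# the same prefix server side (results are paginated via nextToken):
#   boto3.client("logs").describe_log_streams(
#       logGroupName=DEFAULT_LOG_GROUP,
#       logStreamNamePrefix="llama2-7b-jobdef/default/",
#   )

example = streams_for_job_definition(
    [
        "llama2-7b-jobdef/default/b2c3",
        "other-jobdef/default/ffff",
        "llama2-7b-jobdef/default/a1b2",
        "llama2-7b-jobdef-old/default/9999",
    ],
    "llama2-7b-jobdef",
)
```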

Checkpoints

Checkpoints generated during training are stored in the S3 location specified as CHECKPOINT_SAVE_URI in the config.txt file. By default, the checkpoint is saved when training completes. However, you can change this behavior to save a checkpoint every N steps within the training loop. For detailed instructions on this customization, see Checkpoints.
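The checkpoint cadence described above amounts to a simple rule: always save at the final step, and optionally save every N steps. The following Python sketch expresses that rule and splits CHECKPOINT_SAVE_URI into a bucket and a key prefix with the standard urllib.parse module. It assumes steps are counted from 1. The step_<n> folder naming and both function names are hypothetical, and the training script's actual options and layout may differ.

```python
from __future__ import annotations

from urllib.parse import urlparse


def should_save_checkpoint(step: int, total_steps: int, every_n: int | None = None) -> bool:
    """Save at the final step, and additionally every `every_n` steps if set."""
    if step == total_steps:
        return True
    return every_n is not None and every_n > 0 and step % every_n == 0


def checkpoint_location(save_uri: str, step: int) -> tuple[str, str]:
    """Split an s3://bucket/prefix URI into (bucket, key prefix) for one step."""
    parsed = urlparse(save_uri)
    if parsed.scheme != "s3" or not parsed.netloc:
        raise ValueError(f"expected s3://bucket/prefix, got {save_uri!r}")
    prefix = parsed.path.strip("/")
    key = f"{prefix}/step_{step}" if prefix else f"step_{step}"
    return parsed.netloc, key
```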

Clean up

When you're done, run the cleanup.sh script to delete the resources created in this post. This script removes components such as the launch template, placement group, job definition, job queue, and compute environment. AWS Batch automatically handles cleanup of the ECS stack and Trainium instances, so you don't need to manually delete or stop them.
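AWS Batch enforces an order when deleting these resources. A job queue must be disabled before it can be deleted. A compute environment must be disabled and no longer associated with any job queue. Job definitions are deregistered rather than deleted. The following Python sketch builds such a teardown plan as (service, boto3 method, arguments) steps. It illustrates the ordering only and is not the contents of cleanup.sh. All resource names are hypothetical. These deletions complete asynchronously, so a real script would also wait for each resource to finish deleting before moving on.

```python
from __future__ import annotations


def teardown_plan(job_queue: str, compute_environment: str, job_definition: str,
                  launch_template: str, placement_group: str) -> list[tuple[str, str, dict]]:
    """Return (service, boto3 method name, keyword arguments) in a safe order.

    job_definition should be "name:revision" or an ARN, as DeregisterJobDefinition expects.
    """
    return [
        # A job queue must be DISABLED before it can be deleted.
        ("batch", "update_job_queue", {"jobQueue": job_queue, "state": "DISABLED"}),
        ("batch", "delete_job_queue", {"jobQueue": job_queue}),
        # A compute environment must be DISABLED and detached from all queues.
        ("batch", "update_compute_environment", {"computeEnvironment": compute_environment, "state": "DISABLED"}),
        ("batch", "delete_compute_environment", {"computeEnvironment": compute_environment}),
        # Job definitions are deregistered, not deleted.
        ("batch", "deregister_job_definition", {"jobDefinition": job_definition}),
        ("ec2", "delete_launch_template", {"LaunchTemplateName": launch_template}),
        ("ec2", "delete_placement_group", {"GroupName": placement_group}),
    ]


# Hypothetical resource names.
plan = teardown_plan("trn1-job-queue", "trn1-compute-env", "llama2-7b-jobdef:1",
                     "trn1-launch-template", "trn1-placement-group")

# A real runner would call each step and wait for deletions to finish, e.g.:
#   clients = {"batch": boto3.client("batch"), "ec2": boto3.client("ec2")}
#   for service, method, kwargs in plan:
#       getattr(clients[service], method)(**kwargs)
#       # ...poll describe_job_queues / describe_compute_environments here
```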

Conclusion

The integration of Trainium with AWS Batch represents a significant advancement in ML training. Combining Trainium with the orchestration capabilities of AWS Batch benefits you in several ways. First, you gain massive scalability, with the ability to scale training jobs from small models to LLMs. Trainium instances offer up to 16 Trainium chips per instance and the potential for distributed training across tens of thousands of accelerators, so they can handle even the most demanding training tasks. Second, Trainium offers a cost-effective way to get the compute power you need. Third, AWS Batch is a fully managed service for compute workloads. You can offload operational complexity such as infrastructure provisioning and job scheduling and focus on building applications and analyzing results. Ultimately, integrating Trainium with AWS Batch lets you accelerate innovation, shorten time to market for new models and applications, and improve the overall efficiency and effectiveness of your ML efforts.

Now that you've learned how to orchestrate Trainium using AWS Batch, we encourage you to try it for your next deep learning training job. You can explore more tutorials to gain hands-on experience with AWS Batch and Trainium and to manage your deep learning workloads and training resources for better performance and cost-efficiency. Start exploring these tutorials today and take your deep learning training to the next level with Trainium and AWS Batch.

About the authors

Scott Perry is a solutions architect on the Annapurna ML accelerator team at AWS. Based in Canada, he helps customers deploy and optimize deep learning training and inference workloads using AWS Inferentia and AWS Trainium. His interests include large language models, deep reinforcement learning, IoT, and genomics.

Sadaf Rasool is a machine learning engineer on the Annapurna ML Accelerator team at AWS. As an enthusiastic and optimistic AI/ML professional, he remains steadfast in the belief that the ethical and responsible application of AI has the potential to improve society for years to come, fostering both economic growth and social well-being.
