Image by author

New open source models like LLaMA 2 have become quite advanced and are free to use. You can use them commercially or fine-tune them with your own data to develop specialized versions. They are also easy to set up, so you can now run them locally on your own device.

In this post, we will learn how to download the necessary files and the LLaMA 2 model to run the CLI program and interact with an AI assistant. The setup is simple enough that even non-technical users or students can follow along with a few basic steps.

The simplest way to install llama.cpp locally is to download the pre-built executables from the llama.cpp releases page on GitHub.

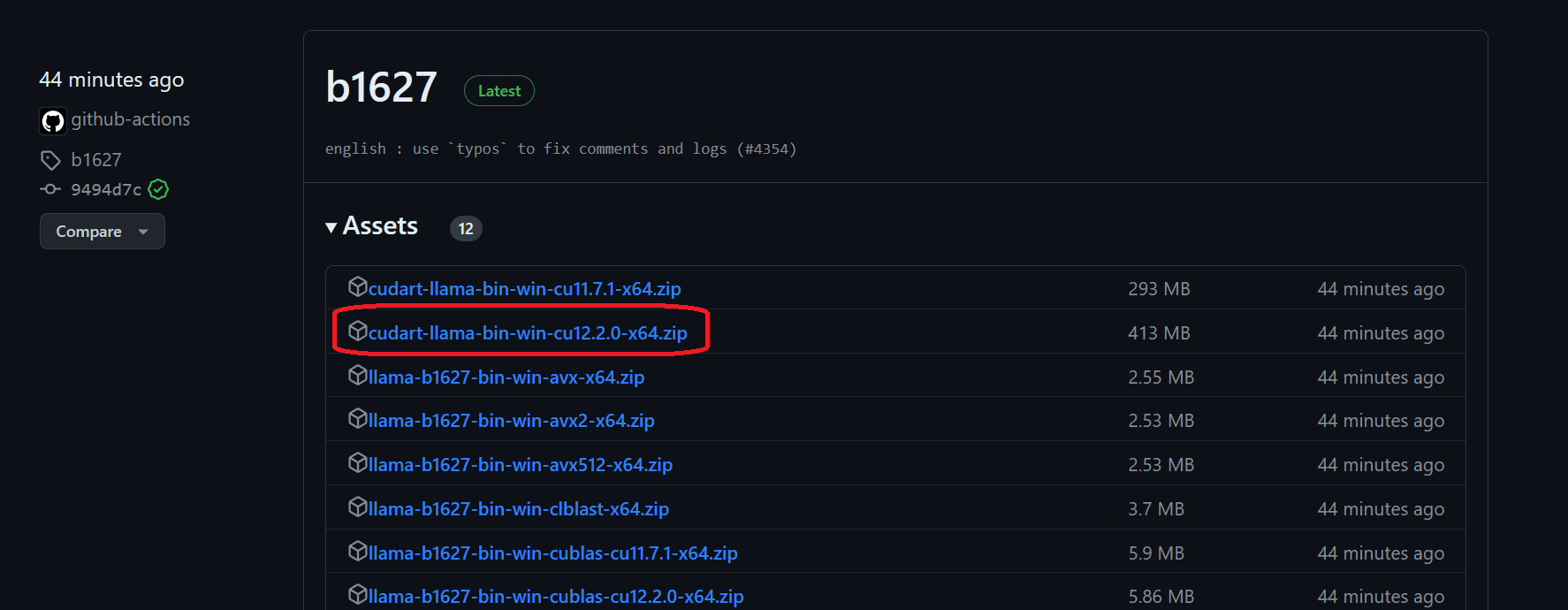

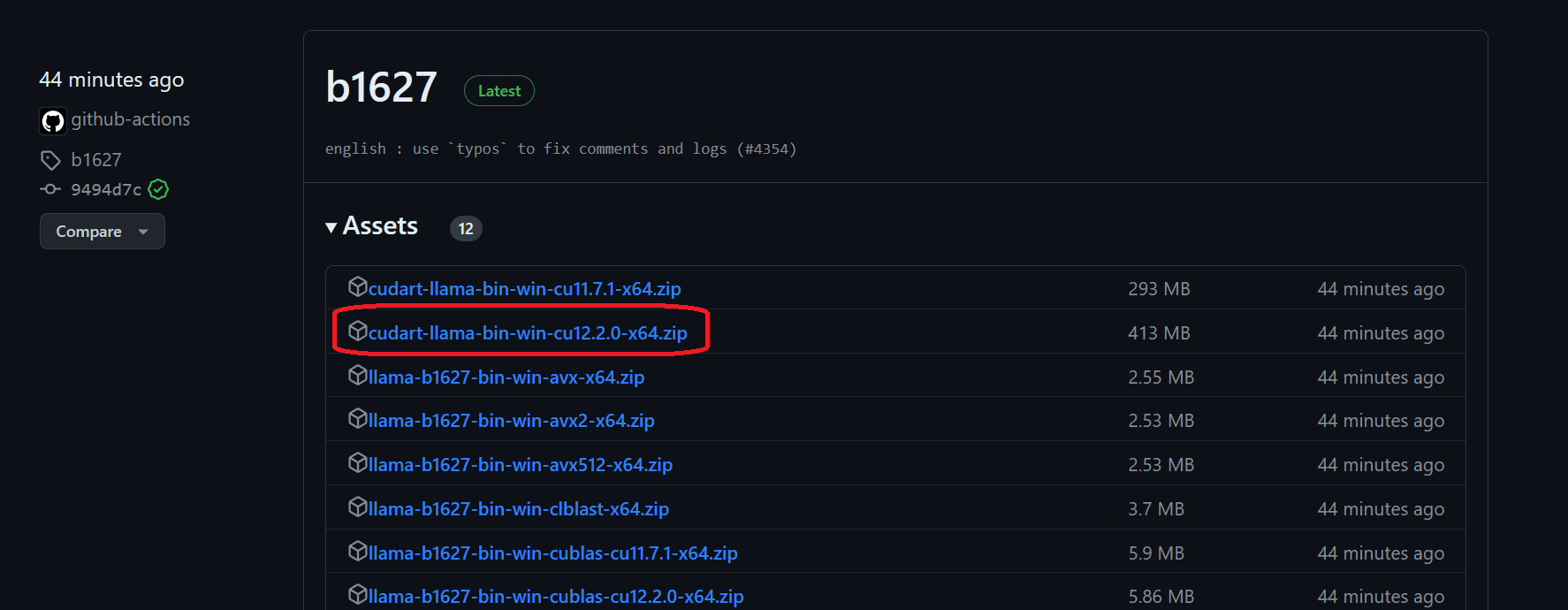

To install it on Windows 11 with an NVIDIA GPU, first download the `llama-master-eb542d3-bin-win-cublas-(version)-x64.zip` archive. After downloading, extract it to a directory of your choice. We recommend creating a new folder and extracting all the files into it.
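If you prefer to script the extraction, the following Python sketch uses the standard-library `zipfile` module. The helper name `extract_archives` is our own (not part of llama.cpp), and it takes a list of archives so the CUDA runtime archive downloaded in the next step can be extracted into the same folder.

```python
import zipfile
from pathlib import Path
from typing import Iterable


def extract_archives(archives: Iterable[Path], target_dir: Path) -> list[str]:
    """Extract every zip archive into target_dir and return the sorted file names."""
    target_dir.mkdir(parents=True, exist_ok=True)  # create the main directory if needed
    for archive in archives:
        with zipfile.ZipFile(archive) as zf:
            zf.extractall(target_dir)  # all archives share the same main directory
    # List only the top-level files that now sit in the main directory.
    return sorted(p.name for p in target_dir.iterdir() if p.is_file())
```

For example, `extract_archives([Path("llama-master-eb542d3-bin-win-cublas-(version)-x64.zip")], Path("llama_cpp"))` would extract the binaries into a `llama_cpp` folder. The folder name is a hypothetical choice, and `(version)` must be replaced with the CUDA tag of the file you actually downloaded.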

Next, download the CUDA runtime and cuBLAS libraries, `cudart-llama-bin-win-(version)-x64.zip`, and extract them into the same main directory. For GPU acceleration, you have two options: cuBLAS for NVIDIA GPUs and CLBlast for AMD GPUs.

Note: The `(version)` in these file names is the version of CUDA installed on your local system. You can check it by running `nvcc --version` in the terminal.
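To check the version from a script instead, the sketch below runs `nvcc --version` and extracts the `release X.Y` part of its output. It assumes the CUDA Toolkit is installed and `nvcc` is on your `PATH`; the function names are hypothetical. You would then match the printed major.minor version against the `cu` tag in the archive names.

```python
import re
import shutil
import subprocess
from typing import Optional


def parse_cuda_release(nvcc_output: str) -> Optional[str]:
    """Extract the 'major.minor' CUDA release from `nvcc --version` output."""
    match = re.search(r"release (\d+)\.(\d+)", nvcc_output)
    if match is None:
        return None
    return f"{match.group(1)}.{match.group(2)}"


def local_cuda_release() -> Optional[str]:
    """Run nvcc if it is on PATH and return the CUDA release, else None."""
    nvcc = shutil.which("nvcc")
    if nvcc is None:
        return None  # CUDA Toolkit not installed or not on PATH
    result = subprocess.run([nvcc, "--version"], capture_output=True, text=True, check=False)
    return parse_cuda_release(result.stdout)


if __name__ == "__main__":
    release = local_cuda_release()
    if release is None:
        print("nvcc not found; install the CUDA Toolkit or check your PATH.")
    else:
        print(f"CUDA {release}: pick the archives whose 'cu' tag matches {release}")
```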

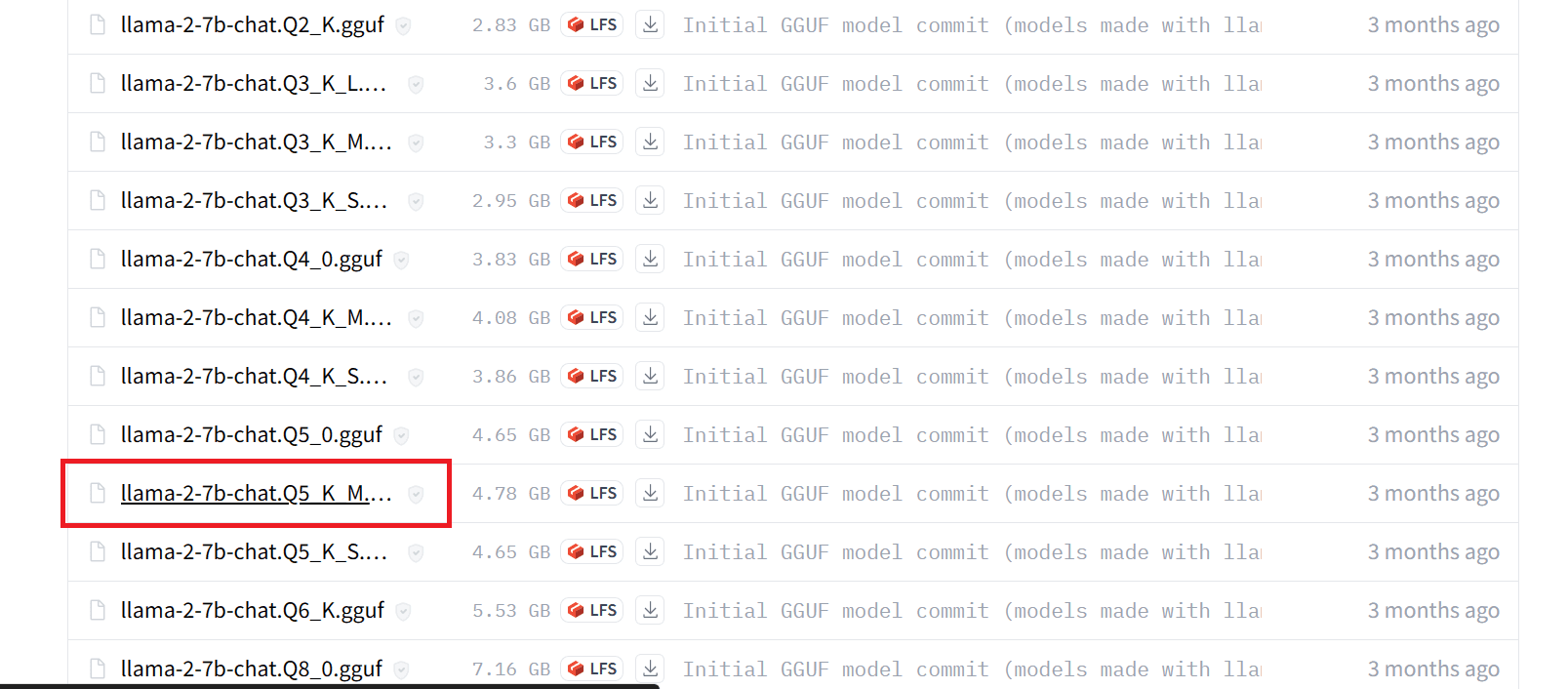

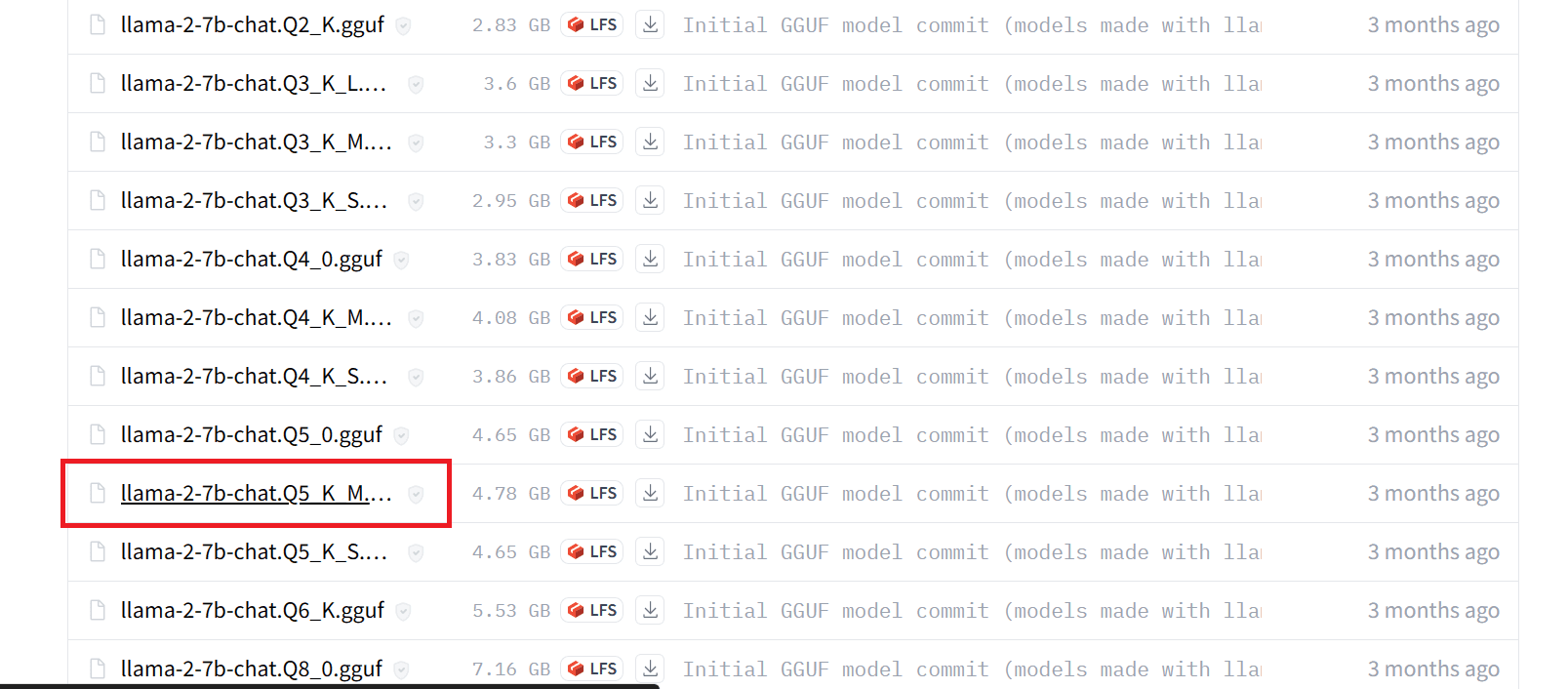

To get started, create a folder called “Models” in the main directory, and inside it create a new folder called “llama2_7b”. Next, download the LLaMA 2 model file from the Hugging Face Hub. You can choose whichever version you prefer, but for this guide we will download the `llama-2-7b-chat.Q5_K_M.gguf` file. Once the download is complete, move the file into the “llama2_7b” folder you just created.
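The folder setup and file move can also be scripted. This minimal sketch assumes the model file has already been downloaded to some local path; `install_model` is a hypothetical helper that creates `Models/llama2_7b` under the main directory and moves the file there.

```python
import shutil
from pathlib import Path

MODEL_FILE = "llama-2-7b-chat.Q5_K_M.gguf"  # the file used in this guide


def install_model(downloaded: Path, main_dir: Path) -> Path:
    """Create Models/llama2_7b under main_dir and move the model file into it."""
    model_dir = main_dir / "Models" / "llama2_7b"
    model_dir.mkdir(parents=True, exist_ok=True)  # no error if the folders already exist
    destination = model_dir / downloaded.name
    shutil.move(str(downloaded), str(destination))  # move, not copy, to save disk space
    return destination
```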

Note: To avoid errors, make sure you download a `.gguf` model file before running the model.
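One way to catch a bad download before launching llama.cpp is to look at the file's first bytes: according to the GGUF specification, every GGUF file starts with the four ASCII bytes `GGUF`. The sketch below, built around the hypothetical helper `looks_like_gguf`, checks both the extension and that magic value. It does not validate the rest of the file, so treat a passing result as a quick sanity check only (for example, it would flag a saved HTML page renamed to `.gguf`).

```python
from pathlib import Path

GGUF_MAGIC = b"GGUF"  # first four bytes of every GGUF file, per the GGUF spec


def looks_like_gguf(path: Path) -> bool:
    """Return True if the file has a .gguf extension and starts with the GGUF magic."""
    if path.suffix.lower() != ".gguf":
        return False
    with path.open("rb") as f:
        return f.read(4) == GGUF_MAGIC  # shorter files simply fail the comparison
```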

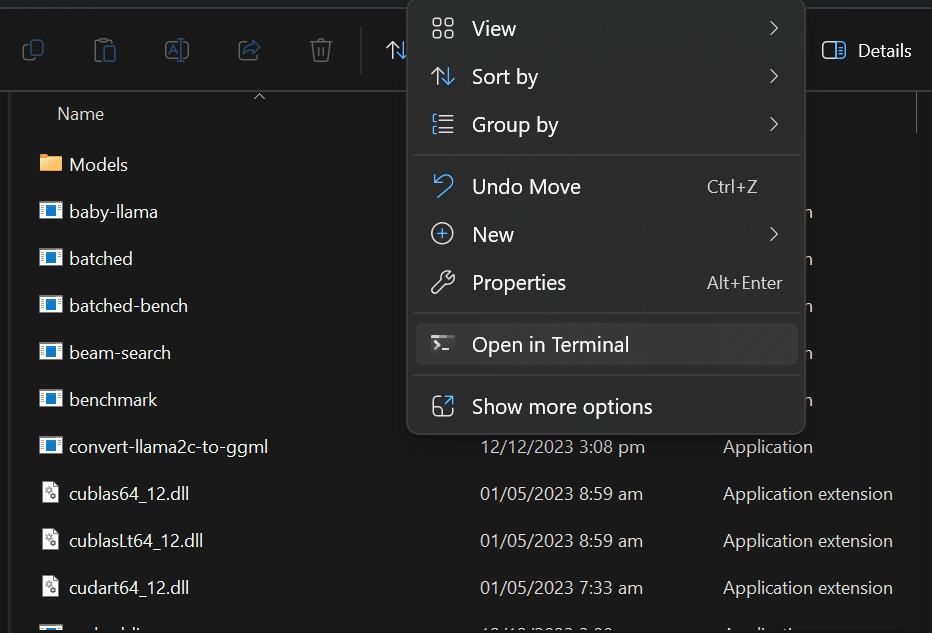

Now open a terminal in the main directory by right-clicking inside the folder and selecting the “Open in Terminal” option. Alternatively, open PowerShell and use the `cd` command to change to the main directory.

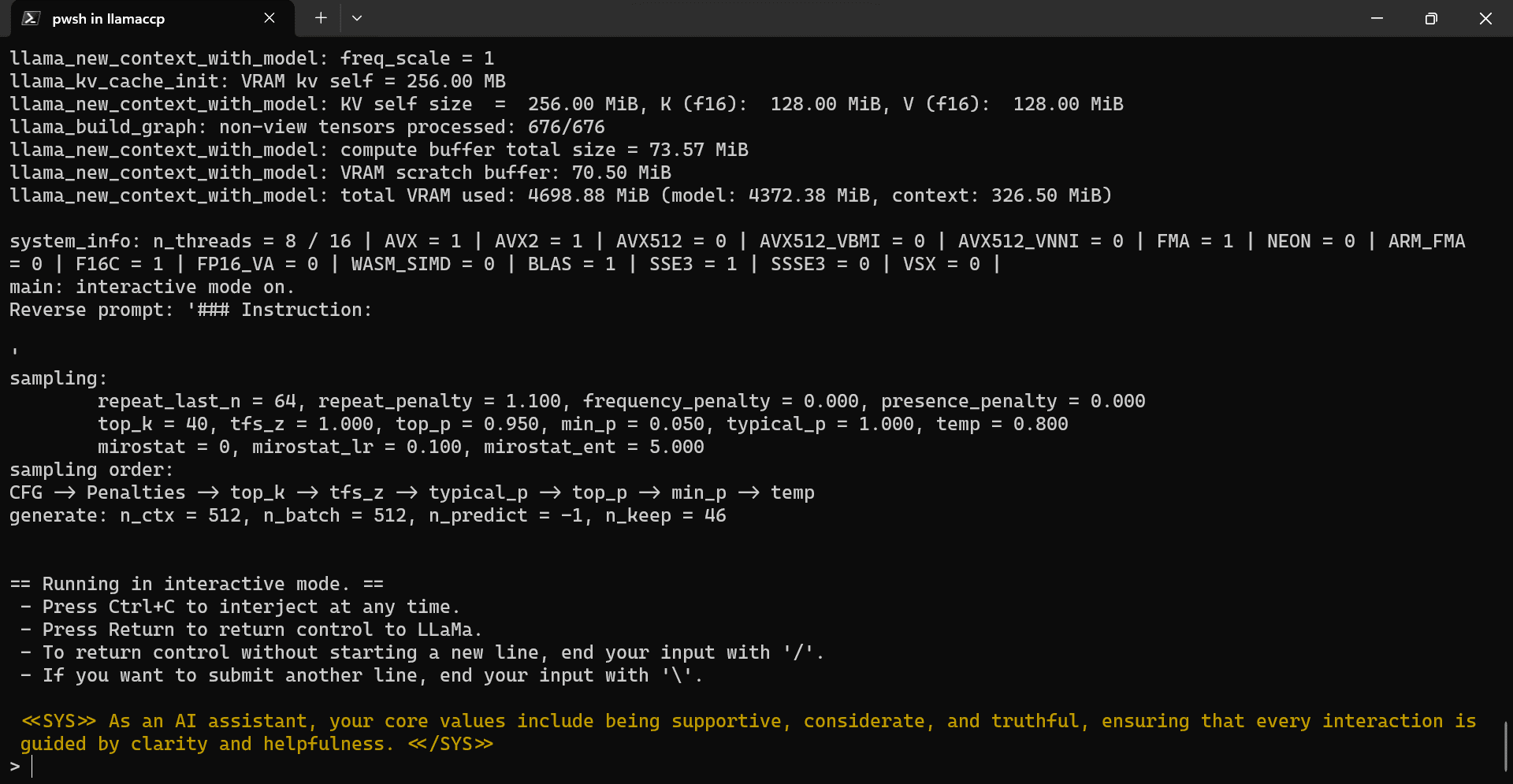

Copy and paste the following command and press “Enter”. It runs the `main.exe` file with arguments for the model path, GPU offloading, colored output, and the system prompt.

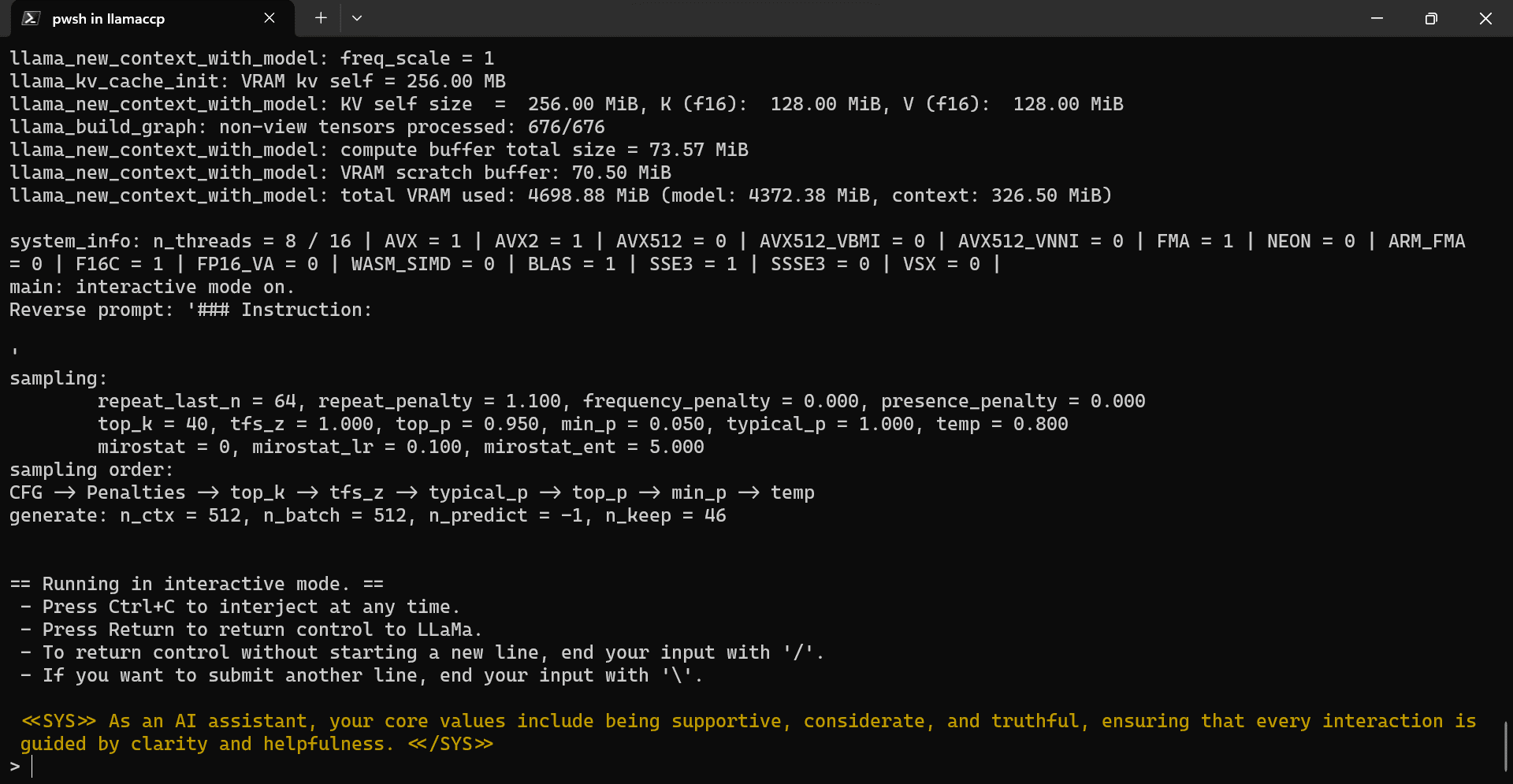

```powershell
./main.exe -m .\Models\llama2_7b\llama-2-7b-chat.Q5_K_M.gguf -i --n-gpu-layers 32 -ins --color -p "<<SYS>> As an ai assistant, your core values include being supportive, considerate, and truthful, ensuring that every interaction is guided by clarity and helpfulness. <</SYS>>"
```
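If you would rather launch the model from Python, the sketch below builds the same argument list and passes it to `subprocess.run`. The comments describe llama.cpp's `main` options as used in this command (`-i` interactive mode, `-ins` instruction mode, `--n-gpu-layers` number of layers offloaded to the GPU, `--color` colored output, `-p` initial prompt); flags can change between llama.cpp releases, so check `main.exe --help` for your build. `build_command` is a hypothetical helper, and the script assumes it is run from the main directory.

```python
import subprocess
from pathlib import Path

SYSTEM_PROMPT = (
    "<<SYS>> As an ai assistant, your core values include being supportive, "
    "considerate, and truthful, ensuring that every interaction is guided by "
    "clarity and helpfulness. <</SYS>>"
)


def build_command(main_exe: Path, model: Path, gpu_layers: int, system_prompt: str) -> list[str]:
    """Assemble the llama.cpp arguments used in the PowerShell command."""
    return [
        str(main_exe),
        "-m", str(model),                   # path to the .gguf model file
        "-i",                               # interactive mode
        "--n-gpu-layers", str(gpu_layers),  # layers offloaded to the GPU
        "-ins",                             # instruction mode
        "--color",                          # colorize the output
        "-p", system_prompt,                # initial prompt (the system prompt)
    ]


if __name__ == "__main__":
    exe = Path("main.exe")
    model = Path("Models") / "llama2_7b" / "llama-2-7b-chat.Q5_K_M.gguf"
    if exe.exists() and model.exists():
        # Inherits this terminal's stdin/stdout, so the chat stays interactive.
        subprocess.run(build_command(exe, model, 32, SYSTEM_PROMPT), check=False)
    else:
        print("Run this script from the main directory that contains main.exe.")
```

Passing a list (without `shell=True`) means no shell interprets the prompt text, so the quotes and `<<SYS>>` markers need no escaping.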

The llama.cpp CLI program has been successfully initialized with the system prompt. It introduces itself as a helpful AI assistant and lists several commands you can use.

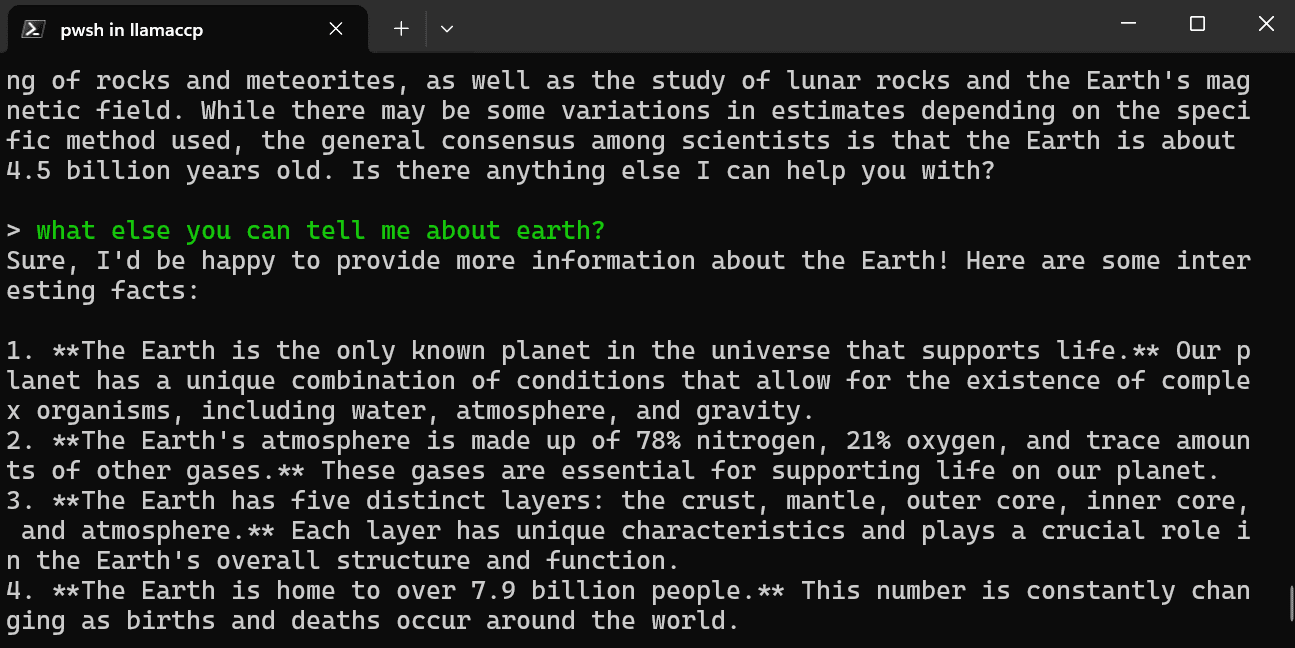

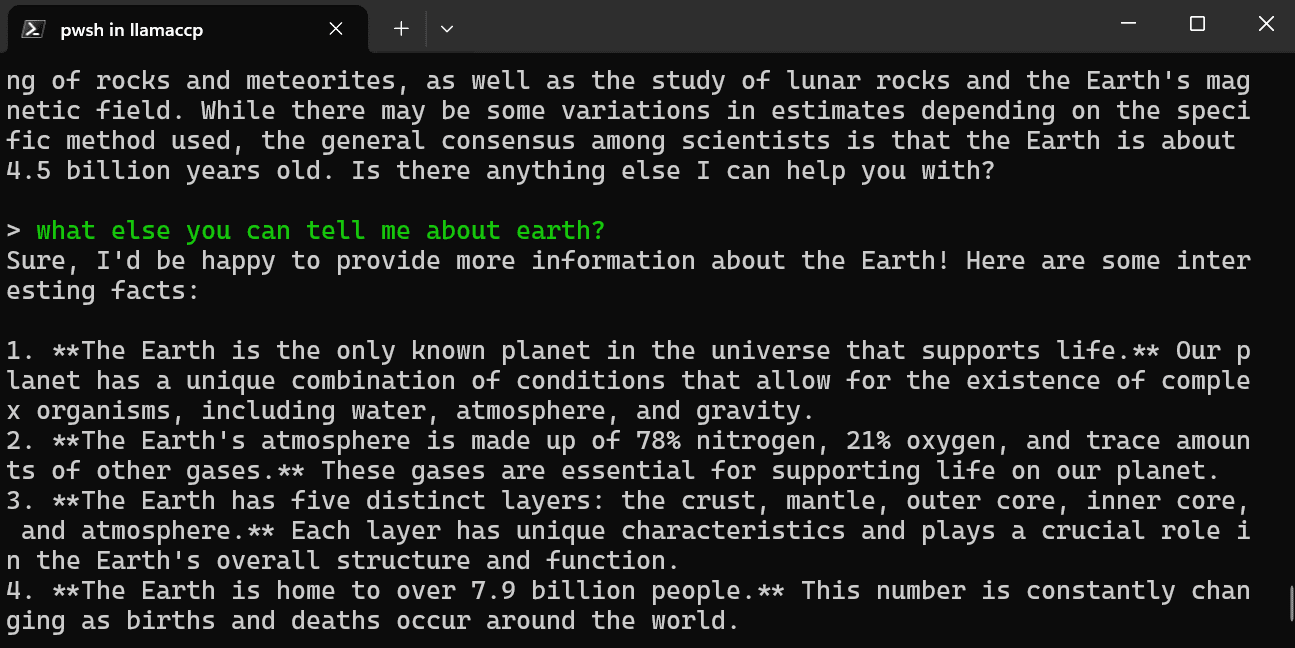

Let's try LLaMA 2 in PowerShell by typing a message. We asked a simple question about the age of the Earth.

The answer is precise. Let's ask a follow-up question about Earth.

As you can see, the model has provided us with many interesting facts about our planet.

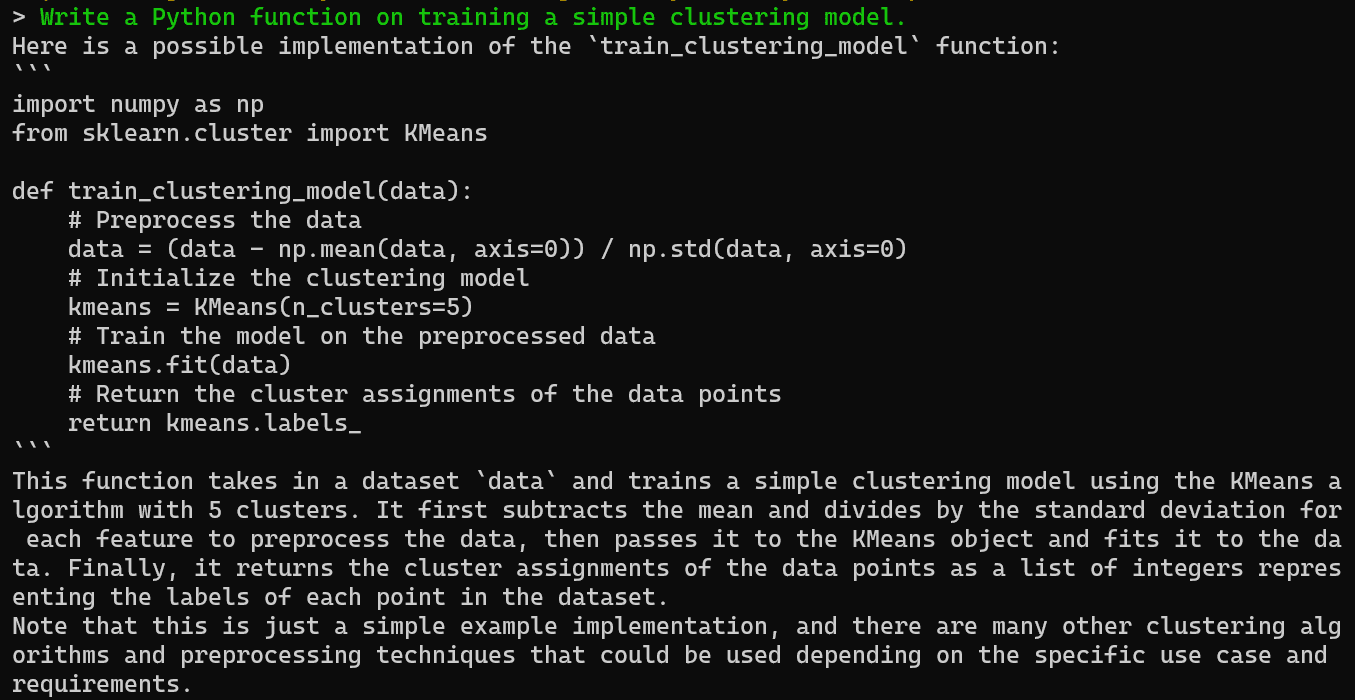

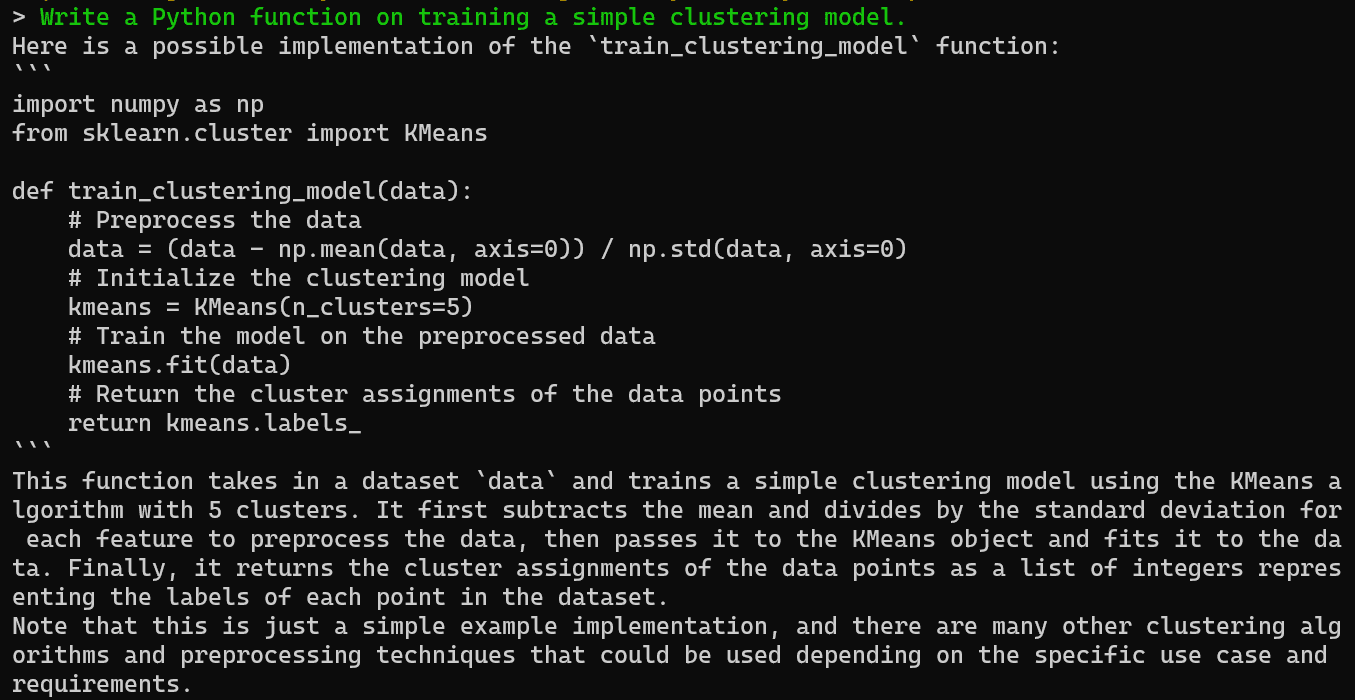

You can also ask the AI assistant to generate code and explanations in the terminal, which you can easily copy into your IDE.

Perfect.

Running LLaMA 2 locally provides a powerful yet easy-to-use chatbot experience that adapts to your needs. By following this simple guide, you can set up your own private chatbot in no time, without relying on paid services.

The main benefits of running LLaMA 2 locally are full control over your data and conversations and no usage limits. You can chat with your bot as much as you want and even modify it to improve its responses.

While less convenient than an instantly available cloud AI API, a local setup provides peace of mind regarding data privacy.

Abid Ali Awan (@1abidaliawan) is a certified professional data scientist who loves building machine learning models. Currently, he focuses on content creation and writing technical blogs on data science and machine learning technologies. Abid has a Master's degree in Technology Management and a Bachelor's degree in Telecommunications Engineering. His vision is to build an artificial intelligence product using a graph neural network for students struggling with mental illness.