In today’s world of video and image analysis, detector models play a vital role. Ideally, they should be accurate, fast, and scalable. Their applications range from small factory inspection tasks to self-driving cars and advanced image processing. The YOLO (You Only Look Once) family of models has pushed the boundaries of what is possible by combining accuracy with speed. YOLOv11 was released recently and is among the best-performing models in the family.

This article focuses on a detailed explanation of the architecture components and how they work, with a small hands-on implementation at the end. The analysis is part of my research work, which I wanted to share here.

Learning Outcomes

- Understand the evolution and significance of the YOLO model in real-time object detection.

- Analyze YOLOv11’s advanced architectural components, like C3K2 and SPPF, for enhanced feature extraction.

- Learn how attention mechanisms, like C2PSA, improve small object detection and spatial focus.

- Compare performance metrics of YOLOv11 with earlier YOLO versions to evaluate improvements in speed and accuracy.

- Gain hands-on experience with YOLOv11 through a sample implementation for practical insights into its capabilities.

This article was published as a part of the Data Science Blogathon.

What is YOLO?

Object detection is a challenging task in computer vision. It involves accurately identifying and localizing objects within an image. Traditional techniques, like R-CNN, often take a long time to process images because they first generate a large set of candidate object regions and then classify each one. This approach is inefficient for real-time applications.

Birth of YOLO: You Only Look Once

Joseph Redmon, Santosh Divvala, Ross Girshick, and Ali Farhadi published the paper “You Only Look Once: Unified, Real-Time Object Detection” at CVPR 2016, introducing a model named YOLO. The main motive was to create a faster, single-shot detection algorithm without compromising on accuracy. YOLO treats detection as a regression problem: an image is passed once through a feed-forward convolutional neural network to obtain the bounding box coordinates and the class of each object.

Milestones in YOLO Evolution (V1 to V11)

Since the introduction of YOLOv1, the model has undergone several iterations, each improving upon the last in terms of accuracy, speed, and efficiency. Here are the major milestones across the different YOLO versions:

- YOLOv1 (2016): The original YOLO model, which was designed for speed, achieved real-time performance but struggled with small object detection due to its coarse grid system

- YOLOv2 (2017): Introduced batch normalization, anchor boxes, and higher resolution input, resulting in more accurate predictions and improved localization

- YOLOv3 (2018): Brought in multi-scale predictions using feature pyramids, which improved the detection of objects at different sizes and scales

- YOLOv4 (2020): Focused on improvements in data augmentation, including mosaic augmentation and self-adversarial training, while also optimizing backbone networks for faster inference

- YOLOv5 (2020): Although controversial due to the lack of a formal research paper, YOLOv5 became widely adopted due to its implementation in PyTorch, and it was optimized for practical deployment

- YOLOv6, YOLOv7 (2022): Brought improvements in model scaling and accuracy, introducing more efficient versions of the model (like YOLOv7 Tiny), which performed exceptionally well on edge devices

- YOLOv8 (2023): Introduced architectural changes such as the CSPDarkNet backbone and path aggregation, improving both speed and accuracy over the previous version

- YOLOv11 (2024): The latest YOLO version introduces a more efficient architecture with C3K2 blocks, SPPF (Spatial Pyramid Pooling Fast), and advanced attention mechanisms like C2PSA. YOLOv11 is designed to enhance small object detection and improve accuracy while maintaining the real-time inference speed that YOLO is known for.

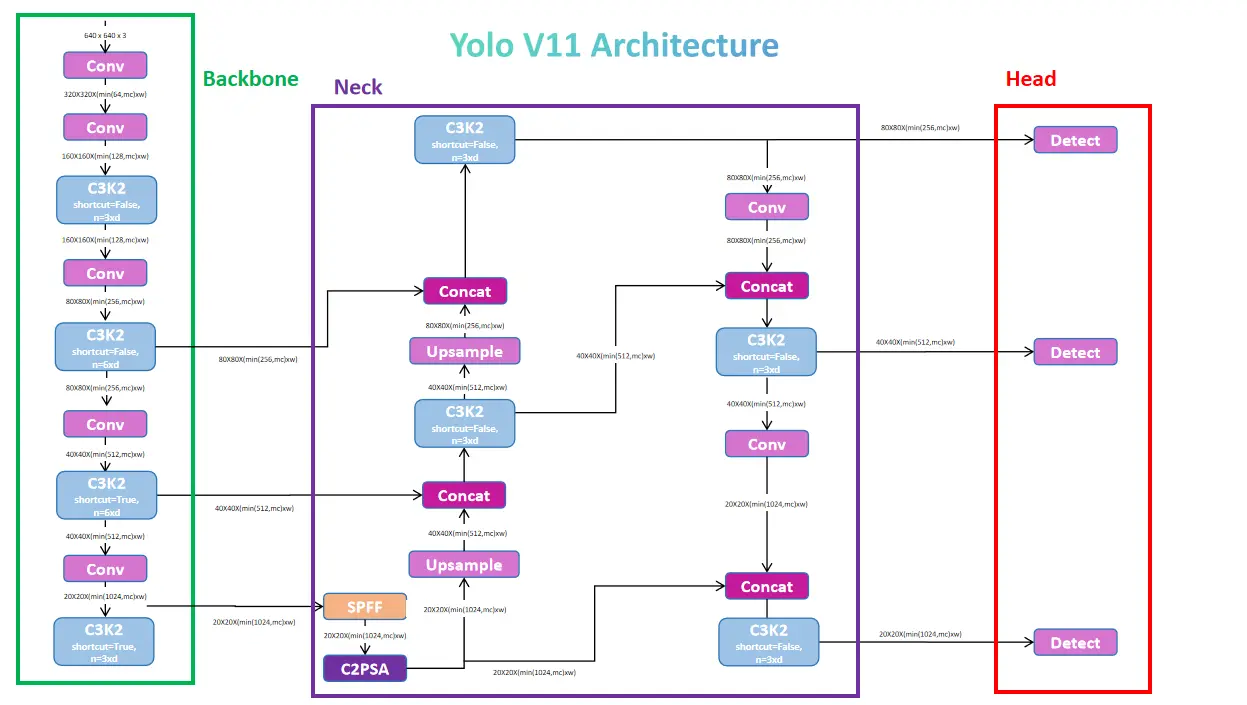

YOLOv11 Architecture

The architecture of YOLOv11 is designed to optimize both speed and accuracy, building on the advancements introduced in earlier YOLO versions like YOLOv8, YOLOv9, and YOLOv10. The main architectural innovations in YOLOv11 revolve around the C3K2 block, the SPPF module, and the C2PSA block, all of which enhance its ability to process spatial information while maintaining high-speed inference.

Backbone

The backbone is the core of YOLOv11’s architecture, responsible for extracting essential features from input images. By utilizing advanced convolutional and bottleneck blocks, the backbone efficiently captures crucial patterns and details, setting the stage for precise object detection.

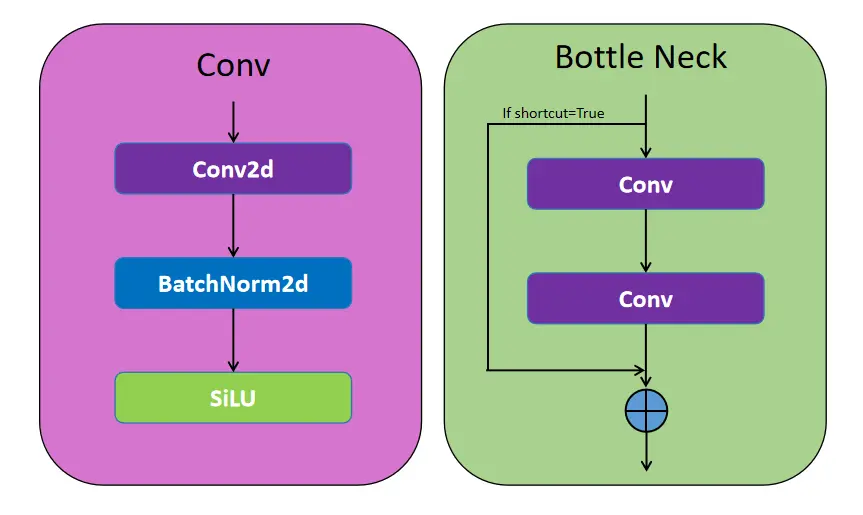

Convolutional Block

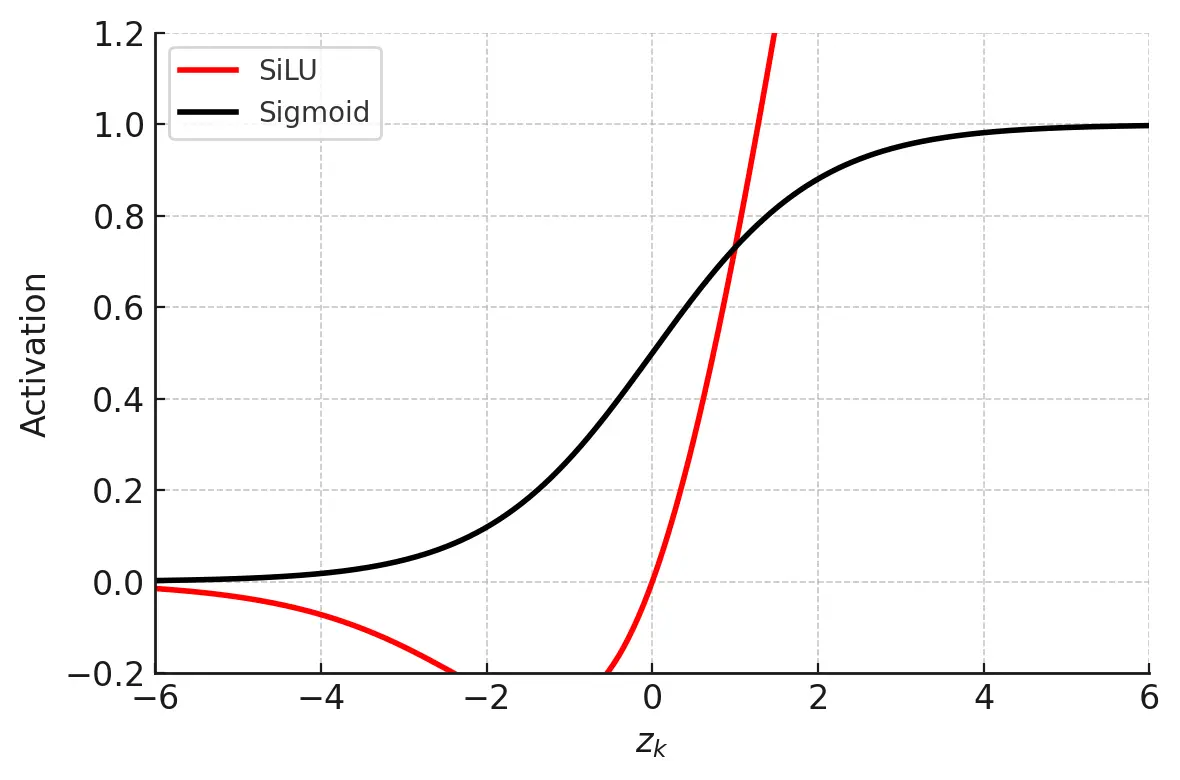

This block, called the Conv Block, processes an input of shape (c, h, w) by passing it through a 2D convolutional layer, followed by a 2D batch normalization layer and finally a SiLU activation function.
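To make the Conv Block concrete, here is a minimal PyTorch sketch of the convolution, batch normalization, and SiLU sequence. The class name `ConvBlock`, the argument names, and the "same" padding choice are my own assumptions for illustration, not the exact Ultralytics code.

```python
import torch
import torch.nn as nn


class ConvBlock(nn.Module):
    """Conv2d -> BatchNorm2d -> SiLU (illustrative sketch)."""

    def __init__(self, c_in, c_out, k=1, s=1, act=True):
        super().__init__()
        # "Same" padding for odd kernel sizes; bias is dropped because BatchNorm follows.
        self.conv = nn.Conv2d(c_in, c_out, k, s, padding=k // 2, bias=False)
        self.bn = nn.BatchNorm2d(c_out)
        self.act = nn.SiLU() if act else nn.Identity()

    def forward(self, x):
        return self.act(self.bn(self.conv(x)))


x = torch.randn(1, 3, 64, 64)        # (batch, c, h, w)
y = ConvBlock(3, 16, k=3, s=2)(x)    # stride 2 halves h and w
print(y.shape)                       # torch.Size([1, 16, 32, 32])
```

The `act=False` option is included because some blocks later in the architecture use a Conv Block without activation.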

Bottle Neck

This is a sequence of Conv Blocks with a shortcut parameter that decides whether the residual connection is used. It is similar to a ResNet block: if shortcut is set to False, no residual is added.
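The effect of the shortcut parameter can be sketched in a few lines of PyTorch. The helper `conv_block`, the 3×3 kernels, and the hidden channel ratio `e=0.5` are assumptions chosen for this illustration.

```python
import torch
import torch.nn as nn


def conv_block(c_in, c_out, k=3):
    """Conv2d -> BatchNorm2d -> SiLU with 'same' padding."""
    return nn.Sequential(
        nn.Conv2d(c_in, c_out, k, padding=k // 2, bias=False),
        nn.BatchNorm2d(c_out),
        nn.SiLU(),
    )


class Bottleneck(nn.Module):
    """Two Conv Blocks with an optional residual (shortcut) connection."""

    def __init__(self, c, shortcut=True, e=0.5):
        super().__init__()
        c_hidden = int(c * e)  # assumed channel reduction inside the block
        self.cv1 = conv_block(c, c_hidden)
        self.cv2 = conv_block(c_hidden, c)
        self.shortcut = shortcut

    def forward(self, x):
        y = self.cv2(self.cv1(x))
        return x + y if self.shortcut else y


block = Bottleneck(16).eval()         # eval mode makes BatchNorm deterministic
x = torch.randn(1, 16, 32, 32)
with_res = block(x)                   # shortcut=True: input is added to the output
block.shortcut = False
without_res = block(x)                # shortcut=False: plain convolution path
print(torch.allclose(with_res - without_res, x, atol=1e-5))
```

The only difference between the two outputs is the input itself, which is exactly what the residual connection adds.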

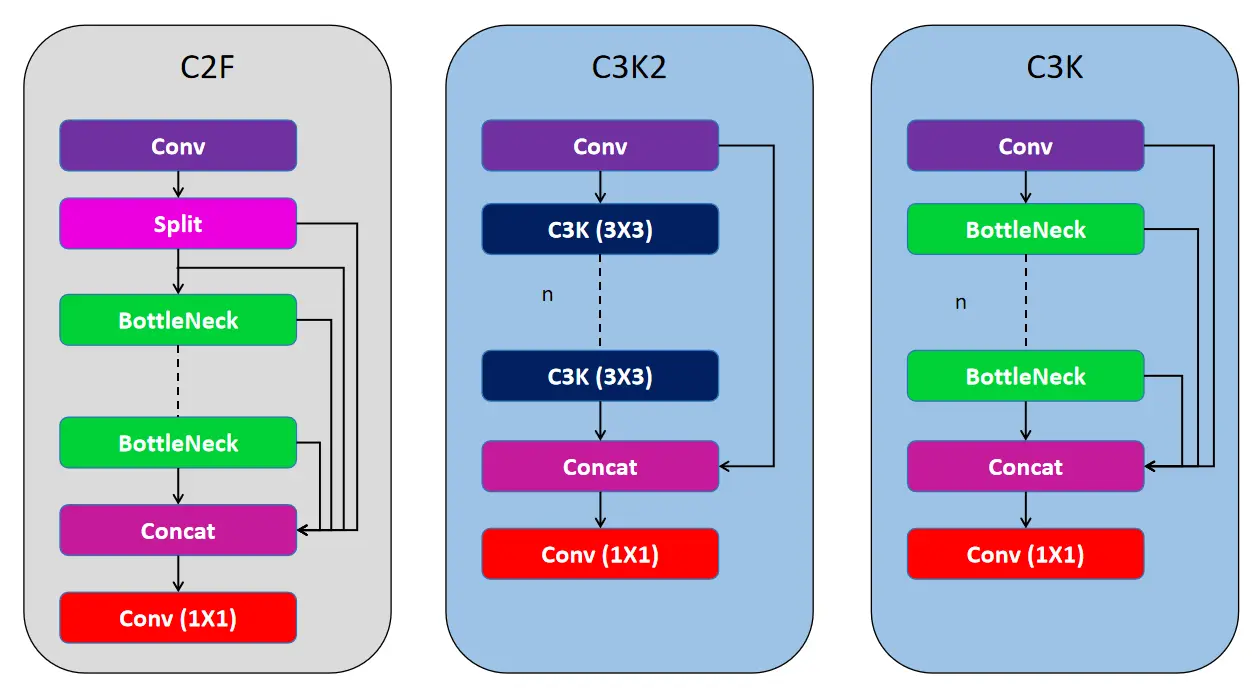

C2F (YOLOv8)

The C2F block is derived from the CSP (Cross Stage Partial) network, with a focus on efficiency and feature map preservation. The block starts with a Conv Block whose output is split into two halves along the channel dimension. One half is processed through a series of ’n’ Bottle Neck layers, the output of every layer is concatenated with the two halves, and a final Conv Block follows. This helps to enhance feature map connections without redundant information.
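Here is a rough PyTorch sketch of the split, process, and concatenate flow of the C2F block. It follows my reading of the structure (only the last chunk is fed through the Bottle Neck layers, and every intermediate output is kept); the helper names and channel choices are assumptions.

```python
import torch
import torch.nn as nn


def conv_block(c_in, c_out, k=1):
    """Conv2d -> BatchNorm2d -> SiLU with 'same' padding."""
    return nn.Sequential(
        nn.Conv2d(c_in, c_out, k, padding=k // 2, bias=False),
        nn.BatchNorm2d(c_out),
        nn.SiLU(),
    )


class Bottleneck(nn.Module):
    def __init__(self, c, shortcut=True):
        super().__init__()
        self.cv1 = conv_block(c, c, 3)
        self.cv2 = conv_block(c, c, 3)
        self.shortcut = shortcut

    def forward(self, x):
        y = self.cv2(self.cv1(x))
        return x + y if self.shortcut else y


class C2F(nn.Module):
    def __init__(self, c_in, c_out, n=1, shortcut=False):
        super().__init__()
        self.c = c_out // 2                                   # channels per half
        self.cv1 = conv_block(c_in, 2 * self.c)               # first Conv Block
        self.m = nn.ModuleList(Bottleneck(self.c, shortcut) for _ in range(n))
        self.cv2 = conv_block((2 + n) * self.c, c_out)        # final Conv Block

    def forward(self, x):
        y = list(self.cv1(x).chunk(2, dim=1))                 # split into two halves
        for m in self.m:
            y.append(m(y[-1]))                                # keep every Bottle Neck output
        return self.cv2(torch.cat(y, dim=1))                  # concatenate, then fuse


x = torch.randn(1, 32, 16, 16)
out = C2F(32, 64, n=2)(x)
print(out.shape)  # torch.Size([1, 64, 16, 16])
```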

C3K2

At the heart of YOLOv11’s backbone is the C3K2 block, an evolution of the CSP (Cross Stage Partial) bottleneck introduced in earlier versions. YOLOv11 uses C3K2 blocks to handle feature extraction at different stages of the backbone. The block optimizes the flow of information through the network by splitting the feature map and applying a series of small 3×3 convolutions, which are faster and computationally cheaper than larger kernel convolutions while still capturing the essential features in the image. By processing smaller, separate feature maps and merging them after several convolutions, the C3K2 block improves feature representation with fewer parameters compared to YOLOv8’s C2F blocks.

The C3K block has a structure similar to the C2F block, but without the split: the input is passed through a Conv Block, followed by a series of ’n’ Bottle Neck layers with concatenations, and ends with a final Conv Block.

The C3K2 block uses C3K blocks to process the information. It has one Conv Block at the start and one at the end, with a series of C3K blocks in between. The output of the first Conv Block and the output of the last C3K block are concatenated and passed to the final Conv Block. This block focuses on maintaining a balance between speed and accuracy, leveraging the CSP structure.
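The relationship between C3K and C3K2 is easier to see in code. The sketch below is a simplified PyTorch version based on the description in this section; the class names, the two-path layout inside `C3K`, and all channel sizes are assumptions and may differ from the released implementation.

```python
import torch
import torch.nn as nn


def conv_block(c_in, c_out, k=1):
    """Conv2d -> BatchNorm2d -> SiLU with 'same' padding."""
    return nn.Sequential(
        nn.Conv2d(c_in, c_out, k, padding=k // 2, bias=False),
        nn.BatchNorm2d(c_out),
        nn.SiLU(),
    )


class Bottleneck(nn.Module):
    def __init__(self, c, shortcut=True, k=3):
        super().__init__()
        self.cv1 = conv_block(c, c, k)
        self.cv2 = conv_block(c, c, k)
        self.shortcut = shortcut

    def forward(self, x):
        y = self.cv2(self.cv1(x))
        return x + y if self.shortcut else y


class C3K(nn.Module):
    """Conv Block -> n Bottle Necks, concatenated with a parallel Conv path, then a final Conv Block."""

    def __init__(self, c_in, c_out, n=2, shortcut=True, k=3):
        super().__init__()
        c_hidden = c_out // 2
        self.cv1 = conv_block(c_in, c_hidden)
        self.cv2 = conv_block(c_in, c_hidden)
        self.m = nn.Sequential(*(Bottleneck(c_hidden, shortcut, k) for _ in range(n)))
        self.cv3 = conv_block(2 * c_hidden, c_out)

    def forward(self, x):
        return self.cv3(torch.cat((self.m(self.cv1(x)), self.cv2(x)), dim=1))


class C3K2(nn.Module):
    """C2F-style wrapper whose inner blocks are C3K blocks instead of plain Bottle Necks."""

    def __init__(self, c_in, c_out, n=1, shortcut=True):
        super().__init__()
        self.c = c_out // 2
        self.cv1 = conv_block(c_in, 2 * self.c)               # Conv Block at the start
        self.m = nn.ModuleList(C3K(self.c, self.c, 2, shortcut) for _ in range(n))
        self.cv2 = conv_block((2 + n) * self.c, c_out)        # Conv Block at the end

    def forward(self, x):
        y = list(self.cv1(x).chunk(2, dim=1))
        for m in self.m:
            y.append(m(y[-1]))                                # chain the C3K blocks
        return self.cv2(torch.cat(y, dim=1))


x = torch.randn(1, 64, 16, 16)
out = C3K2(64, 64, n=2)(x)
print(out.shape)  # torch.Size([1, 64, 16, 16])
```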

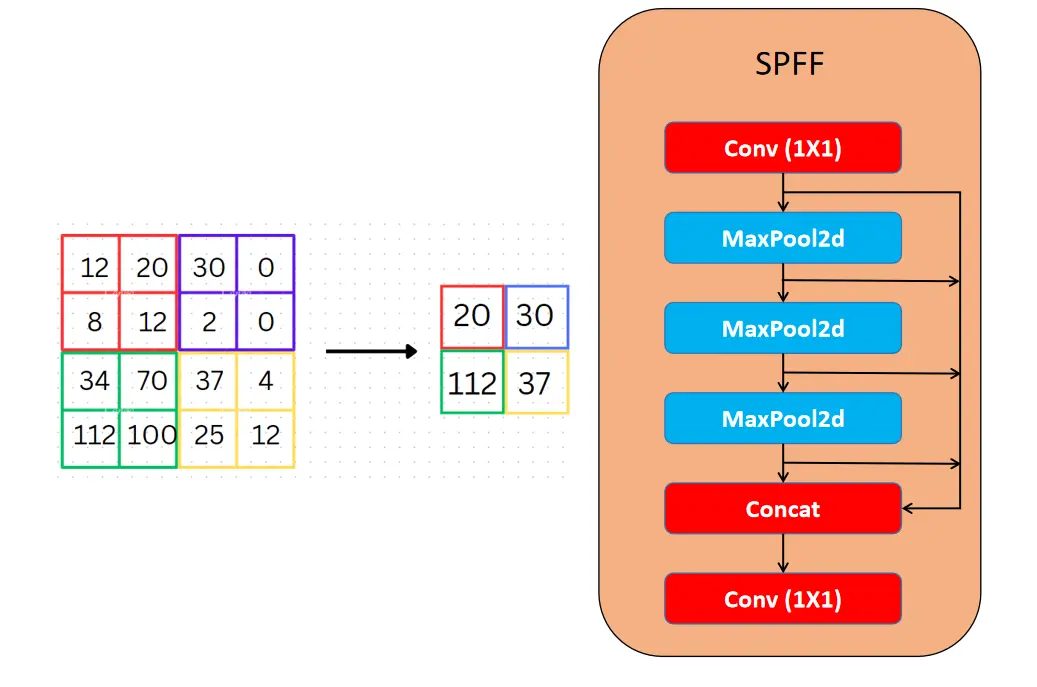

Neck: Spatial Pyramid Pooling Fast (SPPF) and Upsampling

YOLOv11 retains the SPPF module (Spatial Pyramid Pooling Fast), which was designed to pool features from different regions of an image at varying scales. This improves the network’s ability to capture objects of different sizes, especially small objects, which has been a challenge for earlier YOLO versions.

SPPF pools features using several max-pooling operations to aggregate multi-scale contextual information. In the Ultralytics implementation, the same 5×5 max-pooling layer is applied three times in sequence, which covers the same receptive fields as parallel 5×5, 9×9, and 13×13 poolings at a lower cost. This module helps the model recognize even small objects, as it effectively combines information across different resolutions. The inclusion of SPPF lets YOLOv11 maintain real-time speed while enhancing its ability to detect objects across multiple scales.
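The pooling idea can be sketched as follows. This is a simplified PyTorch version that uses plain 1×1 convolutions instead of full Conv Blocks and chains one 5×5 max-pooling layer three times; the class name and channel sizes are assumptions. The last lines check that two chained 5×5 poolings cover the same window as a single 9×9 pooling, which is why chaining is a cheap way to get several scales.

```python
import torch
import torch.nn as nn


class SPPFSketch(nn.Module):
    def __init__(self, c_in, c_out, k=5):
        super().__init__()
        c_hidden = c_in // 2
        self.cv1 = nn.Conv2d(c_in, c_hidden, 1)        # BatchNorm/SiLU omitted for brevity
        self.cv2 = nn.Conv2d(4 * c_hidden, c_out, 1)
        self.pool = nn.MaxPool2d(kernel_size=k, stride=1, padding=k // 2)

    def forward(self, x):
        y = [self.cv1(x)]
        for _ in range(3):
            y.append(self.pool(y[-1]))                 # each pass widens the pooled region
        return self.cv2(torch.cat(y, dim=1))           # fuse all four scales


x = torch.randn(1, 64, 20, 20)
out = SPPFSketch(64, 64)(x)
print(out.shape)  # torch.Size([1, 64, 20, 20])

# Two chained 5x5 max pools give the same result as one 9x9 max pool.
pool5 = nn.MaxPool2d(5, stride=1, padding=2)
pool9 = nn.MaxPool2d(9, stride=1, padding=4)
same = torch.equal(pool5(pool5(x)), pool9(x))
print(same)  # True
```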

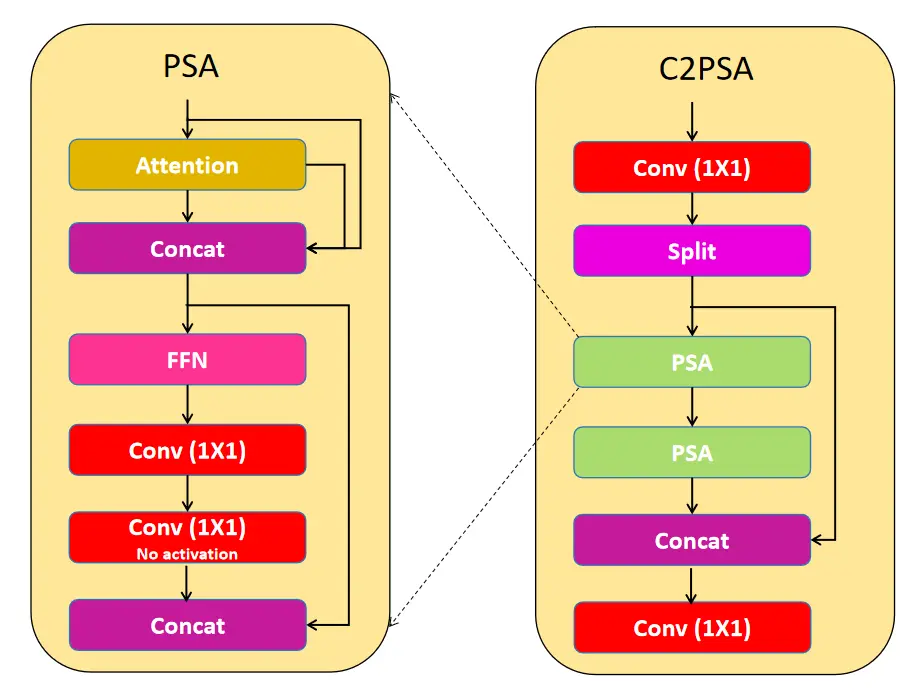

Attention Mechanisms: C2PSA Block

One of the significant innovations in YOLOv11 is the addition of the C2PSA block (Cross Stage Partial with Spatial Attention). This block introduces attention mechanisms that improve the model’s focus on important regions within an image, such as smaller or partially occluded objects, by emphasizing spatial relevance in the feature maps.

Position-Sensitive Attention

This class encapsulates the functionality for applying position-sensitive attention and feed-forward networks to input tensors, enhancing feature extraction and processing capabilities. The layer first passes the input through an attention layer and combines the attention output with the input through a shortcut connection. The result is then passed through a feed-forward network, made of a Conv Block followed by a Conv Block without activation, and the feed-forward output is combined with the result of the first shortcut step.
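As a rough illustration of this attention-plus-feed-forward layout, here is a PyTorch sketch. Two things are assumptions on my side: the attention layer is replaced by the standard `nn.MultiheadAttention` as a stand-in, and each branch is merged with its input by element-wise addition (a residual shortcut). The class name `PSABlockSketch` is hypothetical.

```python
import torch
import torch.nn as nn


class PSABlockSketch(nn.Module):
    def __init__(self, c, num_heads=4):
        super().__init__()
        # Stand-in attention layer (assumption): multi-head self-attention over all pixels.
        self.attn = nn.MultiheadAttention(embed_dim=c, num_heads=num_heads, batch_first=True)
        # Feed-forward part: Conv Block with SiLU, then a Conv Block without activation.
        self.ffn = nn.Sequential(
            nn.Conv2d(c, 2 * c, 1, bias=False), nn.BatchNorm2d(2 * c), nn.SiLU(),
            nn.Conv2d(2 * c, c, 1, bias=False), nn.BatchNorm2d(c),
        )

    def forward(self, x):
        b, c, h, w = x.shape
        tokens = x.flatten(2).transpose(1, 2)                  # (b, h*w, c): one token per pixel
        attended, _ = self.attn(tokens, tokens, tokens)        # self-attention
        x = x + attended.transpose(1, 2).reshape(b, c, h, w)   # first shortcut
        return x + self.ffn(x)                                 # second shortcut


x = torch.randn(2, 32, 10, 10)
out = PSABlockSketch(32)(x)
print(out.shape)  # torch.Size([2, 32, 10, 10])
```

The block keeps the input shape unchanged, so it can be dropped between other layers without touching the channel counts.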

C2PSA

The C2PSA block uses two PSA (Position-Sensitive Attention) modules, which operate on separate branches of the feature map and are later concatenated, similar to the C2F block structure. This setup ensures the model focuses on spatial information while maintaining a balance between computational cost and detection accuracy. The C2PSA block refines the model’s ability to selectively focus on regions of interest by applying spatial attention over the extracted features. This allows YOLOv11 to outperform previous versions like YOLOv8 in scenarios where fine object details are necessary for accurate detection.
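The split-and-concatenate structure can be sketched like this in PyTorch. It is a simplified reading, not the released code: in this sketch the feature map is split into two branches, only one branch passes through the attention blocks, and the other is carried through unchanged before concatenation. The class names, the use of `nn.MultiheadAttention` as the attention layer, and the plain 1×1 convolutions are all assumptions.

```python
import torch
import torch.nn as nn


class AttentionBlock(nn.Module):
    """Compact stand-in for a PSA block: self-attention plus a small feed-forward part."""

    def __init__(self, c, num_heads=4):
        super().__init__()
        self.attn = nn.MultiheadAttention(c, num_heads, batch_first=True)
        self.ffn = nn.Sequential(nn.Conv2d(c, 2 * c, 1), nn.SiLU(), nn.Conv2d(2 * c, c, 1))

    def forward(self, x):
        b, c, h, w = x.shape
        t = x.flatten(2).transpose(1, 2)                       # (b, h*w, c)
        x = x + self.attn(t, t, t)[0].transpose(1, 2).reshape(b, c, h, w)
        return x + self.ffn(x)


class C2PSASketch(nn.Module):
    def __init__(self, c, n=1):
        super().__init__()
        self.c = c // 2
        self.cv1 = nn.Conv2d(c, 2 * self.c, 1)                 # entry convolution
        self.m = nn.Sequential(*(AttentionBlock(self.c) for _ in range(n)))
        self.cv2 = nn.Conv2d(2 * self.c, c, 1)                 # exit convolution

    def forward(self, x):
        a, b = self.cv1(x).chunk(2, dim=1)                     # two branches
        b = self.m(b)                                          # attention on one branch
        return self.cv2(torch.cat((a, b), dim=1))              # concatenate and fuse


x = torch.randn(1, 64, 20, 20)
out = C2PSASketch(64, n=2)(x)
print(out.shape)  # torch.Size([1, 64, 20, 20])
```

Applying attention to only half of the channels is one way to keep the cost of attention down, which matches the balance between computational cost and accuracy described above.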

Head: Detection and Multi-Scale Predictions

Similar to earlier YOLO versions, YOLOv11 uses a multi-scale prediction head to detect objects at different sizes. The head outputs detection boxes for three different scales (low, medium, high) using the feature maps generated by the backbone and neck.

The detection head outputs predictions from three feature maps (usually from P3, P4, and P5), corresponding to different levels of granularity in the image. This approach ensures that small objects are detected in finer detail (P3) while larger objects are captured by higher-level features (P5).
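A quick calculation shows what the three scales mean in practice. The sketch below assumes the usual strides of 8, 16, and 32 for P3, P4, and P5 and a 640×640 input; both values are assumptions for illustration and are not stated above.

```python
def grid_sizes(img_size=640, strides=None):
    """Return the feature-map side length for each detection scale."""
    strides = strides or {"P3": 8, "P4": 16, "P5": 32}  # assumed strides
    return {name: img_size // s for name, s in strides.items()}


sizes = grid_sizes(640)
total = sum(g * g for g in sizes.values())

print(sizes)  # {'P3': 80, 'P4': 40, 'P5': 20}
print(total)  # 8400 grid cells across the three scales
```

P3 has the finest grid (80×80 cells), which is why it is the scale best suited to small objects, while the coarse 20×20 grid of P5 sees large objects as a whole.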

Code Implementation for YOLOv11

Here’s a minimal and concise implementation for YOLOv11 using PyTorch. This will give you a clear starting point for testing object detection on images.

Step 1: Installation and Setup

First, make sure you have the necessary dependencies installed. You can try this part on Google Colab.

import os

HOME = os.getcwd()

print(HOME)

!pip install ultralytics supervision roboflow

import ultralytics

ultralytics.checks()

Step 2: Loading the YOLOv11 Model

The following code snippet demonstrates how to load the YOLOv11 model and run inference on an input image or video.

# This CLI command is to detect for image, you can replace the source with the video file path

# to perform detection task on video.

!yolo task=detect mode=predict model=yolo11n.pt conf=0.25 source="/content/image.png" save=True

Results

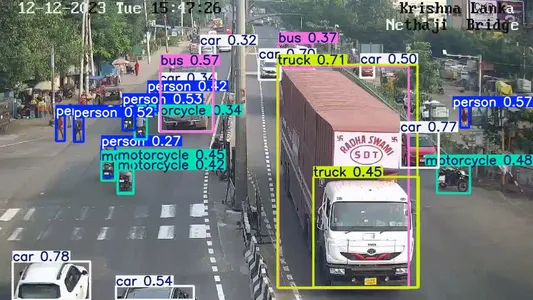

YOLOv11 detects the horse with high precision, showcasing its object localization capability.

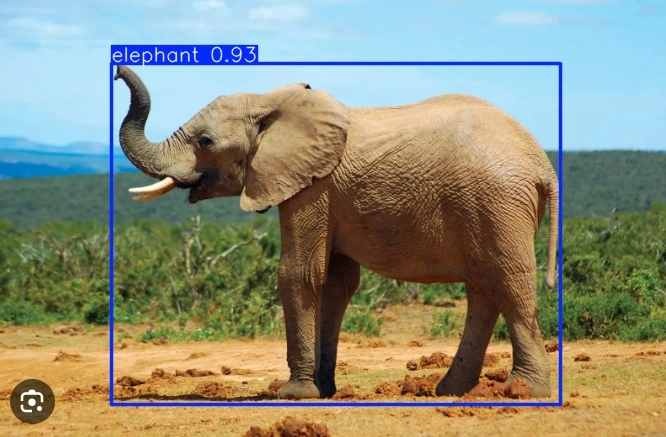

The YOLOv11 model identifies and outlines the elephant, emphasizing its skill in recognizing larger objects.

YOLOv11 accurately detects the bus, demonstrating its robustness in identifying different types of vehicles.

This minimal code covers loading, running, and displaying results using the YOLOv11 model. You can expand upon it for advanced use cases like batch processing or adjusting model confidence thresholds, but this serves as a quick and effective starting point. You can find more interesting tasks to implement using YOLOv11 using these helper functions: Tasks Solution
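If you prefer to stay in Python instead of the CLI, the same prediction can be run through the `ultralytics` Python API. The sketch below wraps it in a small helper; `run_detection` is a hypothetical name of mine, and the image path is just the example path used in the command above.

```python
def run_detection(source, weights="yolo11n.pt", conf=0.25):
    """Run detection on an image or video path and return the list of results."""
    # Imported inside the function so this file loads even without ultralytics installed.
    from ultralytics import YOLO

    model = YOLO(weights)  # weights are downloaded on first use
    return model.predict(source=source, conf=conf, save=True)


# Example (uncomment to run; needs ultralytics installed and the file to exist):
# results = run_detection("/content/image.png")
# for r in results:
#     print(r.boxes.xyxy, r.boxes.conf, r.boxes.cls)  # boxes, scores, class ids
```

Changing `conf` here is the Python counterpart of adjusting the `conf=0.25` argument in the CLI call.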

Performance Metrics Explanation for YOLOv11

We will now explore performance metrics for YOLOv11 below:

Mean Average Precision (mAP)

- mAP is the average precision computed across multiple classes and IoU thresholds. It is the most common metric for object detection tasks, providing insight into how well the model balances precision and recall.

- Higher mAP values indicate better object localization and classification, especially for small and occluded objects. Improvement due to
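To show how the number is obtained, here is a small pure-Python sketch that computes the average precision (AP) of one class from a ranked list of detections and then averages over classes. It works at a single IoU threshold and uses made-up detections for two hypothetical classes; averaging over several IoU thresholds would repeat the same computation per threshold.

```python
def average_precision(is_true_positive, num_ground_truth):
    """AP for one class: area under the precision-recall curve.

    is_true_positive: booleans for detections sorted by descending confidence.
    """
    tp = fp = 0
    precisions, recalls = [], []
    for hit in is_true_positive:
        tp += hit
        fp += not hit
        precisions.append(tp / (tp + fp))
        recalls.append(tp / num_ground_truth)
    # Make precision non-increasing from left to right (standard envelope step).
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    # Sum the rectangle areas wherever recall increases.
    ap, prev_recall = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


def mean_average_precision(per_class_ap):
    return sum(per_class_ap) / len(per_class_ap)


ap_cat = average_precision([True, True, False, True], num_ground_truth=4)
ap_dog = average_precision([True, False], num_ground_truth=1)
print(ap_cat)                                    # 0.6875
print(ap_dog)                                    # 1.0
print(mean_average_precision([ap_cat, ap_dog]))  # 0.84375
```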

Intersection Over Union (IoU)

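- IoU measures the overlap between the predicted bounding box and the ground truth box, computed as the area of their intersection divided by the area of their union. An IoU threshold (commonly 0.5, or a range of thresholds from 0.5 to 0.95) is used to decide whether a prediction counts as a true positive.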
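The IoU computation itself is only a few lines. The sketch below assumes boxes in (x1, y1, x2, y2) corner format, and the two example boxes are made up for illustration.

```python
def iou(box_a, box_b):
    """Intersection over Union for boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)  # zero when the boxes do not overlap
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


pred = (10, 10, 50, 50)
truth = (30, 30, 70, 70)
score = iou(pred, truth)
print(round(score, 4))   # 0.1429
print(score >= 0.5)      # False: not a true positive at a 0.5 threshold
```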

Frames Per Second (FPS)

- FPS measures the speed of the model, indicating how many frames the model can process per second. A higher FPS means faster inference, which is critical for real-time applications.
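FPS can be measured by timing the inference call over a batch of frames. The sketch below uses a dummy function that sleeps for about 5 ms in place of a real model, so the helper names and the timing are purely illustrative; with a real model you would pass its predict call instead.

```python
import time


def measure_fps(infer, frames, warmup=2):
    """Time `infer` over all frames and return frames per second."""
    for frame in frames[:warmup]:
        infer(frame)                      # warm-up runs are not timed
    start = time.perf_counter()
    for frame in frames:
        infer(frame)
    elapsed = time.perf_counter() - start
    return len(frames) / elapsed


def dummy_infer(frame):
    time.sleep(0.005)                     # pretend each frame takes about 5 ms


fps = measure_fps(dummy_infer, list(range(20)))
print(f"{fps:.1f} FPS")
```

The warm-up runs matter with real models, because the first few calls often include one-off setup costs that would otherwise lower the measured FPS.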

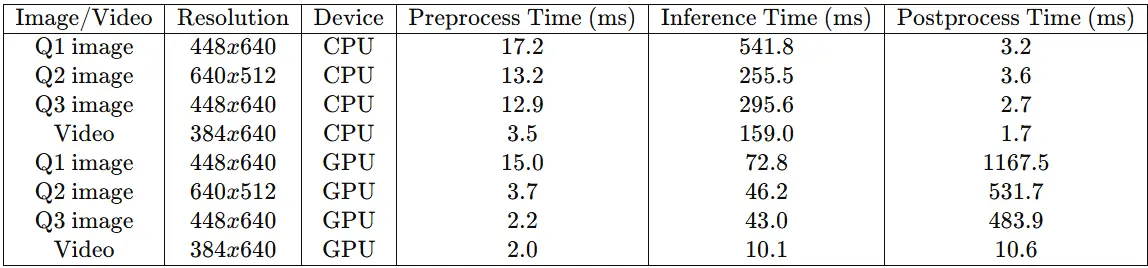

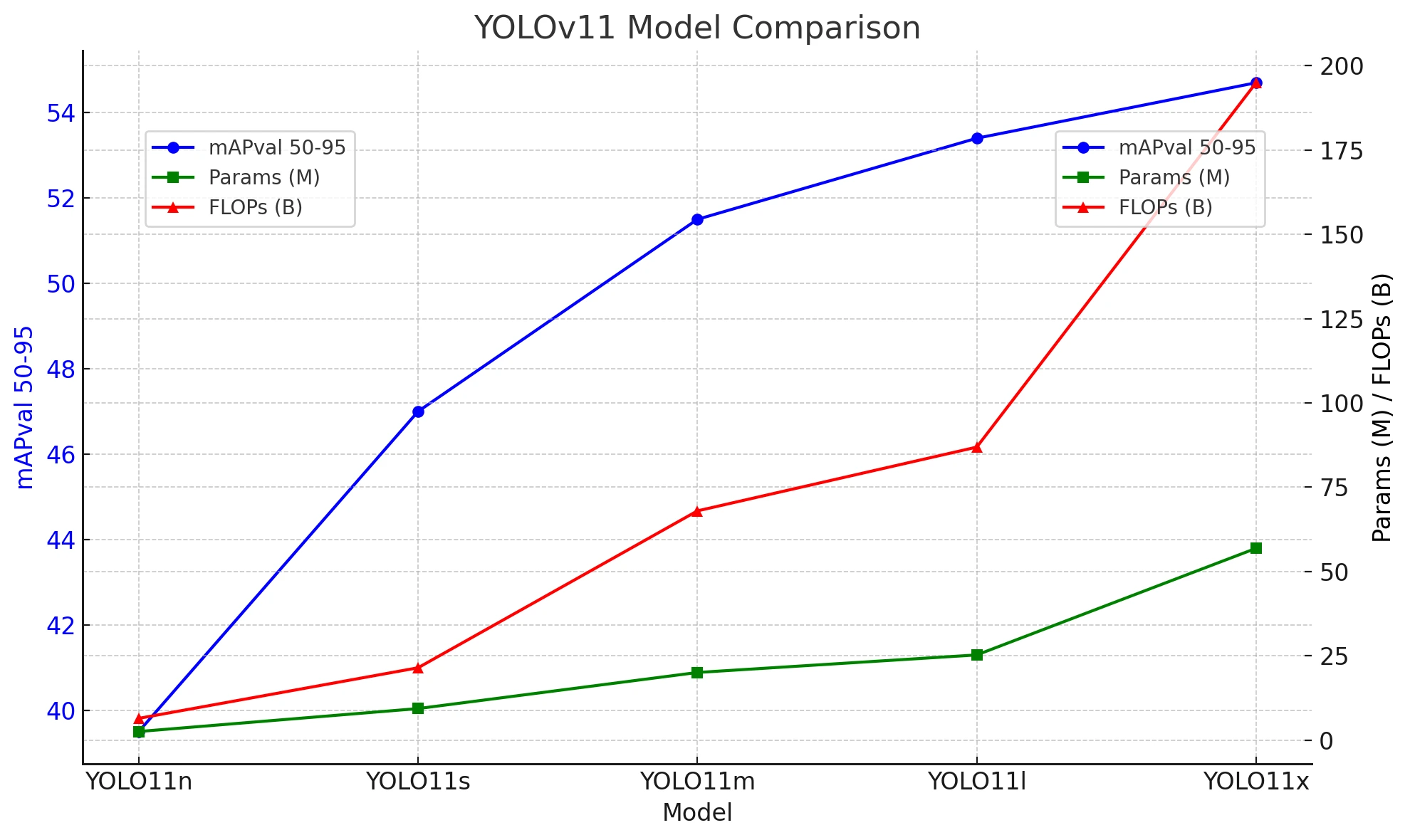

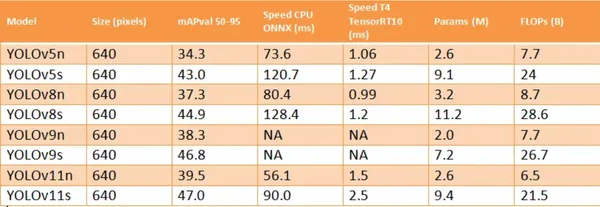

Performance Comparison of YOLOv11 with Previous Versions

In this section, we will compare YOLOv5, YOLOv8, and YOLOv9 with YOLOv11. The performance comparison covers metrics such as mean Average Precision (mAP), inference speed (FPS), and parameter efficiency across various tasks like object detection and segmentation.

Conclusion

YOLOv11 marks a pivotal advancement in object detection, combining speed, accuracy, and efficiency through innovations like C3K2 blocks for feature extraction and C2PSA attention for focusing on critical image regions. With improved mAP scores and FPS rates, it excels in real-world applications such as autonomous driving and medical imaging. Its capabilities in multi-scale detection and spatial attention allow it to handle complex object structures while maintaining fast inference. YOLOv11 effectively balances the speed-accuracy tradeoff, offering an accessible solution for researchers and practitioners in various computer vision applications, from edge devices to real-time video analytics.

Key Takeaways

- YOLOv11 achieves superior speed and accuracy, surpassing previous versions like YOLOv8 and YOLOv10.

- The introduction of C3K2 blocks and C2PSA attention mechanisms significantly improves feature extraction and focus on critical image regions.

- Ideal for autonomous driving and medical imaging, YOLOv11 excels in scenarios requiring precision and rapid inference.

- The model effectively handles complex object structures, maintaining fast inference rates in challenging environments.

- YOLOv11 offers an accessible setup, making it suitable for researchers and practitioners in various computer vision fields.

Frequently Asked Questions

A. YOLOv11 introduces the C3K2 blocks and SPPF (Spatial Pyramid Pooling Fast) modules specifically designed to enhance the model’s ability to capture fine details at multiple scales. The advanced attention mechanisms in the C2PSA block also help focus on small, partially occluded objects. These innovations help small objects to be detected accurately without sacrificing speed.

A. The C2PSA block introduces partial spatial attention, allowing YOLOv11 to emphasize relevant regions in an image. It combines attention mechanisms with position-sensitive features, enabling better focus on critical areas like small or cluttered objects. This selective attention mechanism improves the model’s ability to detect complex scenes, surpassing previous versions in accuracy.

A. YOLOv11’s C3K2 block uses 3×3 convolution kernels to achieve more efficient computations without compromising feature extraction. Smaller kernels allow the model to process information faster and more efficiently, which is essential for maintaining real-time performance. This also reduces the number of parameters, making the model lighter and more scalable.

A. The SPPF (Spatial Pyramid Pooling Fast) module pools features at different scales using stacked max-pooling operations. This helps objects of various sizes, especially small ones, to be captured effectively. By aggregating multi-resolution context, the SPPF module boosts YOLOv11’s ability to detect objects at different scales, all while maintaining speed.

The media shown in this article is not owned by Analytics Vidhya and is used at the Author’s discretion.