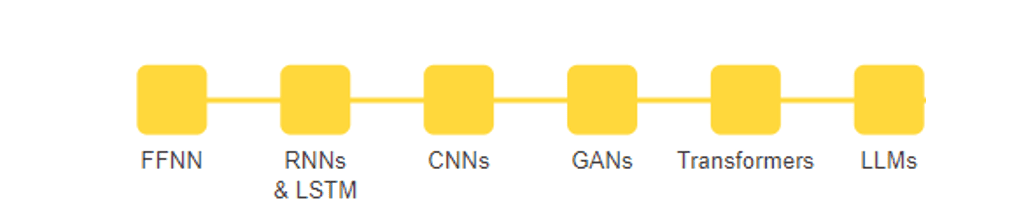

Walkthrough of neural network evolution (image by author).

Neural networks, a cornerstone of modern artificial intelligence, have revolutionized the way we process information, offering a glimpse into the future of technology. These complex computational systems, inspired by the intricacies of the human brain, have become pivotal in tasks ranging from image recognition and natural language understanding to autonomous driving and medical diagnosis. As we explore the historical evolution of neural networks, we will trace the remarkable journey that has shaped the modern landscape of AI.

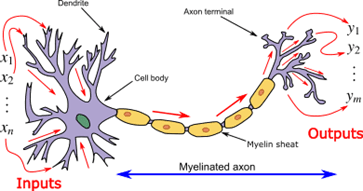

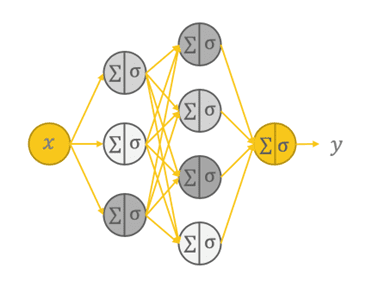

Neural networks, the foundational components of deep learning, owe their conceptual roots to the intricate biological networks of neurons within the human brain. This remarkable concept began with a fundamental analogy, drawing parallels between biological neurons and computational networks.

This analogy centers on the brain, which is composed of roughly 100 billion neurons. Each neuron maintains, on average, about 7,000 synaptic connections with other neurons, creating a complex neural network that underlies human cognitive processes and decision making.

Individually, a biological neuron operates through a series of simple electrochemical processes. It receives signals from other neurons through its dendrites. When these incoming signals add up to a certain level (a predetermined threshold), the neuron switches on and sends an electrochemical signal along its axon. This, in turn, affects the neurons connected to its axon terminals. The key thing to note here is that a neuron’s response is like a binary switch: it either fires (activates) or stays quiet, without any in-between states.

Biological neurons were the inspiration for artificial neural networks (image: Wikipedia).
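To make the all-or-nothing behavior described above concrete, here is a minimal Python sketch of a threshold unit in the spirit of early artificial neuron models such as the McCulloch-Pitts neuron. The function name, weights, and threshold are illustrative assumptions, not taken from any library.

```python
def threshold_neuron(inputs, weights, threshold):
    """Fire (return 1) if the weighted sum of the inputs reaches the threshold, else stay quiet (0)."""
    # Each input plays the role of a signal arriving at a dendrite; its weight scales its influence.
    total = sum(x * w for x, w in zip(inputs, weights))
    # All-or-nothing response: there are no in-between states.
    return 1 if total >= threshold else 0


# Hypothetical example: three incoming signals with illustrative weights.
weights = [0.5, 0.8, 0.3]
print(threshold_neuron([1, 1, 0], weights, threshold=1.0))  # prints 1: 0.5 + 0.8 = 1.3 reaches the threshold
print(threshold_neuron([1, 0, 1], weights, threshold=1.0))  # prints 0: 0.5 + 0.3 = 0.8 stays below it
```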

Artificial neural networks, as impressive as they are, remain far from approaching the intricacy and complexity of the human brain. Nonetheless, they have proven highly effective at problems that are challenging for conventional computer programs but appear intuitive to human cognition, such as image recognition and predictive analytics based on historical data.

Now that we’ve explored the foundational principles of how biological neurons function and how they inspired artificial neural networks, let’s journey through the evolution of the neural network architectures that have shaped the landscape of artificial intelligence.

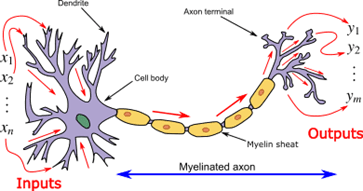

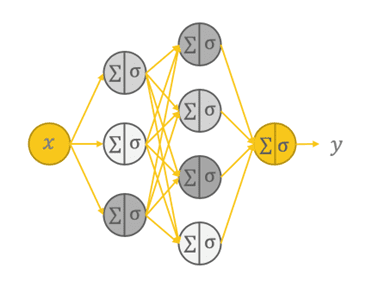

Feed-forward neural networks (FFNNs), often referred to as multilayer perceptrons (MLPs), are a fundamental type of neural network whose operation is rooted in the principles of information flow, interconnected layers, and parameter optimization.

At their core, FFNNs orchestrate a unidirectional journey of information. It all begins with the input layer containing n neurons, where data is initially ingested. This layer serves as the entry point for the network, acting as a receptor for the input features that need to be processed. From there, the data embarks on a transformative voyage through the network’s hidden layers.

One important aspect of FFNNs is their fully connected structure: each neuron in a layer is connected to every neuron in the next layer. This interconnectedness allows the network to perform computations and capture relationships within the data. It’s like a communication network where every node plays a role in processing information.

As the data passes through the hidden layers, it undergoes a series of calculations. Each neuron in a hidden layer receives inputs from all neurons in the previous layer, computes a weighted sum of these inputs, adds a bias term, and then passes the result through an activation function (commonly ReLU, sigmoid, or tanh). These operations, and in particular the nonlinear activation functions, enable the network to extract relevant patterns from the input and capture complex, nonlinear relationships within data. This is where FFNNs truly excel compared to shallower ML models.
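The per-neuron computation described above (weighted sum, bias, activation) can be sketched in plain Python. This is a minimal illustration, assuming a tiny 3-input network with made-up weights; the helper names `dense_layer`, `relu`, and `sigmoid` are hypothetical, and real frameworks perform the same arithmetic with vectorized matrix operations.

```python
import math


def relu(z):
    return max(0.0, z)


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def dense_layer(inputs, weights, biases, activation):
    """Fully connected layer: every output neuron receives every input.

    weights[j][i] is the weight from input i to output neuron j.
    """
    outputs = []
    for w_row, b in zip(weights, biases):
        # Weighted sum of all inputs plus this neuron's bias term.
        z = sum(w * x for w, x in zip(w_row, inputs)) + b
        # Nonlinear activation applied to the pre-activation value.
        outputs.append(activation(z))
    return outputs


# Hypothetical 3-input -> 2-hidden -> 1-output network with made-up parameters.
x = [1.0, 2.0, -1.0]
hidden = dense_layer(x, weights=[[0.2, -0.1, 0.4], [0.5, 0.3, -0.2]], biases=[0.1, -0.3], activation=relu)
output = dense_layer(hidden, weights=[[1.0, -0.5]], biases=[0.0], activation=sigmoid)
print(hidden, output)
```

Using a sigmoid on the output neuron mirrors the binary-classification setup in the KNIME example below: it squashes the final score into a value between 0 and 1.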

Architecture of fully-connected feed-forward neural networks (image by author).

However, that’s not where it ends. The real power of FFNNs lies in their ability to adapt. During training, the network adjusts its weights to minimize the difference between its predictions and the actual target values, as measured by a loss function. Backpropagation computes how much each weight contributed to this error (the gradient of the loss with respect to each weight), and an optimization algorithm such as gradient descent uses these gradients to update the weights iteratively. Together, they empower FFNNs to actually learn from data and improve their accuracy in making predictions or classifications.
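The split of labor between backpropagation (computing gradients) and gradient descent (updating weights) is easiest to see in a small hand-written example. The sketch below is an illustrative toy under stated assumptions, not the KNIME workflow: it trains a one-hidden-layer network with tanh hidden units and a sigmoid output on the XOR problem, using binary cross-entropy as the loss and per-example (stochastic) gradient descent. `train_xor` and its hyperparameters are hypothetical.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_xor(epochs=2000, lr=0.5, hidden_size=3, seed=0):
    """Train a 2-input, one-hidden-layer network on XOR with backpropagation + gradient descent.

    Returns the mean loss before training and after each epoch, plus the forward function.
    """
    rng = random.Random(seed)
    data = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 0.0)]
    # W1[j][i]: input i -> hidden j; W2[j]: hidden j -> output.
    W1 = [[rng.uniform(-1.0, 1.0) for _ in range(2)] for _ in range(hidden_size)]
    b1 = [0.0] * hidden_size
    W2 = [rng.uniform(-1.0, 1.0) for _ in range(hidden_size)]
    b2 = 0.0

    def forward(x):
        h = [math.tanh(sum(w * xi for w, xi in zip(W1[j], x)) + b1[j]) for j in range(hidden_size)]
        y_hat = sigmoid(sum(w * hj for w, hj in zip(W2, h)) + b2)
        return h, y_hat

    def mean_loss():
        # Binary cross-entropy averaged over the dataset.
        total = 0.0
        for x, y in data:
            _, y_hat = forward(x)
            y_hat = min(max(y_hat, 1e-12), 1 - 1e-12)  # avoid log(0)
            total -= y * math.log(y_hat) + (1 - y) * math.log(1 - y_hat)
        return total / len(data)

    losses = [mean_loss()]
    for _ in range(epochs):
        for x, y in data:
            h, y_hat = forward(x)
            # Backward pass. For a sigmoid output with cross-entropy loss,
            # dLoss/dz_out simplifies to (y_hat - y).
            d_out = y_hat - y
            # Chain rule back through W2 and tanh, whose derivative is 1 - h^2.
            d_hidden = [d_out * W2[j] * (1.0 - h[j] ** 2) for j in range(hidden_size)]
            # Gradient descent: step each parameter against its gradient.
            for j in range(hidden_size):
                W2[j] -= lr * d_out * h[j]
                b1[j] -= lr * d_hidden[j]
                for i in range(2):
                    W1[j][i] -= lr * d_hidden[j] * x[i]
            b2 -= lr * d_out
        losses.append(mean_loss())
    return losses, forward


losses, predict = train_xor()
print(f"mean loss before training: {losses[0]:.3f}, after: {losses[-1]:.3f}")
for x in ([0.0, 0.0], [0.0, 1.0], [1.0, 0.0], [1.0, 1.0]):
    print(x, round(predict(x)[1], 3))
```

Checking that the loss falls over training is a quick sanity test. Depending on the random initialization, such a small network can occasionally settle in a poor local minimum on XOR.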

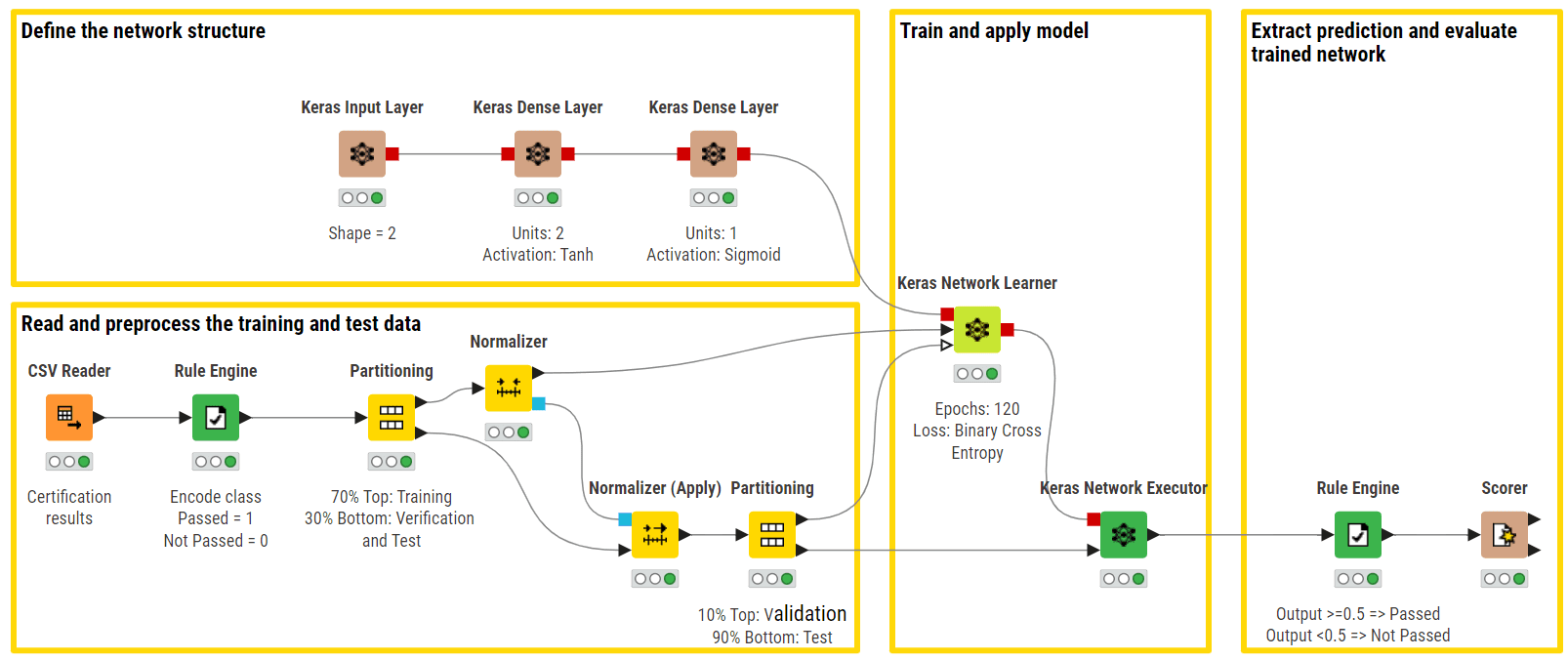

Example KNIME workflow of an FFNN used for the binary classification of certification exams (pass vs fail). In the upper branch, we can see the network architecture, which is made of an input layer, a fully connected hidden layer with a tanh activation function, and an output layer that uses a sigmoid activation function (image by author).

While powerful and versatile, FFNNs have some relevant limitations. For example, they fail to capture sequentiality and temporal/syntactic dependencies in the data, two crucial aspects for tasks in language processing and time series analysis. The need to overcome these limitations prompted the development of a new type of neural network architecture: Recurrent Neural Networks (RNNs), which introduced the concept of feedback loops to better handle sequential data.

At their core, RNNs share some similarities with FFNNs. They too are composed of layers of interconnected nodes, processing data to make predictions or classifications. However, their key differentiator lies in their ability to handle sequential data and capture temporal dependencies.

In an FFNN, information flows in a single, unidirectional path from the input layer to the output layer. This is suitable for tasks where the order of data doesn’t matter much. However, when dealing with sequences like time series data, language, or speech, maintaining context and understanding the order of data is crucial. This is where RNNs shine.

RNNs introduce the concept of feedback loops. These act as a sort of “memory”: they allow the network to maintain a hidden state that captures information about previous inputs and influences how the current input is processed and what output is produced. While traditional feed-forward networks assume that inputs and outputs are independent of each other, the output of a recurrent neural network depends on the prior elements within the sequence. This recurrent connection mechanism makes RNNs particularly well suited to handling sequences by “remembering” past information.

Another distinguishing characteristic of recurrent networks is that they share the same weight parameters across all time steps, and those weights are adjusted using the backpropagation through time (BPTT) algorithm. BPTT applies standard backpropagation to the network unrolled over the sequence, summing the gradients for each shared weight across all time steps.
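A recurrent step and the weight sharing described above can be sketched as follows. To keep it short, this assumes a single hidden unit with scalar weights (`w_x`, `w_h`, and `b` are hypothetical names) and the common tanh update h_t = tanh(w_x·x_t + w_h·h_{t-1} + b); real RNN layers use vectors and weight matrices.

```python
import math


def rnn_forward(sequence, w_x, w_h, b, h0=0.0):
    """Run a single-unit (scalar) vanilla RNN over a sequence and return every hidden state.

    The same parameters (w_x, w_h, b) are reused at every time step; this sharing is
    why BPTT has to accumulate their gradients across all steps.
    """
    h = h0
    states = []
    for x_t in sequence:
        # The new hidden state mixes the current input with the previous hidden state ("memory").
        h = math.tanh(w_x * x_t + w_h * h + b)
        states.append(h)
    return states


# Hypothetical parameters: the same inputs in a different order give a different final state.
print(rnn_forward([1.0, 0.0, 0.0], w_x=1.0, w_h=0.5, b=0.0)[-1])
print(rnn_forward([0.0, 0.0, 1.0], w_x=1.0, w_h=0.5, b=0.0)[-1])
```

The two calls differ only in input order, yet their final states differ: this order sensitivity is exactly what an FFNN lacks.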

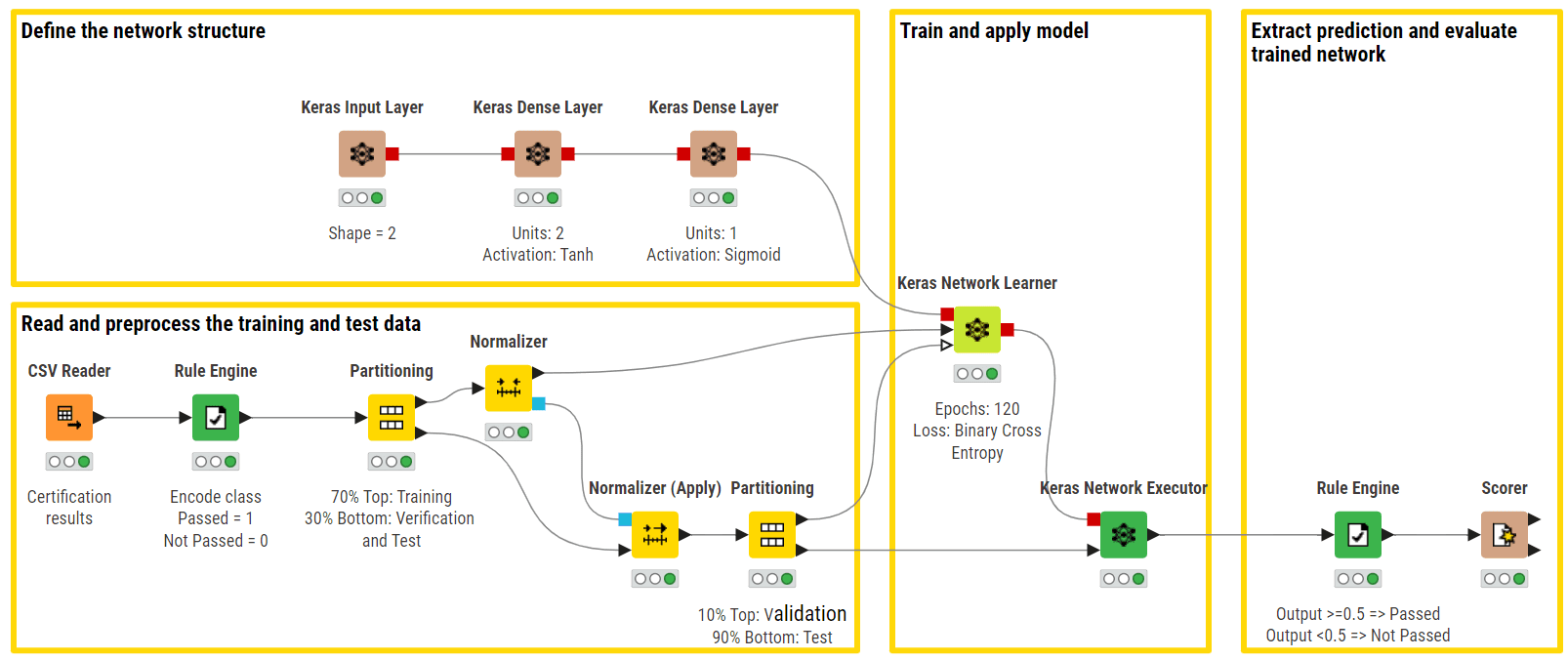

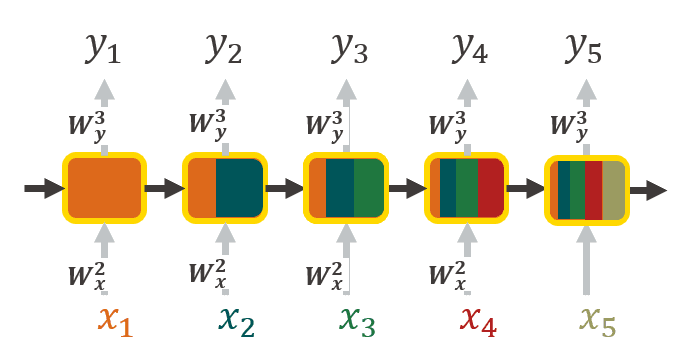

Unrolled representation of RNNs, where each input is enriched with context information coming from previous inputs. The color represents the propagation of context information (image by author).

However, traditional RNNs have their limitations. While in theory they should be able to capture long-range dependencies, in practice they struggle to do so, largely because of the vanishing gradient problem: as gradients are propagated back through many time steps, they are repeatedly multiplied by factors that are often smaller than one and shrink toward zero, which hinders the network’s ability to learn and remember information over many time steps.
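The vanishing gradient can be illustrated numerically with the same kind of scalar RNN (an assumption made for brevity). By the chain rule, the gradient of the last hidden state with respect to the initial one is a product of one local derivative per time step, w_h·(1 - h_t^2). When these factors are smaller than one, the product shrinks exponentially with sequence length (when they are larger than one, gradients can instead explode). The parameters below are hypothetical.

```python
import math


def gradient_through_time(sequence, w_x, w_h, b, h0=0.0):
    """Return d h_T / d h_0 for a scalar vanilla RNN: the product of the per-step local derivatives."""
    h = h0
    grad = 1.0
    for x_t in sequence:
        h = math.tanh(w_x * x_t + w_h * h + b)
        # Chain rule: d h_t / d h_{t-1} = w_h * tanh'(a_t) = w_h * (1 - h_t^2)
        grad *= w_h * (1.0 - h ** 2)
    return grad


# Hypothetical parameters: the influence of the first step fades as the sequence grows.
for steps in (5, 20, 50):
    print(steps, gradient_through_time([0.5] * steps, w_x=1.0, w_h=0.9, b=0.0))
```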

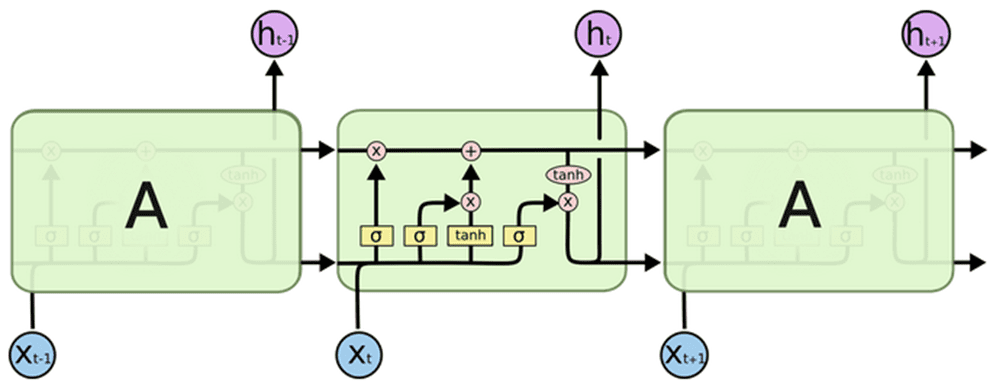

This is where Long Short-Term Memory (LSTM) units come into play. They are specifically designed to handle these issues by maintaining a cell state, a kind of internal memory carried along the sequence, which is regulated by three gates: the Forget gate, Input gate, and Output gate.

- Forget gate: This gate decides which information from the previous cell state should be discarded or forgotten. By examining the previous hidden state and the current input, it determines which information is no longer relevant for making predictions in the present.

- Input gate: This gate is responsible for incorporating new information into the cell state. It takes into account both the current input and the previous hidden state to decide what new information should be added to the cell state.

- Output gate: This gate determines what output will be generated by the LSTM unit. Based on the current input and the previous hidden state, it selects which parts of the updated cell state to expose as the output, which can be used for predictions or passed on to the next time step.

Visual representation of Long Short-Term Memory units (image by Christopher Olah).
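The gate descriptions above correspond to the standard LSTM update equations. The following sketch implements one step of a single-unit LSTM in plain Python, assuming scalar inputs and a hypothetical `params` dictionary holding an input weight, a recurrent weight, and a bias for each gate. It omits refinements such as peephole connections, and real LSTM layers use vectors and matrices.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x, h_prev, c_prev, params):
    """One step of a single-unit LSTM (standard gates, no peephole connections).

    params maps each gate name to a (w_x, w_h, b) triple.
    """
    def pre(gate):
        w_x, w_h, b = params[gate]
        return w_x * x + w_h * h_prev + b

    f = sigmoid(pre("forget"))       # how much of the old cell state to keep (0..1)
    i = sigmoid(pre("input"))        # how much of the candidate to write (0..1)
    g = math.tanh(pre("candidate"))  # candidate values to add to the cell state
    o = sigmoid(pre("output"))       # how much of the cell state to expose (0..1)

    c = f * c_prev + i * g           # updated cell state
    h = o * math.tanh(c)             # new hidden state, i.e. the unit's output
    return h, c


# Hypothetical parameters chosen only for illustration.
params = {
    "forget": (0.0, 0.0, 3.0),       # large bias -> forget gate close to 1 (mostly remember)
    "input": (1.0, 0.0, 0.0),
    "candidate": (1.0, 0.0, 0.0),
    "output": (0.0, 0.0, 0.0),
}
h, c = 0.0, 0.0
for x in [1.0, 0.0, 0.0, 0.0]:
    h, c = lstm_step(x, h, c, params)
    print(round(h, 4), round(c, 4))
```

With a large forget-gate bias, the cell state decays only slowly once the input goes quiet, which is how an LSTM can hold on to information across many time steps.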

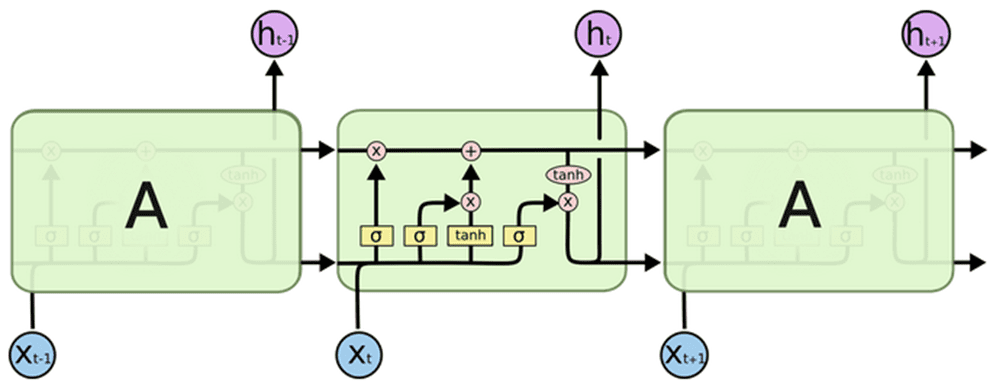

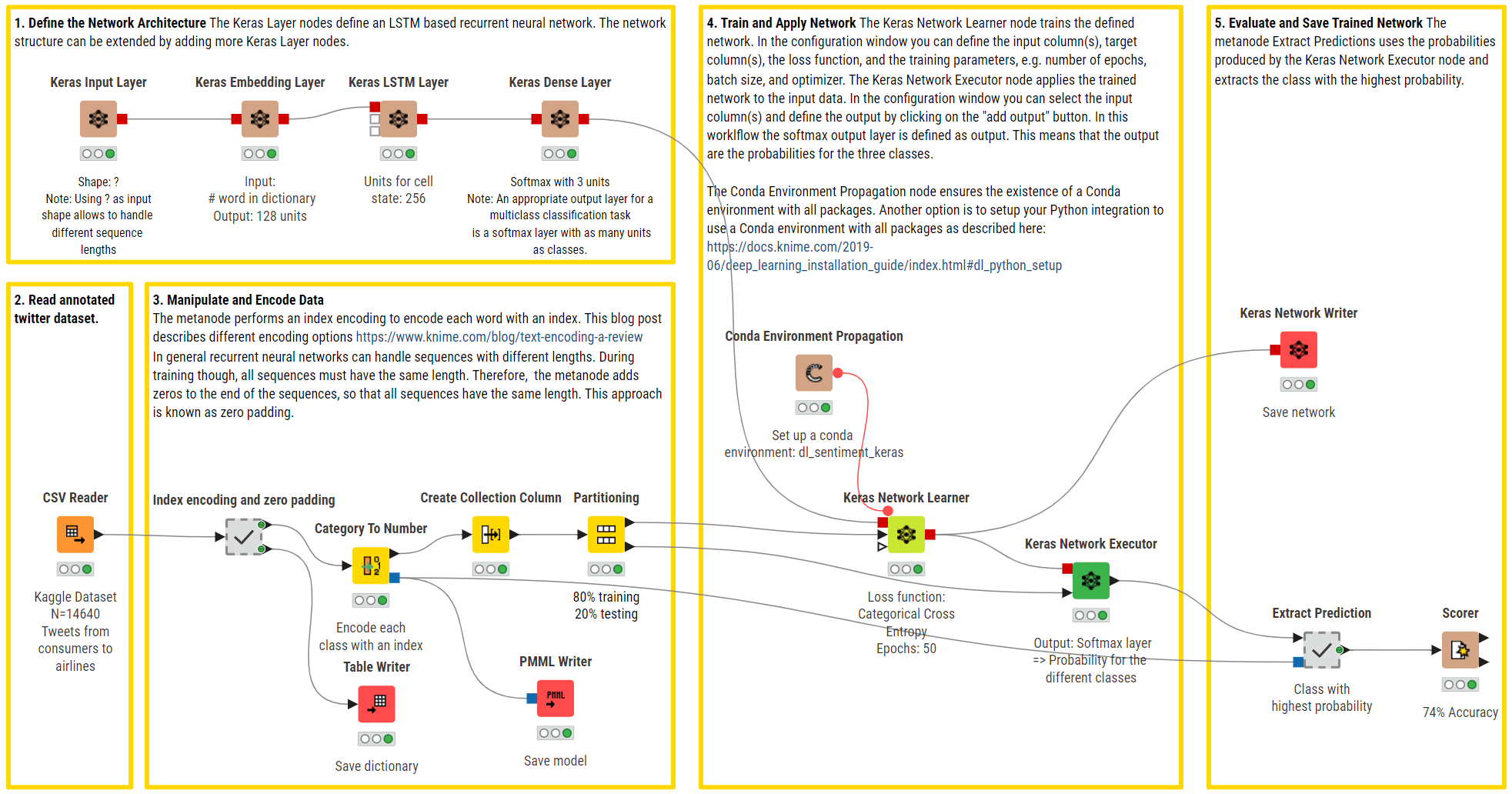

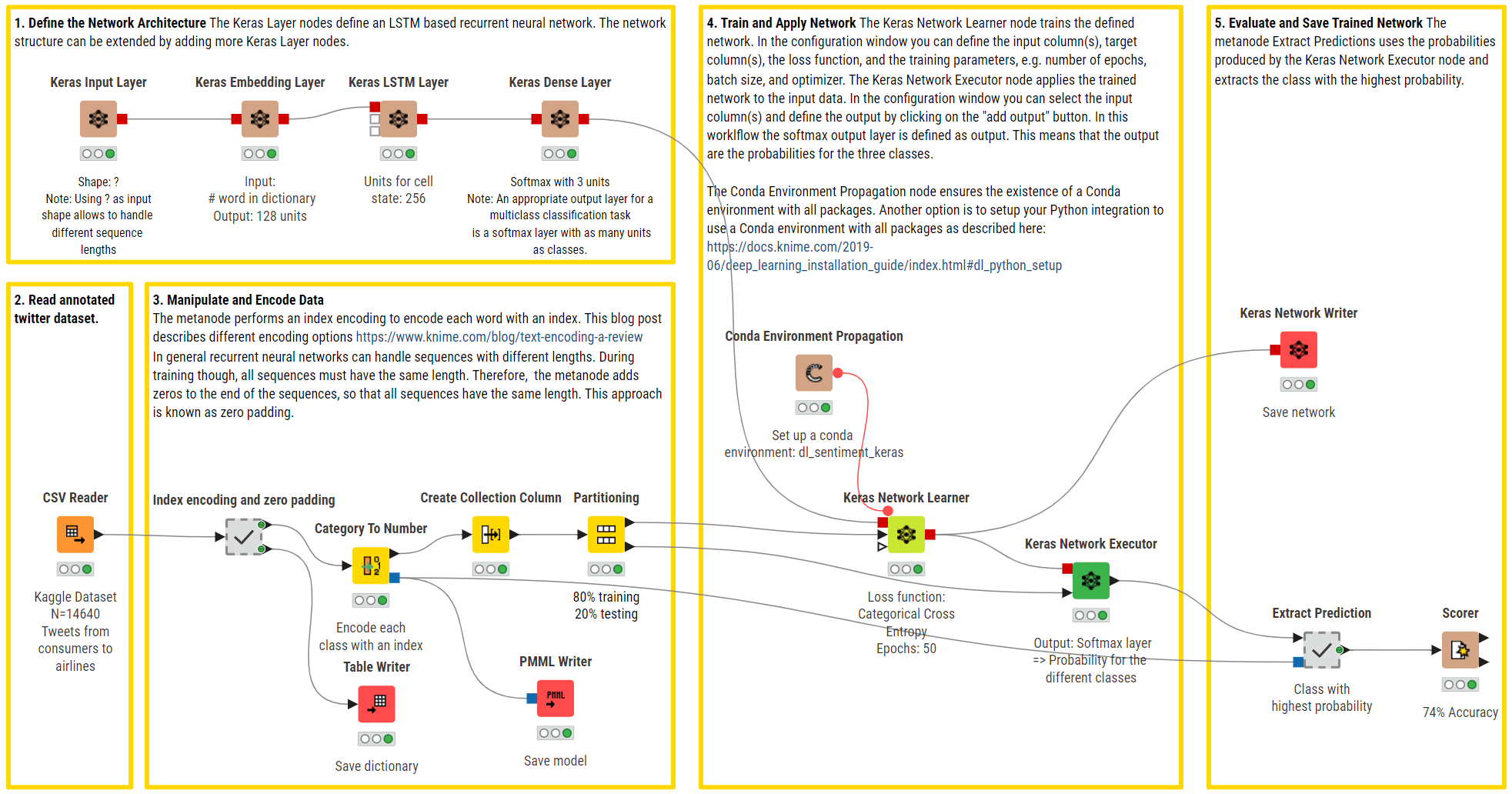

Example KNIME workflow of RNNs with LSTM units used for a multi-class sentiment prediction (positive, negative, neutral). The upper branch defines the network architecture using an input layer to handle strings of different lengths, an embedding layer, an LSTM layer with several units, and a fully connected output layer with a Softmax activation function to return predictions.

In summary, RNNs, and especially LSTM units, are tailored for sequential data, allowing them to maintain memory and capture temporal dependencies, which is a critical capability for tasks like natural language processing, speech recognition, and time series prediction.

As we shift from RNNs capturing sequential dependencies, the evolution continues with Convolutional Neural Networks (CNNs). Unlike RNNs, CNNs excel at spatial feature extraction from structured grid-like data, making them ideal for image and pattern recognition tasks. This transition reflects the diverse applications of neural networks across different data types and structures.

CNNs are a special breed of neural networks, particularly well-suited for processing image data, such as 2D images or even 3D video data. Their architecture relies on a multilayered feed-forward neural network with at least one convolutional layer.

What makes CNNs stand out is their network connectivity and approach to feature extraction, which allows them to automatically identify relevant patterns in the data. Unlike traditional FFNNs, which connect every neuron in one layer to every neuron in the next, CNNs employ a sliding window known as a kernel or filter. This sliding window scans across the input data and is especially powerful for tasks where spatial relationships matter, like identifying objects in images or tracking motion in videos. As the kernel is moved across the image, a convolution operation is performed between the kernel and the pixel values (from a strictly mathematical standpoint, this operation is a cross correlation), and a nonlinear activation function, usually ReLU, is applied. This produces a high value if the feature is in the image patch and a small value if it is not.

Together with the kernel, hyperparameters such as stride (i.e., the number of pixels by which we slide the kernel) and dilation rate (i.e., the spacing between kernel cells) shape how the input is scanned. Because each kernel looks at only a local region at a time, the network can focus on specific features, recognizing patterns and details in specific regions without considering the entire input at once.

Convolution operation with stride length = 2 (GIF by Sumit Saha).
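The sliding-window computation, including stride and dilation, can be sketched in a few lines of plain Python. This is an illustrative toy assuming a single-channel image, no padding, and a hand-picked vertical-edge kernel (`conv2d_relu` is a hypothetical name). Like most deep learning libraries, it computes a cross-correlation (the kernel is not flipped), and it applies ReLU to each output.

```python
def conv2d_relu(image, kernel, stride=1, dilation=1):
    """Slide a kernel over a 2D image (no padding), compute a cross-correlation, then apply ReLU."""
    kh, kw = len(kernel), len(kernel[0])
    # The kernel's effective footprint grows with dilation (gaps between kernel cells).
    span_h = (kh - 1) * dilation + 1
    span_w = (kw - 1) * dilation + 1
    out_h = (len(image) - span_h) // stride + 1
    out_w = (len(image[0]) - span_w) // stride + 1
    output = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            total = 0.0
            for i in range(kh):
                for j in range(kw):
                    total += kernel[i][j] * image[r * stride + i * dilation][c * stride + j * dilation]
            row.append(max(0.0, total))  # ReLU: keep strong positive responses, zero out the rest
        output.append(row)
    return output


# A hypothetical 6x6 image that is dark on the left and bright on the right, plus a vertical-edge kernel.
image = [[0, 0, 0, 1, 1, 1] for _ in range(6)]
kernel = [[-1, 1], [-1, 1]]
print(conv2d_relu(image, kernel))              # 5x5 output: high values only where the edge is
print(conv2d_relu(image, kernel, stride=2))    # 3x3 output: the kernel jumps 2 pixels at a time
print(conv2d_relu(image, kernel, dilation=2))  # 4x4 output: each kernel covers a wider area
```

With stride 2 the output shrinks because the kernel skips every other position, while dilation 2 widens the area each kernel covers without adding weights.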

Some kernels may specialize in detecting edges or corners, while others, typically in deeper layers, might respond to more complex patterns such as cats, dogs, or street signs within an image. By stacking several convolutional and pooling layers (pooling layers downsample feature maps, for example by keeping only the maximum value in each small window), CNNs build a hierarchical representation of the input, gradually abstracting features from low-level to high-level, loosely analogous to how our brains process visual information.
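Pooling itself is even simpler than convolution. Here is a minimal sketch of 2×2 max pooling with stride 2, a common configuration, assuming a single-channel feature map given as nested lists (`max_pool2d` is a hypothetical helper):

```python
def max_pool2d(feature_map, size=2, stride=2):
    """Downsample a 2D feature map by keeping the maximum value in each size x size window."""
    out_h = (len(feature_map) - size) // stride + 1
    out_w = (len(feature_map[0]) - size) // stride + 1
    return [
        [
            max(feature_map[r * stride + i][c * stride + j] for i in range(size) for j in range(size))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


fmap = [
    [1, 3, 0, 2],
    [4, 2, 1, 0],
    [0, 1, 5, 6],
    [2, 2, 7, 1],
]
print(max_pool2d(fmap))  # [[4, 2], [2, 7]]
```

Each output keeps only the strongest response in its window, which halves the spatial size here and makes the representation less sensitive to small shifts of a feature.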

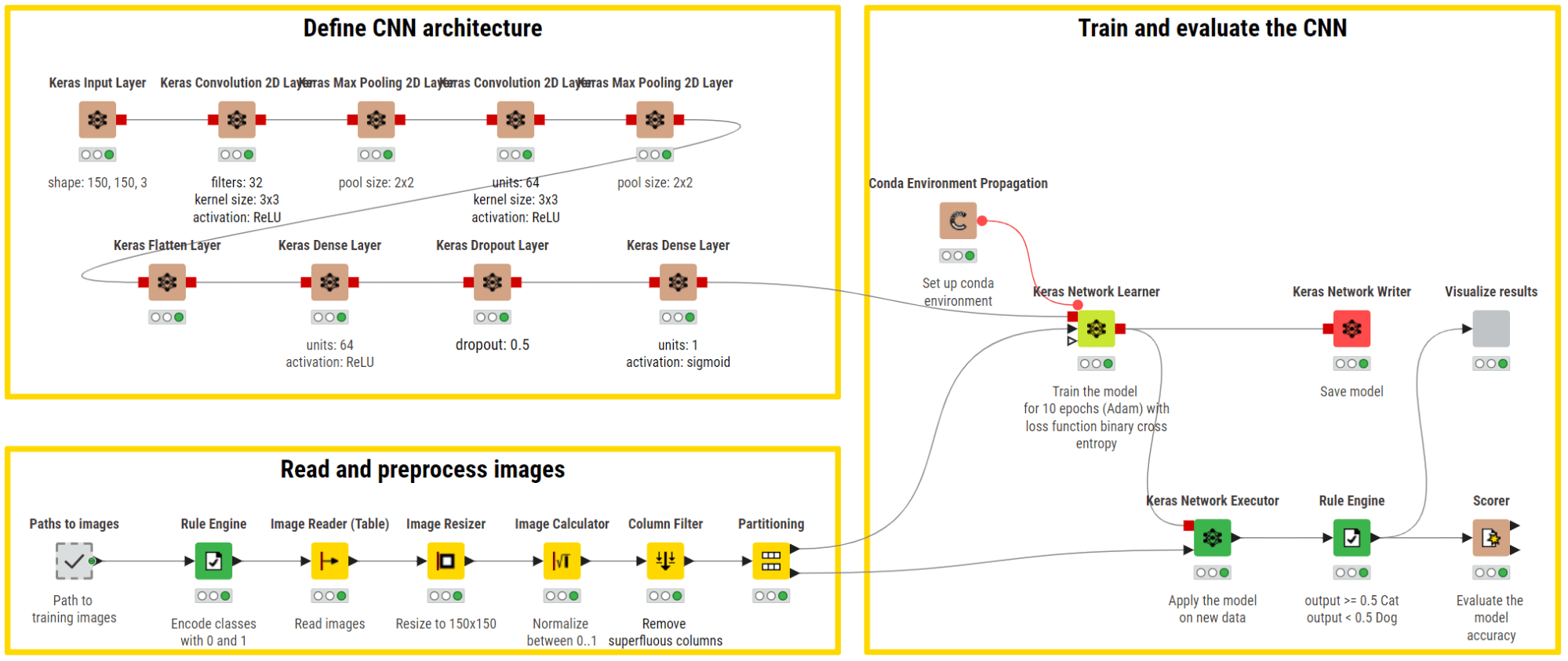

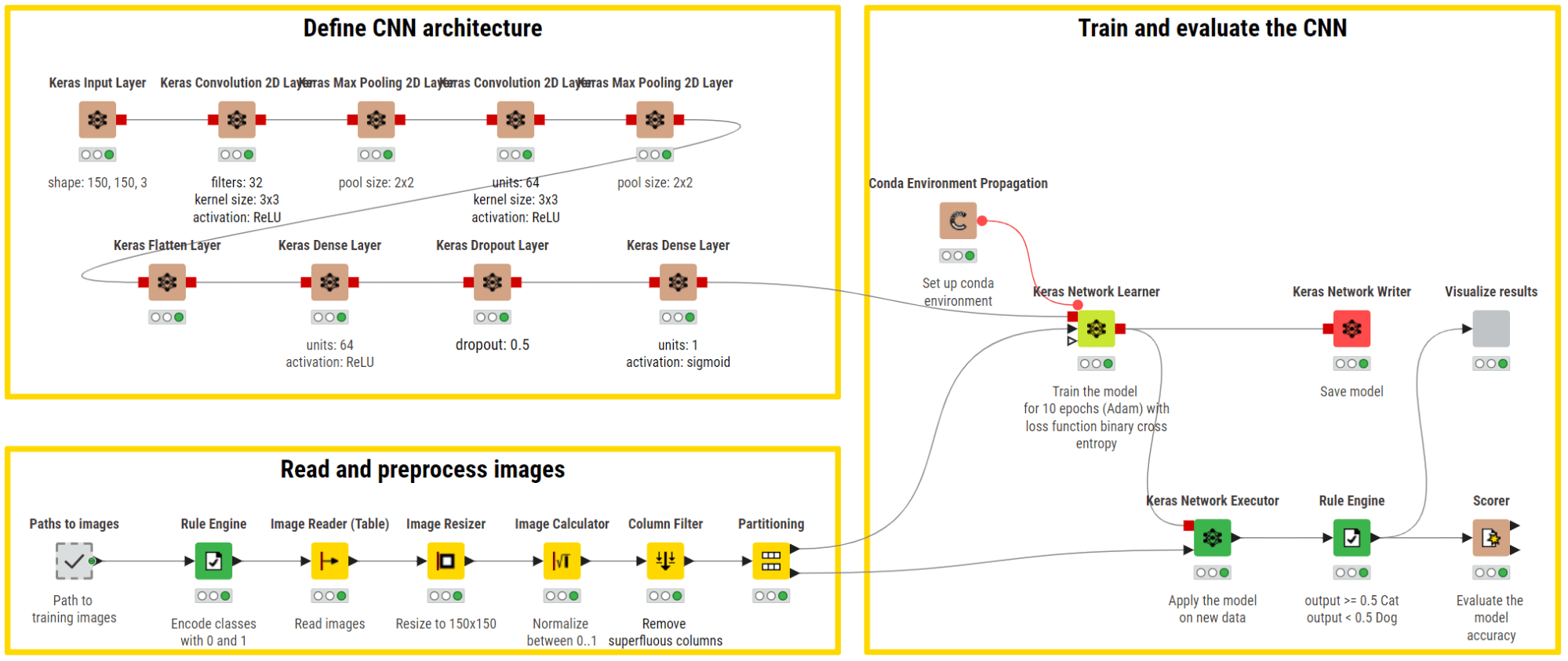

Example KNIME workflow of a CNN for binary image classification (cats vs dogs). The upper branch defines the network architecture using a series of convolutional layers and max pooling layers for automatic feature extraction from images. A flatten layer then converts the extracted features into a one-dimensional input for the FFNN that performs the binary classification.

While CNNs excel at feature extraction and have revolutionized computer vision tasks, they act as passive observers: they are not designed to generate new data or content. This is not an inherent limitation of the network per se, but a powerful engine is useless without fuel, and for neural networks the fuel is data. Real, meaningful image and video data tend to be hard and expensive to collect and are often subject to copyright and data privacy restrictions. This constraint led to the development of a novel paradigm that builds on CNNs but marks a leap from image classification to creative synthesis: Generative Adversarial Networks (GANs).

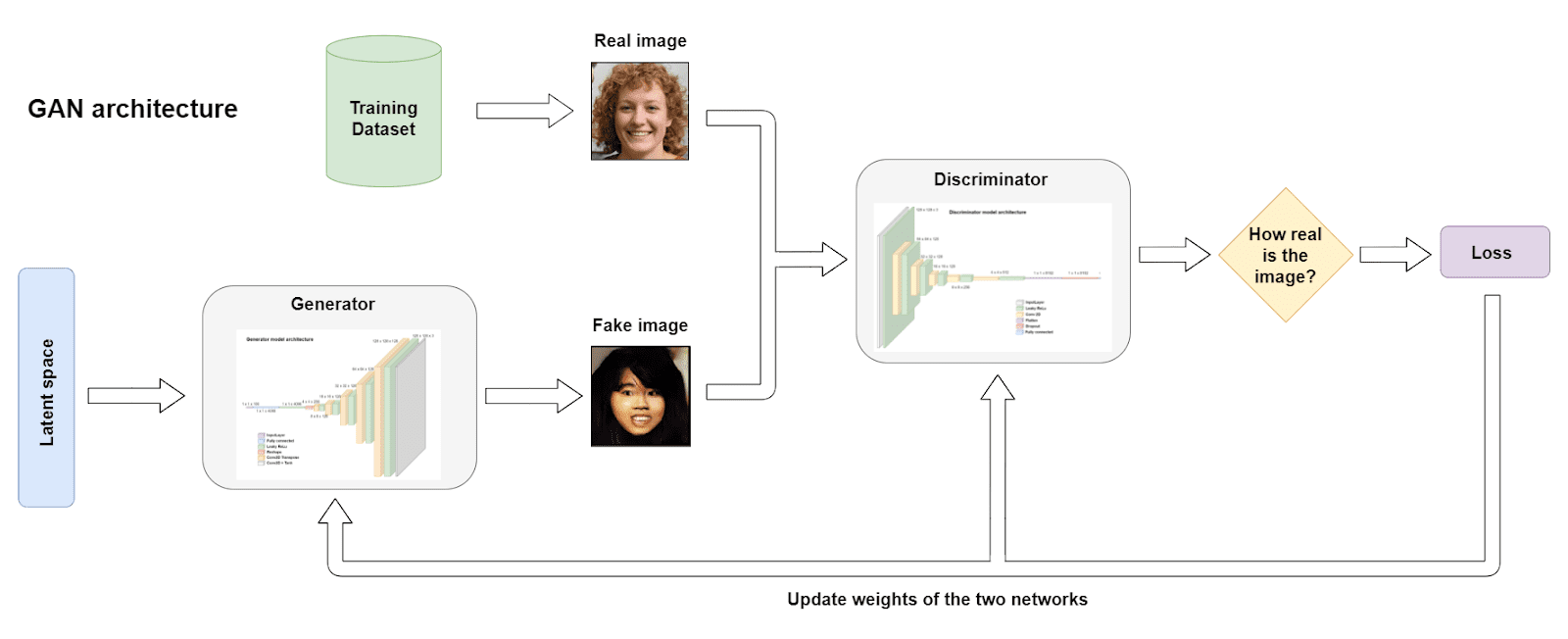

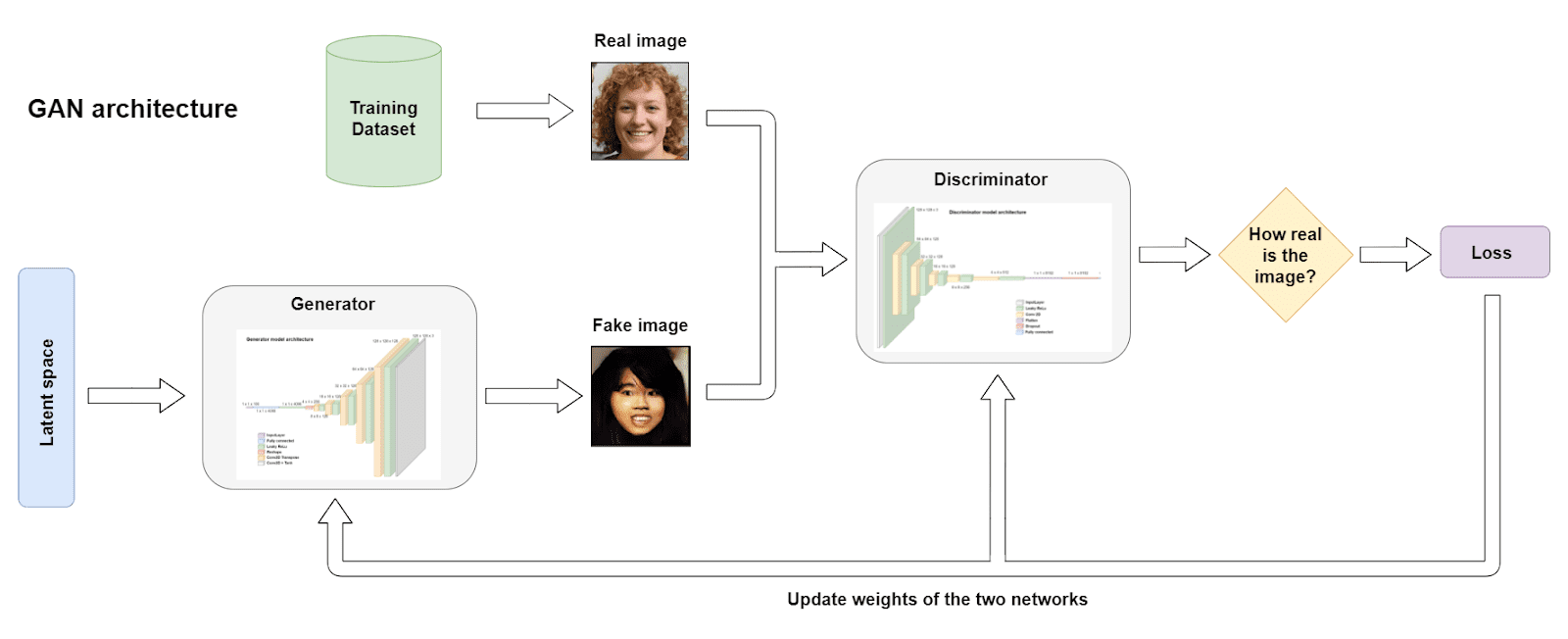

GANs are a particular family of neural networks whose primary, but not only, purpose is to produce synthetic data that closely mimics a given dataset of real data. Unlike most neural networks, GANs have an ingenious architectural design consisting of two core models:

- Generator model: The first player in this neural network duet is the generator model. This component is tasked with a fascinating mission: given random noise or input vectors, it strives to create artificial samples that resemble real samples as closely as possible. Imagine it as an art forger, attempting to craft paintings that are indistinguishable from masterpieces.

- Discriminator model: Playing the adversary role is the discriminator model. Its job is to differentiate between the generated samples produced by the generator and the authentic samples from the original dataset. Think of it as an art connoisseur, trying to spot the forgeries among the genuine artworks.

Now, here’s where the magic happens: GANs engage in a continuous, adversarial dance. The generator aims to improve its artistry, continually fine-tuning its creations to become more convincing. Meanwhile, the discriminator becomes a sharper detective, honing its ability to tell the real from the fake.
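This adversarial game is commonly formalized with the minimax objective from the original GAN paper (Goodfellow et al., 2014), where D(x) is the discriminator’s estimated probability that a sample x is real, G(z) is a sample generated from a noise vector z, and p_data and p_z are the data and noise distributions:

```latex
\min_{G}\,\max_{D}\; V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}}\!\left[\log D(x)\right]
  + \mathbb{E}_{z \sim p_{z}}\!\left[\log\left(1 - D(G(z))\right)\right]
```

The discriminator pushes V up by assigning high probability to real samples and low probability to generated ones, while the generator pushes it down by making D(G(z)) large. In practice, the generator is often trained to maximize log D(G(z)) instead, which gives it stronger gradients early in training.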

GAN architecture (image by author).

As training progresses, this dynamic interplay between the generator and discriminator leads to a fascinating outcome. The generator strives to generate samples that are so realistic that even the discriminator can’t tell them apart from the genuine ones. This competition drives both components to refine their abilities continuously.

The result? A generator that becomes astonishingly adept at producing data that appears authentic, be it images, music, or text. This capability has led to remarkable applications in various fields, including image synthesis, data augmentation, image-to-image translation, and image editing.

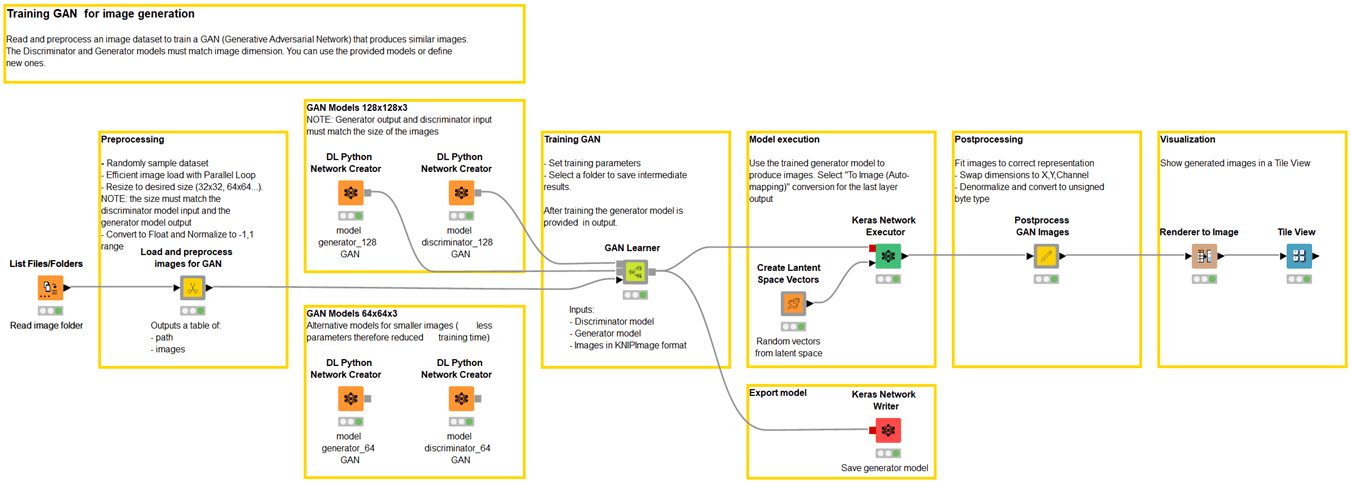

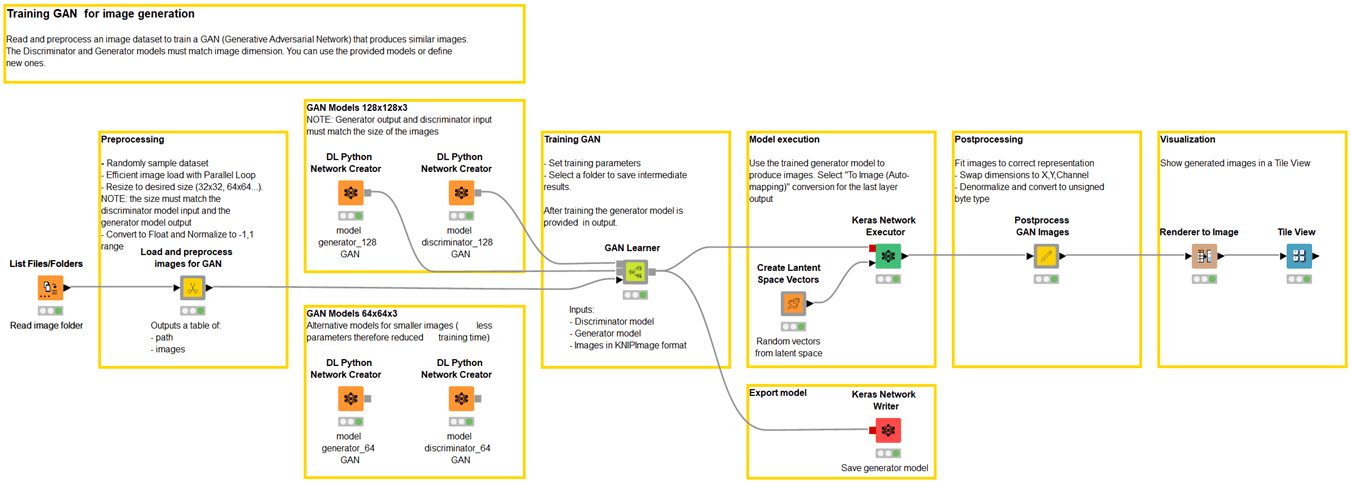

Example KNIME workflow of GANs for the generation of synthetic images (i.e., animals, human faces and Simpson characters).

GANs pioneered realistic image and video content creation by pitting a generator against a discriminator. As the need for creativity and advanced operations extended from images to sequential data such as text, models for more sophisticated natural language understanding, machine translation, and text generation were introduced. This led to the development of Transformers, a remarkable deep neural network architecture that not only outperformed previous architectures by effectively capturing long-range language dependencies and semantic context, but also became the foundation of most recent AI-driven applications.

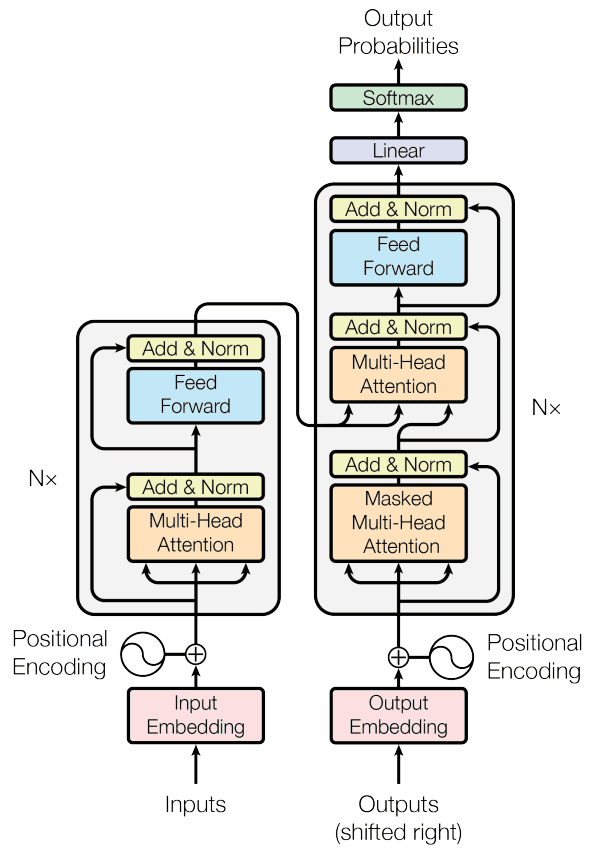

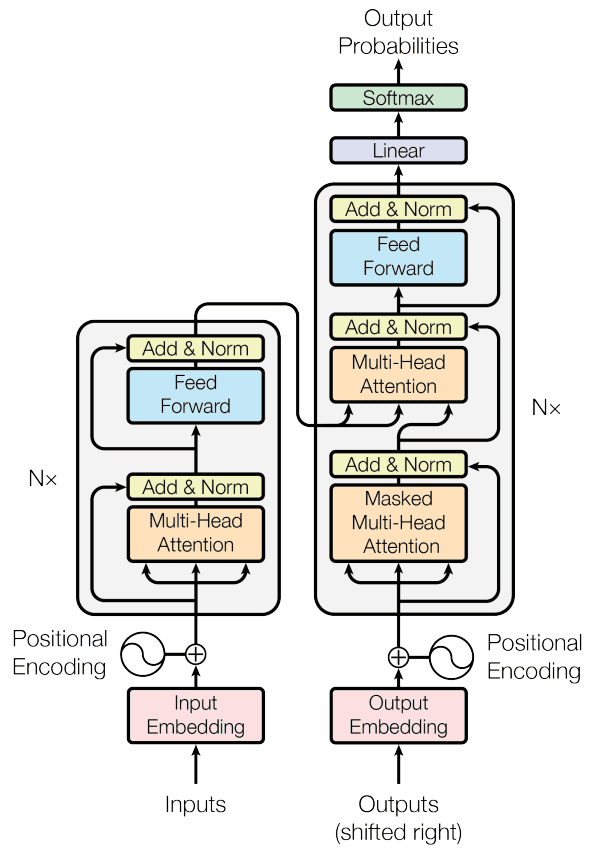

Introduced in 2017, Transformers boast a unique feature that allows them to replace traditional recurrent layers: a self-attention mechanism that models intricate relationships between all words in a document, regardless of their position. This makes Transformers excellent at tackling the challenge of long-range dependencies in natural language. Transformer architectures consist of two main building blocks:

- Encoder. Here the input sequence is embedded into vectors, which are then processed by the self-attention mechanism. This mechanism computes attention scores for each token, determining its importance in relation to the others. These scores are used to compute weighted sums, which are fed into an FFNN to generate context-aware representations for each token. Multiple stacked encoder layers repeat this process, enhancing the model’s ability to capture hierarchical and contextual information.

- Decoder. This block is responsible for generating output sequences and follows a process similar to that of the encoder. At each step, it attends both to the encoder’s output and to its own previously generated tokens, so that generation takes into account both the input context and the output produced so far.

Transformer model architecture (image: Vaswani et al., 2017).

Consider this sentence: “I arrived at the bank after crossing the river”. The word “bank” can have two meanings: a financial institution or the edge of a river. Here’s where Transformers shine. They can focus on the word “river” to disambiguate “bank” by comparing “bank” to every other word in the sentence and assigning attention scores. These scores determine how much each word influences the updated representation of “bank”. In this case, “river” gets a higher score, effectively clarifying the intended meaning.
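The attention scores in this example come from scaled dot-product attention, softmax(QKᵀ/√d)·V, as defined in the original Transformer paper. The sketch below is deliberately simplified: it uses invented 3-dimensional word vectors, chosen so that “bank” and “river” point in similar directions, and feeds them directly as queries, keys, and values, whereas a real Transformer first applies learned projection matrices and uses multiple attention heads. The helper names are hypothetical.

```python
import math


def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(queries, keys, values):
    """Scaled dot-product attention, softmax(Q K^T / sqrt(d)) V, computed one query at a time."""
    d = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Score this query against every token's key, scaled by sqrt(d).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)  # attention weights: non-negative and summing to 1
        # Updated representation = attention-weighted sum of the value vectors.
        outputs.append([sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))])
        all_weights.append(weights)
    return outputs, all_weights


# Invented toy embeddings (not from any trained model).
tokens = ["I", "arrived", "bank", "crossing", "river"]
X = [
    [0.1, 0.0, 0.2],  # I
    [0.0, 0.3, 0.1],  # arrived
    [1.0, 0.2, 0.0],  # bank
    [0.2, 0.5, 0.1],  # crossing
    [0.9, 0.1, 0.1],  # river
]
outputs, weights = self_attention(X, X, X)
for tok, w in zip(tokens, weights[tokens.index("bank")]):
    print(f"{tok:>8}: {w:.2f}")
```

Apart from “bank” itself, “river” receives the largest weight, so the updated representation of “bank” is pulled toward “river”. In a trained model, that similarity is learned rather than hand-picked.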

To work this well, Transformers rely on millions of trainable parameters and require large text corpora and sophisticated training strategies. One notable training approach used with Transformers is masked language modeling (MLM). During training, a random subset of the tokens in the input sequence (15% in BERT) is masked, and the model’s objective is to predict these masked tokens. This strategy encourages the model to grasp contextual relationships between words, because it must rely on the surrounding words to make accurate predictions. This approach, popularized by the BERT model, has been instrumental in achieving state-of-the-art results in various NLP tasks.
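The masking step can be sketched as follows, assuming whitespace-tokenized words and a literal `[MASK]` placeholder (`mask_tokens` is a hypothetical helper). It is simplified: BERT selects 15% of tokens but, of those, replaces 80% with `[MASK]`, 10% with a random token, and leaves 10% unchanged, whereas this sketch always uses `[MASK]`.

```python
import random

MASK = "[MASK]"


def mask_tokens(tokens, mask_prob=0.15, seed=None):
    """Randomly hide a fraction of tokens; return the masked sequence and the prediction targets.

    Simplified: every selected token becomes [MASK] (no random-token or keep-unchanged cases).
    """
    rng = random.Random(seed)
    masked, targets = [], {}
    for pos, tok in enumerate(tokens):
        if rng.random() < mask_prob:
            masked.append(MASK)
            targets[pos] = tok  # the model must recover this token from the surrounding context
        else:
            masked.append(tok)
    return masked, targets


sentence = "i arrived at the bank after crossing the river".split()
masked, targets = mask_tokens(sentence, mask_prob=0.3, seed=1)
print(" ".join(masked))
print(targets)  # position -> original token the model is trained to predict
```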

An alternative to MLM for Transformers is autoregressive modeling. In this method, the model is trained to generate one token at a time, conditioning on the previously generated tokens. Autoregressive models like GPT (Generative Pre-trained Transformer) follow this methodology and excel in tasks where the goal is to predict the next most suitable word from left to right, such as free text generation, question answering, and text completion.
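Autoregressive generation boils down to a loop that repeatedly asks the model for a distribution over the next token given everything generated so far. In the sketch below, `toy_next_token_probs` is a hypothetical hard-coded stand-in for a trained model (it only looks at the last token, unlike a real Transformer, which attends to the whole prefix), and decoding is greedy. Real systems often sample from the distribution instead, for example with a temperature or a top-k cutoff.

```python
def toy_next_token_probs(prefix):
    """Hypothetical stand-in for a trained model: returns P(next token | prefix).

    This toy only looks at the last token; a real autoregressive Transformer attends to the whole prefix.
    """
    table = {
        "<s>": {"the": 0.6, "a": 0.4},
        "the": {"river": 0.5, "bank": 0.3, "<eos>": 0.2},
        "a": {"bank": 0.7, "<eos>": 0.3},
        "river": {"bank": 0.6, "<eos>": 0.4},
        "bank": {"<eos>": 0.9, "the": 0.1},
    }
    return table[prefix[-1]]


def generate_greedy(next_token_probs, max_len=10):
    """Generate one token at a time, always appending the most probable next token."""
    tokens = ["<s>"]
    for _ in range(max_len):
        probs = next_token_probs(tokens)      # condition on everything generated so far
        next_tok = max(probs, key=probs.get)  # greedy choice
        if next_tok == "<eos>":
            break
        tokens.append(next_tok)
    return tokens[1:]  # drop the start symbol


print(generate_greedy(toy_next_token_probs))  # ['the', 'river', 'bank']
```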

Furthermore, Transformers are highly parallelizable, which helps offset their need for extensive text resources: because self-attention processes all positions in a sequence at once rather than one step at a time, Transformers can be trained much faster than sequential approaches like RNNs or LSTM units. This efficient computation reduces training time and has led to groundbreaking applications in natural language processing, machine translation, and more.

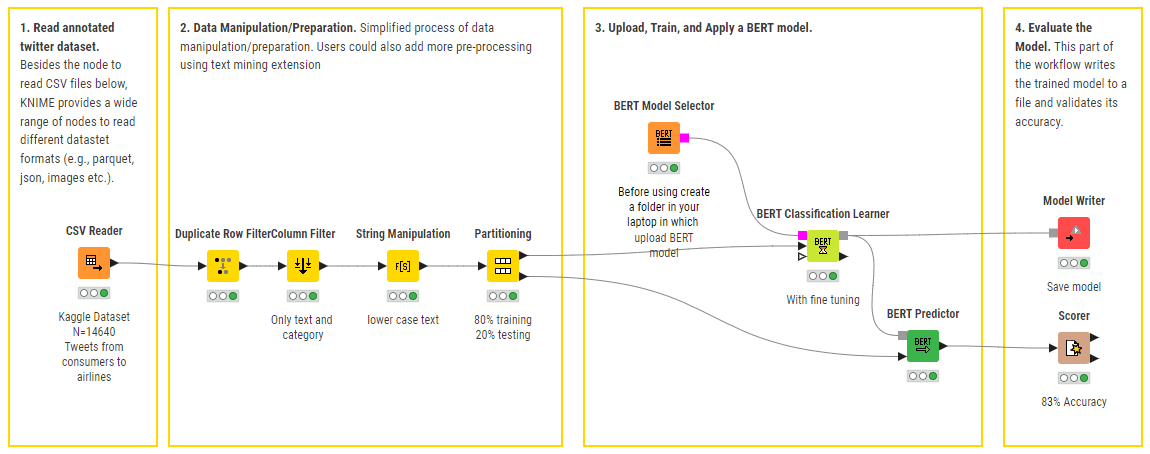

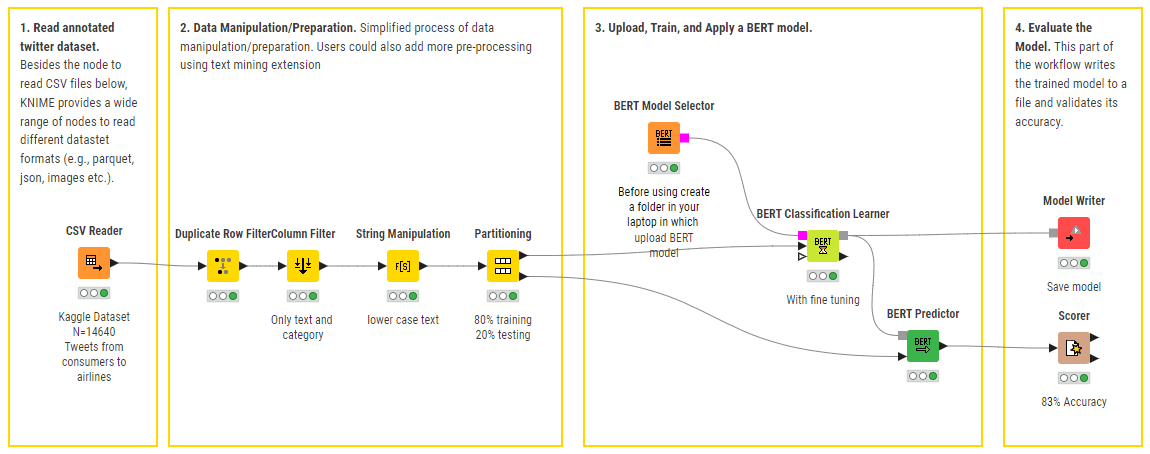

A pivotal Transformer model developed by Google in 2018 that made a substantial impact is BERT (Bidirectional Encoder Representations from Transformers). BERT relied on MLM training and was built around bidirectional context, meaning it considers both the left and right context of a word when predicting a masked token. This bidirectional approach significantly enhanced the model’s understanding of word meanings and contextual nuances, establishing new benchmarks for natural language understanding and a wide array of downstream NLP tasks.

Example KNIME workflow of BERT for multi-class sentiment prediction (positive, negative, neutral). Minimal preprocessing is performed, and a pretrained BERT model is fine-tuned for the task.

Building on the powerful self-attention mechanisms introduced by Transformers, the growing demand for versatile applications capable of complex natural language tasks, such as document summarization, text editing, or code generation, drove the development of large language models. These models employ deep neural networks with billions of parameters to excel at such tasks and meet the evolving requirements of the data analytics industry.

Large language models (LLMs) are a revolutionary category of multi-purpose deep neural networks, increasingly multimodal (accepting image and audio as well as text inputs), that have garnered significant attention in recent years. The adjective “large” stems from their vast size, as they encompass billions of trainable parameters. Some of the most well-known examples include OpenAI’s ChatGPT, Google’s Bard, and Meta’s LLaMA.

What sets LLMs apart is their unparalleled ability and flexibility to process and generate human-like text. They excel in natural language understanding and generation tasks, ranging from text completion and translation to question answering and content summarization. The key to their success lies in their extensive training on massive text corpora, allowing them to capture a rich understanding of language nuances, context, and semantics.

These models employ a deep neural architecture with multiple layers of self-attention mechanisms, enabling them to weigh the importance of different words and phrases in a given context. This dynamic adaptability makes them exceptionally proficient in processing inputs of various types, comprehending complex language structures, and generating outputs based on human-defined prompts.

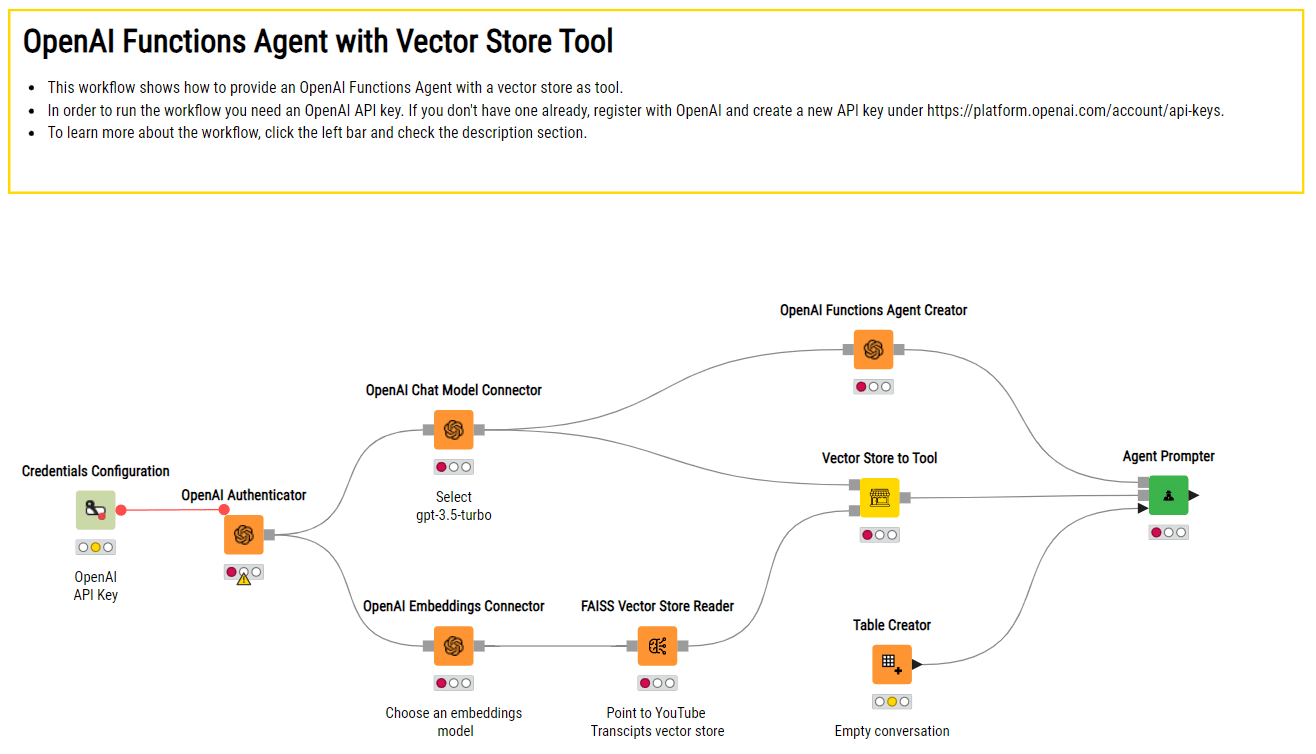

Example KNIME workflow for creating an AI assistant that relies on OpenAI’s ChatGPT and a vector store with custom documents to answer domain-specific questions.

LLMs have paved the way for a multitude of applications across various industries, from healthcare and finance to entertainment and customer service. They’ve even sparked new frontiers in creative writing and storytelling.

However, their enormous size, resource-intensive training processes, and potential copyright infringements in generated content have also raised concerns about ethical usage, environmental impact, and accessibility. Lastly, while they keep improving, LLMs still have serious flaws: they can “hallucinate” incorrect facts, be biased or gullible, or be persuaded into creating toxic content.

The evolution of neural networks, from their humble beginnings to the rise of large language models, raises a profound philosophical question: will this journey ever come to an end?

The trajectory of technology has always been marked by relentless advancement. Each milestone only serves as a stepping stone to the next innovation. As we strive to create machines that can replicate human cognition and understanding, it’s tempting to ponder whether there’s an ultimate destination, a point where we say, “This is it; we’ve reached the pinnacle.”

However, the essence of human curiosity and the boundless complexities of the natural world suggest otherwise. Just as our understanding of the universe continually deepens, the quest to develop more intelligent, capable, and ethical neural networks may be an endless journey.Walkthrough of neural network evolution (image by author).

Neural networks, the fundamental building blocks of artificial intelligence, have revolutionized the way we process information, offering a glimpse into the future of technology. These complex computational systems, inspired by the intricacies of the human brain, have become pivotal in tasks ranging from image recognition and natural language understanding to autonomous driving and medical diagnosis. As we explore neural networks’ historical evolution, we will uncover their remarkable journey of how they have evolved to shape the modern landscape of ai.

Neural networks, the foundational components of deep learning, owe their conceptual roots to the intricate biological networks of neurons within the human brain. This remarkable concept began with a fundamental analogy, drawing parallels between biological neurons and computational networks.

This analogy centers around the brain, which is composed of roughly 100 billion neurons. Each neuron maintains about 7,000 synaptic connections with other neurons, creating a complex neural network that underlies human cognitive processes and decision making.

Individually, a biological neuron operates through a series of simple electrochemical processes. It receives signals from other neurons through its dendrites. When these incoming signals add up to a certain level (a predetermined threshold), the neuron switches on and sends an electrochemical signal along its axon. This, in turn, affects the neurons connected to its axon terminals. The key thing to note here is that a neuron’s response is like a binary switch: it either fires (activates) or stays quiet, without any in-between states.

Biological neurons were the inspiration for artificial neural networks (image: Wikipedia).

Artificial neural networks, as impressive as they are, remain a far cry from even remotely approaching the astonishing intricacies and profound complexities of the human brain. Nonetheless, they have demonstrated significant prowess in addressing problems that are challenging for conventional computers but appear intuitive to human cognition. Some examples are image recognition and predictive analytics based on historical data.

Now that we’ve explored the foundational principles of how biological neurons function and their inspiration for artificial neural networks, let’s journey through the evolution of neural network frameworks that have shaped the landscape of artificial intelligence.

Feed forward neural networks, often referred to as a multilayer perceptron, are a fundamental type of neural networks, whose operation is deeply rooted in the principles of information flow, interconnected layers, and parameter optimization.

At their core, FFNNs orchestrate a unidirectional journey of information. It all begins with the input layer containing n neurons, where data is initially ingested. This layer serves as the entry point for the network, acting as a receptor for the input features that need to be processed. From there, the data embarks on a transformative voyage through the network’s hidden layers.

One important aspect of FFNNs is their connected structure, which means that each neuron in a layer is intricately connected to every neuron in that layer. This interconnectedness allows the network to perform computations and capture relationships within the data. It’s like a communication network where every node plays a role in processing information.

As the data passes through the hidden layers, it undergoes a series of calculations. Each neuron in a hidden layer receives inputs from all neurons in the previous layer, applies a weighted sum to these inputs, adds a bias term, and then passes the result through an activation function (commonly ReLU, Sigmoid, or tanH). These mathematical operations enable the network to extract relevant patterns from the input, and capture complex, nonlinear relationships within data. This is where FFNNs truly excel compared to more shallow ML models.

Architecture of fully-connected feed-forward neural networks (image by author).

However, that’s not where it ends. The real power of FFNNs lies in their ability to adapt. During training the network adjusts its weights to minimize the difference between its predictions and the actual target values. This iterative process, often based on optimization algorithms like gradient descent, is called backpropagation. Backpropagation empowers FFNNs to actually learn from data and improve their accuracy in making predictions or classifications.

Example KNIME workflow of FFNN used for the binary classification of certification exams (pass vs fail). In the upper branch, we can see the network architecture, which is made of an input layer, a fully connected hidden layer with a tanH activation function, and an output layer that uses a Sigmoid activation function (image by author).

While powerful and versatile, FFNNs display some relevant limitations. For example, they fail to capture sequentiality and temporal/syntactic dependencies in the data –two crucial aspects for tasks in language processing and time series analysis. The need to overcome these limitations prompted the evolution of a new type of neural network architecture. This transition paved the way for Recurrent Neural Networks (RNNs), which introduced the concept of feedback loops to better handle sequential data.

At their core, RNNs share some similarities with FFNNs. They too are composed of layers of interconnected nodes, processing data to make predictions or classifications. However, their key differentiator lies in their ability to handle sequential data and capture temporal dependencies.

In a FFNN, information flows in a single, unidirectional path from the input layer to the output layer. This is suitable for tasks where the order of data doesn’t matter much. However, when dealing with sequences like time series data, language, or speech, maintaining context and understanding the order of data is crucial. This is where RNNs shine.

RNNs introduce the concept of feedback loops. These act as a sort of “memory” and allow the network to maintain a hidden state that captures information about previous inputs and to influence the current input and output. While traditional neural networks assume that inputs and outputs are independent of each other, the output of recurrent neural networks depend on the prior elements within the sequence. This recurrent connection mechanism makes RNNs particularly fit to handle sequences by “remembering” past information.

Another distinguishing characteristic of recurrent networks is that they share the same weight parameter within each layer of the network, and those weights are adjusted leveraging the backpropagation through time (BPTT) algorithm, which is slightly different from traditional backpropagation as it is specific to sequence data.

Unrolled representation of RNNs, where each input is enriched with context information coming from previous inputs. The color represents the propagation of context information (image by author).

However, traditional RNNs have their limitations. While in theory they should be able to capture long-range dependencies, in reality they struggle to do so effectively, and can even suffer from the vanishing gradient problem, which hinders their ability to learn and remember information over many time steps.

This is where Long Short-Term Memory (LSTM) units come into play. They are specifically designed to handle these issues by incorporating three gates into their structure: the Forget gate, Input gate, and Output gate.

- Forget gate: This gate decides which information from the time step should be discarded or forgotten. By examining the cell state and the current input, it determines which information is irrelevant for making predictions in the present.

- Input gate: This gate is responsible for incorporating information into the cell state. It takes into account both the input and the previous cell state to decide what new information should be added to enhance its state.

- Output gate: This gate concludes what output will be generated by the LSTM unit. It considers both the current input and the updated cell state to produce an output that can be utilized for predictions or passed on to time steps.

Visual representation of Long-Short Term Memory units (image by Christopher Olah).

Example KNIME workflow of RNNs with LSTM units used for a multi-class sentiment prediction (positive, negative, neutral). The upper branch defines the network architecture using an input layer to handle strings of different lengths, an embedding layer, an LSTM layer with several units, and a fully connected output layer with a Softmax activation function to return predictions.

In summary, RNNs, and especially LSTM units, are tailored for sequential data, allowing them to maintain memory and capture temporal dependencies, which is a critical capability for tasks like natural language processing, speech recognition, and time series prediction.

As we shift from RNNs capturing sequential dependencies, the evolution continues with Convolutional Neural Networks (CNNs). Unlike RNNs, CNNs excel at spatial feature extraction from structured grid-like data, making them ideal for image and pattern recognition tasks. This transition reflects the diverse applications of neural networks across different data types and structures.

CNNs are a special breed of neural networks, particularly well-suited for processing image data, such as 2D images or even 3D video data. Their architecture relies on a multilayered feed-forward neural network with at least one convolutional layer.

What makes CNNs stand out is their network connectivity and approach to feature extraction, which allows them to automatically identify relevant patterns in the data. Unlike traditional FFNNs, which connect every neuron in one layer to every neuron in the next, CNNs employ a sliding window known as a kernel or filter. This sliding window scans across the input data and is especially powerful for tasks where spatial relationships matter, like identifying objects in images or tracking motion in videos. As the kernel is moved across the image, a convolution operation is performed between the kernel and the pixel values (from a strictly mathematical standpoint, this operation is a cross correlation), and a nonlinear activation function, usually ReLU, is applied. This produces a high value if the feature is in the image patch and a small value if it is not.
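Here is a minimal sketch of that sliding-window computation: a "valid" 2D cross-correlation with stride 1, followed by ReLU. The 5x5 image and the vertical-edge kernel are hand-picked for illustration; in a real CNN the kernel values are learned during training.

```python
import numpy as np

def conv2d_relu(image, kernel):
    """Valid 2D cross-correlation (what CNN layers compute), stride 1, then ReLU."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for r in range(out_h):
        for c in range(out_w):
            patch = image[r:r + kh, c:c + kw]
            out[r, c] = np.sum(patch * kernel)   # no kernel flip: cross-correlation
    return np.maximum(out, 0.0)                  # ReLU

# A 5x5 image: dark left part, bright right part (a vertical edge)
image = np.array([[0, 0, 1, 1, 1]] * 5, dtype=float)
# Hand-picked vertical-edge detector (illustrative, not a learned kernel)
kernel = np.array([[-1, 0, 1]] * 3, dtype=float)
feature_map = conv2d_relu(image, kernel)
print(feature_map)
# [[3. 3. 0.]
#  [3. 3. 0.]
#  [3. 3. 0.]]
```

The feature map is high where the window straddles the dark-to-bright edge and zero over the uniform bright region, matching the behavior described above.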

Together with the kernel, hyperparameters such as stride (i.e., the number of pixels by which the kernel slides at each step) and dilation rate (i.e., the spacing between kernel cells) can be tuned so that the network focuses on specific features, recognizing patterns and details in local regions without considering the entire input at once.

Convolution operation with stride length = 2 (GIF by Sumit Saha).
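Stride and dilation change both which pixels each window covers and how large the resulting feature map is. The sketch below extends a plain cross-correlation with both hyperparameters (no padding, no activation) and computes the output size along one axis; the size formula should match the output-shape arithmetic documented for frameworks such as PyTorch's `Conv2d`, but the helper names here are hypothetical.

```python
import numpy as np

def conv_output_size(n, k, stride=1, dilation=1, padding=0):
    """Output length along one axis for a kernel of size k."""
    effective_k = dilation * (k - 1) + 1          # span covered by a dilated kernel
    return (n + 2 * padding - effective_k) // stride + 1

def conv2d(image, kernel, stride=1, dilation=1):
    """Valid 2D cross-correlation with configurable stride and dilation (no padding)."""
    kh, kw = kernel.shape
    out_h = conv_output_size(image.shape[0], kh, stride, dilation)
    out_w = conv_output_size(image.shape[1], kw, stride, dilation)
    out = np.zeros((out_h, out_w))
    for r in range(out_h):
        for c in range(out_w):
            r0, c0 = r * stride, c * stride       # stride: jump between windows
            patch = image[r0:r0 + dilation * (kh - 1) + 1:dilation,  # dilation: skip pixels
                          c0:c0 + dilation * (kw - 1) + 1:dilation]
            out[r, c] = np.sum(patch * kernel)
    return out

image = np.arange(49, dtype=float).reshape(7, 7)
kernel = np.ones((3, 3))
print(conv2d(image, kernel, stride=2).shape)          # (3, 3)
print(conv2d(image, kernel, dilation=2).shape)        # (3, 3)
print(conv_output_size(224, 7, stride=2, padding=3))  # 112
```

A stride of 2 roughly halves the feature map, while a dilation of 2 lets a 3x3 kernel cover a 5x5 area without adding parameters.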

Some kernels, typically in the early layers, specialize in detecting edges or corners, while others, in deeper layers, respond to increasingly complex patterns, up to whole objects like cats, dogs, or street signs within an image. By stacking several convolutional and pooling layers, CNNs build a hierarchical representation of the input, gradually abstracting features from low-level to high-level, loosely analogous to how our brains process visual information.
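Pooling layers downsample the feature maps between convolutions. As a minimal sketch, max pooling keeps only the strongest response in each window; the 2x2 window with stride 2 used here is a common choice and an assumption, not a fixed rule.

```python
import numpy as np

def max_pool2d(feature_map, size=2, stride=2):
    """Keep the maximum value of each size x size window."""
    h = (feature_map.shape[0] - size) // stride + 1
    w = (feature_map.shape[1] - size) // stride + 1
    out = np.empty((h, w), dtype=feature_map.dtype)
    for r in range(h):
        for c in range(w):
            window = feature_map[r * stride:r * stride + size,
                                 c * stride:c * stride + size]
            out[r, c] = window.max()
    return out

fm = np.array([[1, 3, 2, 0],
               [4, 2, 1, 5],
               [0, 1, 7, 2],
               [3, 2, 1, 6]])
print(max_pool2d(fm))   # values: [[4, 5], [3, 7]]
```

Halving the spatial resolution this way makes later layers see a larger part of the original image per window, which is one reason stacked layers can capture progressively higher-level features.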

Example KNIME workflow of CNN for binary image classification (cats vs dogs). The upper branch defines the network architecture using a series of convolutional layers and max pooling layers for automatic feature extraction from images. A flatten layer is then used to prepare the extracted features as a one-dimensional input for the FFNN to perform a binary classification.

While CNNs excel at feature extraction and have revolutionized computer vision tasks, they act as passive observers: they are not designed to generate new data or content. This is not an inherent limitation of the network per se, but even a powerful engine is useless without fuel, and real, meaningful image and video data tend to be hard and expensive to collect and are often subject to copyright and data privacy restrictions. This constraint led to the development of a novel paradigm that builds on CNNs but marks a leap from image classification to creative synthesis: Generative Adversarial Networks (GANs).

GANs are a particular family of neural networks whose primary (though not only) purpose is to produce synthetic data that closely mimics a given dataset of real data. Unlike most neural networks, GANs have an ingenious architectural design consisting of two core models:

- Generator model: The first player in this neural network duet is the generator model. This component is tasked with a fascinating mission: given random noise or input vectors, it strives to create artificial samples that resemble real samples as closely as possible. Imagine it as an art forger, attempting to craft paintings that are indistinguishable from masterpieces.

- Discriminator model: Playing the adversary role is the discriminator model. Its job is to differentiate between the generated samples produced by the generator and the authentic samples from the original dataset. Think of it as an art connoisseur, trying to spot the forgeries among the genuine artworks.

Now, here’s where the magic happens: GANs engage in a continuous, adversarial dance. The generator aims to improve its artistry, continually fine-tuning its creations to become more convincing. Meanwhile, the discriminator becomes a sharper detective, honing its ability to tell the real from the fake.

GAN architecture (image by author).

As training progresses, this dynamic interplay between the generator and discriminator leads to a fascinating outcome. The generator strives to generate samples that are so realistic that even the discriminator can’t tell them apart from the genuine ones. This competition drives both components to refine their abilities continuously.
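This tug-of-war is usually expressed through two loss functions. The sketch below computes them from the discriminator's output probabilities, following the minimax objective of the original GAN formulation (Goodfellow et al., 2014) with the commonly used "non-saturating" generator loss; the networks themselves and their optimizers are left out, and the probability values in the usage lines are made up for illustration.

```python
import numpy as np

def gan_losses(d_real, d_fake, eps=1e-12):
    """Standard GAN losses from discriminator probabilities.

    d_real: D(x) for a batch of real samples, values in (0, 1)
    d_fake: D(G(z)) for a batch of generated samples, values in (0, 1)
    """
    d_real = np.clip(d_real, eps, 1 - eps)     # avoid log(0)
    d_fake = np.clip(d_fake, eps, 1 - eps)
    # Discriminator maximizes log D(x) + log(1 - D(G(z))), so it minimizes the negative
    d_loss = -np.mean(np.log(d_real)) - np.mean(np.log(1 - d_fake))
    # Generator (non-saturating variant) maximizes log D(G(z))
    g_loss = -np.mean(np.log(d_fake))
    return d_loss, g_loss

# A confused discriminator (outputs 0.5 everywhere) vs. a confident one
d_confused, g_confused = gan_losses(np.full(4, 0.5), np.full(4, 0.5))
d_confident, g_confident = gan_losses(np.full(4, 0.99), np.full(4, 0.01))
print(d_confused, g_confused)
print(d_confident, g_confident)
```

When the discriminator easily spots fakes, its own loss is low and the generator's loss is high, pushing the generator to improve; when generated samples become indistinguishable, the discriminator is reduced to guessing (D = 0.5).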

The result? A generator that becomes astonishingly adept at producing data that appears authentic, be it images, music, or text. This capability has led to remarkable applications in various fields, including image synthesis, data augmentation, image-to-image translation, and image editing.

Example KNIME workflow of GANs for the generation of synthetic images (i.e., animals, human faces and Simpson characters).

GANs pioneered realistic image and video content creation by pitting a generator against a discriminator. As the need for creativity and advanced operations extended from images to sequential data, models for more sophisticated natural language understanding, machine translation, and text generation were introduced. This paved the way for Transformers, a remarkable deep neural network architecture that not only outperformed previous architectures by effectively capturing long-range language dependencies and semantic context, but also became the undisputed foundation of the most recent AI-driven applications.

Introduced in 2017, Transformers boast a unique feature that lets them replace traditional recurrent layers: a self-attention mechanism that models intricate relationships between all words in a text, regardless of their position. This makes Transformers excellent at tackling the challenge of long-range dependencies in natural language. Transformer architectures consist of two main building blocks:

- Encoder. Here the input sequence is embedded into vectors, which are then passed through the self-attention mechanism. The latter computes attention scores for each token, determining its importance in relation to the others. These scores are used to compute weighted sums of the token representations, which are fed into an FFNN to generate context-aware representations for each token. Multiple encoder layers repeat this process, enhancing the model’s ability to capture hierarchical and contextual information.

- Decoder. This block is responsible for generating output sequences and follows a process similar to that of the encoder. At each step, it attends both to the encoder’s output and to its own previously generated tokens, so that generation takes into account both the input context and the output produced so far.

Transformer model architecture (image by Vaswani et al., 2017).
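The core of both blocks is scaled dot-product attention, softmax(QK^T / sqrt(d_k)) V, as defined by Vaswani et al. (2017). The sketch below implements a single attention head in NumPy; the random projection matrices stand in for learned weights, and multi-head attention, positional encodings, and layer normalization are omitted for brevity.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)   # subtract the max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(X, W_q, W_k, W_v):
    """Single-head scaled dot-product self-attention over token vectors X (n, d_model)."""
    Q, K, V = X @ W_q, X @ W_k, X @ W_v       # queries, keys, values
    d_k = K.shape[-1]
    scores = Q @ K.T / np.sqrt(d_k)           # (n, n): how strongly token i attends to token j
    weights = softmax(scores, axis=-1)        # each row is a probability distribution
    return weights @ V, weights               # weighted sums of the value vectors

rng = np.random.default_rng(0)
n_tokens, d_model, d_k = 4, 8, 4
X = rng.normal(size=(n_tokens, d_model))      # stand-in for embedded tokens
W_q, W_k, W_v = (rng.normal(size=(d_model, d_k)) for _ in range(3))
out, weights = self_attention(X, W_q, W_k, W_v)
print(weights.round(2))
```

Every token is compared with every other token in a single matrix product, which is what lets the model relate distant words directly instead of passing information step by step as an RNN does.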

Consider this sentence: “I arrived at the bank after crossing the river”. The word “bank” can have two meanings: either a financial institution or the edge of a river. Here’s where Transformers shine. They can quickly focus on the word “river” to disambiguate “bank” by comparing “bank” to every other word in the sentence and assigning attention scores. These scores determine the influence of each word on the updated representation of “bank”. In this case, “river” gets a higher score, effectively clarifying the intended meaning.

To work that well, Transformers rely on millions of trainable parameters and require large text corpora and sophisticated training strategies. One notable training approach employed with Transformers is masked language modeling (MLM). During training, a random subset of tokens within the input sequence is masked, and the model’s objective is to predict these masked tokens. This strategy encourages the model to grasp contextual relationships between words, because it must rely on the surrounding words to make accurate predictions. This approach, popularized by the BERT model, has been instrumental in achieving state-of-the-art results in various NLP tasks.
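The data side of MLM is simple to sketch: pick a random subset of positions, hide them, and keep the original tokens as training labels. This is a simplified version; BERT selects about 15% of tokens and replaces 80% of those with [MASK], 10% with a random token, and leaves 10% unchanged, whereas the hypothetical `mask_tokens` helper below always uses [MASK] and splits on whitespace instead of using a real tokenizer.

```python
import random

MASK = "[MASK]"

def mask_tokens(tokens, mask_prob=0.15, seed=None):
    """Simplified MLM masking: replace a random subset of tokens with [MASK].

    Returns the corrupted sequence and the labels the model must predict
    (None where no prediction is required).
    """
    rng = random.Random(seed)
    n_to_mask = max(1, round(mask_prob * len(tokens)))
    positions = set(rng.sample(range(len(tokens)), n_to_mask))
    masked = [MASK if i in positions else tok for i, tok in enumerate(tokens)]
    labels = [tok if i in positions else None for i, tok in enumerate(tokens)]
    return masked, labels

tokens = "I arrived at the bank after crossing the river".split()
masked, labels = mask_tokens(tokens, seed=0)
print(masked)
print(labels)
```

The model only receives `masked` and is trained to reproduce the non-None entries of `labels`, which forces it to use context on both sides of each gap.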

An alternative to MLM for Transformers is autoregressive modeling. In this method, the model is trained to predict the next word given all the preceding words, and at inference time it generates text one word at a time, conditioning on the words it has generated so far. Autoregressive models like GPT (Generative Pre-trained Transformer) follow this methodology and excel in tasks where the goal is to predict the next most suitable word from left to right, such as free text generation, question answering, and text completion.
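Two ingredients make autoregressive training work: shifting the sequence so each position predicts the following token, and a causal mask so no position can attend to tokens that come after it. The sketch below shows both; the token IDs are hypothetical, and the all-zero score matrix is chosen only to make the effect of the mask easy to read.

```python
import numpy as np

def next_token_pairs(token_ids):
    """Shift the sequence by one: position t is trained to predict token t + 1."""
    return token_ids[:-1], token_ids[1:]

def causal_mask(n):
    """mask[i, j] is True when position i may attend to position j, i.e. j <= i."""
    return np.tril(np.ones((n, n), dtype=bool))

def masked_softmax(scores, mask):
    scores = np.where(mask, scores, -np.inf)            # hide future positions
    scores = scores - scores.max(axis=-1, keepdims=True)
    e = np.exp(scores)
    return e / e.sum(axis=-1, keepdims=True)

token_ids = [5, 12, 7, 3]         # hypothetical token IDs for a 4-token sentence
inputs, targets = next_token_pairs(token_ids)
print(inputs, targets)            # [5, 12, 7] [12, 7, 3]
weights = masked_softmax(np.zeros((3, 3)), causal_mask(3))
print(weights.round(2))
```

With equal raw scores, the first position can only attend to itself, the second splits its attention between two tokens, and so on: information flows strictly from left to right, in contrast to BERT's bidirectional context.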

Furthermore, Transformers excel in parallelization: because self-attention processes all tokens of a sequence at once rather than one time step after another, they can be trained much faster than sequential approaches like RNNs or LSTM units, which helps offset their need for extensive text resources. This efficient computation reduces training time and has led to groundbreaking applications in natural language processing, machine translation, and more.

A pivotal Transformer model developed by Google in 2018 that made a substantial impact is BERT (Bidirectional Encoder Representations from Transformers). BERT relied on MLM training and introduced the concept of bidirectional context, meaning it considers both the left and right context of a word when predicting the masked token. This bidirectional approach significantly enhanced the model’s understanding of word meanings and contextual nuances, establishing new benchmarks for natural language understanding and a wide array of downstream NLP tasks.

Example KNIME workflow of BERT for multi-class sentiment prediction (positive, negative, neutral). Minimal preprocessing is performed and the pretrained BERT model with fine-tuning is leveraged.

Building on the powerful self-attention mechanisms introduced by Transformers, the growing demand for versatile applications capable of performing complex natural language tasks, such as document summarization, text editing, or code generation, led to the development of large language models. These models employ deep neural networks with billions of parameters to excel in such tasks and meet the evolving requirements of the data analytics industry.

Large language models (LLMs) are a revolutionary category of multi-purpose deep neural networks, increasingly multimodal (accepting image, audio, and text inputs), that have garnered significant attention in recent years. The adjective large stems from their vast size, as they encompass billions of trainable parameters. Some of the most well-known examples include OpenAI’s ChatGPT, Google’s Bard, and Meta’s LLaMA.

What sets LLMs apart is their unparalleled ability and flexibility to process and generate human-like text. They excel in natural language understanding and generation tasks, ranging from text completion and translation to question answering and content summarization. The key to their success lies in their extensive training on massive text corpora, allowing them to capture a rich understanding of language nuances, context, and semantics.

These models employ a deep neural architecture with multiple layers of self-attention mechanisms, enabling them to weigh the importance of different words and phrases in a given context. This dynamic adaptability makes them exceptionally proficient in processing inputs of various types, comprehending complex language structures, and generating outputs based on human-defined prompts.
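Generating output from a prompt is an autoregressive loop: the model scores possible next tokens, one is chosen and appended, and the process repeats. The sketch below uses greedy decoding (always pick the most likely token), which is only one of several decoding strategies; the bigram lookup table is a hypothetical stand-in for an LLM, which would instead compute these probabilities with a full Transformer forward pass over the whole context.

```python
def greedy_generate(prompt, next_token_probs, max_new_tokens=10, eos="<eos>"):
    """Append the most likely next token until EOS or the length limit is reached."""
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        probs = next_token_probs(tokens)        # maps candidate token -> probability
        next_tok = max(probs, key=probs.get)    # greedy choice
        if next_tok == eos:
            break
        tokens.append(next_tok)
    return tokens

# Hypothetical toy "model": next-token probabilities depend only on the last token.
TOY_BIGRAMS = {
    "crossed": {"the": 1.0},
    "the": {"river": 0.6, "bank": 0.4},
    "river": {"bank": 0.7, "<eos>": 0.3},
    "bank": {"<eos>": 0.9, "the": 0.1},
}

def toy_model(tokens):
    return TOY_BIGRAMS.get(tokens[-1], {"<eos>": 1.0})

result = greedy_generate(["I", "crossed"], toy_model)
print(result)   # ['I', 'crossed', 'the', 'river', 'bank']
```

Sampling from `probs` instead of taking the maximum (optionally with a temperature) produces more varied text, which is why many chat applications expose such settings.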

Example KNIME workflow of creating an ai assistant that relies on OpenAI’s ChatGPT and a vector store with custom documents to answer domain-specific questions.

LLMs have paved the way for a multitude of applications across various industries, from healthcare and finance to entertainment and customer service. They’ve even sparked new frontiers in creative writing and storytelling.

However, their enormous size, resource-intensive training processes, and potential copyright infringements in generated content have also raised concerns about ethical usage, environmental impact, and accessibility. Lastly, although they keep improving, LLMs can still exhibit serious flaws, such as “hallucinating” incorrect facts, reproducing biases, being gullible, or being persuaded into generating toxic content.

The evolution of neural networks, from their humble beginnings to the rise of large language models, raises a profound philosophical question: Will this journey ever come to an end?

The trajectory of technology has always been marked by relentless advancement. Each milestone only serves as a stepping stone to the next innovation. As we strive to create machines that can replicate human cognition and understanding, it’s tempting to ponder whether there’s an ultimate destination, a point where we say, “This is it; we’ve reached the pinnacle.”

However, the essence of human curiosity and the boundless complexities of the natural world suggest otherwise. Just as our understanding of the universe continually deepens, the quest to develop more intelligent, capable, and ethical neural networks may be an endless journey.

Anil is a Data Science Evangelist at KNIME.
