Image by author

Pandas is the most used Python library for data analysis and manipulation. But the data you read from the source often requires a series of data cleaning steps before you can analyze it to gain valuable insights, answer business questions, or build machine learning models.

This guide breaks down the process of cleaning data with pandas into 7 practical steps. We will create a sample data set and follow the data cleaning steps.

Let us begin!

How to create a sample data frame

Before we start the actual data cleaning steps, let's create a pandas data frame with employee records. We will use Faker for synthetic data generation. So install it first:

If you want, you can follow the same example. You can also use a data set of your choice. Here is the code to generate 1000 records:

import pandas as pd

from faker import Faker

import random

# Initialize Faker to generate synthetic data

fake = Faker()

# Set seed for reproducibility

Faker.seed(42)

# Generate synthetic data

data = ()

for _ in range(1000):

data.append({

'Name': fake.name(),

'Age': random.randint(18, 70),

'Email': fake.email(),

'Phone': fake.phone_number(),

'Address': fake.address(),

'Salary': random.randint(20000, 150000),

'Join_Date': fake.date_this_decade(),

'Employment_Status': random.choice(('Full-Time', 'Part-Time', 'Contract')),

'Department': random.choice(('IT', 'Engineering','Finance', 'HR', 'Marketing'))

})

Let's modify this data frame a little to introduce missing values, duplicate records, outliers, and more:

# Let's tweak the records a bit!

# Introduce missing values

for i in random.sample(range(len(data)), 50):

data(i)('Email') = None

# Introduce duplicate records

data.extend(random.sample(data, 100))

# Introduce outliers

for i in random.sample(range(len(data)), 20):

data(i)('Salary') = random.randint(200000, 500000)Now let's create a data frame with these records:

# Create dataframe

df = pd.DataFrame(data)Note that we set the seed to Faker and not the random module. So there will be some randomness in the records you generate.

Step 1: Understand the data

Step 0 is always understand the business question/problem you are trying to solve.. Once you know that, you can start working with the data you've read into your pandas data frame.

But before you can do anything meaningful with the data set, it's important to first get a high-level overview of the data set. This includes getting basic information about the different fields and the total number of records, inspecting the data frame header, and similar things.

Here we execute the info() method in data frame:

Output >>>

RangeIndex: 1100 entries, 0 to 1099

Data columns (total 9 columns):

# Column Non-Null Count Dtype

--- ------ -------------- -----

0 Name 1100 non-null object

1 Age 1100 non-null int64

2 Email 1047 non-null object

3 Phone 1100 non-null object

4 Address 1100 non-null object

5 Salary 1100 non-null int64

6 Join_Date 1100 non-null object

7 Employment_Status 1100 non-null object

8 Department 1100 non-null object

dtypes: int64(2), object(7)

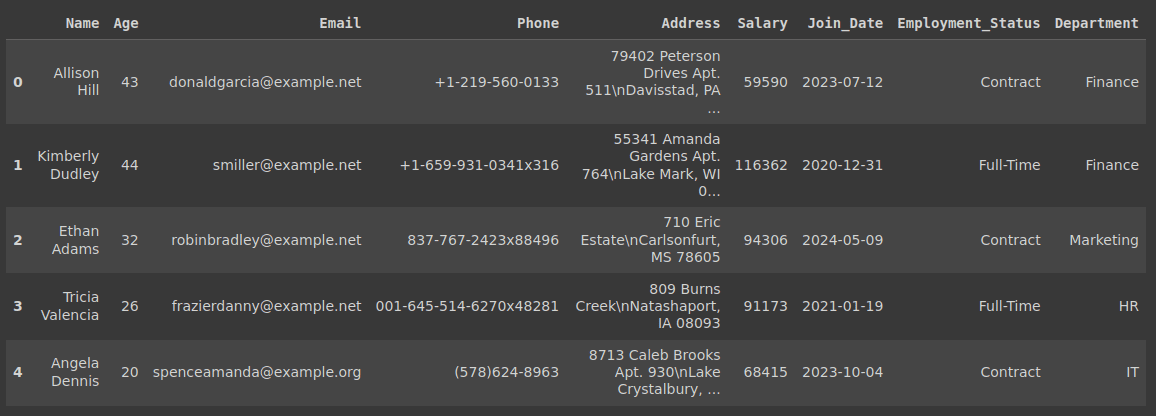

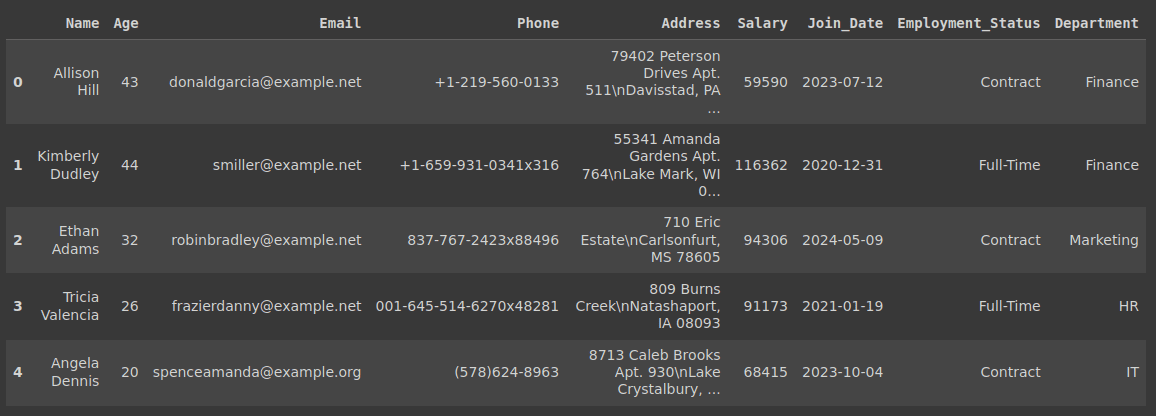

memory usage: 77.5+ KBAnd inspect the head of the data frame:

Output of df.head()

Step 2: Handling Duplicates

Duplicate records are a common problem that distorts analysis results. Therefore, we need to identify and remove all duplicate records to work only with the unique data records.

This is how we find all the duplicates in the data frame and then put them in place:

# Check for duplicate rows

duplicates = df.duplicated().sum()

print("Number of duplicate rows:", duplicates)

# Removing duplicate rows

df.drop_duplicates(inplace=True)Output >>>

Number of duplicate rows: 100Step 3: Handling missing data

Missing data is a common data quality issue in many data science projects. If you take a quick look at the result of the info() method from the previous step, you should see that the number of non-null objects is not identical for all fields and there are missing values in the email column. We'll get the exact count anyway.

To get the number of missing values in each column, you can run:

# Check for missing values

missing_values = df.isna().sum()

print("Missing Values:")

print(missing_values)Output >>>

Missing Values:

Name 0

Age 0

Email 50

Phone 0

Address 0

Salary 0

Join_Date 0

Employment_Status 0

Department 0

dtype: int64If values are missing in one or more numerical columns, we can apply appropriate imputation techniques. But because the 'Email' field is missing, let's set the missing emails to a placeholder email like this:

# Handling missing values by filling with a placeholder

df('Email').fillna('unknown@example.com', inplace=True)Step 4: Transform data

When you work on the data set, there may be one or more fields that do not have the expected data type. In our sample data frame, the 'Join_Date' field needs to be converted to a valid datetime object:

# Convert 'Join_Date' to datetime

df('Join_Date') = pd.to_datetime(df('Join_Date'))

print("Join_Date after conversion:")

print(df('Join_Date').head())Output >>>

Join_Date after conversion:

0 2023-07-12

1 2020-12-31

2 2024-05-09

3 2021-01-19

4 2023-10-04

Name: Join_Date, dtype: datetime64(ns)Because we have the joining date, it's actually more useful to have a “Years_Employed” column as shown:

# Creating a new feature 'Years_Employed' based on 'Join_Date'

df('Years_Employed') = pd.Timestamp.now().year - df('Join_Date').dt.year

print("New feature 'Years_Employed':")

print(df(('Join_Date', 'Years_Employed')).head())Output >>>

New feature 'Years_Employed':

Join_Date Years_Employed

0 2023-07-12 1

1 2020-12-31 4

2 2024-05-09 0

3 2021-01-19 3

4 2023-10-04 1Step 5: Clean text data

It is quite common to encounter inconsistently formatted string fields or similar problems. Cleaning text can be as simple as applying a case conversion or as difficult as writing a complex regular expression to get the string into the required format.

In the example data frame we have, we see that the 'Address' column contains many '\n' characters which make it difficult to read. So replace them with spaces like this:

# Clean address strings

df('Address') = df('Address').str.replace('\n', ' ', regex=False)

print("Address after text cleaning:")

print(df('Address').head())Output >>>

Address after text cleaning:

0 79402 Peterson Drives Apt. 511 Davisstad, PA 35172

1 55341 Amanda Gardens Apt. 764 Lake Mark, WI 07832

2 710 Eric Estate Carlsonfurt, MS 78605

3 809 Burns Creek Natashaport, IA 08093

4 8713 Caleb Brooks Apt. 930 Lake Crystalbury, CA...

Name: Address, dtype: objectStep 6: Handling Outliers

If you scroll back up, you'll see that we set some of the values in the “Salary” column to be extremely high. These outliers must also be identified and handled appropriately so that they do not bias the analysis.

You will often want to keep in mind that converts a data point to an outlier (if it is incorrect data entry or if it is actually valid values and not outliers). You can then choose to handle them: remove records with outliers or get the subset of rows with outliers and analyze them separately.

Let's use the z score and find those salary values that are more than three standard deviations from the mean:

# Detecting outliers using z-score

z_scores = (df('Salary') - df('Salary').mean()) / df('Salary').std()

outliers = df(abs(z_scores) > 3)

print("Outliers based on Salary:")

print(outliers(('Name', 'Salary')).head())Output >>>

Outliers based on Salary:

Name Salary

16 Michael Powell 414854

131 Holly Jimenez 258727

240 Daniel Williams 371500

328 Walter Bishop 332554

352 Ashley Munoz 278539Step 7: Merge data

In most projects, the data you have can No is the data you will want to use for analysis. You need to find the most relevant fields to use and also merge data from other data frames to get more useful data that you can use for analysis.

As a quick exercise, create another related data frame and merge it with the existing data frame into a common column so the join makes sense. Merging in pandas works very similar to joins in SQL, so I suggest you try it as an exercise.

Ending

That's all for this tutorial! We created a sample data frame with records and worked through the various data cleaning steps. Below is an overview of the steps: understand the data, handle duplicates, missing values, transform data, clean text data, handle outliers, and merge data.

If you want to learn everything about data management with pandas, check out 7 Steps to Master Data Management with Pandas and Python.

twitter.com/balawc27″ rel=”noopener”>Bala Priya C. is a developer and technical writer from India. He enjoys working at the intersection of mathematics, programming, data science, and content creation. His areas of interest and expertise include DevOps, data science, and natural language processing. He likes to read, write, code and drink coffee! Currently, he is working to learn and share his knowledge with the developer community by creating tutorials, how-to guides, opinion pieces, and more. Bala also creates engaging resource descriptions and coding tutorials.

<script async src="//platform.twitter.com/widgets.js” charset=”utf-8″>

NEWSLETTER

NEWSLETTER