Every company has tons of data, but much of it is hidden in PDF files or in employees’ email attachments.

Successful companies make data-driven decisions, and that is possible because of data extraction and analysis tools.

This article examines the top 10 data extraction tools and their pros and cons. By the end, you will be equipped to select the best data extraction software according to your requirements.

Here’s a snapshot of our recommendations:

- Best for Data extraction From Documents – Nanonets

- Best for Web scraping for e-commerce – Import.io

- Best for Table Extraction – Nanonets

- Best for Data Unification – Hevo

Are you looking for data extraction software? Look no further! Try Nanonets for free and automate data extraction in 15 minutes.

Data extraction is the process of retrieving data from a source and converting it into a structured format for further analysis. By structured, we mean that the data is arranged in columns and rows so it can be easily imported into another program or database.

This can involve extracting specific pieces of data, such as contact information or financial data, or extracting data from a larger dataset and organizing it in a way that makes it easier to analyze.

Data extraction can refer to scraping information from web pages or emails, but it also covers other file types such as spreadsheets (Excel), documents (Word), XML, and PDFs. The goal of data extraction is to get the raw data out so you can do something with it: for example, run analytics on your CRM contacts list or create mailing lists from customer emails and addresses.
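To make “structured format” concrete, here is a minimal Python sketch that turns a few lines of free-form contact text into CSV rows ready for a CRM or mailing list. The sample text, the assumed one-line layout, and the regular expression are all illustrative assumptions; real extraction tools handle far messier input than a single pattern can.

```python
import csv
import io
import re

# Hypothetical free-form input, e.g. contact details pasted from emails.
RAW_TEXT = """
Jane Doe, Acme Corp - jane.doe@acme.example - +1 555 0100
Ravi Kumar, Globex - ravi@globex.example - +44 20 7946 0000
Call me back tomorrow!
"""

# Assumed layout: "<name>, <company> - <email> - <phone>" on one line.
LINE_PATTERN = re.compile(
    r"^(?P<name>[^,]+),\s*(?P<company>.+?)\s+-\s+"
    r"(?P<email>[\w.+-]+@[\w-]+(?:\.[\w-]+)+)\s+-\s+"
    r"(?P<phone>\+?[\d ]+)$"
)
FIELDS = ["name", "company", "email", "phone"]


def extract_contacts(text: str) -> list[dict[str, str]]:
    """Turn each matching line of free text into a row (a dict of named fields)."""
    rows = []
    for line in text.strip().splitlines():
        match = LINE_PATTERN.match(line.strip())
        if match:  # lines that don't fit the assumed layout are skipped
            rows.append({key: value.strip() for key, value in match.groupdict().items()})
    return rows


def to_csv(rows: list[dict[str, str]]) -> str:
    """Serialize the rows as CSV text: one header row, then one line per contact."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=FIELDS)
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


if __name__ == "__main__":
    print(to_csv(extract_contacts(RAW_TEXT)))
```

The unstructured line “Call me back tomorrow!” is simply skipped, which is the basic trade-off of rule-based extraction: anything outside the expected pattern is lost unless you add more rules.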

Today, with the help of AI, data extraction has become much more accurate and intuitive. Using AI models trained on thousands of documents, data extraction tools can extract the required information with over 90% accuracy, even with zero-shot models, and they keep getting more accurate as more documents are processed.

Data extraction has important use cases across industries and can help streamline and automate many business processes. From invoice data extraction to healthcare document management, data extraction can be used across teams and businesses.

Now, let’s review the top data extraction systems in 2024!

Data extraction is a complex process that can be broken down into different steps.

The first step is to find the data you want to extract, often using an automated tool or another method of gathering data from sources such as websites or databases. Once you have found your target data, there are various ways of extracting it.

Given how complex the process is, here are our top picks for data extraction tools to fit your use cases!

#1. Nanonets

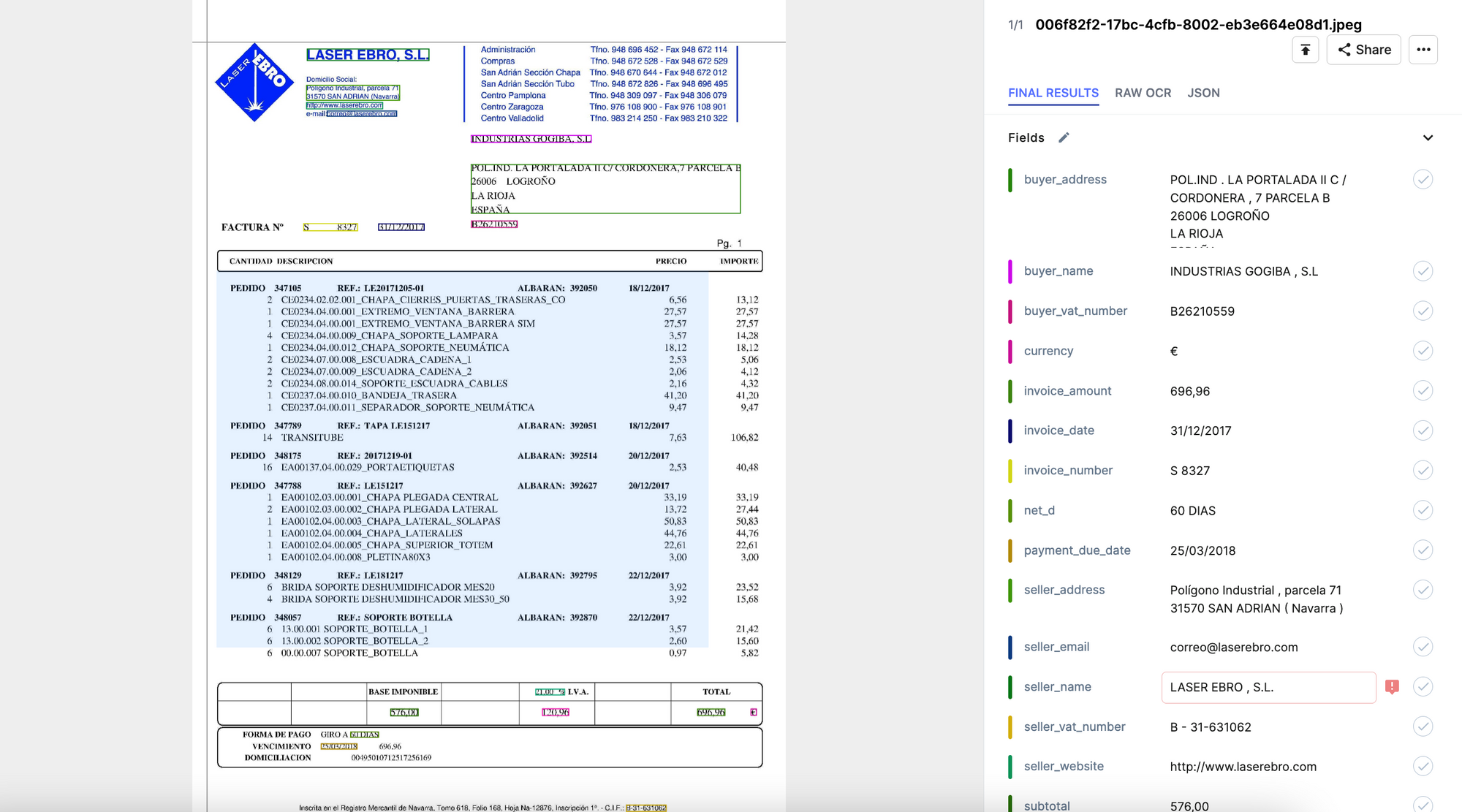

Nanonets is AI data extraction software for businesses looking to automate document processes and eliminate manual tasks with no-code workflow automation. Nanonets can extract data from PDFs, documents, images, emails, scanned documents, or unstructured datasets with more than 95% accuracy.

Nanonets’ intelligent document processing platform can reduce expenses by 50% and processing times by 90%.

Pros of using Nanonets

- Easy to use

- 97%+ Accurate

- Excellent support team

- Fast information recognition

- Ability to intake large volumes of documents

- Reasonable pricing

- 200+ languages supported

- 24×7 customer support

- Free Plans + Cost-effective Pricing Plans

- Personal training sessions

- In-built powerful OCR software

- Cloud and On-premise hosting

- White label options

More than 500 enterprises trust Nanonets to automate data extraction processes in real time.

Nanonets is a safe choice for enterprises of all sizes for automated data extraction.

Let us help you optimize your document data extraction processes. Book a free consultation call to see how you can save 80% in costs and 90% in time with Nanonets’ intelligent automation platform.

#2. Hevo

Hevo is a no-code data pipeline platform that extracts data from sources such as databases and SaaS applications and loads it into data warehouses, so your data ends up unified in one place.

The tool has an easy-to-use interface, so even if you’re unfamiliar with coding, you should be able to use it effectively.

Pricing: Forever free plans. Paid Plans start from $299/month.

Best for: Data unification.

Pros:

- Can manage a large number of pipelines

- Automatic detection of data sources

- Easy Integrations

Cons:

- Costlier pricing plans for more data sources

- Limitations for complex use cases (Source)

#3. Brightdata

Brightdata is a cloud-based data extraction tool that can extract data from documents, websites, and databases. It works with over 80 file formats, including PDFs and Microsoft Word documents.

The software supports multiple data extraction methods: it can pull information directly from a page’s source code or from specific sections of a page, parse tables on a page, and convert image files (such as JPEGs) to text.
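Bright Data’s internals aren’t described here, so as a generic illustration of what “parsing tables on a page” involves, the sketch below uses Python’s standard-library `html.parser` to turn table cells into rows. The sample page and the `TableParser` class are hypothetical, and the sketch deliberately ignores complications such as nested tables, `colspan`, and JavaScript-rendered content.

```python
from html.parser import HTMLParser
from typing import Optional


class TableParser(HTMLParser):
    """Collect the text of every <th>/<td> cell, grouped into one list per <tr>."""

    def __init__(self) -> None:
        super().__init__()
        self.rows: list[list[str]] = []
        self._row: Optional[list[str]] = None   # cells of the row being read
        self._cell: Optional[list[str]] = None  # text fragments of the current cell

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._cell = []

    def handle_data(self, data):
        if self._cell is not None:  # ignore whitespace and text outside cells
            self._cell.append(data)

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._cell is not None and self._row is not None:
            self._row.append("".join(self._cell).strip())
            self._cell = None
        elif tag == "tr" and self._row is not None:
            self.rows.append(self._row)
            self._row = None


# Hypothetical page source containing a simple product table.
PAGE = """
<html><body>
  <table>
    <tr><th>Product</th><th>Price</th></tr>
    <tr><td>Widget</td><td>$9.99</td></tr>
    <tr><td>Gadget</td><td>$24.50</td></tr>
  </table>
</body></html>
"""

parser = TableParser()
parser.feed(PAGE)
parser.close()
print(parser.rows)
# [['Product', 'Price'], ['Widget', '$9.99'], ['Gadget', '$24.50']]
```

The first row holds the header cells, so the result maps directly onto columns and rows in a spreadsheet or database table.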

Pricing: Forever free plans. Paid Plans start from $500/month.

Best for: Web Scraping

Pros:

- Smooth user interface

- Great uptime

- Huge proxy infrastructure

- Good customer support

Cons:

- High pricing

- Manual account activation

- Not ideal for beginners

- Slow email support

- Unblocker tool is costly

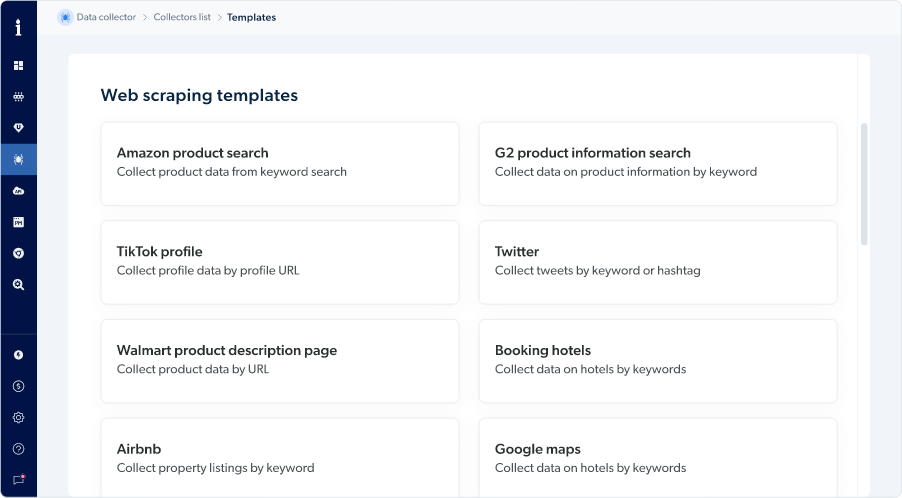

#4. Import.io

Import.io is a tool that can be used to extract data from websites and social media, as well as emails, documents, and more. The software has various features like email parsing that make it easy for users to get the data they need without writing code or using complicated tools.

Pricing: Available on request

Best for: Web Scraping

Pros:

- Precise and effective

- Scrapes a specific section of a website

- Simple to use

- No coding required

Cons:

- Workflow UI is confusing

- Costlier compared to other competitors

- Needs additional web scraping features

- Desktop app required

- Software crashes frequently

- Slow support

#5. Improvado

Improvado provides a wide range of tools for data extraction, analytics, cleaning, and transformation, as well as dashboard creation. Improvado’s revenue data platform lets organizations understand the ROI of their sales and marketing channels in real time.

Pricing: Available on request

Best for: Marketing Data Unification

Pros:

- Streamlines data from 300+ data sources

- Full-cycle support

- Thorough data collection

Cons:

- Data Transformation functionality can be improved

- Limited customizations

- Dashboard UI is confusing

- Complex procedures require help from the support team

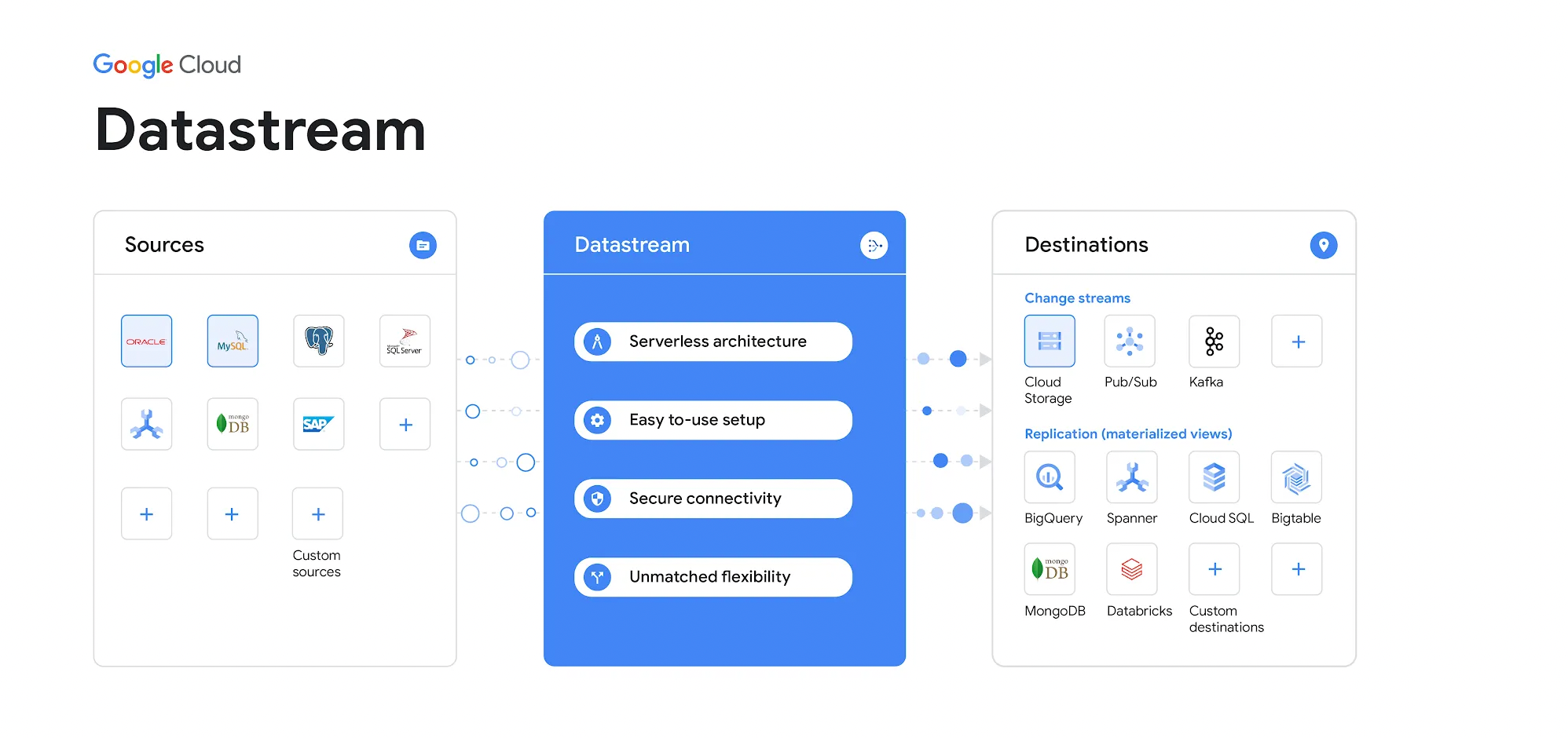

#6. DataStream

Datastream is a data warehouse and pipeline platform that helps companies ingest, process, and analyze their data. Datastream lets users extract data from multiple sources into multiple databases for real-time analysis. Users can also use Datastream’s API to integrate it with other applications such as sales and marketing tools, CRM systems, and ERP systems.

Pricing: Available on request

Best for: Data Connectors

Pros:

- Easy implementation

- Time tracking

- Super intuitive interface

- Easy role-based access

Cons:

- Expensive for some small business owners

- Lack of advanced features

- A little overwhelming to an inexperienced user

- Monthly fees are high

#7. Scraper API

Scraper API is a web scraping tool that allows you to easily extract data from websites on the internet with speed, accuracy, and efficiency. It’s also scalable and reliable, so you can work with large amounts of information without worrying about lag time in your workflow.

Scraper API’s intuitive interface makes it simple to extract data, even if you have no previous experience with such tools.

Pricing: Plans start from $49/month ($299/month for businesses)

Best for: Webpage Scraping

Pros:

- Large Proxy Pool

- Excellent Customization Options

- Easy to use

- Fully customizable

- Beginner Friendly

- Good Location Support

Cons:

- Limitations with smaller plans

- Fewer buttons to navigate

- Expensive for small businesses

- Dashboard widgets could be more interactive

- The help desk has very long wait times

#8. Tabula

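Tabula is a free, open-source tool for extracting tables from PDFs. Its extraction engine, tabula-java, is written in Java, and the tabula-py library wraps it for Python users. Tabula is easy to use and highly customizable.

For Python users, PyPDF2 is a related PDF library, although it extracts page text rather than detecting table structure.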

Pros:

- High Performance

- Ease of Use

Cons:

#9. Matillion

Matillion is a self-serve data extraction tool.

The platform’s user interface is easy to use, so you don’t need to be an IT professional or a proficient programmer. The platform is built to be flexible, so its functionality can grow as your needs change over time.

Pricing: $2/credit

Best for: Data Unification

Pros:

- Easy to use, intuitive UI

- Easy to monitor

- Data integration and transformation

- Easy to set up

Cons:

- Expensive

- Hard limit on the hardware

- No user community site

- Role-based access is absent

- No backup option

- Pricing is high

- Support is slower

#10. Levity AI

Levity AI is a data extraction tool that uses cloud-based machine learning and AI to extract data from unstructured data sources. It allows businesses to extract data from websites, social media, surveys, forms, and more. The tool has three modules: a web crawler module, an interactive form analysis module, and an email scraping module.

Pricing: $200/month onwards

Pros:

- Reporting on collections

- Simple bulk subscription management

Cons:

- Setup is quite complex

- High pricing

- Poor customer support

- Communication with support needs a lot of work

- The product catalog lacks vital features

- No mobile-optimized interface

Extract data from invoices, identity cards, or documents on autopilot with Nanonets’ workflows!

We’ve looked at ten different tools in this post. It’s time to pick our favorites.

- Best for Data extraction From Documents – Nanonets

- Best for Web scraping for e-commerce – Import.io

- Best for Table Extraction – Nanonets

- Best for Data Unification – Hevo

Best for Data extraction From Documents – Nanonets

The best data extraction tool is Nanonets. Nanonets has a free version that allows you to extract up to 500 pages per month for personal use only. Start your free trial now.

Nanonets extracts data with more than 95% accuracy, which keeps errors and inconsistencies in your extracted data to a minimum. The tool also has an easy-to-use interface and supports 200+ languages, so it suits people from different backgrounds and with varying levels of technical proficiency.

Best for Web scraping for e-commerce – Import.io

Import.io has an intuitive drag-and-drop interface that makes it easy to set up extraction jobs, even for non-technical users. You can also use its built-in templates to save time on specific projects (such as an e-commerce store).

The main downside is cost: pricing is available only on request, and Import.io is costlier than competing tools.

Best for Table Extraction – Nanonets

Nanonets is an excellent data extraction tool that can extract data from tables in various formats.

This software uses an algorithm to identify the fields in a table and then allows you to select them individually or all at once via the mouse or keyboard shortcut keys.

In addition, you can specify column headings, apply formatting, and insert formulas into your extracted results, then export them to CSV files for further analysis in Microsoft Excel, Google Sheets, or similar tools. Note that CSV files store plain values only, so formatting such as bold, italics, or underlining does not carry over.

Best for Data Unification – Hevo

Hevo is a data pipeline tool that unifies data from multiple sources, such as databases, SaaS applications, and spreadsheets, in a single destination. It’s cloud-based, so you don’t need to download or install anything on your computer.

On pricing, Hevo offers a forever-free plan, with paid plans starting at $299/month.

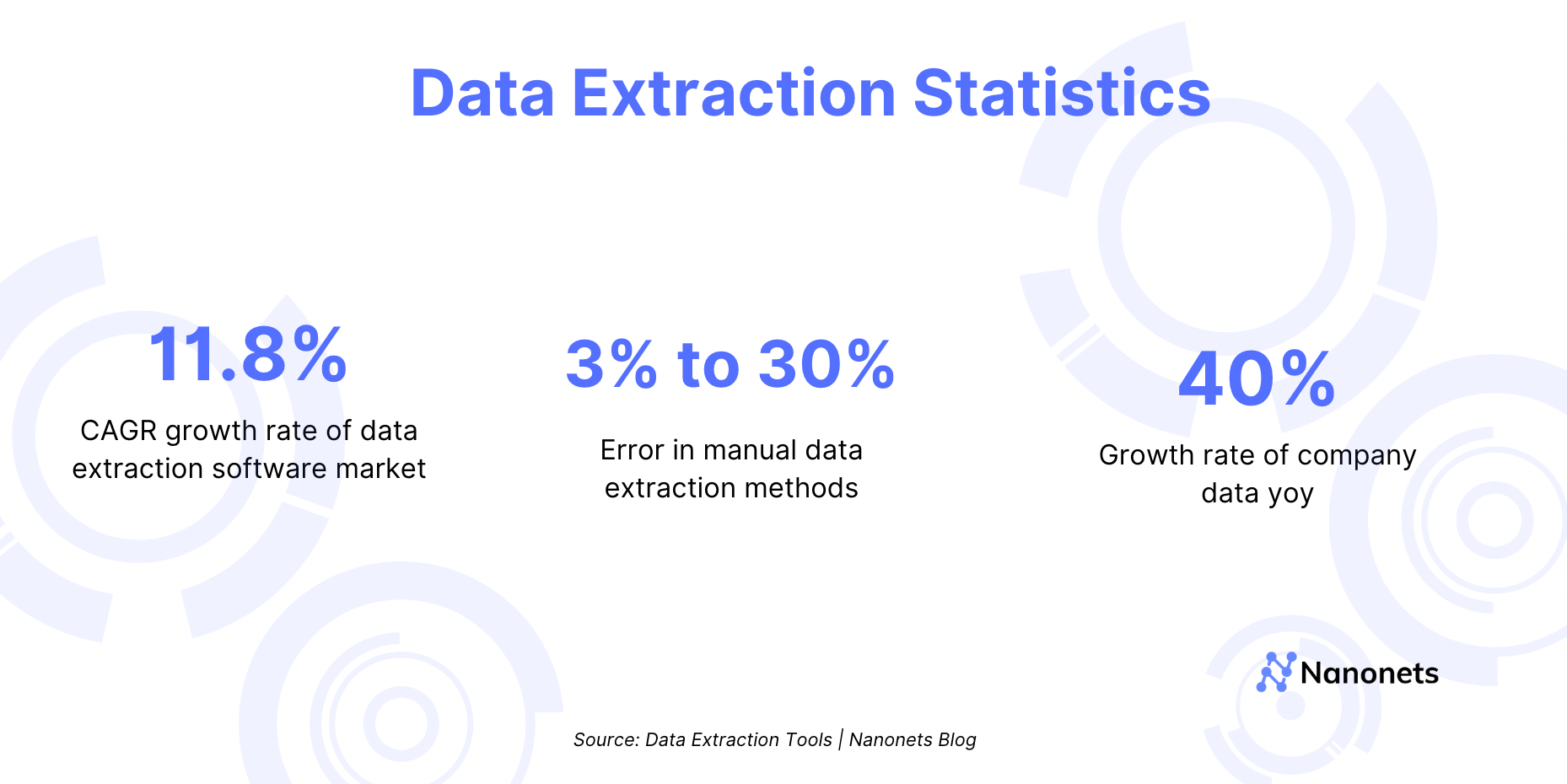

Businesses deal with data from customers, sales, social media, product feedback, and more. The growth of the data extraction software market shows how crucial data extraction software has become to data management at companies.

The data extraction software market was valued at $2.14 billion in 2019 and is expected to reach $4.90 billion by 2027, growing at a compound annual growth rate (CAGR) of 11.8% over the forecast period.

Corporations are collecting more data than ever, with the collected data increasing by 42% yearly!

Now that we know a lot of data is present, what if we get data analysts to do the job?

Want to automate data extraction? Save Time, Effort & Money while enhancing efficiency with Nanonets!

There are several factors you should consider when selecting a data extraction tool. Here are some of the most important to keep in mind:

- The level of compliance with security standards and regulations.

- The ability to secure sensitive data during extraction.

- The ability to retain metadata from source files, including author, time/date stamps, and formatting (such as indentations).

- Integration with other applications, such as document management systems or ERP systems, for automated notifications about metadata and file structure changes.

- Compatibility with various operating systems, such as Linux or macOS, for cross-platform use cases like desktop publishing workflows or mobile device backups, where users on different devices (such as smartphones or tablets) share files stored on shared drives or cloud services.

Conclusion

Data extraction transforms semi-structured or unstructured data into structured data.

Data extraction has become crucial due to the dramatic rise in unstructured and semi-structured data. Done well, it makes your work more precise, improves your chances of making sales, and makes your business more agile.

To realize the full potential of your company’s data, choose the data extraction software that best fits your needs. We hope this post helps you make that decision.

Try Nanonets Data Extraction Platform to extract data from documents, PDFs, and images on autopilot.

FAQ

What is data extraction?

Data extraction is the process of collecting specific data from a larger dataset or source for further analysis. This can include extracting data from databases, websites, or other structured or unstructured sources such as documents, images, or emails.

By extracting only the relevant data, businesses can save time and resources and gain valuable insights into their operations, customers, and competitors. This can help businesses improve their processes, identify new opportunities, and stay competitive in a rapidly changing marketplace.

What are data extraction tools?

A data extraction tool is a software program that lets users extract specific data from a larger dataset or source. Data extraction tools automate data extraction, making it faster, less error-prone, and more efficient than manual methods.

What are the benefits of using data extraction tools?

Data extraction tools are essential to data management for several reasons. Besides streamlining the process of obtaining the raw data that ultimately feeds applications and analytics, they make that process repeatable, automated, and sustainable. Using data extraction tools with a data warehouse is also a crucial step in modernizing it, because they let the warehouse integrate web-based sources alongside conventional on-premise sources. The advantages of data extraction tools are as follows:

Accuracy

Automated data extraction can be highly accurate. It lets you extract data from the source with high precision, so you can have more confidence in the extracted information and in the business processes that rely on it.

Control

Data extraction allows you to control all extractions, including selecting sources, designing extraction rules and defining destination data warehouse location/format. This gives you complete flexibility over what data can be extracted from various sources, where it will be stored, and how users will access it.

Efficiency & Productivity

With the correct tools, automated migration processes can significantly reduce the manual effort required to migrate large amounts of data between systems or locations. As well as saving time on each migration project itself, this also improves overall productivity by reducing the number of human errors made during manual processes (such as mistakes made during copy-pasting).

Scalability

One of the most significant advantages of data extraction tools is that they can handle large volumes of data and usually scale easily. This means you can extract data from multiple sources at once and collate it in your destination without changing configuration settings.
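As a rough illustration of extracting from several sources at once and collating the results, here is a minimal Python sketch using `concurrent.futures.ThreadPoolExecutor`. The extractor functions and their records are hypothetical stand-ins for real API calls, database queries, or file parsers, and threads are assumed to suit these I/O-bound sources.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable

Record = dict[str, object]


# Hypothetical extractors. Real ones would call an API, query a database,
# or parse files; these return canned records so the sketch runs offline.
def extract_crm() -> list[Record]:
    return [{"source": "crm", "customer": "Acme", "amount": 1200}]


def extract_invoices() -> list[Record]:
    return [
        {"source": "invoices", "customer": "Globex", "amount": 640},
        {"source": "invoices", "customer": "Acme", "amount": 300},
    ]


def extract_all(extractors: list[Callable[[], list[Record]]]) -> list[Record]:
    """Run every extractor concurrently, then collate all records into one list."""
    with ThreadPoolExecutor(max_workers=max(1, len(extractors))) as pool:
        # pool.map yields results in the same order as `extractors`.
        batches = pool.map(lambda extract: extract(), extractors)
        return [record for batch in batches for record in batch]


records = extract_all([extract_crm, extract_invoices])
print(len(records))  # 3
```

In this sketch, adding a source means appending another function to the list; the collation logic stays the same.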

Ease-of-use

Data extraction tools are generally very easy to use and set up, so there is little training required for users who want to perform migrations themselves.

What is a data extraction example?

An example of data extraction is email parsing. Data extraction software such as Nanonets can automatically extract data from emails, such as email addresses, attachments, subject lines, or specific keywords, and convert it into a CSV file.
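Nanonets’ email parser is a hosted product, but the underlying idea can be sketched with Python’s standard-library `email` package: parse a raw message, pull out the sender address, subject line, and attachment names, and write them as CSV rows. The sample message, the field names, and the helper functions are hypothetical.

```python
import csv
import io
from email import policy
from email.message import EmailMessage
from email.parser import BytesParser


def build_sample_email() -> bytes:
    """Create a hypothetical invoice email with one PDF attachment, as raw bytes."""
    msg = EmailMessage()
    msg["From"] = "Billing Team <billing@vendor.example>"
    msg["To"] = "ap@company.example"
    msg["Subject"] = "Invoice INV-1042"
    msg.set_content("Please find the invoice attached.")
    msg.add_attachment(b"%PDF-1.4 placeholder", maintype="application",
                       subtype="pdf", filename="INV-1042.pdf")
    return msg.as_bytes()


def extract_fields(raw: bytes) -> dict[str, str]:
    """Parse a raw email and pull out the sender address, subject, and attachment names."""
    message = BytesParser(policy=policy.default).parsebytes(raw)
    names = [part.get_filename() for part in message.iter_attachments()]
    return {
        "sender": message["From"].addresses[0].addr_spec,
        "subject": str(message["Subject"]),
        "attachments": ";".join(name for name in names if name),
    }


def to_csv(rows: list[dict[str, str]]) -> str:
    """Write the extracted fields as CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["sender", "subject", "attachments"])
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


print(to_csv([extract_fields(build_sample_email())]))
```

Keyword extraction from the body would be a further step on top of this, for example a regular expression over `message.get_body().get_content()`.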

Why use data extraction software?

Well, a data extraction solution can augment the work of data analysts, and the software has added benefits over manual processes.

The first reason to adopt data extraction software is to automate manual tasks that take up a lot of time. Over 25% of CEOs’ time is spent on manual data entry tasks, which can easily be automated with an intelligent automation platform.

Another reason is to reduce errors in data collection. Manual data extraction has an error rate of up to 30%, which introduces inconsistencies into your datasets.

Data extraction tools can also improve employee productivity, because employees can focus on more strategic work instead of tedious, repetitive manual tasks.

Data extraction is the process of pulling information from physical documents, PDFs, customer profiles, social media, blogs, and more.

Why is automated data entry better than manual data entry?

- Automated data extraction can regularly achieve accuracy rates of 95% or higher.

- It supports faster customer response times due to the quick processing of massive amounts of data.

- Although the initial investment can be high, the long-term return can outweigh it.

- Because data entered by the system is automatically reviewed and validated against previous models, little reprocessing is required.

- Artificial intelligence (AI) and machine learning algorithms adapt automatically to different file formats.

As a result, automated data extraction requires minimal human intervention and processes data quickly.

What are the steps involved in data extraction?

Data extraction is the first phase of the ETL (Extract, Transform, and Load) process. Only after the data has been properly extracted can you transform it and load it into the destinations you will use for data analysis.

Put simply, data extraction is obtaining data from a source system so it can be used in a data warehouse environment. The data extraction process can often be divided into three phases:

- Recognize Modifications: Keep an eye out for any changes to your data; for instance, a new table or column may have been added.

- Define the Data to be Extracted: Decide which portions of your data need to be extracted and specify them. With the complete (full) extraction approach, the entire data set is instead extracted all at once.

- Process Data Extraction: Once the preceding steps are done, run the extraction using either manually written scripts or automated data extraction tools.
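The three phases can be sketched with Python’s built-in `sqlite3` module: detect a schema change by comparing column names, fix the columns to pull, and run either a full extraction or an incremental one that only pulls rows modified after a stored timestamp (a “watermark”). The `orders` table, its `updated_at` column, and the watermark approach are assumptions for illustration; incremental extraction is a common alternative to full extraction, not something the steps above prescribe.

```python
import sqlite3


def make_source() -> sqlite3.Connection:
    """Create a hypothetical in-memory source table with a last-modified column."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, total REAL, updated_at TEXT)")
    conn.executemany(
        "INSERT INTO orders VALUES (?, ?, ?)",
        [(1, 99.5, "2024-01-01"), (2, 15.0, "2024-01-03"), (3, 42.0, "2024-01-05")],
    )
    return conn


def new_columns(conn: sqlite3.Connection, table: str, known: set[str]) -> set[str]:
    """Phase 1 (recognize modifications): report columns not seen on the last run."""
    # PRAGMA table_info yields (cid, name, type, notnull, default, pk) per column.
    # The table name is interpolated directly, so it must come from trusted code.
    current = {row[1] for row in conn.execute(f"PRAGMA table_info({table})")}
    return current - known


# Phase 2 (define the data): the columns this job extracts.
SELECT_ORDERS = "SELECT id, total, updated_at FROM orders"


def full_extract(conn: sqlite3.Connection) -> list[tuple]:
    """Phase 3, full approach: pull the entire data set at once."""
    return conn.execute(SELECT_ORDERS + " ORDER BY id").fetchall()


def incremental_extract(conn: sqlite3.Connection, watermark: str) -> list[tuple]:
    """Phase 3, incremental approach: pull only rows modified after `watermark`."""
    # ISO-8601 date strings compare correctly as plain text.
    return conn.execute(
        SELECT_ORDERS + " WHERE updated_at > ? ORDER BY id", (watermark,)
    ).fetchall()


conn = make_source()
conn.execute("ALTER TABLE orders ADD COLUMN currency TEXT")  # simulate a schema change
print(new_columns(conn, "orders", {"id", "total", "updated_at"}))  # {'currency'}
print(len(full_extract(conn)))  # 3
print(incremental_extract(conn, "2024-01-02"))
# [(2, 15.0, '2024-01-03'), (3, 42.0, '2024-01-05')]
```

After each run, a real job would typically store the largest `updated_at` it saw as the next watermark; that bookkeeping is left out of this sketch.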


This blog was originally published on 12 May 2022 and was updated on 1 January 2024 with fresh content.