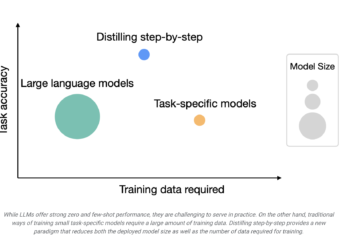

Researchers at the University of Washington and Google have developed a step-by-step distillation technique for training small, task-specific machine learning models with less data.

In recent years, large language models (LLMs) have revolutionized natural language processing, enabling unprecedented few-shot learning ...