UC Berkeley Researchers Propose RingAttention: A Memory-Efficient AI Approach to Reduce Transformer Memory Requirements

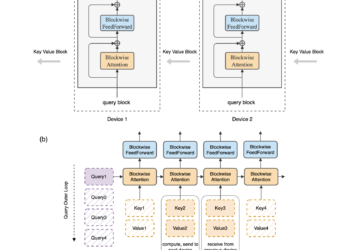

Transformers are a deep learning architecture underlying many next-generation AI models. They have ...