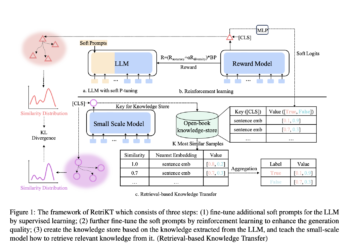

Researchers in China introduce retrieval-based knowledge transfer (RetriKT), a new compression paradigm for deploying large-scale pre-trained language models in real-world applications

Natural Language Processing (NLP) applications have achieved remarkable performance using pre-trained language models (PLMs) such as BERT and RoBERTa. However, due to their ...
