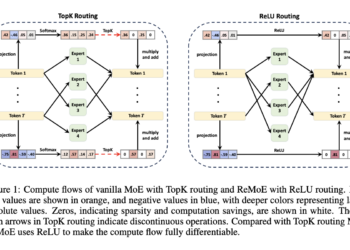

Tsinghua University researchers propose ReMoE: a fully differentiable MoE architecture with ReLU routing

Transformer models have significantly advanced artificial intelligence, delivering strong performance across a wide range of tasks. However, these advances often ...