Relaxed Recursive Transformers with Layered Low-Rank Adaptation: Achieving High Performance and Low Computational Cost on Large Language Models

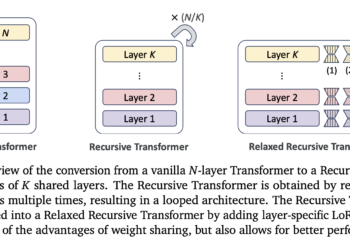

Large language models (LLMs) are built on deep learning architectures that capture complex linguistic relationships through stacked layers. Primarily based ...