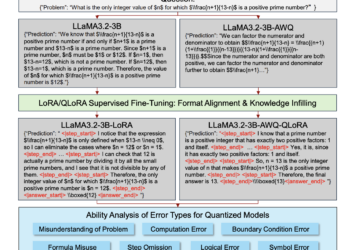

This AI article explores quantization techniques and their impact on mathematical reasoning in large language models

Mathematical reasoning is a core capability of artificial intelligence and is essential for solving arithmetic, geometric, and competition-level problems. Recently, ...