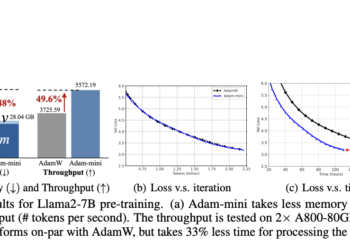

Moonshot AI and UCLA Researchers Release Moonlight: A 3B/16B-Parameter Mixture-of-Experts (MoE) Model Trained with 5.7T Tokens Using the Muon Optimizer

Training large language models (LLMs) has become central to advancing artificial intelligence, but it is not free of ...
