RARE (Retrieval-Augmented Reasoning Modeling): A scalable framework for domain-specific reasoning in lightweight language models

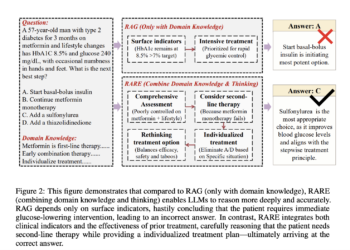

LLMs have demonstrated strong general-purpose performance across a range of tasks, including mathematical reasoning and automation. However, they struggle in ...
