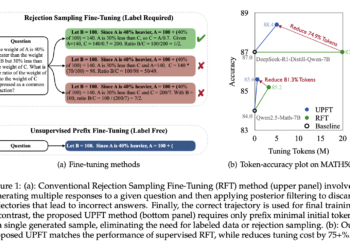

Tencent AI Lab presents Unsupervised Prefix Fine-Tuning (UPFT): an efficient method that trains models on just the first 8-32 tokens of single self-generated solutions

Unleashing a more efficient approach to fine-tuning reasoning in large language models, the recent work from Tencent AI ...
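The central idea in the headline is to train on only a short prefix of one sampled solution instead of the full solution. That idea can be sketched as a data-preparation step. This is a minimal illustration under stated assumptions, not Tencent AI Lab's implementation. The function name `build_prefix_example`, the default `prefix_len` of 16, and the `-100` ignore index (the default `ignore_index` of PyTorch's cross-entropy loss) are all hypothetical choices. Sampling the solution and the training loop itself are omitted.

```python
from typing import List, Tuple

# Assumption: -100 marks positions excluded from the loss, matching the
# default ignore_index of torch.nn.CrossEntropyLoss.
IGNORE_INDEX = -100


def build_prefix_example(
    prompt_ids: List[int],
    solution_ids: List[int],
    prefix_len: int = 16,  # hypothetical default within the 8-32 token range
) -> Tuple[List[int], List[int]]:
    """Keep only the first `prefix_len` tokens of a self-generated solution.

    Returns (input_ids, labels). Prompt positions are masked so that the
    loss is computed only on the retained solution prefix.
    """
    if not 8 <= prefix_len <= 32:
        raise ValueError("prefix_len must be between 8 and 32 tokens")
    prefix = solution_ids[:prefix_len]  # discard the rest of the solution
    input_ids = prompt_ids + prefix
    labels = [IGNORE_INDEX] * len(prompt_ids) + prefix
    return input_ids, labels


# Usage with made-up token IDs: a 3-token prompt and a 40-token sampled solution.
prompt = [101, 102, 103]
solution = list(range(200, 240))
ids, labels = build_prefix_example(prompt, solution, prefix_len=8)
print(len(ids), labels[:4])  # 11 [-100, -100, -100, 200]
```

Because the solution is truncated before tokenized batches are built, each training example is only a few tokens longer than its prompt. This is where the efficiency claim in the headline would come from under this reading.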
