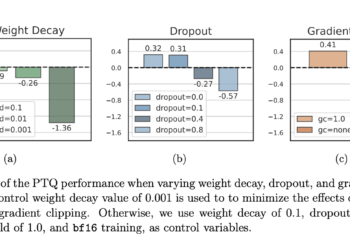

Cohere AI researchers investigate how to overcome quantization cliffs in large-scale machine learning models using optimization techniques

The rise of large language models (LLMs) in artificial intelligence has redefined natural language processing. However, implementing these colossal models ...