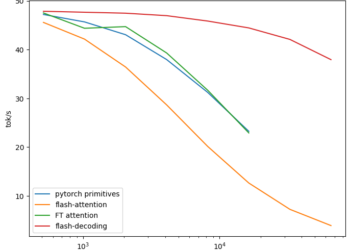

This AI research introduces Flash-Decoding: a new FlashAttention-based approach to perform long-context LLM inference up to 8x faster

Large language models (LLMs), such as ChatGPT and Llama, have attracted substantial attention due to their exceptional natural language processing ...