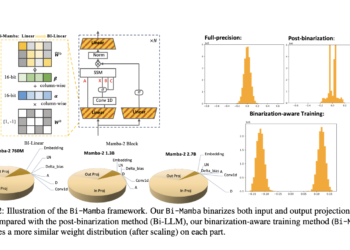

MBZUAI and CMU researchers present Bi-Mamba: a scalable and efficient 1-bit Mamba architecture for large language models, available in three sizes (780M, 1.3B, and 2.7B parameters)

The evolution of machine learning has brought significant advances in language models, which are essential for tasks such as text ...