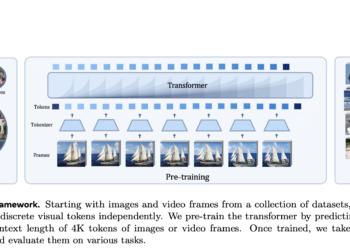

This AI article introduces Toto: Autoregressive Video Models for Unified Image and Video Pre-Training on Various Tasks

Autoregressive pretraining has proven highly effective in machine learning, especially for processing sequential data. Predictive modeling ...
