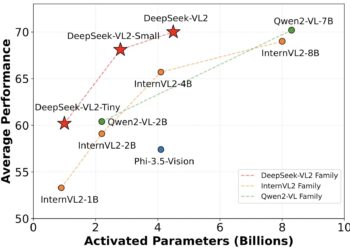

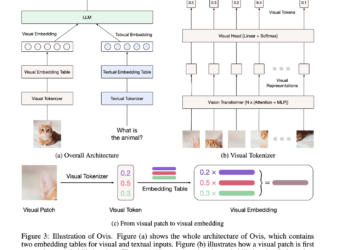

DeepSeek-AI Open-Sources the DeepSeek-VL2 Series: Three Models with 3B, 16B, and 27B Parameters and a Mixture-of-Experts (MoE) Architecture Redefining Vision-Language AI

The integration of vision and language capabilities in AI has led to advances in vision-language models (VLMs). These models aim ...
