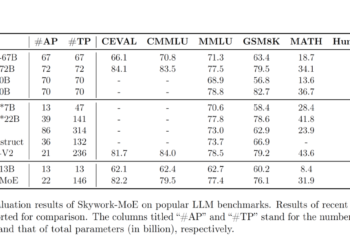

Skywork Team Introduces Skywork-MoE: A High-Performance Mixture-of-Experts (MoE) Model with 146B Parameters, 16 Experts, and 22B Activated Parameters

The development of large language models (LLMs) has been a focal point in advancing NLP capabilities. However, training these models ...
