Introduction

Interaction terms are incorporated into regression models to capture the effect of two or more independent variables on the dependent variable. Sometimes it is not just the simple relationship between the control variables and the target variable that is being investigated; interaction terms can be very useful in such instances. They are also useful when the relationship between one independent variable and the dependent variable is conditional on the level of another independent variable.

This, of course, implies that the effect of one predictor on the response variable depends on the level of another predictor. In this blog, we examine the idea of interaction terms through a simulated scenario: predicting over and over again the amount of time users would spend in an e-commerce channel using their past behavior.

Learning objectives

- Understand how interaction terms improve the predictive power of regression models.

- Learn how to create and incorporate interaction terms into a regression analysis.

- Analyze the impact of interaction terms on model accuracy through a practical example.

- Visualize and interpret the effects of interaction terms on predicted outcomes.

- Learn when and why to apply interaction terms in real-world scenarios.

This article was published as part of the Data Science Blogathon.

Understanding the basics of interaction terms

In real life, we do not encounter one variable operating in isolation from the others, and therefore real-life models are much more complex than those we study in classes. For example, the effect of end-user navigation actions, such as adding items to a cart, on the time spent on an e-commerce platform differs when the user adds the item to a cart and purchases it. Therefore, adding interaction terms as variables to a regression model allows these intersections to be recognized and therefore improve the model's fitness for purpose in terms of explaining patterns underlying the observed data and/or predicting future values of the dependent variable.

Mathematical representation

Let us consider a linear regression model with two independent variables, X1 and X2:

Y = β0 + β1X1 + β2X2 + ϵ,

where Y is the dependent variable, β0 is the intercept, β1 and β2 are the coefficients of the independent variables X1 and X2, respectively, and ϵ is the error term.

Add an interaction term

To include an interaction term between X1 and X2, we introduce a new variable X1⋅X2 :

Y = β0 + β1X1 + β2X2 + β3(X1⋅X2) + ϵ,

where β3 represents the interaction effect between X1 and X2. The term X1⋅X2 is the product of the two independent variables.

How do interaction terms influence regression coefficients?

- β0: The intercept, which represents the expected value of Y when all independent variables are zero.

- β1: The effect of X1 on Y when X2 is zero.

- β2: The effect of X2 on Y when X1 is zero.

- β3: The change in the effect of X1 on Y for a one-unit change in X2, or equivalently, the change in the effect of X2 on Y for a one-unit change in X1.

Example: User activity and time spent

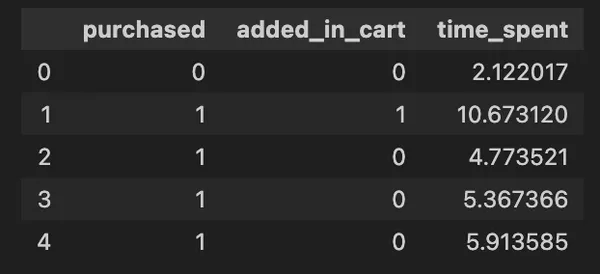

First, let's create a simulated dataset to represent user behavior in an online store. The data consists of:

- added_to_cart: Indicates whether a user has added products to their cart (1 for added and 0 for not added).

- purchased: Whether or not the user completed a purchase (1 for completion or 0 for no completion).

- time_spent: The amount of time a user spends on an e-commerce platform. We aim to predict the length of a user's visit to an online store by analyzing whether they add products to their cart and complete a transaction.

# import libraries

import pandas as pd

import numpy as np

# Generate synthetic data

def generate_synthetic_data(n_samples=2000):

np.random.seed(42)

added_in_cart = np.random.randint(0, 2, n_samples)

purchased = np.random.randint(0, 2, n_samples)

time_spent = 3 + 2*purchased + 2.5*added_in_cart + 4*purchased*added_in_cart + np.random.normal(0, 1, n_samples)

return pd.DataFrame({'purchased': purchased, 'added_in_cart': added_in_cart, 'time_spent': time_spent})

df = generate_synthetic_data()

df.head()Production:

Simulated scenario: user behavior on an e-commerce platform

As a next step, we will first build an ordinary least squares regression model that takes these market actions into account, but without taking into account their interaction effects. Our hypotheses are as follows: (Hypothesis 1) There is an effect of time spent on the website when each action is performed separately. Now, we will build a second model that includes the interaction term that exists between adding products to the cart and making a purchase.

This will help us contrast the impact of those actions, separately or together, on time spent on the website. This suggests that we want to find out whether users who add products to the cart and make a purchase spend more time on the site than the time spent when considering each behavior separately.

Model without interaction term

After building the model the following results were observed:

- With a mean square error (MSE) of 2.11, the model without the interaction term explains approximately 80% (test R-squared) and 82% (training R-squared) of the variance in time taken. This indicates that predictions of time taken are, on average, 2.11 square units off from actual time taken. While this model can be improved, it is reasonably accurate.

- Furthermore, the chart below indicates that while the model works quite well, there is still a lot of room for improvement, especially when it comes to capturing higher values of time spent.

# Import libraries

from sklearn.model_selection import train_test_split

from sklearn.linear_model import LinearRegression

from sklearn.metrics import mean_squared_error, r2_score

import statsmodels.api as sm

from sklearn.model_selection import train_test_split

import matplotlib.pyplot as plt

# Model without interaction term

x = df(('purchased', 'added_in_cart'))

y = df('time_spent')

X_train, X_test, y_train, y_test = train_test_split(x, y, test_size=0.3, random_state=42)

# Add a constant for the intercept

X_train_const = sm.add_constant(X_train)

X_test_const = sm.add_constant(X_test)

model = sm.OLS(y_train, X_train_const).fit()

y_pred = model.predict(X_test_const)

# Calculate metrics for model without interaction term

train_r2 = model.rsquared

test_r2 = r2_score(y_test, y_pred)

mse = mean_squared_error(y_test, y_pred)

print("Model without Interaction Term:")

print('Training R-squared Score (%):', round(train_r2 * 100, 4))

print('Test R-squared Score (%):', round(test_r2 * 100, 4))

print("MSE:", round(mse, 4))

print(model.summary())

# Function to plot actual vs predicted

def plot_actual_vs_predicted(y_test, y_pred, title):

plt.figure(figsize=(8, 4))

plt.scatter(y_test, y_pred, edgecolors=(0, 0, 0))

plt.plot((y_test.min(), y_test.max()), (y_test.min(), y_test.max()), 'k--', lw=2)

plt.xlabel('Actual')

plt.ylabel('Predicted')

plt.title(title)

plt.show()

# Plot without interaction term

plot_actual_vs_predicted(y_test, y_pred, 'Actual vs Predicted Time Spent (Without Interaction Term)')Production:

Model with one interaction term

- A better fit of the model with the interaction term is indicated by the scatterplot with the interaction term, which shows predicted values substantially closer to the actual values.

- The model explains much more of the variance in time spent on the interaction term, as demonstrated by the higher R-squared value of the test (from 80.36% to 90.46%).

- The model predictions with the interaction term are more accurate, as demonstrated by the lower MSE (from 2.11 to 1.02).

- The closer alignment of the points with the diagonal line, particularly for higher values of time_spent, indicates improved fit. The interaction term helps express how user actions collectively affect the amount of time spent.

# Add interaction term

df('purchased_added_in_cart') = df('purchased') * df('added_in_cart')

x = df(('purchased', 'added_in_cart', 'purchased_added_in_cart'))

y = df('time_spent')

X_train, X_test, y_train, y_test = train_test_split(x, y, test_size=0.3, random_state=42)

# Add a constant for the intercept

X_train_const = sm.add_constant(X_train)

X_test_const = sm.add_constant(X_test)

model_with_interaction = sm.OLS(y_train, X_train_const).fit()

y_pred_with_interaction = model_with_interaction.predict(X_test_const)

# Calculate metrics for model with interaction term

train_r2_with_interaction = model_with_interaction.rsquared

test_r2_with_interaction = r2_score(y_test, y_pred_with_interaction)

mse_with_interaction = mean_squared_error(y_test, y_pred_with_interaction)

print("\nModel with Interaction Term:")

print('Training R-squared Score (%):', round(train_r2_with_interaction * 100, 4))

print('Test R-squared Score (%):', round(test_r2_with_interaction * 100, 4))

print("MSE:", round(mse_with_interaction, 4))

print(model_with_interaction.summary())

# Plot with interaction term

plot_actual_vs_predicted(y_test, y_pred_with_interaction, 'Actual vs Predicted Time Spent (With Interaction Term)')

# Print comparison

print("\nComparison of Models:")

print("R-squared without Interaction Term:", round(r2_score(y_test, y_pred)*100,4))

print("R-squared with Interaction Term:", round(r2_score(y_test, y_pred_with_interaction)*100,4))

print("MSE without Interaction Term:", round(mean_squared_error(y_test, y_pred),4))

print("MSE with Interaction Term:", round(mean_squared_error(y_test, y_pred_with_interaction),4))Production:

Model performance comparison

- The predictions of the model without the interaction term are represented by the blue dots. When the actual values of time spent are higher, these dots are more spread out from the diagonal line.

- The predictions of the model with the interaction term are represented by the red dots. The model with the interaction term produces more accurate predictions, especially for higher values of actual time spent, since these points are closer to the diagonal line.

# Compare model with and without interaction term

def plot_actual_vs_predicted_combined(y_test, y_pred1, y_pred2, title1, title2):

plt.figure(figsize=(10, 6))

plt.scatter(y_test, y_pred1, edgecolors="blue", label=title1, alpha=0.6)

plt.scatter(y_test, y_pred2, edgecolors="red", label=title2, alpha=0.6)

plt.plot((y_test.min(), y_test.max()), (y_test.min(), y_test.max()), 'k--', lw=2)

plt.xlabel('Actual')

plt.ylabel('Predicted')

plt.title('Actual vs Predicted User Time Spent')

plt.legend()

plt.show()

plot_actual_vs_predicted_combined(y_test, y_pred, y_pred_with_interaction, 'Model Without Interaction Term', 'Model With Interaction Term')

Production:

Conclusion

The improvement in model performance with the interaction term demonstrates that sometimes adding interaction terms to the model can increase its significance. This example highlights how interaction terms can capture additional information that is not apparent from the main effects alone. In practice, considering interaction terms in regression models can potentially lead to more accurate and insightful predictions.

In this blog, we first generate a synthetic dataset to simulate user behavior on an e-commerce platform. We then build two regression models: one without interaction terms and one with interaction terms. By comparing their performance, we demonstrate the significant impact of interaction terms on the model accuracy.

Key points

- Regression models with interaction terms can help better understand the relationships between two or more variables and the target variable by capturing their combined effects.

- The inclusion of interaction terms can significantly improve model performance, as evidenced by the higher R-squared values and lower MSE in this guide.

- Interaction terms are not just theoretical concepts, they can be applied to real-world scenarios.

Frequently Asked Questions

A. Variables are created by multiplying two or more independent variables. They are used to capture the combined effect of these variables on the dependent variable. This can provide a more nuanced understanding of the relationships in the data.

A. You should consider using TI when you suspect that the effect of one independent variable on the dependent variable depends on the level of another independent variable. For example, if you believe that the impact of adding items to the cart on the time spent on an e-commerce platform depends on whether the user makes a purchase. You should include an interaction term between these variables.

A. The coefficient of an interaction term represents the change in the effect of one independent variable on the dependent variable given a one-unit change in another independent variable. For example, in our example above we have an interaction term between purchased and added_to_cart, the coefficient tells us how the effect of adding items to the cart changes on the time spent when making a purchase.

The media displayed in this article is not the property of Analytics Vidhya and is used at the discretion of the author.