A key motivation for AI research in mathematical reasoning is that it can deepen models' understanding of complex mathematical problems and strengthen their ability to solve them. Such capabilities matter in education, finance, and technology, fields that depend on both the accuracy of solutions and the speed with which they are reached. Gains here can carry over to better AI performance on specialized tasks and on logical reasoning in general.

One of the main challenges in this area is that large-scale, high-quality datasets for mathematical reasoning are time-consuming to build. Traditional construction methods often require substantial computational resources and a large amount of seed data, which makes them difficult to scale. This limits the range of mathematical problems a model can handle and leads to errors, especially when the numerical values in a problem change. The result is a logical consistency problem: models alter their reasoning incorrectly in response to such variations, which reduces their reliability.

State-of-the-art techniques for improving mathematical reasoning in AI, such as Chain-of-Thought and Program-of-Thought, have models reason through a problem step by step or embed calculations in their reasoning as code. However, many of these methods depend on large datasets and heavy computational resources, which makes them costly and hard to scale. They also do not fully address one of the major challenges: the inconsistencies that arise when changing the numerical values in a problem leads to erroneous deductions.
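To make the program-based style concrete, here is a minimal sketch in which each reasoning step is a line of executable code, so the interpreter rather than the model carries out the arithmetic. The word problem, the `solve` function, and its variable names are invented for illustration and are not taken from the paper.

```python
# Hypothetical word problem (not from the paper):
# "Pencils cost 3 dollars each. Maria buys 4 pencils and pays with
#  a 20 dollar bill. How much change does she receive?"

def solve() -> int:
    # Each reasoning step is one assignment; the interpreter does the math.
    price_per_pencil = 3
    pencils_bought = 4
    amount_paid = 20
    total_cost = price_per_pencil * pencils_bought
    change = amount_paid - total_cost
    return change

print(solve())  # 8
```

Note that the concrete numbers are hard-coded here, so a new program would be needed whenever the values in the problem change.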

A research team from the Beijing Academy of Artificial Intelligence and the China University of Mining and Technology has proposed InfinityMath, a scalable dataset for programmatic mathematical reasoning. According to the authors, InfinityMath decouples numerical values from the mathematical problems themselves, so that a large and diverse dataset can be created with a manageable amount of computational resources. The dataset was built from seven high-quality mathematical sources and contains over 101,380 data points, making it a fairly comprehensive resource for improving the reasoning ability of AI models.

InfinityMath’s methodology proceeds in several steps designed to maximize scalability and logical consistency. First, the numerical values in a mathematical problem are masked, which produces a generic template. That template then serves as the basis for generating a problem-solving program that does not reference specific numbers and therefore follows the same reasoning procedure for every possible numerical variation. This approach scales the data efficiently and improves the resilience of AI models across different mathematical challenges. The programs can be generated with sophisticated language models such as GPT-4 to reduce potential errors and improve overall quality.
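The masking and generic-program steps can be sketched roughly as follows. This is only an illustration under stated assumptions: the regular expression, the `{a}`, `{b}`, `{c}` placeholder format, the `mask_numbers` helper, and the hand-written `solve` function are all hypothetical. In the approach described above, the generic program would be produced by a language model rather than written by hand.

```python
import re

# Matches integers and simple decimals; an assumption made for this sketch.
NUMBER = re.compile(r"\d+(?:\.\d+)?")

def mask_numbers(problem: str) -> tuple[str, list[float]]:
    """Replace each number with a placeholder {a}, {b}, ... and collect the values."""
    values: list[float] = []

    def replace(match: re.Match) -> str:
        values.append(float(match.group()))
        # Lowercase-letter placeholders; this sketch supports up to 26 numbers.
        return "{" + chr(ord("a") + len(values) - 1) + "}"

    template = NUMBER.sub(replace, problem)
    return template, values

def solve(a: float, b: float, c: float) -> float:
    """Generic program for the template below: no concrete number appears in the body."""
    total_cost = a * b
    return c - total_cost

# Hypothetical word problem, invented for illustration.
problem = ("Pencils cost 3 dollars each. Maria buys 4 pencils and pays "
           "with 20 dollars. How much change does she get?")
template, values = mask_numbers(problem)
print(template)
print(solve(*values))     # 8.0
print(solve(2.5, 6, 50))  # 35.0, same logic with different numbers
```

Because `solve` takes the masked values as parameters, one template and one program can yield many consistent problem and answer pairs simply by sampling new numbers.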

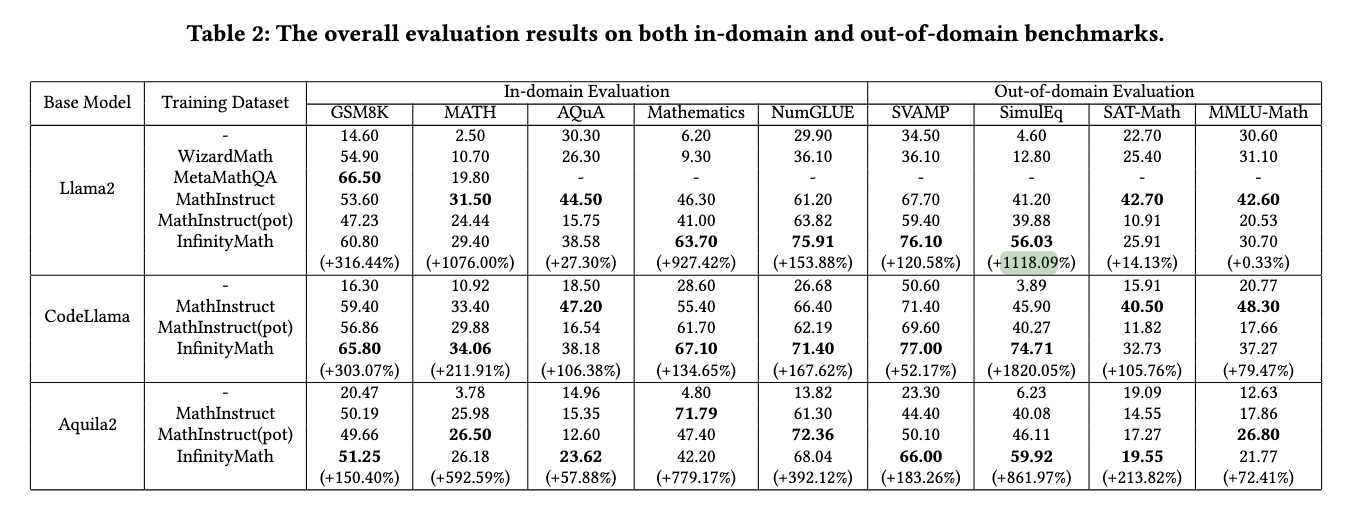

Models fine-tuned on the InfinityMath dataset performed well on several benchmarks. For example, after fine-tuning on InfinityMath, the Llama2 model improved its accuracy by 316.44% on the GSM8K dataset and by 1067.6% on the MATH dataset. CodeLlama, also fine-tuned on this dataset, showed similarly large improvements: 120.58% on SVAMP and 1118.09% on SimulEq. These results indicate that InfinityMath can increase the accuracy and robustness of AI models and improve their reliability across a range of mathematical problems. The fine-tuned models also stayed more logically consistent when numerical values varied, an area where models trained on traditional datasets often fall short.
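Improvements above 100% are most naturally read as relative gains over a baseline accuracy rather than as absolute percentage points. Assuming that reading, the calculation looks like the sketch below. The `relative_improvement` helper is hypothetical, and the accuracies passed to it are made up for illustration; they are not results from the paper.

```python
def relative_improvement(baseline: float, tuned: float) -> float:
    """Relative gain of `tuned` over `baseline`, expressed as a percentage."""
    if baseline <= 0:
        raise ValueError("baseline accuracy must be positive")
    return (tuned - baseline) / baseline * 100.0

# Made-up accuracies, for illustration only (not results from the paper).
print(relative_improvement(10.0, 40.0))  # 300.0
```

Under this reading, a low baseline can produce a very large relative figure even when the absolute accuracy after fine-tuning is moderate.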

The effect of InfinityMath thus extends beyond raw numerical accuracy to logical consistency, perhaps the most fundamental feature of mathematical reasoning. The authors ran stricter evaluations on GSM8K+ and MATH+, enhanced versions of existing test sets that differ from the originals only in their numerical values. Models trained on InfinityMath showed higher logical consistency, as well as higher accuracy, than models trained on other datasets. This result underscores InfinityMath's role in advancing mathematical reasoning at scale and in making an effective solution available to a broad class of AI models.
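One plausible way to quantify this kind of consistency is to count how often a model solves both an original problem and a variant of it that differs only in its numbers. The article does not spell out the paper's exact metric, so the definition below is an assumption, and `Item`, `consistency_rate`, and the two toy solvers are hypothetical stand-ins for real models and test data.

```python
import re
from typing import Callable, NamedTuple

class Item(NamedTuple):
    question: str
    answer: float

def is_correct(solver: Callable[[str], float], item: Item, tol: float = 1e-6) -> bool:
    return abs(solver(item.question) - item.answer) <= tol

def consistency_rate(solver: Callable[[str], float],
                     pairs: list[tuple[Item, Item]]) -> float:
    """Fraction of (original, variant) pairs where both problems are solved."""
    if not pairs:
        return 0.0
    both = sum(is_correct(solver, o) and is_correct(solver, v) for o, v in pairs)
    return both / len(pairs)

def generic_solver(question: str) -> float:
    # Toy stand-in for a model that applies the same logic to any numbers.
    a, b, c = (float(x) for x in re.findall(r"\d+(?:\.\d+)?", question))
    return c - a * b

def memorizing_solver(question: str) -> float:
    # Toy stand-in for a model that only recalls the original answer.
    return 8.0

# Invented problem pair that differs only in its numerical values.
pairs = [(
    Item("Pencils cost 3 dollars each. Maria buys 4 and pays with 20 dollars. "
         "What is her change?", 8.0),
    Item("Pencils cost 5 dollars each. Maria buys 7 and pays with 50 dollars. "
         "What is her change?", 15.0),
)]
print(consistency_rate(generic_solver, pairs))     # 1.0
print(consistency_rate(memorizing_solver, pairs))  # 0.0
```

The memorizing solver answers the original problem correctly but scores zero here, which is the failure mode that number-varied test sets are meant to expose.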

In summary, InfinityMath is a significant advance in mathematical reasoning that addresses two major challenges: scalability and logical consistency. The dataset was built by a research team from the Beijing Academy of Artificial Intelligence and the China University of Mining and Technology to provide a robust and highly extensible resource that helps AI models solve complex mathematical problems. By separating numerical values from the solution process, the InfinityMath approach makes it more efficient to build a large and diverse dataset, which in turn improves the accuracy and reliability of AI models. These gains show up across multiple benchmarks. The dataset could therefore further improve AI and its applications in various fields.

Take a look at the Paper and Dataset. All credit for this research goes to the researchers of this project.


Nikhil is a Consultant Intern at Marktechpost. He is pursuing an integrated dual degree in Materials at the Indian Institute of Technology, Kharagpur. Nikhil is an AI and Machine Learning enthusiast who is always researching applications in fields like Biomaterials and Biomedical Science. With a strong background in Materials Science, he is exploring new advancements and creating opportunities to contribute.
