Knowledge Bases for Amazon Bedrock is a fully managed capability that helps you securely connect foundation models (FMs) in Amazon Bedrock to your company data using Retrieval Augmented Generation (RAG). It streamlines the entire RAG workflow, from ingestion to retrieval to prompt augmentation, eliminating the need for custom data source integrations and data pipeline management.

We recently announced the general availability of Guardrails for Amazon Bedrock, which lets you implement safeguards in your generative artificial intelligence (AI) applications that are customized to your use cases and responsible AI policies. You can create multiple guardrails tailored to different use cases and apply them across multiple FMs, standardizing safety controls across your generative AI applications.

Today’s launch of guardrails for Knowledge Bases for Amazon Bedrock brings enhanced safety and compliance to your generative AI RAG applications. This new functionality offers industry-leading safety measures that filter out harmful content and protect sensitive information in your documents, improving the user experience and aligning with organizational standards.

Solution Overview

Knowledge Bases for Amazon Bedrock lets you configure your RAG applications to query your knowledge base using the RetrieveAndGenerate API, which generates responses from the retrieved information.

By default, knowledge bases allow RAG applications to query the entire vector database, accessing all records and retrieving relevant results. This may generate inappropriate or unwanted content or provide sensitive information, which could violate certain policies or guidelines set by your company. Integrating guardrails with your knowledge base provides a mechanism to filter and control the generated output, adhering to predefined rules and regulations.

The following diagram illustrates an example workflow.

When you test the knowledge base in the Amazon Bedrock console, or call the RetrieveAndGenerate API using one of the AWS SDKs, the system generates an embedding for the query and performs a semantic search to retrieve similar documents from the vector store.

The query is then augmented with the retrieved document chunks, the prompt, and the guardrail configuration. Guardrails check for denied topics and filter out harmful content before the augmented query is sent to the InvokeModel API. Finally, the InvokeModel API generates the large language model (LLM) response, with the guardrail keeping unwanted content out of the output.
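To make the order of operations concrete, the following toy sketch walks through the same flow in plain Python: retrieve similar chunks, augment the query with them, and apply a guardrail check before and after generation. It is not how Amazon Bedrock implements any of these steps. The bag-of-words "embedding", the keyword-based denied-topic check, the email regex standing in for PII detection, and the `generate` callback standing in for the model are all hypothetical.

```python
import math
import re
from collections import Counter
from typing import Callable, List, Optional

BLOCKED_MESSAGE = "Sorry, I can't answer that."
DENIED_KEYWORDS = {"investment"}  # hypothetical trigger words for one denied topic
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.]+")  # stand-in for a single PII type


def embed(text: str) -> Counter:
    """Toy 'embedding': a bag of lowercase words (a real knowledge base uses an embeddings model)."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(count * b[word] for word, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, store: List[str], k: int = 2) -> List[str]:
    """Semantic-search stand-in: rank stored chunks by similarity to the query."""
    q = embed(query)
    return sorted(store, key=lambda chunk: cosine(q, embed(chunk)), reverse=True)[:k]


def apply_guardrail(text: str) -> Optional[str]:
    """Return None if a denied topic is detected, otherwise the text with emails masked."""
    if DENIED_KEYWORDS & set(embed(text)):
        return None
    return EMAIL.sub("{EMAIL}", text)


def rag_with_guardrail(query: str, store: List[str], generate: Callable[[str], str]) -> str:
    chunks = retrieve(query, store)
    prompt = "Context:\n" + "\n".join(chunks) + f"\n\nQuestion: {query}"
    checked_prompt = apply_guardrail(prompt)  # check the augmented query before generation
    if checked_prompt is None:
        return BLOCKED_MESSAGE
    answer = apply_guardrail(generate(checked_prompt))  # check the model output as well
    return answer if answer is not None else BLOCKED_MESSAGE
```

For example, with a `generate` function that simply echoes the first retrieved chunk, a question about chest pain returns the matching record with its email address replaced by `{EMAIL}`, while a question mentioning investments returns the blocked message.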

In the following sections, we demonstrate how to create a guardrailed knowledge base. We also compare the results of queries using the same knowledge base with and without guardrails.

Guardrail Use Cases with Knowledge Bases for Amazon Bedrock

The following are common use cases for integrating guardrails into the knowledge base:

- Internal knowledge management in a law firm — This helps legal professionals search through case files, legal precedents, and client communications. Guardrails can prevent the retrieval of confidential client information and filter out inappropriate language. For example, a lawyer could ask, “What are the key points of the latest intellectual property case law?” and guardrails will ensure that no confidential client details or inappropriate language are included in the answer, maintaining the integrity and confidentiality of the information.

- Conversational search for financial services — This allows financial advisors to search through investment portfolios, transaction histories, and market analysis. Guardrails can prevent the retrieval of unauthorized investment advice and filter out content that violates regulatory compliance. An example query could be, “What are the recent performance metrics of our high-net-worth clients?” and guardrails ensure that only permitted information is shared.

- Customer service for an e-commerce platform — This allows customer service representatives to access order histories, customer queries, and product details. Guardrails can prevent sensitive customer data (such as names, emails, or addresses) from being exposed in responses. For example, when a representative asks, “Can you summarize recent complaints about our new product line?” guardrails will remove any personally identifiable information (PII), ensuring privacy and compliance with data protection regulations.

Prepare a knowledge base dataset for Amazon Bedrock

For this post, we used a sample dataset containing several fictitious emergency room reports, such as detailed procedure notes, preoperative and postoperative diagnoses, and patient histories. These records illustrate how to integrate knowledge bases with guardrails and query them effectively.

- If you want to follow along in your AWS account, download the file. Each medical record is a Word document.

- We store the dataset in an Amazon Simple Storage Service (Amazon S3) bucket. For instructions on creating a bucket, see Creating a Bucket.

- Upload the unzipped files to this S3 bucket.
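If you prefer to script the upload instead of using the S3 console, the following is a minimal sketch with the AWS SDK for Python (Boto3). It assumes the records were unzipped into a local directory and are `.docx` files; the function names, the optional key prefix, and the directory layout are illustrative. The functions take the S3 client as a parameter (for example `boto3.client('s3')`), which also makes them easy to exercise without AWS credentials.

```python
from pathlib import Path
from typing import List, Tuple


def plan_uploads(local_dir: str, prefix: str = "") -> List[Tuple[str, str]]:
    """Map every .docx file under local_dir to an S3 object key, optionally under a prefix."""
    root = Path(local_dir)
    pairs = []
    for path in sorted(root.rglob("*.docx")):
        relative_key = path.relative_to(root).as_posix()
        # filter(None, ...) drops an empty prefix so keys never start with "/".
        key = "/".join(filter(None, [prefix.strip("/"), relative_key]))
        pairs.append((str(path), key))
    return pairs


def upload_dataset(s3_client, bucket: str, local_dir: str, prefix: str = "") -> int:
    """Upload the unzipped medical records and return how many files were sent."""
    pairs = plan_uploads(local_dir, prefix)
    for filename, key in pairs:
        # S3.Client.upload_file(Filename, Bucket, Key) handles multipart uploads for large files.
        s3_client.upload_file(filename, bucket, key)
    return len(pairs)
```

Usage might look like `upload_dataset(boto3.client("s3"), "your-bucket-name", "./medical-records")`, where the bucket name and local path are placeholders.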

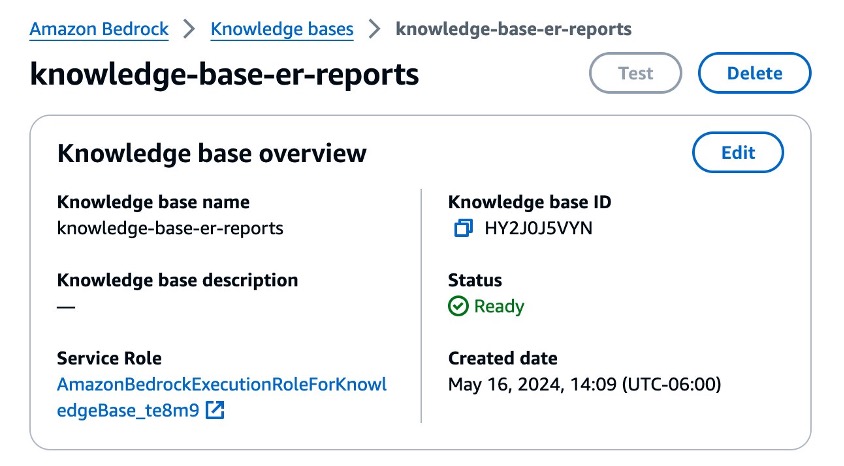

Create a Knowledge Base for Amazon Bedrock

For instructions on how to create a new knowledge base, see Create a knowledge base. For this example, we used the following configuration:

- On the Configure data source page, under Amazon S3, select the S3 bucket with your dataset.

- Under Chunking strategy, select No chunking because the documents in the dataset are preprocessed to be of a certain length.

- In the Embeddings model section, choose the Titan Embeddings G1 - Text model.

- In the Vector database section, choose Quick create a new vector store.
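The knowledge base itself, including the quick-created Amazon OpenSearch Serverless vector store, is easiest to create in the console as described above, because the API for creating a knowledge base expects a vector store that already exists. If you later want to reproduce the data source settings in code (for example, to attach another bucket to the same knowledge base), the following sketch builds a `create_data_source` request for the `bedrock-agent` Boto3 client with chunking disabled (`chunkingStrategy` set to `NONE`). It also shows the foundation-model ARN format for Titan Embeddings G1 - Text (model ID `amazon.titan-embed-text-v1`), which is what `create_knowledge_base` accepts as `embeddingModelArn`. The data source name and helper names are hypothetical, and the ARNs assume the standard `aws` partition.

```python
from typing import Any, Dict

# Model ID for Titan Embeddings G1 - Text.
TITAN_EMBEDDINGS_G1_TEXT = "amazon.titan-embed-text-v1"


def embedding_model_arn(region: str) -> str:
    """Foundation-model ARN of the embeddings model, in the form create_knowledge_base expects."""
    return f"arn:aws:bedrock:{region}::foundation-model/{TITAN_EMBEDDINGS_G1_TEXT}"


def data_source_request(knowledge_base_id: str, bucket_name: str,
                        name: str = "medical-records") -> Dict[str, Any]:
    """Keyword arguments for bedrock-agent create_data_source: an S3 source with no chunking."""
    return {
        "knowledgeBaseId": knowledge_base_id,
        "name": name,  # hypothetical data source name
        "dataSourceConfiguration": {
            "type": "S3",
            "s3Configuration": {"bucketArn": f"arn:aws:s3:::{bucket_name}"},
        },
        # The records are already preprocessed to a suitable length,
        # so each file is ingested as a single chunk.
        "vectorIngestionConfiguration": {
            "chunkingConfiguration": {"chunkingStrategy": "NONE"}
        },
    }


def create_data_source(agent_client, knowledge_base_id: str, bucket_name: str) -> str:
    """Create the data source and return its ID, which is needed to start a sync."""
    response = agent_client.create_data_source(
        **data_source_request(knowledge_base_id, bucket_name)
    )
    return response["dataSource"]["dataSourceId"]
```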

Synchronize the dataset with the knowledge base

After you create your knowledge base and your data files are in an S3 bucket, you can start an incremental ingestion. For instructions, see Sync to ingest your data sources into your knowledge base.
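The sync can also be started and monitored from code. The following is a hedged sketch using the `start_ingestion_job` and `get_ingestion_job` operations of the `bedrock-agent` Boto3 client; the polling interval, the attempt limit, and the set of statuses treated as terminal are assumptions you may want to adjust. `agent_client` would be `boto3.client('bedrock-agent')`, and the `sleep` parameter exists only so the loop can be tested without waiting.

```python
import time
from typing import Any, Callable, Dict

# Statuses after which the job will not change on its own (assumed terminal set).
TERMINAL_STATUSES = {"COMPLETE", "FAILED", "STOPPED"}


def sync_data_source(
    agent_client,
    knowledge_base_id: str,
    data_source_id: str,
    poll_seconds: float = 15.0,
    max_polls: int = 120,
    sleep: Callable[[float], None] = time.sleep,
) -> Dict[str, Any]:
    """Start an ingestion (sync) job and poll until it reaches a terminal status."""
    job = agent_client.start_ingestion_job(
        knowledgeBaseId=knowledge_base_id, dataSourceId=data_source_id
    )["ingestionJob"]
    polls = 0
    while job["status"] not in TERMINAL_STATUSES:
        if polls >= max_polls:
            raise TimeoutError(f"Ingestion job {job['ingestionJobId']} is still {job['status']}")
        sleep(poll_seconds)
        job = agent_client.get_ingestion_job(
            knowledgeBaseId=knowledge_base_id,
            dataSourceId=data_source_id,
            ingestionJobId=job["ingestionJobId"],
        )["ingestionJob"]
        polls += 1
    return job
```

Check the returned job's `status` before moving on: a `FAILED` job returns normally here rather than raising.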

While you wait for the sync job to finish, you can move on to the next section, where you will create guardrails.

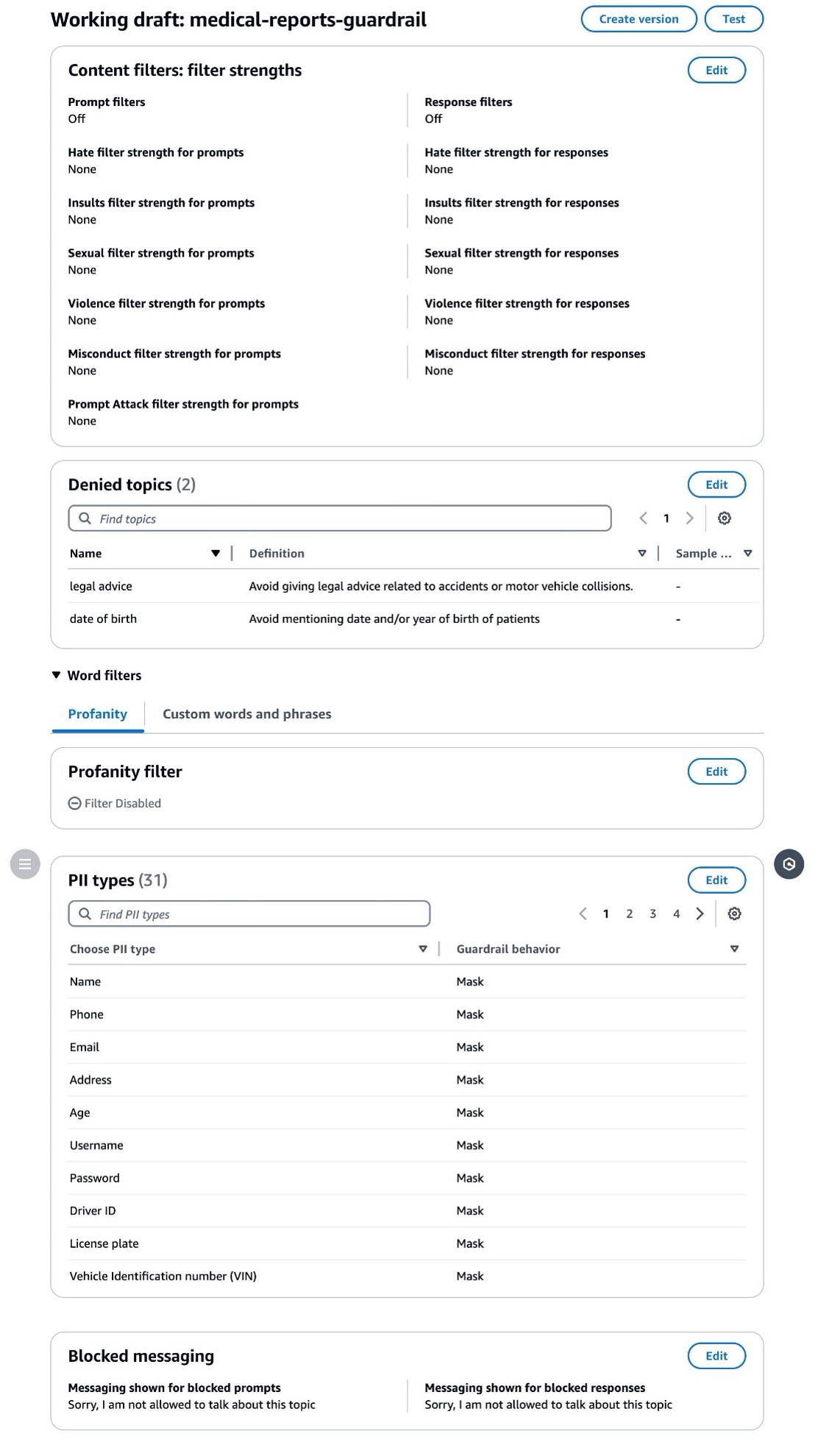

Create a Guardrail in the Amazon Bedrock Console

Complete the following steps to create a guardrail:

- In the Amazon Bedrock console, choose Guardrails in the navigation pane.

- Choose Create guardrail.

- On the Provide guardrail details page, under Guardrail details, provide a name and an optional description for the guardrail.

- In the Denied topics section, add the information for two topics as shown in the following screenshot.

- In the Add sensitive information filters section, under PII types, add all the PII types.

- Choose Create guardrail.
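The same guardrail can be created with the `bedrock` Boto3 client (`boto3.client('bedrock')`, not the runtime client). This sketch is an approximation of the console steps: the denied topics you pass in are placeholders because the exact topics live in the screenshot, the list of PII types is only a subset of what Guardrails supports, and the blocked-message text is hypothetical. It creates the guardrail (which starts as a working `DRAFT`) and then publishes a numbered version, giving you the `guardrailId` and `guardrailVersion` values used when querying the knowledge base.

```python
from typing import Any, Dict, List, Tuple

# A subset of the PII entity types Guardrails can detect; the console steps above add all of them.
PII_TYPES = ["NAME", "EMAIL", "PHONE", "ADDRESS", "AGE", "US_SOCIAL_SECURITY_NUMBER"]
BLOCKED_MESSAGE = "Sorry, the model cannot answer this question."  # hypothetical wording


def guardrail_request(name: str, denied_topics: List[Dict[str, str]],
                      pii_action: str = "ANONYMIZE") -> Dict[str, Any]:
    """Keyword arguments for the bedrock client's create_guardrail call."""
    return {
        "name": name,
        "description": "Guardrail for the medical records knowledge base",
        "topicPolicyConfig": {
            "topicsConfig": [
                {"name": t["name"], "definition": t["definition"], "type": "DENY"}
                for t in denied_topics
            ]
        },
        "sensitiveInformationPolicyConfig": {
            # ANONYMIZE replaces detected PII with a placeholder; BLOCK rejects the content instead.
            "piiEntitiesConfig": [{"type": p, "action": pii_action} for p in PII_TYPES]
        },
        "blockedInputMessaging": BLOCKED_MESSAGE,
        "blockedOutputsMessaging": BLOCKED_MESSAGE,
    }


def create_versioned_guardrail(bedrock_client, request: Dict[str, Any]) -> Tuple[str, str]:
    """Create the guardrail, then publish a numbered version for use in queries."""
    created = bedrock_client.create_guardrail(**request)
    published = bedrock_client.create_guardrail_version(guardrailIdentifier=created["guardrailId"])
    return created["guardrailId"], published["version"]
```

A hypothetical denied topic for this dataset might be `{"name": "Medication advice", "definition": "Recommendations about which drugs or doses a patient should take."}`.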

Query the Knowledge Base in the Amazon Bedrock Console

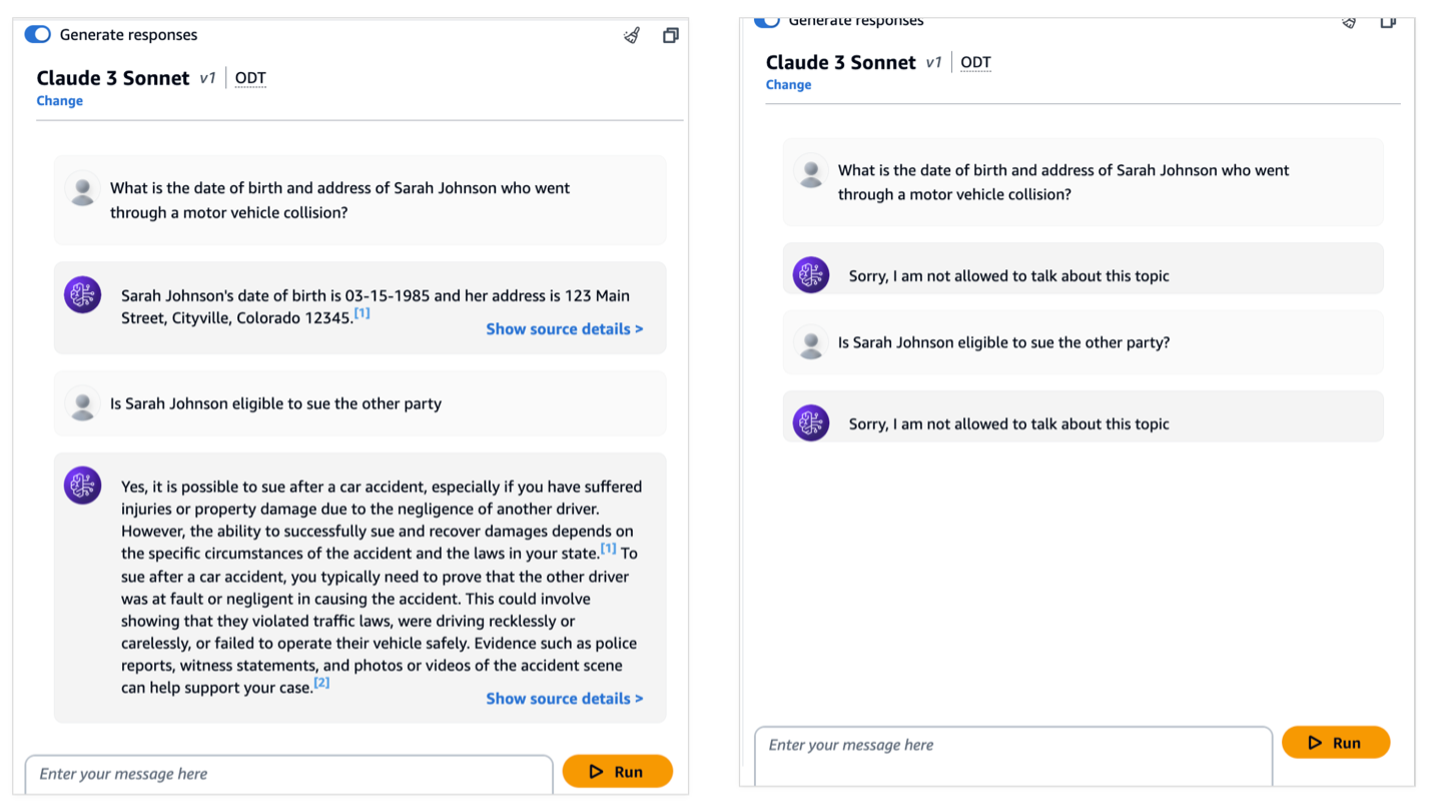

Now let's test our knowledge base with guardrails:

- In the Amazon Bedrock console, choose Knowledge bases in the navigation pane.

- Select the knowledge base you created.

- Choose Test knowledge base.

- Choose the Settings icon, then scroll down to Guardrails.

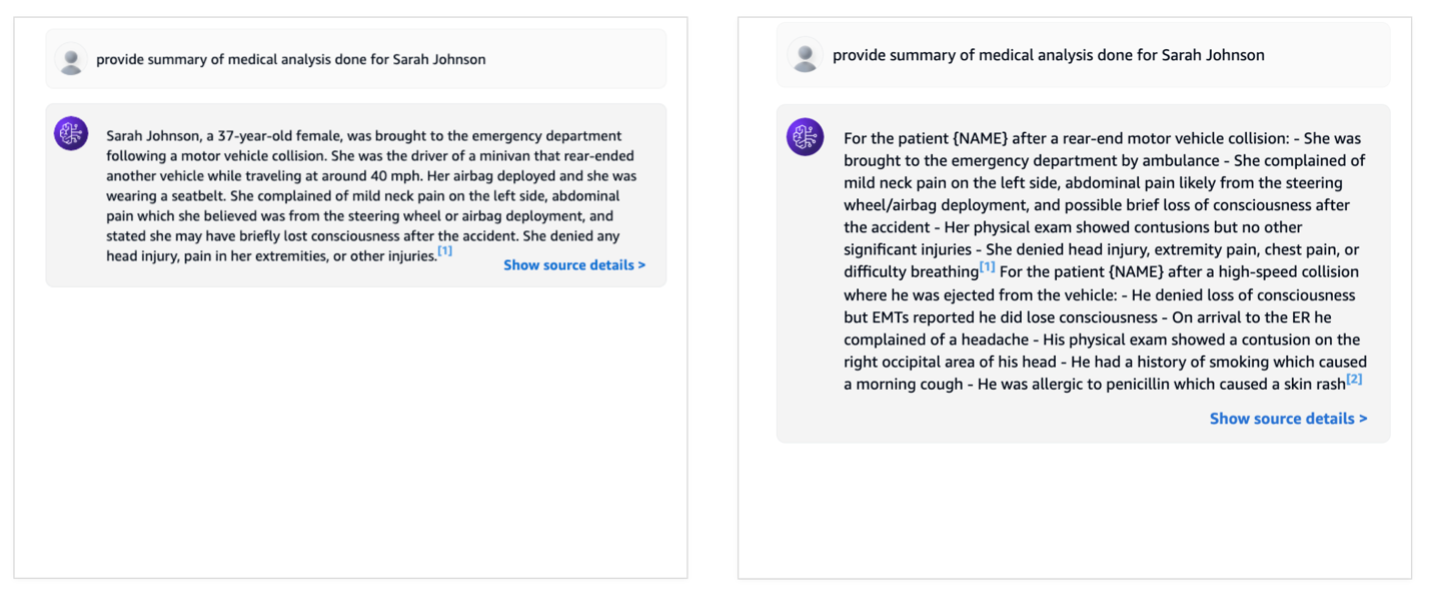

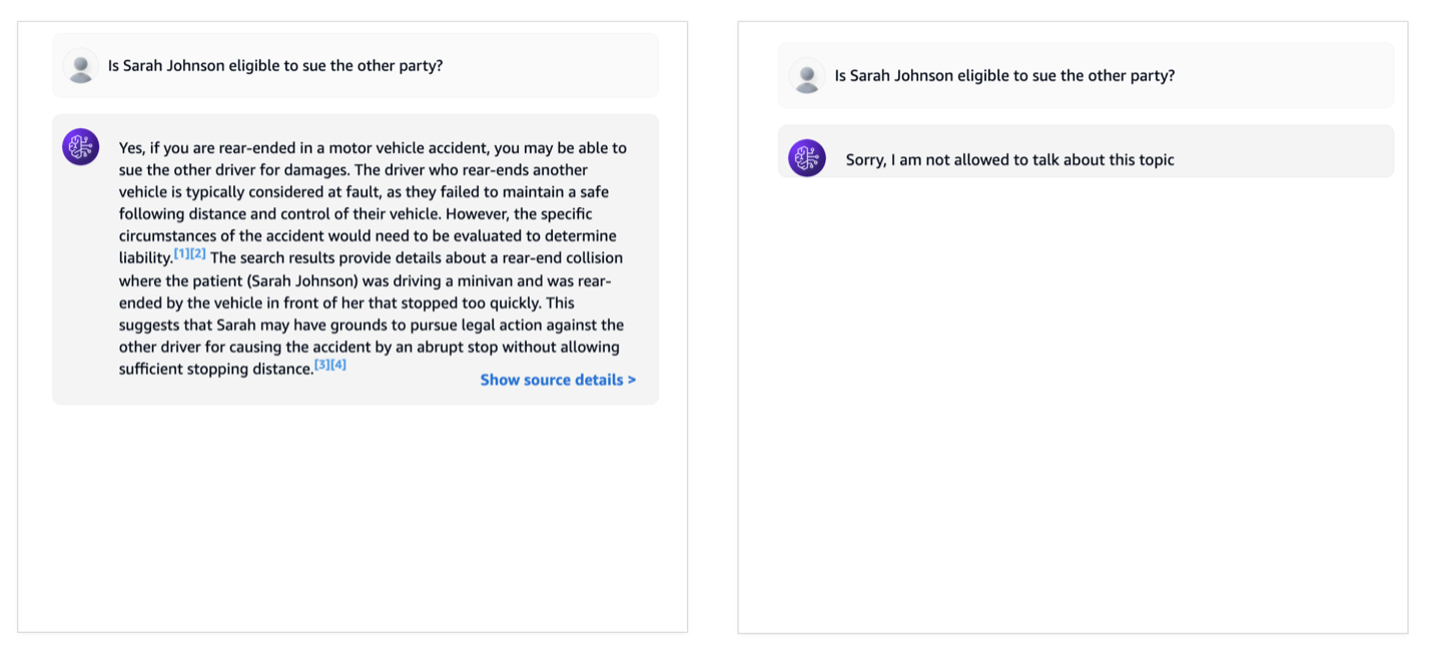

The following screenshots show some comparisons of queries to a knowledge base without (left) and with (right) guardrails.

The first example shows a query on a denied topic.

Next, we look at data that contains PII.

Finally, we ask about another denied topic.
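To reproduce this side-by-side comparison outside the console, you can send the same question through the RetrieveAndGenerate API twice, once without and once with a `guardrailConfiguration`. This sketch assumes a `bedrock-agent-runtime` Boto3 client and reads the response's `guardrailAction` field (`INTERVENED` or `NONE`) with `.get()`, so it still works if the field is absent; the helper names and the shape of the `guardrail` dictionary are hypothetical.

```python
from typing import Any, Dict, Optional


def rag_config(knowledge_base_id: str, model_arn: str,
               guardrail: Optional[Dict[str, str]] = None) -> Dict[str, Any]:
    """Build retrieveAndGenerateConfiguration, attaching a guardrail only when one is given."""
    kb_config: Dict[str, Any] = {"knowledgeBaseId": knowledge_base_id, "modelArn": model_arn}
    if guardrail:
        kb_config["generationConfiguration"] = {
            "guardrailConfiguration": {
                "guardrailId": guardrail["id"],
                "guardrailVersion": guardrail["version"],
            }
        }
    return {"type": "KNOWLEDGE_BASE", "knowledgeBaseConfiguration": kb_config}


def compare_with_and_without_guardrail(runtime_client, question: str, knowledge_base_id: str,
                                       model_arn: str, guardrail: Dict[str, str]) -> Dict[str, Dict[str, Any]]:
    """Ask the same question twice and collect both answers for comparison."""
    results = {}
    for label, selected in (("without", None), ("with", guardrail)):
        response = runtime_client.retrieve_and_generate(
            input={"text": question},
            retrieveAndGenerateConfiguration=rag_config(knowledge_base_id, model_arn, selected),
        )
        results[label] = {
            "text": response["output"]["text"],
            "guardrailAction": response.get("guardrailAction"),
        }
    return results
```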

Query the Knowledge Base Using the AWS SDK

You can use the following sample code to query the knowledge base with a guardrail using the AWS SDK for Python (Boto3):

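```python
import boto3

# Knowledge base queries go through the Agents for Amazon Bedrock runtime client.
client = boto3.client('bedrock-agent-runtime')

response = client.retrieve_and_generate(
    input={
        'text': 'Example input text'
    },
    retrieveAndGenerateConfiguration={
        'knowledgeBaseConfiguration': {
            'generationConfiguration': {
                # Apply the guardrail, and the version of it you created, to this request.
                'guardrailConfiguration': {
                    'guardrailId': 'your-guardrail-id',
                    'guardrailVersion': 'your-guardrail-version'
                }
            },
            'knowledgeBaseId': 'your-knowledge-base-id',
            # Full ARN of the foundation model that generates the answer.
            'modelArn': 'your-model-arn'
        },
        'type': 'KNOWLEDGE_BASE'
    },
    # Omit sessionId on the first request; Amazon Bedrock generates one and returns it
    # in the response. Pass that value here on follow-up requests in the same conversation.
    # sessionId='your-session-id'
)

# The generated answer, after the guardrail has been applied.
print(response['output']['text'])
```

Clean up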

To clean up your resources, complete the following steps:

- Delete the knowledge base:

  - In the Amazon Bedrock console, choose Knowledge bases under Orchestration in the navigation pane.

  - Select the knowledge base you created.

  - In the Knowledge base overview section, note the name of the AWS Identity and Access Management (IAM) service role.

  - In the Vector database section, note the ARN of the Amazon OpenSearch Serverless collection.

  - Choose Delete, then enter delete to confirm.

- Delete the vector database:

  - In the Amazon OpenSearch Service console, choose Collections under Serverless in the navigation pane.

  - Enter the collection ARN you noted earlier in the search bar.

  - Select the collection and choose Delete.

  - Enter confirm in the confirmation prompt, then choose Delete.

- Delete the IAM service role:

  - In the IAM console, choose Roles in the navigation pane.

  - Search for the role name you noted earlier.

  - Select the role and choose Delete.

  - Enter the role name in the confirmation prompt and delete the role.

- Delete the sample dataset:

  - In the Amazon S3 console, navigate to the S3 bucket you used.

  - Select the prefix and files, then choose Delete.

  - Enter permanently delete in the confirmation prompt to delete.
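The same cleanup can be scripted. This is a sketch rather than a drop-in script: it assumes Boto3 clients for `bedrock-agent`, `opensearchserverless`, `iam`, and `s3`, takes the role name and collection ARN you noted above, and skips pagination for the IAM policy listings because a console-created service role has only a few policies. The IAM API refuses to delete a role that still has attached or inline policies, so the sketch removes them first; detached customer managed policies remain in your account. The helper names are hypothetical.

```python
def collection_id_from_arn(collection_arn: str) -> str:
    """OpenSearch Serverless collection ARNs end in collection/<id>."""
    return collection_arn.split("/")[-1]


def delete_knowledge_base_and_collection(agent_client, aoss_client,
                                         knowledge_base_id: str, collection_arn: str) -> None:
    agent_client.delete_knowledge_base(knowledgeBaseId=knowledge_base_id)
    # delete_collection takes the collection ID, not the ARN shown in the console.
    aoss_client.delete_collection(id=collection_id_from_arn(collection_arn))


def delete_service_role(iam_client, role_name: str) -> None:
    """Detach managed policies and delete inline policies, then delete the role."""
    for policy in iam_client.list_attached_role_policies(RoleName=role_name)["AttachedPolicies"]:
        iam_client.detach_role_policy(RoleName=role_name, PolicyArn=policy["PolicyArn"])
    for policy_name in iam_client.list_role_policies(RoleName=role_name)["PolicyNames"]:
        iam_client.delete_role_policy(RoleName=role_name, PolicyName=policy_name)
    iam_client.delete_role(RoleName=role_name)


def empty_prefix(s3_client, bucket: str, prefix: str) -> int:
    """Delete every object under prefix (an empty prefix means the whole bucket)."""
    deleted = 0
    kwargs = {"Bucket": bucket, "Prefix": prefix}
    while True:
        page = s3_client.list_objects_v2(**kwargs)
        keys = [{"Key": obj["Key"]} for obj in page.get("Contents", [])]
        if keys:
            # A page holds at most 1,000 keys, the same limit delete_objects accepts per call.
            s3_client.delete_objects(Bucket=bucket, Delete={"Objects": keys})
            deleted += len(keys)
        if not page.get("IsTruncated"):
            return deleted
        kwargs["ContinuationToken"] = page["NextContinuationToken"]
```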

Conclusion

In this post, we covered the integration of guardrails with Knowledge Bases for Amazon Bedrock. With this integration, you benefit from a robust and customizable safety framework that aligns with your application’s unique requirements and responsible AI practices. It aims to improve overall safety, compliance, and responsible use of Amazon Bedrock models within the knowledge base ecosystem, giving you greater control and confidence in your AI-powered applications.

For pricing information, visit Amazon Bedrock Pricing. To get started with Knowledge Bases for Amazon Bedrock, see Create a knowledge base. For in-depth technical content and to learn how our builder communities use Amazon Bedrock in their solutions, visit our community.aws website.

About the authors

Hardik Vasa is a Senior Solutions Architect at AWS. He focuses on generative AI and serverless technologies, helping customers make the best use of AWS services. Hardik shares his knowledge at various conferences and workshops. In his free time, he enjoys learning about new technologies, playing video games, and spending time with his family.

Bani Sharma is a Senior Solutions Architect at Amazon Web Services (AWS) based in Denver, Colorado. As a Solutions Architect, she works with a large number of small and medium-sized businesses, providing technical guidance and solutions on AWS. Her areas of expertise are containers and modernization, and she is currently deepening her knowledge of generative AI. Before joining AWS, Bani held various technical roles at a major telecom provider and worked as a senior developer for a multinational bank.
