Customer service organizations today face an immense opportunity. As customer expectations grow, brands can creatively apply new innovations to transform the customer experience. Although meeting rising customer demands poses challenges, the latest advances in conversational artificial intelligence (AI) enable businesses to meet these expectations.

Customers today expect timely answers to their questions that are helpful, accurate, and tailored to their needs. The new QnAIntent, powered by Amazon Bedrock, can meet these expectations by understanding questions posed in natural language and responding conversationally in real time using your own authoritative knowledge sources. Our Retrieval Augmented Generation (RAG) approach allows Amazon Lex to take advantage of both the breadth of knowledge available in repositories and the fluency of large language models (LLMs).

Amazon Bedrock is a fully managed service that offers a choice of high-performing foundation models (FMs) from leading AI companies such as AI21 Labs, Anthropic, Cohere, Meta, Mistral AI, Stability AI, and Amazon through a single API, along with a broad set of capabilities to build generative AI applications with security, privacy, and responsible AI.

In this post, we show you how to add generative AI question answering capabilities to your bots. You can do this using your own curated knowledge sources and without writing a single line of code.

Read on to discover how QnAIntent can transform your customer experience.

Solution Overview

Implementing the solution consists of the following high-level steps:

- Create an Amazon Lex bot.

- Create an Amazon Simple Storage Service (Amazon S3) bucket and upload a PDF file that contains the information used to answer questions.

- Create a knowledge base that splits your data into chunks and generates embeddings using the Amazon Titan Embeddings model. As part of this process, Knowledge Bases for Amazon Bedrock automatically creates an Amazon OpenSearch Serverless vector search collection to hold your vectorized data.

- Add a new QnAIntent intent that uses the knowledge base to find answers to customer questions and then uses the Anthropic Claude model to generate answers to questions and follow-up questions.

Prerequisites

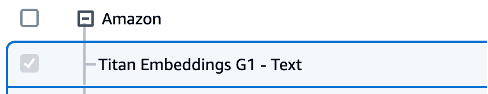

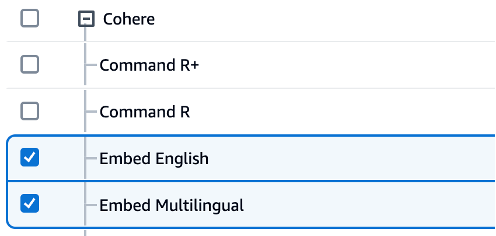

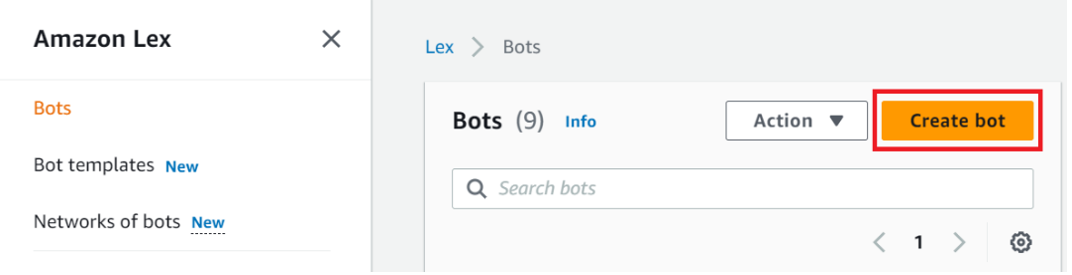

To follow along with the features described in this post, you need access to an AWS account with permissions to access Amazon Lex, Amazon Bedrock (with access to Anthropic Claude models and Amazon Titan or Cohere Embed embeddings models), Knowledge Bases for Amazon Bedrock, and the OpenSearch Serverless vector engine. To request access to models on Amazon Bedrock, complete the following steps:

- In the Amazon Bedrock console, choose Model access in the navigation pane.

- Choose Manage model access.

- Select the Amazon and Anthropic models. (You can also choose to use Cohere models for embeddings.)

- Choose Request model access.

Create an Amazon Lex bot

If you already have a bot that you want to use, you can skip this step.

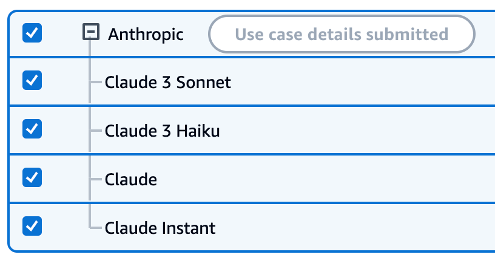

- In the Amazon Lex console, choose Bots in the navigation pane.

- Choose Create bot.

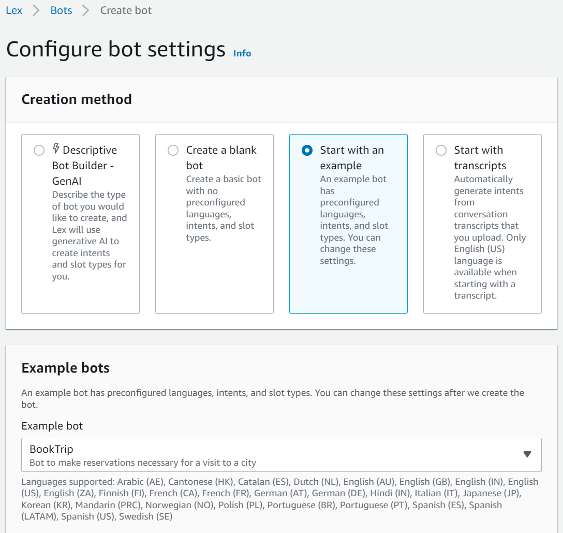

- Select Start with an example and choose the BookTrip example bot.

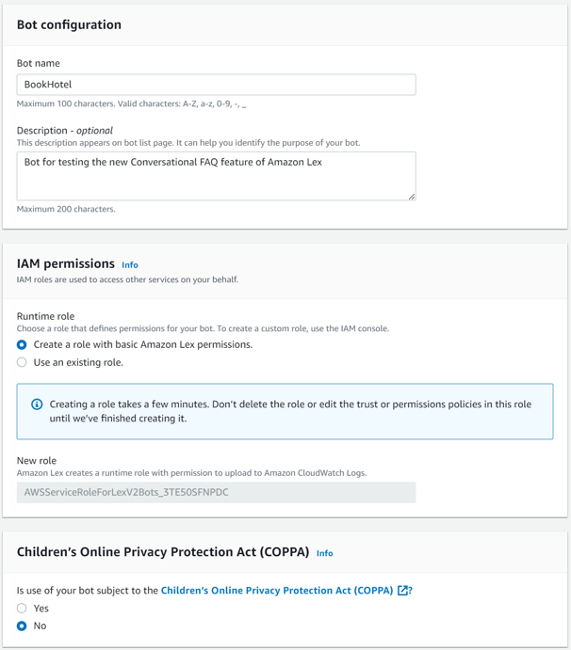

- For Bot name, enter a name for the bot (for example, BookHotel).

- For Runtime role, select Create a role with basic Amazon Lex permissions.

- In the Children's Online Privacy Protection Act (COPPA) section, you can select No because this bot is not intended for children under 13 years of age.

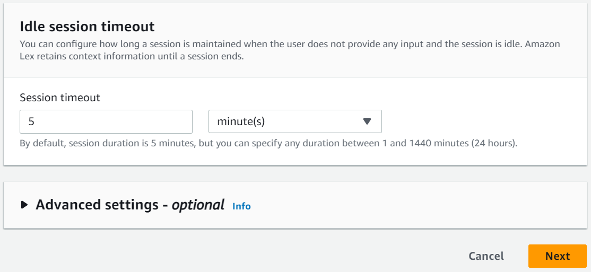

- Keep the Idle session timeout set to 5 minutes.

- Choose Next.

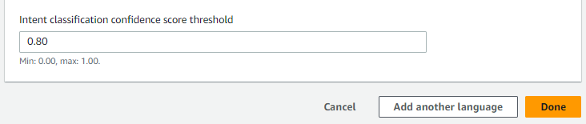

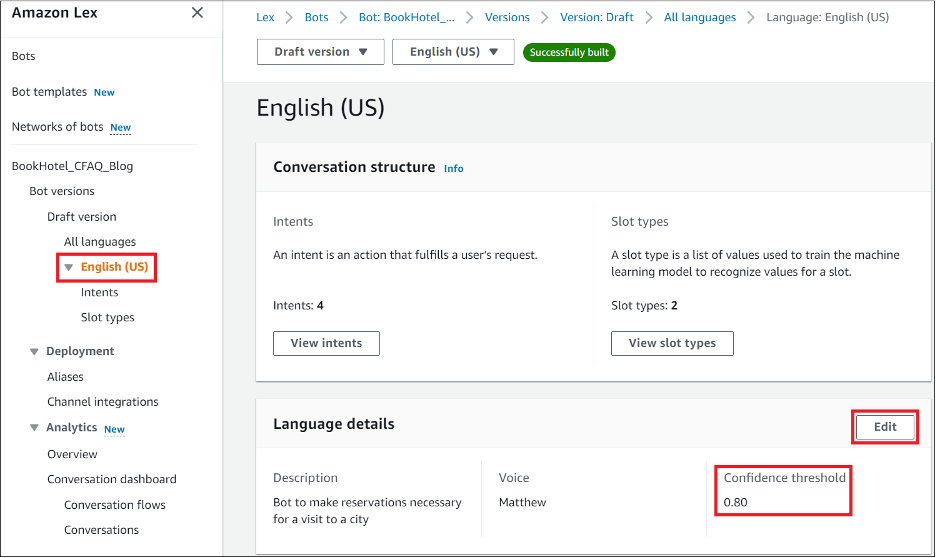

- When using QnAIntent to answer questions in a bot, you may want to increase the confidence threshold of the intent classification so that your questions are not accidentally interpreted as matching one of your intents. We set this to 0.8 for now. You may need to adjust this up or down based on your own testing.

- Choose Done.

- Choose Save intent.
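If you would rather script this setup than click through the console, the choices above map fairly directly onto the Amazon Lex V2 model-building API. The following is a minimal boto3 sketch under stated assumptions, not the procedure used in this post: it creates an empty bot rather than the BookTrip example, it expects an existing IAM role ARN instead of creating one, and the `en_US` locale and the helper names are illustrative.

```python
def bot_settings(name: str, role_arn: str) -> dict:
    """Build create_bot arguments that mirror the console choices above."""
    return {
        "botName": name,
        "roleArn": role_arn,                      # assumed to exist already
        "dataPrivacy": {"childDirected": False},  # COPPA section: No
        "idleSessionTTLInSeconds": 5 * 60,        # 5-minute idle session timeout
    }


def locale_settings(bot_id: str, threshold: float = 0.8) -> dict:
    """Build create_bot_locale arguments with the confidence threshold."""
    if not 0.0 <= threshold <= 1.0:
        raise ValueError("threshold must be between 0 and 1")
    return {
        "botId": bot_id,
        "botVersion": "DRAFT",
        "localeId": "en_US",  # assumption: English (US)
        "nluIntentConfidenceThreshold": threshold,
    }


def create_bot(name: str, role_arn: str) -> str:
    """Create the bot and its locale; returns the new bot ID."""
    import boto3  # imported here so the helpers above work without AWS access

    lex = boto3.client("lexv2-models")
    bot_id = lex.create_bot(**bot_settings(name, role_arn))["botId"]
    # The bot must be available before a locale can be added to it.
    lex.get_waiter("bot_available").wait(botId=bot_id)
    lex.create_bot_locale(**locale_settings(bot_id))
    return bot_id
```

Keeping the request arguments in small builder functions makes it easy to review the settings (for example, the 0.8 threshold) before anything is created in your account.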

Upload content to Amazon S3

Now create an S3 bucket to store the documents you want to use for your knowledge base.

- In the Amazon S3 console, choose Buckets in the navigation pane.

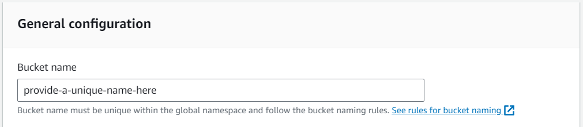

- Choose Create bucket.

- For Bucket name, enter a unique name.

- Keep the default values for all other options and choose Create bucket.

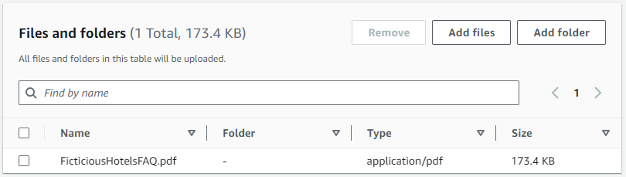

For this post, we created an FAQ document for a fictional hotel chain called Example Corp FictitiousHotels. Download the PDF document to follow along.

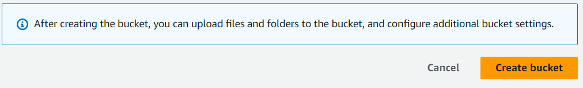

- On the Buckets page, navigate to the bucket you created.

If you don't see it, you can search for it by name.

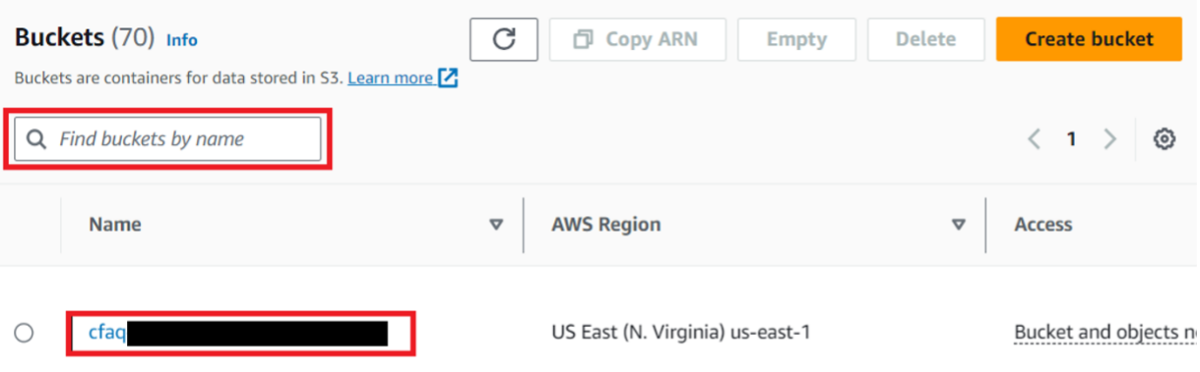

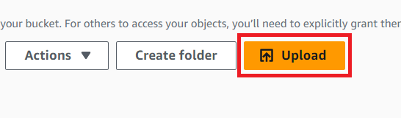

- Choose Upload.

- Choose Add files.

- Choose the ExampleCorpFicticiousHotelsFAQ.pdf file that you downloaded.

- Choose Upload.

The file will now be accessible in the S3 bucket.
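The upload can also be scripted. The sketch below assumes the bucket already exists and that your credentials can write to it; the bucket name, the optional key prefix, and the helper names are hypothetical.

```python
from pathlib import Path


def object_key(local_path: str, prefix: str = "") -> str:
    """Derive the S3 object key from the local file name and an optional prefix."""
    name = Path(local_path).name
    prefix = prefix.strip("/")
    return f"{prefix}/{name}" if prefix else name


def upload_faq(bucket: str, local_path: str, prefix: str = "") -> str:
    """Upload the FAQ document and return its S3 URI."""
    import boto3  # imported here so object_key can be used without AWS access

    key = object_key(local_path, prefix)
    boto3.client("s3").upload_file(local_path, bucket, key)
    return f"s3://{bucket}/{key}"
```

For example, `upload_faq("my-example-bucket", "ExampleCorpFicticiousHotelsFAQ.pdf")` would place the file at the root of the bucket, which matches what the console steps do.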

Create a knowledge base

Now you can configure the knowledge base:

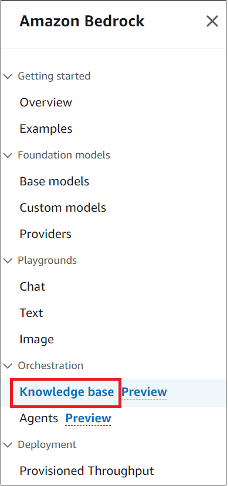

- In the Amazon Bedrock console, choose Knowledge bases in the navigation pane.

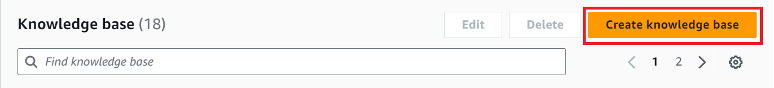

- Choose Create knowledge base.

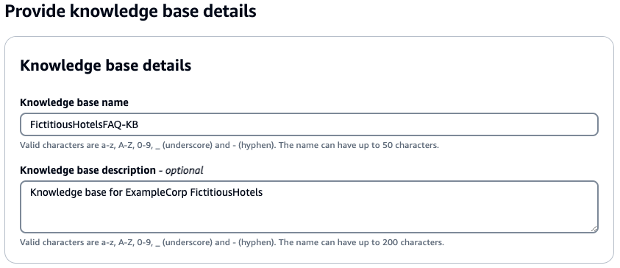

- For Knowledge base name, enter a name.

- For Knowledge base description, enter an optional description.

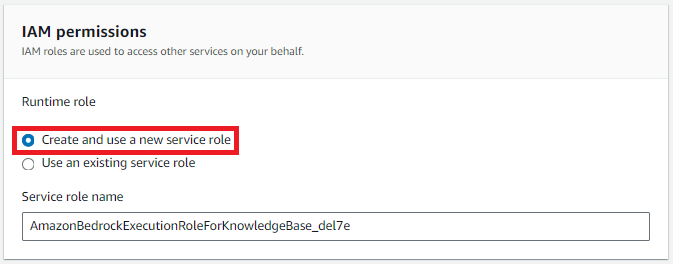

- Select Create and use a new service role.

- For Service role name, enter a name or keep the default.

- Choose Next.

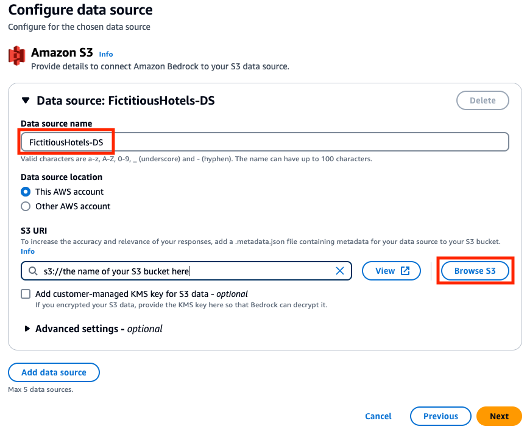

- For Data source name, enter a name.

- Choose Browse S3 and navigate to the S3 bucket where you uploaded the PDF file earlier.

- Choose Next.

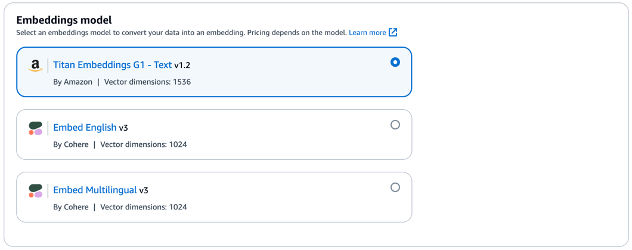

- Choose an embeddings model.

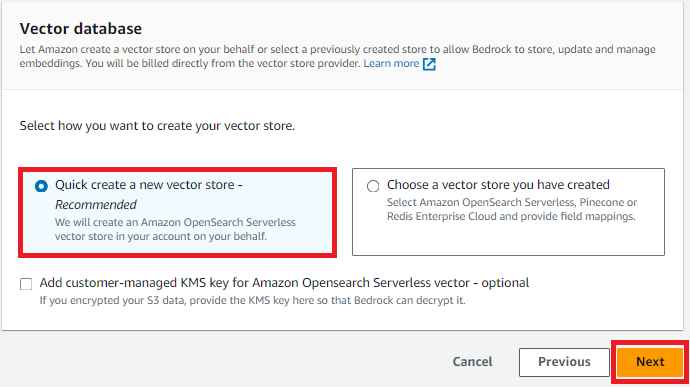

- Select Quick create a new vector store to create a new OpenSearch Serverless vector store for the vectorized content.

- Choose Next.

- Review your settings, then choose Create knowledge base.

After a few minutes, the knowledge base will be created.

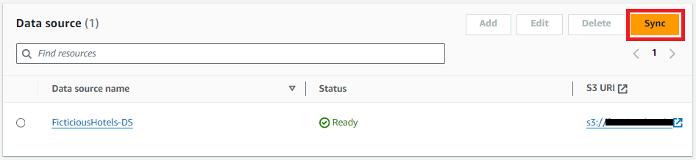

- Choose Sync to chunk the documents, calculate the embeddings, and store them in the vector store.

This may take a while. You can continue with the rest of the steps, but synchronization must complete before you can query the knowledge base.

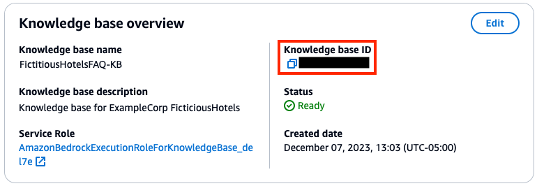

- Copy the knowledge base ID. You will reference it when you add this knowledge base to your Amazon Lex bot.
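Because the sync has to finish before the knowledge base can be queried, it can help to start it and wait for it from a script. This is a rough sketch using the `bedrock-agent` client in boto3. It assumes you also know the data source ID (shown in the console next to the data source, and not covered in this post), and the polling interval and timeout are arbitrary choices.

```python
import time

# Statuses after which an ingestion job will not change any further.
TERMINAL_STATUSES = {"COMPLETE", "FAILED", "STOPPED"}


def wait_for_sync(get_status, poll_seconds=10.0, timeout_seconds=1800.0, sleep=time.sleep):
    """Poll get_status() until the job reaches a terminal status or times out."""
    waited = 0.0
    while True:
        status = get_status()
        if status in TERMINAL_STATUSES:
            return status
        if waited >= timeout_seconds:
            raise TimeoutError(f"sync still {status} after {timeout_seconds} seconds")
        sleep(poll_seconds)
        waited += poll_seconds


def sync_knowledge_base(knowledge_base_id: str, data_source_id: str) -> str:
    """Start an ingestion job (the console's Sync) and wait for it to finish."""
    import boto3  # imported here so wait_for_sync can be tested without AWS access

    agent = boto3.client("bedrock-agent")
    ids = {"knowledgeBaseId": knowledge_base_id, "dataSourceId": data_source_id}
    job_id = agent.start_ingestion_job(**ids)["ingestionJob"]["ingestionJobId"]

    def current_status() -> str:
        job = agent.get_ingestion_job(ingestionJobId=job_id, **ids)
        return job["ingestionJob"]["status"]

    return wait_for_sync(current_status)
```

A return value of `COMPLETE` means the documents were chunked, embedded, and stored; any other terminal status is worth inspecting in the console before you continue.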

Add QnAIntent to the Amazon Lex bot

To add QnAIntent, follow these steps:

- In the Amazon Lex console, choose Bots in the navigation pane.

- Choose your bot.

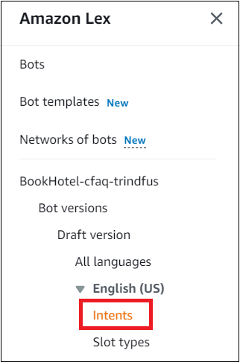

- In the navigation pane, choose Intents.

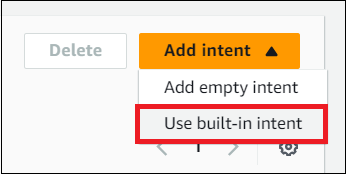

- On the Add intent menu, choose Use built-in intent.

- For Built-in intent, choose AMAZON.QnAIntent.

- For Intent name, enter a name.

- Choose Add.

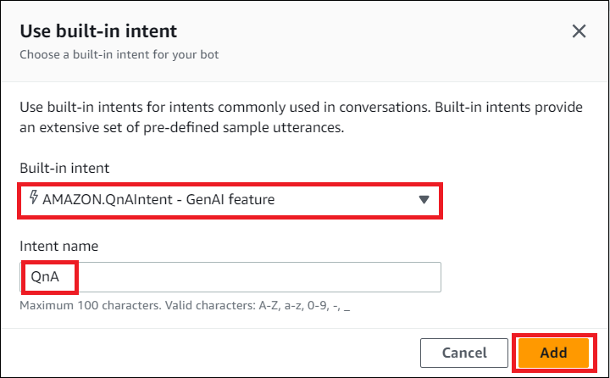

- Choose the model you want to use to generate the responses (in this case, Anthropic Claude 3 Sonnet, but you can select Anthropic Claude 3 Haiku for a cheaper, lower-latency option).

- For Choose knowledge store, select Knowledge base for Amazon Bedrock.

- For Knowledge base for Amazon Bedrock Id, enter the ID you noted earlier when you created your knowledge base.

- Choose Save intent.

- Choose Build to build the bot.

- Choose Test to test the new intent.
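For readers who manage bots through the API, the same intent can probably be described as a `create_intent` request for the `lexv2-models` client. Treat this as a sketch to check against the current boto3 documentation: the nested `qnAIntentConfiguration` field names reflect our reading of the API, the API takes ARNs where the console takes the knowledge base ID, and the Region, account ID, and helper names are placeholders.

```python
# Amazon Bedrock model ID for Anthropic Claude 3 Sonnet.
CLAUDE_3_SONNET = "anthropic.claude-3-sonnet-20240229-v1:0"


def knowledge_base_arn(region: str, account_id: str, knowledge_base_id: str) -> str:
    """Build the knowledge base ARN from the ID copied in the console."""
    return f"arn:aws:bedrock:{region}:{account_id}:knowledge-base/{knowledge_base_id}"


def foundation_model_arn(region: str, model_id: str = CLAUDE_3_SONNET) -> str:
    """Build the ARN of an Amazon Bedrock foundation model."""
    return f"arn:aws:bedrock:{region}::foundation-model/{model_id}"


def qna_intent_request(bot_id: str, intent_name: str, kb_arn: str, model_arn: str) -> dict:
    """Build create_intent arguments for an AMAZON.QnAIntent (field names assumed)."""
    return {
        "botId": bot_id,
        "botVersion": "DRAFT",
        "localeId": "en_US",  # assumption: English (US)
        "intentName": intent_name,
        "parentIntentSignature": "AMAZON.QnAIntent",
        "qnAIntentConfiguration": {
            # Where answers are retrieved from.
            "dataSourceConfiguration": {
                "bedrockKnowledgeStoreConfiguration": {
                    "bedrockKnowledgeBaseArn": kb_arn,
                }
            },
            # Which model writes the response.
            "bedrockModelConfiguration": {"modelArn": model_arn},
        },
    }
```

You would pass the result to `boto3.client("lexv2-models").create_intent(**request)` and then build the bot, as in the console steps.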

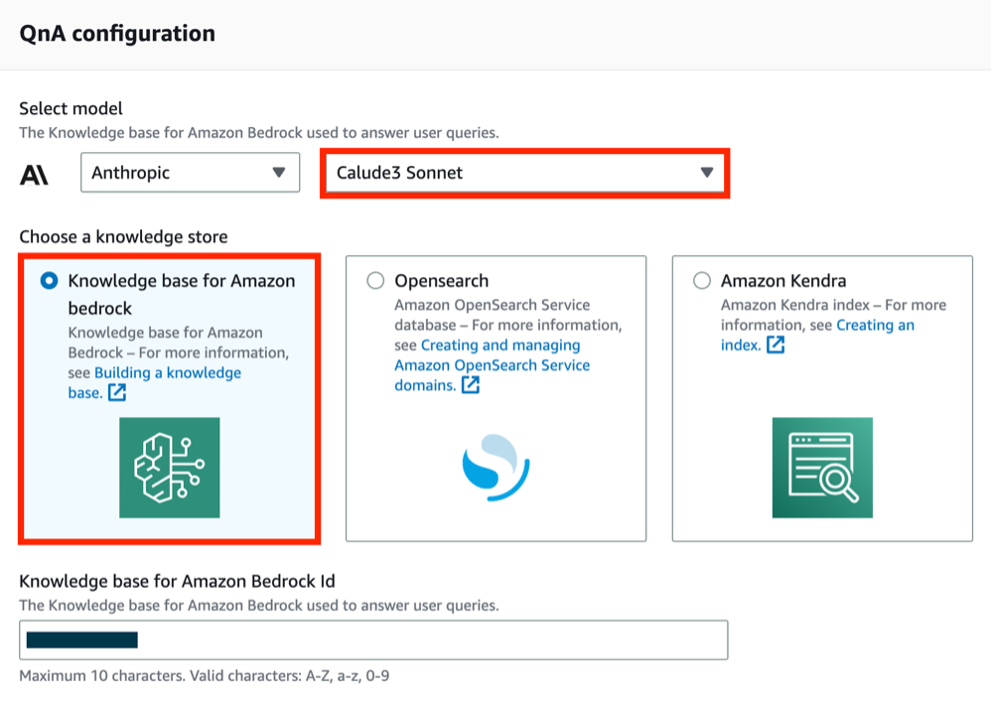

The following screenshot shows an example conversation with the bot.

In the second question about pool hours in Miami, you refer back to the previous question about pool hours in Las Vegas and still get a relevant answer based on your conversation history.

It is also possible to ask questions that require the bot to reason a little about the available data. When we asked about a good resort for a family vacation, the bot recommended the Orlando resort based on availability of kids' activities, proximity to theme parks, and more.
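The same kind of conversation can be driven through the Amazon Lex V2 runtime API. In this sketch, every question in a conversation is sent with the same session ID, on the assumption that this is what lets a follow-up question draw on the earlier exchange. `TSTALIASID` is the test alias that Lex creates for a draft bot; the bot ID, locale, and helper names are placeholders.

```python
import uuid


def ask(lex_runtime, bot_id, session_id, text, alias_id="TSTALIASID", locale_id="en_US"):
    """Send one utterance and return the text of the bot's reply messages."""
    response = lex_runtime.recognize_text(
        botId=bot_id,
        botAliasId=alias_id,
        localeId=locale_id,
        sessionId=session_id,
        text=text,
    )
    return [m["content"] for m in response.get("messages", []) if "content" in m]


def converse(lex_runtime, bot_id, questions):
    """Ask several questions in one session so follow-ups share the same context."""
    session_id = uuid.uuid4().hex  # one session for the whole conversation
    return [ask(lex_runtime, bot_id, session_id, question) for question in questions]
```

With a real client, this might be called as `converse(boto3.client("lexv2-runtime"), bot_id, ["What are the pool hours in Las Vegas?", "What about Miami?"])`; the exact question wording here is only illustrative.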

Update the confidence threshold

Some questions may accidentally match your other intents. If you encounter this, you can adjust your bot's confidence threshold. To modify this setting, choose the language of your bot (English) and in the Language details section, choose Edit.

After updating the confidence threshold, rebuild the bot for the change to take effect.
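If you find yourself tuning this value repeatedly, the update and rebuild can be combined in one helper. The sketch below takes a `lexv2-models` client as an argument and assumes the draft version of an `en_US` locale; whether any extra wait is needed between the update and the build is not something this post covers, so treat the sequence as a starting point.

```python
def apply_confidence_threshold(lex, bot_id: str, threshold: float, locale_id: str = "en_US") -> None:
    """Update the intent confidence threshold, then rebuild so it takes effect."""
    if not 0.0 <= threshold <= 1.0:
        raise ValueError("threshold must be between 0 and 1")
    target = {"botId": bot_id, "botVersion": "DRAFT", "localeId": locale_id}
    lex.update_bot_locale(nluIntentConfidenceThreshold=threshold, **target)
    # The new threshold is only used after the locale has been rebuilt.
    lex.build_bot_locale(**target)
    lex.get_waiter("bot_locale_built").wait(**target)
```

A call such as `apply_confidence_threshold(boto3.client("lexv2-models"), bot_id, 0.7)` would lower the threshold slightly and return once the build has finished.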

Add additional steps

By default, the next step in the bot conversation is set to Wait for user input after having answered a question. This keeps the conversation going in the bot and allows the user to ask follow-up questions or invoke any of the other intents in your bot.

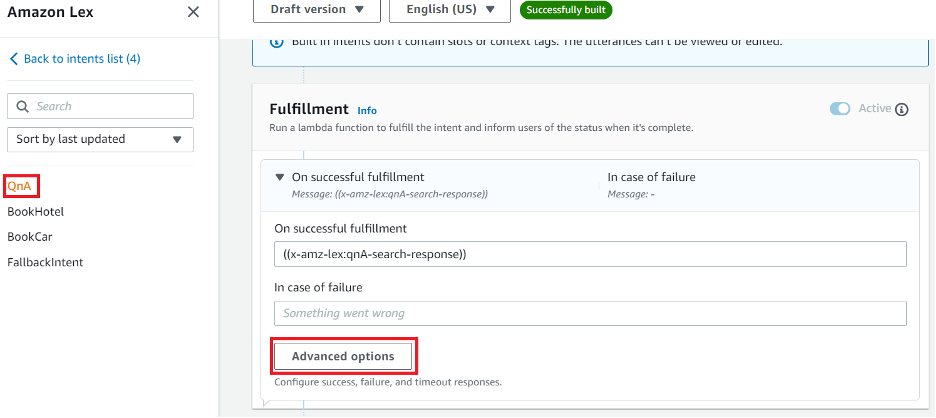

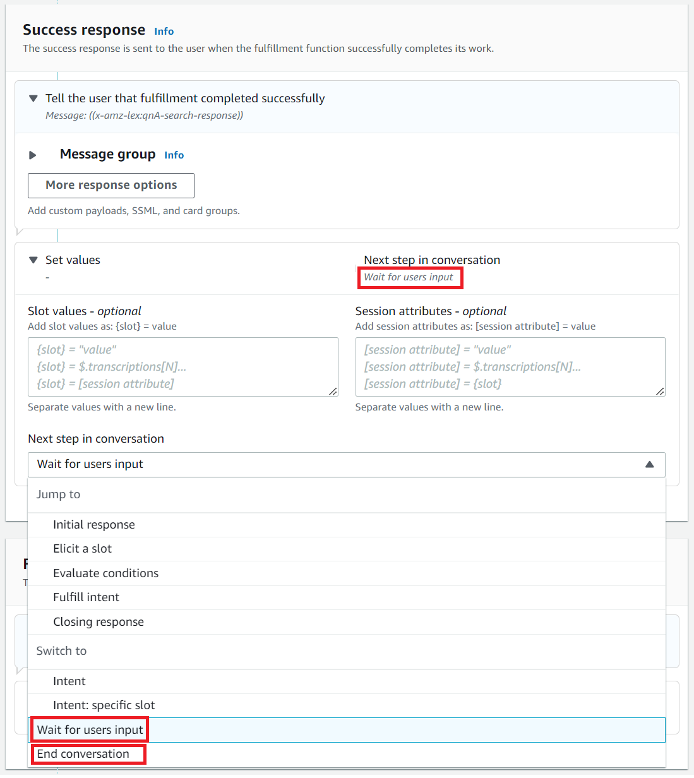

If you want the conversation to end and return control to the calling application (for example, Amazon Connect), you can change this behavior to End conversation. To update the settings, complete the following steps:

- In the Amazon Lex console, navigate to QnAIntent.

- In the Fulfillment section, choose Advanced options.

- On the Next step in conversation dropdown menu, choose End conversation.

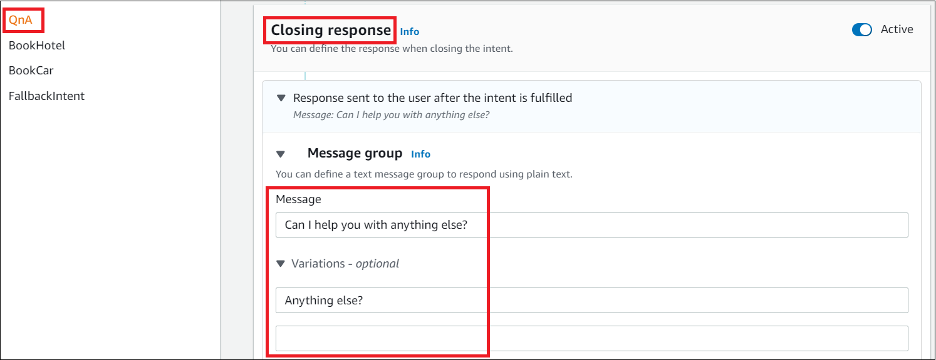

If you want the bot to add a specific message after each QnAIntent response (such as “Can I help you with anything else?”), you can add a closing response to QnAIntent.

Clean up

To avoid incurring ongoing costs, delete the resources you created as part of this post:

- Amazon Lex bot

- S3 bucket

- OpenSearch Serverless Collection (this is not automatically deleted when you delete your knowledge base)

- Knowledge bases
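The cleanup can be scripted as an ordered list of delete calls. This sketch assumes you have recorded the bot ID, knowledge base ID, and OpenSearch Serverless collection ID, and that the bucket holds only the uploaded PDF (a bucket must be empty before it can be deleted). The ordering and the helper names are our own choices; any other resources created on your behalf, such as IAM roles, are not covered here.

```python
def cleanup_plan(bot_id, knowledge_base_id, collection_id, bucket, object_key):
    """Return the delete calls as (boto3 service, operation, arguments) tuples."""
    return [
        ("lexv2-models", "delete_bot", {"botId": bot_id, "skipResourceInUseCheck": True}),
        ("bedrock-agent", "delete_knowledge_base", {"knowledgeBaseId": knowledge_base_id}),
        # The collection is not removed together with the knowledge base.
        ("opensearchserverless", "delete_collection", {"id": collection_id}),
        ("s3", "delete_object", {"Bucket": bucket, "Key": object_key}),
        ("s3", "delete_bucket", {"Bucket": bucket}),
    ]


def run_plan(plan, make_client):
    """Execute each step, creating a client per service with make_client."""
    for service, operation, arguments in plan:
        getattr(make_client(service), operation)(**arguments)
```

Running `run_plan(cleanup_plan(...), boto3.client)` performs the deletions; printing the plan first is a cheap way to confirm the IDs before anything is removed.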

Conclusion

The new QnAIntent in Amazon Lex enables natural conversations by connecting customers to curated knowledge sources. Powered by Amazon Bedrock, QnAIntent understands natural language questions and answers conversationally, keeping customers engaged with contextual follow-up responses.

QnAIntent brings the latest innovations to you to transform static FAQs into fluid dialogues that solve customer needs. This helps scale excellent self-service to delight customers.

Try it yourself. Reinvent your customer experience!

About the Author

Thomas Rinfuss is a Senior Solutions Architect on the Amazon Lex team. He invents, develops, prototypes, and evangelizes new features and technical solutions for Language AI services that improve customer experience and facilitate adoption.