Document understanding is a field focused on converting documents into meaningful information. It involves reading and interpreting text as well as understanding the layout, non-textual elements, and style of a document. The ability to understand spatial layout, visual cues, and textual semantics is essential to accurately extract and interpret information from documents. The field has gained importance with the advent of large language models (LLMs) and the growing use of document images in various applications.

The main challenge addressed in this research is the effective extraction of information from documents that combine textual and visual elements. Traditional text-only models often struggle to interpret spatial arrangements and visual elements, resulting in incomplete or inaccurate understanding. This limitation is particularly evident in tasks such as Document Visual Question Answering (DocVQA), where understanding context requires seamlessly integrating visual and textual information.

Existing methods for document understanding typically rely on optical character recognition (OCR) engines to extract text from images. However, these methods are limited in their ability to incorporate visual cues and the spatial arrangement of text, which are crucial for comprehensive document understanding. For example, on DocVQA, text-only models perform significantly worse than models that can process both text and images. The research highlights the need for models that integrate these elements to improve accuracy and performance.

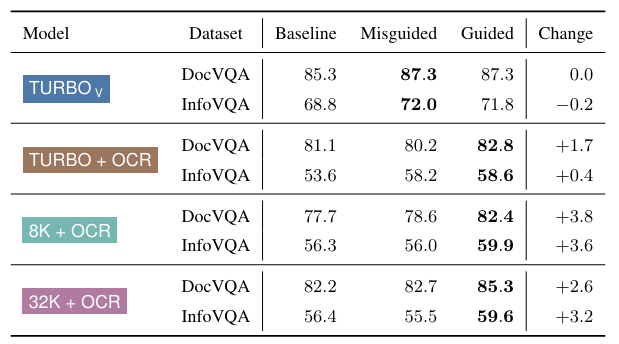

Snowflake researchers evaluated several configurations of GPT-4 models, including ones that combine the output of external OCR engines with document images. This approach aims to improve document understanding by pairing OCR-recognized text with visual input, allowing models to process both types of information at once. The study examined different versions of GPT-4, such as GPT-4 Vision Turbo (TURBO V), which supports high-resolution images and large context windows of up to 128k tokens, allowing it to handle complex documents more effectively.
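To make the idea of pairing OCR text with the page image concrete, here is a minimal sketch of how such an input could be assembled. The function name `build_docvqa_message` and the prompt wording are hypothetical; the article does not give the prompt used in the study. The message layout assumes the multi-part chat format used by OpenAI vision-capable models, and no API call is made.

```python
import base64


def build_docvqa_message(question, ocr_text, image_bytes, mime="image/png"):
    """Return one user message carrying the OCR text, the question, and the page image.

    Hypothetical helper: the prompt wording is illustrative, not the study's.
    """
    # Images are embedded inline as a base64 data URL.
    image_b64 = base64.b64encode(image_bytes).decode("ascii")
    prompt = (
        "Answer the question using the document image and its OCR text.\n\n"
        f"OCR text:\n{ocr_text}\n\n"
        f"Question: {question}"
    )
    return {
        "role": "user",
        "content": [
            # Part 1: OCR-recognized text plus the question.
            {"type": "text", "text": prompt},
            # Part 2: the document image itself (assumed message layout).
            {
                "type": "image_url",
                "image_url": {
                    "url": f"data:{mime};base64,{image_b64}",
                    "detail": "high",
                },
            },
        ],
    }
```

A text-only baseline would simply omit the second content part, which is the comparison the study draws.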

The proposed method was evaluated on several datasets, including DocVQA, InfographicsVQA, SlideVQA, and DUDE. These datasets cover many types of documents, from text-intensive to vision-intensive and multi-page documents. The results showed significant improvements in performance, particularly when both text and images were used. For example, the GPT-4 Vision Turbo model achieved an ANLS score of 87.4 on DocVQA and 71.9 on InfographicsVQA when both OCR text and document images were provided as input. These scores are notably higher than those achieved by text-only models, highlighting the importance of integrating visual information for accurate document understanding.
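For readers unfamiliar with the metric, ANLS (Average Normalized Levenshtein Similarity) gives partial credit for near-miss answers. The sketch below follows the commonly used definition, assuming a threshold of 0.5 and case-insensitive matching; the exact normalization applied in the study is not described in this article, and reported scores are this value multiplied by 100.

```python
def levenshtein(a, b):
    """Edit distance between two strings (insertions, deletions, substitutions)."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(prev[j] + 1, curr[j - 1] + 1, prev[j - 1] + cost))
        prev = curr
    return prev[-1]


def anls(predictions, references, threshold=0.5):
    """Mean over questions of the best similarity against any reference answer.

    A similarity counts only if the normalized distance is below `threshold`;
    otherwise the question scores 0.
    """
    total = 0.0
    for pred, refs in zip(predictions, references):
        best = 0.0
        p = pred.strip().lower()
        for ref in refs:
            r = ref.strip().lower()
            longest = max(len(p), len(r))
            nl = levenshtein(p, r) / longest if longest else 0.0
            score = 1.0 - nl if nl < threshold else 0.0
            best = max(best, score)
        total += best
    return total / len(predictions) if predictions else 0.0
```

Under this definition an answer with a small OCR-style typo still earns most of the credit, while an unrelated answer earns none.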

The research also provided a detailed analysis of the model's performance on different types of input evidence. For example, the study found that OCR-provided text significantly improved results for free text, forms, lists, and tables in DocVQA. In contrast, the improvement was less pronounced for figures or images, indicating that the model benefits more from OCR text when the evidence is structured and text-rich. The analysis also revealed a primacy bias: the model performed best when the relevant information was placed at the beginning of the input document.

Further evaluation showed that the GPT-4 Vision Turbo model outperformed heavier text-only models on most tasks. The best performance was achieved with high-resolution images (2048 pixels on the longest side) combined with OCR text. For example, on the SlideVQA dataset, the model scored 64.7 with high-resolution images, higher than its scores with lower-resolution images. This highlights the importance of image quality and OCR accuracy in improving document understanding performance.
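As a small worked example of the "2048 pixels on the longest side" setting, the sketch below computes target dimensions while preserving aspect ratio. The helper name `fit_longest_side` is hypothetical, and the choice to leave smaller images untouched is an assumption; the article does not say whether the study upscaled small images.

```python
def fit_longest_side(width, height, max_side=2048):
    """Return (new_width, new_height) so the longest side is at most `max_side`.

    Aspect ratio is preserved. Images already within the limit are returned
    unchanged (an assumption, not something the article specifies).
    """
    if width <= 0 or height <= 0:
        raise ValueError("width and height must be positive")
    longest = max(width, height)
    if longest <= max_side:
        return width, height
    new_w = max(1, round(width * max_side / longest))
    new_h = max(1, round(height * max_side / longest))
    return new_w, new_h
```

For instance, a 3000 x 4000 page scan maps to 1536 x 2048, and the resulting pair can be passed to any image library's resize call.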

In conclusion, the research advanced document understanding by demonstrating the effectiveness of integrating OCR-recognized text with document images. The GPT-4 Vision Turbo model performed strongly across multiple datasets and achieved state-of-the-art results on tasks requiring both textual and visual understanding. This approach addresses the limitations of text-only models and provides a more complete understanding of documents. The findings highlight the potential to improve accuracy in the interpretation of complex documents, paving the way for more effective and reliable document understanding systems.

Check out the Paper. All credit for this research goes to the researchers of this project.

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of artificial intelligence for social good. His most recent endeavor is the launch of an AI media platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is technically sound and easily understandable to a wide audience. The platform has more than 2 million monthly visits, which illustrates its popularity among the public.
