Transformer models have significantly advanced machine learning, particularly on complex tasks such as natural language processing and arithmetic operations like addition and multiplication. These tasks demand both efficiency and accuracy. Researchers aim to improve these models' ability to perform complex multi-step reasoning, especially in arithmetic, where tracking the position of each digit in a long sequence is crucial.

The main challenge for transformer models lies in multi-step reasoning tasks such as adding and multiplying large numbers. This difficulty stems primarily from the need to track digit positions accurately within long sequences, which is essential for performing arithmetic correctly. Standard models often fail to preserve this positional information, which leads to errors in calculations involving large numbers.

Existing methods incorporate positional embeddings, which help transformers understand where each digit sits within a sequence. These embeddings have improved model performance but still fall short on long sequences. More advanced techniques, such as Functional Interpolation for Relative Position Embeddings (FIRE), have been developed to push these models further, yet they too struggle to generalize to unseen lengths and tasks.

In a recent study, researchers from the University of Maryland, Lawrence Livermore National Laboratory, the Tübingen AI Center, and Carnegie Mellon University introduced a novel method called Abacus Embeddings. This approach significantly improves a transformer's ability to track the position of each digit within a number. Abacus Embeddings assign the same positional embedding to all digits of the same significance (for example, every units digit or every tens digit), allowing the model to align digits correctly.
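To make the idea concrete, here is a minimal Python sketch of how Abacus-style position IDs could be computed for a tokenized problem. It assumes one token per digit and that numbers are written least-significant digit first, so that index 1 always marks the units digit. The function name `abacus_position_ids` and the `offset` parameter (a hypothetical knob modeling a random shift of the starting index during training) are illustrative assumptions, not the authors' code.

```python
from typing import List


def abacus_position_ids(tokens: List[str], offset: int = 0) -> List[int]:
    """Give each digit token its 1-based index within its own number.

    Non-digit tokens (operators, '=', padding) get position 0.
    `offset` is a hypothetical parameter that shifts every digit index,
    modeling a random starting offset during training.
    """
    ids: List[int] = []
    run = 0  # index of the current digit inside the current number
    for tok in tokens:
        if len(tok) == 1 and tok.isdigit():
            run += 1
            ids.append(run + offset)
        else:
            run = 0  # a non-digit token ends the current number
            ids.append(0)
    return ids


# 345 + 678 written least-significant digit first, one token per character.
print(abacus_position_ids(list("543+876=")))  # [1, 2, 3, 0, 1, 2, 3, 0]
```

Here the units digits 5 and 8 share index 1, the tens digits share index 2, and so on. In a model, IDs like these would index a learned embedding table whose vectors are added to the token embeddings, so digits of equal significance receive identical positional signals regardless of where each number starts in the sequence.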

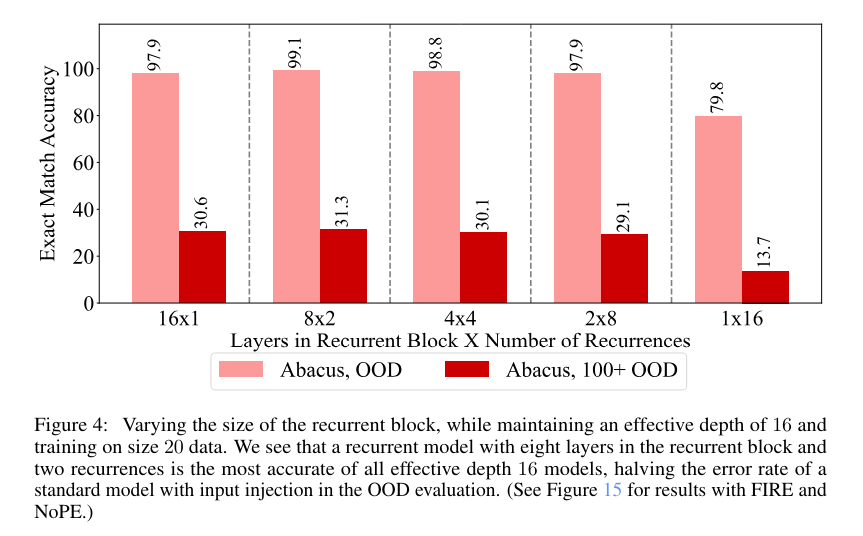

The Abacus Embeddings technique can be combined with input injection and looped transformer architectures. Because the embeddings encode each digit's position within its number, the model can perform arithmetic more accurately. For example, the researchers trained transformer models on addition problems with operands of up to 20 digits and achieved up to 99% accuracy on 100-digit addition problems. This is state-of-the-art performance that significantly outperforms previous methods.
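Input injection and looping can also be sketched schematically. In the minimal, dependency-free Python sketch below, one shared block is applied repeatedly, and the original input embedding is added back before every pass so that later iterations keep direct access to the input. `looped_forward`, `toy_block`, the zero initial state, and the elementwise `tanh` standing in for real transformer layers are all illustrative assumptions, not the paper's architecture.

```python
import math
from typing import Callable, List

Vector = List[float]


def looped_forward(x_emb: Vector, block: Callable[[Vector], Vector], n_loops: int) -> Vector:
    """Apply one shared block n_loops times with input injection."""
    h = [0.0] * len(x_emb)  # assumption: the recurrent state starts at zero
    for _ in range(n_loops):
        # Input injection: add the original input embedding back in before every pass.
        injected = [hi + xi for hi, xi in zip(h, x_emb)]
        h = block(injected)  # the same block (same weights) is reused on every loop
    return h


def toy_block(v: Vector) -> Vector:
    """Hypothetical stand-in for a stack of transformer layers: elementwise tanh(0.5 * v)."""
    return [math.tanh(0.5 * a) for a in v]


if __name__ == "__main__":
    x = [2.0, -1.0, 0.5]
    for loops in (1, 2, 4):
        print(loops, looped_forward(x, toy_block, loops))
```

Raising `n_loops` adds effective depth without adding parameters, since the same block is reused; that weight sharing is what distinguishes a looped model from simply stacking more distinct layers.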

The performance improvements from Abacus Embeddings are not limited to addition. The method also yielded notable gains on other algorithmic tasks, such as multiplication and sorting. The study found that models trained with Abacus Embeddings could generalize to multiplication problems involving numbers of up to 15 digits and to sorting tasks with arrays of up to 30 numbers, each with up to 30 digits. This demonstrates the versatility of the approach across a range of complex tasks.

The results were impressive, with near-perfect accuracy in many cases. For example, models using Abacus Embeddings combined with input injection achieved 99.1% accuracy on out-of-distribution tasks, an 87% reduction in error compared to standard architectures. This level of performance underscores the potential of Abacus Embeddings to change how transformer models handle arithmetic and other algorithmic reasoning tasks.
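To put the 87% figure in perspective, the arithmetic below back-calculates the baseline error it implies. It assumes the 87% is a relative reduction in error rate measured on the same out-of-distribution benchmark, and both figures are rounded, so the resulting baseline is an approximate inference rather than a number reported in the study.

```latex
\begin{aligned}
e_{\text{new}} &= 100\% - 99.1\% = 0.9\% \\
e_{\text{new}} &= (1 - 0.87)\, e_{\text{base}}
  \;\Rightarrow\; e_{\text{base}} = \frac{0.9\%}{0.13} \approx 6.9\% \\
\text{implied baseline accuracy} &\approx 100\% - 6.9\% \approx 93.1\%
\end{aligned}
```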

In conclusion, the research shows how Abacus Embeddings improve the capabilities of transformer models. The method addresses a critical challenge in multi-step reasoning, namely tracking the positional information of digits within long sequences, and yields substantial gains in accuracy and generalization. The approach paves the way for further advances in this field, potentially extending to more complex and varied tasks beyond basic arithmetic, and gives researchers a foundation for improving transformer performance across a wide range of computational problems.

Check out the Paper and GitHub. All credit for this research goes to the researchers of this project.

Aswin AK is a consulting intern at MarkTechPost. He is pursuing a dual degree at the Indian Institute of Technology Kharagpur. He is passionate about data science and machine learning, and brings a strong academic background and practical experience solving real-life interdisciplinary challenges.
