Introduction

In our digital age, where information is predominantly shared in electronic formats, PDF files serve as a crucial medium. However, the data they contain, especially images, often remains underutilized due to format limitations. This blog post presents an approach that not only extracts data from PDF files but also makes that data more useful. Using Python and AI tooling, we will demonstrate how to extract images from PDF files and interact with them using the LLaVA model through the LangChain library. This method opens new avenues for data interaction, improving our ability to analyze and use information stored in PDF files.

Learning objectives

- Extract and categorize elements from PDF files using the unstructured library.

- Set up a Python environment for PDF data extraction and interaction with AI.

- Isolate and convert PDF images to base64 format for AI analysis.

- Use LLaVA through LangChain to analyze and interact with PDF images.

- Integrate conversational AI into applications to improve data utility.

- Explore practical applications of AI-based PDF content analysis.

This article was published as part of the Data Science Blogathon.

Set up the environment

The first step in transforming PDF content is preparing your computing environment with the essential software tools. This setup is needed to handle and extract unstructured data from PDF files efficiently.

!pip install "unstructured[all-docs]" unstructured-client

Installing these packages equips your Python environment with the unstructured library, a powerful tool for dissecting and extracting various elements from PDF documents.
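Before moving on, it can help to confirm that the packages are importable in the current environment. The following is a minimal sketch using only the standard library; the helper name `missing_packages` is hypothetical, and the list of top-level module names is an assumption based on the packages installed in this article.

```python
from importlib.util import find_spec


def missing_packages(names):
    """Return the top-level module names from `names` that cannot be found."""
    return [name for name in names if find_spec(name) is None]


# Assumed top-level import names for the packages used in this article.
required = ["unstructured", "langchain", "langchain_community"]
print("Missing packages:", missing_packages(required))
```

An empty list means every module was found; any name that is printed still needs to be installed.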

The data extraction process begins by splitting the PDF into individual, manageable elements. Using the unstructured library, you can easily split a PDF into different elements, including text and images. The function partition_pdf from the unstructured.partition.pdf module is essential here.

from unstructured.partition.pdf import partition_pdf

# Specify the path to your PDF file
filename = "data/gpt4all.pdf"

# Directory where the extracted images are saved
path = "images"

# Extract elements from the PDF
raw_pdf_elements = partition_pdf(
    filename=filename,
    # Unstructured first finds embedded image blocks
    # Only applicable if `strategy=hi_res`
    extract_images_in_pdf=True,
    strategy="hi_res",
    infer_table_structure=True,
    # Only applicable if `strategy=hi_res`
    extract_image_block_output_dir=path,
)

This function returns a list of the elements found in the PDF. Each element can be text, an image, or another type of content embedded in the document. The extracted images are saved in the 'images' folder.
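To check what was written to disk, we can list the image files in the output folder. This is a minimal sketch using only the standard library; the helper name `list_extracted_images` and the set of file extensions are assumptions, and the actual file names depend on the unstructured version you run.

```python
from pathlib import Path


def list_extracted_images(directory, extensions=(".jpg", ".jpeg", ".png")):
    """Return the image files found directly inside `directory`, sorted by path."""
    folder = Path(directory)
    if not folder.is_dir():
        # Nothing has been extracted yet, or the path is wrong.
        return []
    return sorted(
        p for p in folder.iterdir()
        if p.is_file() and p.suffix.lower() in extensions
    )


# "images" is the output directory used in the extraction step above.
for image_path in list_extracted_images("images"):
    print(image_path.name)
```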

Identify and extract images

Once we have identified all the elements within the PDF, the next crucial step is to isolate the images for further interaction:

images = [el for el in raw_pdf_elements if el.category == "Image"]

This list now contains all image elements extracted from the PDF, which can be further processed or analyzed.

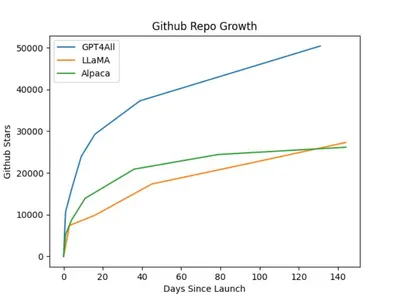

Below are the extracted images:

Code to display images in notebook file:

This simple but effective line of code filters images from a combination of different elements, setting the stage for more sophisticated data handling and analysis.
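The same filtering idea extends to any category. The sketch below tallies elements by category and selects one category at a time. It uses a hypothetical `FakeElement` stand-in that exposes only a `category` attribute, so it runs without a PDF; with real data you would pass the list returned by partition_pdf instead. The helper names are assumptions, not part of the unstructured API.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class FakeElement:
    """Hypothetical stand-in exposing only the attributes used here."""
    category: str
    text: str = ""


def count_categories(elements):
    """Tally how many elements fall into each category."""
    return Counter(el.category for el in elements)


def select_category(elements, category):
    """Return only the elements whose category matches `category`."""
    return [el for el in elements if el.category == category]


sample = [
    FakeElement("Title", "GPT4All"),
    FakeElement("NarrativeText", "Some paragraph text"),
    FakeElement("Image"),
    FakeElement("Image"),
    FakeElement("Table"),
]

print(count_categories(sample))
print(len(select_category(sample, "Image")), "image elements")
```

Looking at the category counts first is a quick way to see whether the extraction found any images at all before trying to process them.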

Conversational AI with LLaVA and LangChain

Installation and configuration

To interact with the extracted images, we use AI tooling. Installing langchain and its community package is required for AI-powered dialogues with images.

Please check the link to configure LLaVA and Ollama in detail. Additionally, install the following packages.

!pip install langchain langchain_core langchain_community

This installation provides the essential tools to integrate conversational AI capabilities into our application.

Convert saved images to base64:

To make images usable by AI models, we convert them to a format the models can accept: base64 strings.

import base64
from io import BytesIO

from IPython.display import HTML, display
from PIL import Image


def convert_to_base64(pil_image):
    """
    Convert a PIL image to a Base64-encoded string

    :param pil_image: PIL image
    :return: Base64 string
    """
    buffered = BytesIO()
    pil_image.save(buffered, format="JPEG")  # You can change the format if needed
    img_str = base64.b64encode(buffered.getvalue()).decode("utf-8")
    return img_str


def plt_img_base64(img_base64):
    """
    Display a Base64-encoded string as an image

    :param img_base64: Base64 string
    """
    # Create an HTML img tag with the base64 string as the source
    image_html = f'<img src="data:image/jpeg;base64,{img_base64}" />'
    # Display the image by rendering the HTML
    display(HTML(image_html))


file_path = "./images/figure2.jpg"
pil_image = Image.open(file_path)
image_b64 = convert_to_base64(pil_image)
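Base64 encoding is lossless, so the encoded string can always be decoded back to the original bytes. The sketch below shows the round trip and the data URI format used by an HTML img tag, using only the standard library. The helper names are hypothetical, and the sample bytes are placeholders rather than a real image.

```python
import base64


def bytes_to_base64(data: bytes) -> str:
    """Encode raw bytes as a Base64 text string."""
    return base64.b64encode(data).decode("utf-8")


def base64_to_bytes(encoded: str) -> bytes:
    """Decode a Base64 text string back to the original bytes."""
    return base64.b64decode(encoded)


def to_data_uri(encoded: str, mime_type: str = "image/jpeg") -> str:
    """Wrap a Base64 string in a data URI that an img tag can use as its source."""
    return f"data:{mime_type};base64,{encoded}"


# Placeholder bytes standing in for the contents of an image file.
raw = b"\xff\xd8\xff\xe0 sample bytes, not a real image"
encoded = bytes_to_base64(raw)

print("Round trip matches:", base64_to_bytes(encoded) == raw)
print(to_data_uri(encoded)[:40])
```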

Analyzing images with LLaVA and Ollama via LangChain

LLaVA is an open-source chatbot trained by fine-tuning LLaMA/Vicuna on GPT-generated multimodal instruction-following data. It is an autoregressive language model based on the transformer architecture. In other words, it is a multimodal version of an LLM fine-tuned for chat and instruction following.

Images converted to a suitable format (base64 strings) can be used as context for LLaVA to provide descriptions or other relevant information.

from langchain_community.llms import Ollama

llm = Ollama(model="llava:7b")

# Use LLaVA to interpret the image; `images` takes a list of base64 strings
llm_with_image_context = llm.bind(images=[image_b64])
response = llm_with_image_context.invoke("Explain the image")

Output:

' The image is a graph showing the growth of GitHub repositories over time. The graph includes three lines, each representing different types of repositories:\n\n1. Lama – This line represents a single repository called “Lama”, which appears to be growing steadily over the indicated period, starting at 0 and rising to just under 5.00 at the end of the time period shown on the chart. \n\n2. Alpaca: Similar to the Lama repository, this line also represents a single repository called “Alpaca”. It also starts at 0 but grows faster than Lama, reaching approximately 75.00 by the end of the period.\n\n3. All repositories (average): This line represents an average growth rate across all repositories on GitHub. It shows a gradual increase in the number of repositories over time, with less variability than the other two lines.\n\nThe graph is marked with a timestamp running from the start to the end of the data, which is not explicitly labeled. The vertical axis represents the number of repositories, while the horizontal axis indicates time.\n\nAdditionally, there are some annotations in the image:\n\n- “GitHub repository growth” suggests that this graph illustrates the growth of the repositories on GitHub.\n- “Lama, Alpaca, all repositories (average)” labels each line to indicate which set of repositories it represents.\n- “100s”, “1k”, “10k”, “100k” and “1M” are milestones marked on the graph, indicating the number of repositories at specific times.\n\nThe GitHub source code is not visible in the image, but could be an important aspect to consider when analyzing this graph. The growth trend shown suggests that the number of new repositories being created or contributed to is increasing over time on this platform. '

This integration allows the model to “see” the image and provide information, descriptions or answer questions related to the content of the image.
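For readers who want to see what such a request looks like without LangChain, here is a minimal sketch that builds a request body for Ollama's REST endpoint `/api/generate`, which accepts base64 images in an `images` list. It assumes an Ollama server running locally on its default port (11434) with the llava:7b model already pulled. The helper names `build_generate_payload` and `ask_ollama` are hypothetical, and only the payload is built here; nothing is sent unless you call `ask_ollama` yourself.

```python
import json
import urllib.request


def build_generate_payload(model, prompt, images_b64):
    """Build the JSON body for a single, non-streaming generate request."""
    return {
        "model": model,
        "prompt": prompt,
        "images": list(images_b64),  # base64 strings, without a data URI prefix
        "stream": False,
    }


def ask_ollama(payload, url="http://localhost:11434/api/generate"):
    """Send the payload to a local Ollama server (assumed to be running)."""
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as reply:
        return json.loads(reply.read())["response"]


# Placeholder base64 string; in practice pass the encoded image from above.
payload = build_generate_payload("llava:7b", "Explain the image", ["aGVsbG8="])
print(sorted(payload))
```

Keeping payload construction separate from the network call makes the request easy to inspect and reuse with different prompts for the same image.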

Conclusion

The ability to extract images from PDF files and then use AI to interact with these images opens up numerous possibilities for data analysis, content management, and automated processing. The techniques described here leverage powerful AI libraries and models to effectively handle and interpret unstructured data.

Key takeaways

- Efficient extraction: The unstructured library provides a convenient way to extract and categorize different elements within PDF documents.

- Advanced AI interaction: Converting images to a suitable format and using models like LLaVA can enable sophisticated AI-powered interactions with document content.

- Broad applications: These capabilities are applicable in various fields, from automated document processing to AI-based content analysis.

The media shown in this article is not the property of Analytics Vidhya and is used at the author's discretion.

Frequently Asked Questions

A. The unstructured library is designed to handle many elements embedded in PDF documents. Specifically, you can extract:

a. Text: Any textual content, including paragraphs, headers, footers, and annotations.

b. Images: Images embedded in the PDF, including photographs, graphs and diagrams.

c. Tables: Structured data presented in tabular format.

This versatility makes the unstructured library a powerful and comprehensive PDF data extraction tool.

A. LLaVA, a conversational AI model, requires images to be converted to a format it can process, typically base64-encoded strings. Once the images have been encoded:

a. Description generation: LLaVA can describe the content of the image in natural language.

b. Question answering: LLaVA can answer questions about the image, providing insights or explanations based on its visual content.

c. Contextual analysis: LLaVA can integrate image context into broader conversational interactions, improving understanding of complex documents that combine text and visual elements.

A. Yes, there are several factors that can affect the quality of images extracted from PDF files:

a. Original image quality: The resolution and clarity of the original images in the PDF.

b. PDF compression: Some PDF files use compression techniques that can reduce image quality.

c. Extraction settings: The settings used in the unstructured library (e.g. strategy="hi_res" for high-resolution extraction) can affect the quality.

d. File format: The format in which images are saved after extraction (e.g. JPEG, PNG) can affect the fidelity of the extracted images.

A. Yes, you can use other AI models besides LLaVA for image interaction. Below are some alternative models that work with images and text:

a. CLIP (Contrastive Language-Image Pre-training) by OpenAI: CLIP is a versatile model that relates images to their textual descriptions. It can match images to candidate captions, classify images, and retrieve images based on textual queries.

b. DALL-E by OpenAI: DALL-E generates images from textual descriptions. While it is primarily used to create images from text, it can also provide detailed descriptions of images based on its understanding.

c. VisualGPT: This variant of GPT-3 integrates image understanding capabilities, allowing it to generate descriptive text based on images.

d. Florence by Microsoft: Florence is a multimodal model for image and text understanding. It can perform tasks such as image captioning, object detection, and answering questions about images.

These models, like LLaVA, enable sophisticated interactions with images by providing descriptions, answering questions, and performing analysis based on visual content.

A. Basic programming knowledge, particularly in Python, is essential to implement these solutions effectively. Key skills include:

a. Environment configuration: Installing the necessary libraries and configuring the environment.

b. Writing and running code: Using Python to write data extraction and interaction scripts.

c. Understanding AI models: Integrating and using AI models such as LLaVA or others.

d. Debugging: Troubleshooting and resolving issues that may arise during implementation.

While some familiarity with programming is required, the process can be simplified with clear documentation and examples, making it accessible to those with fundamental coding skills.