Large image-to-video (I2V) models have shown strong generalization in their recent successes. Although these models can synthesize intricate dynamic scenes after training on millions of videos, they lack one crucial form of control. Users often want to generate the frames between two image endpoints, that is, the frames that lie between two images even when those images were captured at very different times or places. This kind of in-betweening under sparse endpoint constraints is known as bounded generation. Current I2V models cannot perform bounded generation because they have no way to steer a trajectory toward a precise destination. The goal is therefore to generate videos that reproduce both camera motion and object motion without assuming anything about the direction of the motion.

Researchers at the Max Planck Institute for Intelligent Systems, Adobe, and the University of California introduced training-free bounded generation for image-to-video (I2V) diffusion models, defined here as generation that uses both a start frame and an end frame as context. The researchers focus primarily on Stable Video Diffusion (SVD), an unbounded video generation model that has demonstrated remarkable realism and generalizability. In principle, bounded generation could be achieved by fine-tuning the model on paired data, but doing so would undermine its ability to generalize. This work therefore focuses on methods that require no training. The team first considers two simple alternatives for training-free bounded generation: inpainting and condition modification.

Time Reversal Fusion (TRF) is a novel sampling strategy that gives I2V models the ability to perform bounded generation. Because TRF requires no training or fine-tuning, it can draw on the generation capabilities already built into an I2V model. The approach is motivated by a limitation of current I2V models: they are trained to generate content along the arrow of time, so they cannot propagate an image condition backward in time to earlier frames. TRF therefore denoises two trajectories, one running forward in time conditioned on the start frame and one running backward in time conditioned on the end frame, and fuses them into a single trajectory.
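The idea of fusing a forward pass with a time-reversed backward pass can be illustrated with a small toy sketch. This is not the researchers' implementation and it does not use SVD: `toy_denoise` is a hypothetical stand-in for a single I2V denoising step, the "frames" are short vectors rather than images, and the linear fusion weights are an assumption chosen only to make the behavior easy to see.

```python
import numpy as np


def toy_denoise(x, cond, strength=0.2):
    """Hypothetical stand-in for one I2V denoising step.

    Pulls every frame toward the conditioning image `cond`, with an
    influence that is strongest at frame 0 and fades over time, mimicking
    a model that only generates forward from its first frame.
    """
    fade = np.linspace(1.0, 0.0, x.shape[0])[:, None]
    return x + strength * fade * (cond - x)


def fuse_bidirectional(start, end, num_frames=8, num_steps=50, seed=0):
    """Toy fusion of a forward pass and a time-reversed backward pass."""
    rng = np.random.default_rng(seed)
    x = rng.standard_normal((num_frames, start.shape[0]))
    # Assumed fusion weights: trust the forward pass near the start frame
    # and the backward pass near the end frame.
    w = np.linspace(1.0, 0.0, num_frames)[:, None]
    for _ in range(num_steps):
        forward = toy_denoise(x, start)
        # Flip time, denoise from the end frame, then flip back.
        backward = toy_denoise(x[::-1], end)[::-1]
        x = w * forward + (1.0 - w) * backward
    return x


start, end = np.zeros(4), np.ones(4)
video = fuse_bidirectional(start, end)
print(video.shape)  # one row per frame
print(video[0], video[-1])
```

In this toy setting the first frame is driven toward the start image and the last frame toward the end image, while the middle frames blend the two passes. A real I2V model would replace `toy_denoise` with its own conditioned denoising step.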

The task becomes harder when both ends of the generated video are constrained. Naive approaches often get trapped in local minima, which leads to abrupt frame transitions. The team addresses this with Noise Re-Injection, a stochastic process that promotes smooth frame transitions. By fusing the bidirectional trajectories without relying on pixel correspondences or motion assumptions, TRF produces videos that reliably end at the bounding frame. Unlike other controllable video generation approaches, the proposed method fully exploits the generalization ability of the original I2V model and does not require training or tuning a control mechanism on curated datasets.
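A rough sketch of how noise re-injection might slot into such a sampling loop is shown below. Everything here is an assumption for illustration: `fused_step` is a hypothetical toy update rather than an SVD call, the linear noise schedule `sigmas` and the `num_reinjections` parameter are invented names, and the article does not specify how the researchers schedule the re-injected noise.

```python
import numpy as np


def fused_step(x, start, end, strength=0.3):
    """Toy fused update (hypothetical): one forward and one time-reversed
    backward 'denoising' step, blended per frame."""
    w = np.linspace(1.0, 0.0, x.shape[0])[:, None]
    forward = x + strength * w * (start - x)
    backward = (x[::-1] + strength * w * (end - x[::-1]))[::-1]
    return w * forward + (1.0 - w) * backward


def sample_with_reinjection(start, end, num_frames=8, num_steps=40,
                            num_reinjections=3, seed=0):
    """Repeat each fused step several times, adding fresh noise in between."""
    rng = np.random.default_rng(seed)
    sigmas = np.linspace(1.0, 0.0, num_steps)  # assumed noise schedule
    x = sigmas[0] * rng.standard_normal((num_frames, start.shape[0]))
    for sigma in sigmas:
        for k in range(num_reinjections):
            x = fused_step(x, start, end)
            if k < num_reinjections - 1:
                # Re-inject noise at the current level so the sample can
                # move away from a poor fusion before the next attempt.
                x = x + sigma * rng.standard_normal(x.shape)
    return x


start, end = np.zeros(4), np.ones(4)
video = sample_with_reinjection(start, end)
print(video.shape)
```

The design point the sketch tries to convey is that the extra randomness is applied inside each noise level rather than only at initialization, which gives the sampler repeated chances to reconcile the two directions before the noise level drops.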

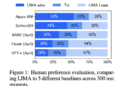

The researchers evaluated videos produced by bounded generation on a dataset of 395 image pairs, each serving as a start and end frame. The images cover a wide variety of content, including kinematic motion of humans and animals, stochastic motion of elements such as fire and water, and multi-view captures of complex static scenes. Beyond enabling a number of previously infeasible downstream tasks, the experiments show that combining large I2V models with bounded generation makes it possible to probe the generated motion and thereby examine the “mental dynamics” of these models.

One limitation of the method is the inherent stochasticity of the forward and backward passes. For any two input images, the distributions of motion paths that SVD considers plausible can differ substantially. As a result, the trajectories generated from the start frame and from the end frame can be drastically different videos, and fusing them yields an unrealistic blend. The proposed approach also inherits some of the shortcomings of SVD. Although SVD generations show a solid grasp of the physical world, they fail to capture concepts such as “common sense” and causal consequence.

All credit for this research goes to the researchers of this project.

Dhanshree Shenwai is a Computer Science Engineer with experience in FinTech companies covering the Finance, Cards & Payments, and Banking domains, and a keen interest in AI applications. Dhanshree is excited to explore new technologies and advancements that make life easier for everyone in today's evolving world.
