Recent advances in multimodal large language models (MLLMs) have transformed several fields by building on the capabilities of large-scale language models like ChatGPT. However, these models are primarily built on Transformer networks, whose computational complexity is quadratic in sequence length, which hampers efficiency. Language-only models (LLMs), meanwhile, have limited adaptability because they rely solely on linguistic interactions. Researchers are addressing this limitation by adding multimodal processing capabilities to LLMs. Vision-language models (VLMs) such as GPT-4, LLaMA-Adapter, and LLaVA augment LLMs with visual understanding, allowing them to handle tasks such as visual question answering (VQA) and image captioning. Efforts to make VLMs more efficient have focused on modifying the parameters of the base language model while keeping the Transformer structure.

Researchers at Westlake University and Zhejiang University have developed Cobra, an MLLM with linear computational complexity. Cobra extends the efficient Mamba language model to the visual modality and explores several fusion schemes to optimize multimodal integration. Extensive experiments show that Cobra outperforms current computationally efficient methods such as LLaVA-Phi and TinyLLaVA, running faster while remaining competitive on challenging prediction benchmarks. Cobra also performs comparably to LLaVA with far fewer parameters, which underlines its efficiency. The researchers plan to release the Cobra code as open source to support future research on the complexity problem in MLLMs.

LLMs have reshaped natural language processing, with models like GLM and LLaMA aiming to rival InstructGPT. While large LLMs excel, effort has also gone into smaller alternatives such as Stable LM and TinyLLaMA, which demonstrate comparable effectiveness. VLMs, including GPT-4V and Flamingo, extend LLMs to process visual data, often by adapting Transformer backbones. However, the Transformer's quadratic complexity limits scalability. Solutions like LLaVA-Phi and MobileVLM offer more efficient approaches. Vision Transformers like ViT and state space models like Mamba provide competitive alternatives, with Mamba scaling linearly with sequence length while remaining competitive with Transformers.
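To make the scaling difference concrete, here is a minimal sketch of a linear-time state space scan next to a count of the token pairs that causal self-attention has to score. It is not Mamba itself: Mamba's selective SSM makes its parameters depend on the input, whereas this toy uses fixed scalar values `a`, `b`, and `c`. The function names are illustrative only.

```python
def ssm_scan(xs, a=0.5, b=1.0, c=1.0):
    """Toy scalar state space recurrence (fixed parameters, an assumption):
    h_t = a * h_{t-1} + b * x_t,  y_t = c * h_t.
    One state update per token, so the cost grows linearly with len(xs)."""
    h = 0.0
    ys = []
    for x in xs:
        h = a * h + b * x
        ys.append(c * h)
    return ys


def causal_attention_pairs(length):
    """Number of (query, key) pairs scored by causal self-attention:
    token t attends to tokens 1..t, giving length * (length + 1) / 2 pairs."""
    return length * (length + 1) // 2


def scan_steps(length):
    """Number of state updates the recurrence above performs."""
    return length


if __name__ == "__main__":
    for n in (1_000, 10_000, 100_000):
        print(n, scan_steps(n), causal_attention_pairs(n))
```

Growing the sequence tenfold multiplies the scan's work by ten but the attention pair count by roughly one hundred, which is the gap that linear-complexity backbones aim to close.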

Cobra combines Mamba's selective state space model (SSM) with visual understanding. It consists of a vision encoder, a projector, and the Mamba backbone. The vision encoder fuses DINOv2 and SigLIP representations to improve visual understanding. The projector aligns visual and textual features, using either a multilayer perceptron (MLP) or a lightweight downsampling projector. The Mamba backbone, consisting of 64 identical blocks, processes the concatenated visual and textual embeddings and generates the target token sequence. Training involves fine-tuning the entire backbone and the projector for two epochs on a diverse dataset of images and dialog data.
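The data flow described above can be sketched in a few lines of plain Python. This is a toy under stated assumptions, not the Cobra implementation: fusion is assumed to be channel-wise concatenation of per-patch features, the projector is a two-layer MLP with ReLU, weights are random, and all dimensions and helper names (`fuse_features`, `mlp_projector`, `build_backbone_input`) are hypothetical.

```python
import random


def fuse_features(dino_tokens, siglip_tokens):
    """Fuse two encoders' per-patch features by concatenating channels
    (assumed fusion scheme for this sketch)."""
    assert len(dino_tokens) == len(siglip_tokens), "patch counts must match"
    return [d + s for d, s in zip(dino_tokens, siglip_tokens)]


def make_linear(in_dim, out_dim, rng):
    """Random weight matrix with out_dim rows of in_dim values."""
    return [[rng.uniform(-0.1, 0.1) for _ in range(in_dim)]
            for _ in range(out_dim)]


def linear(weights, vec):
    """Matrix-vector product: one output value per weight row."""
    return [sum(w * v for w, v in zip(row, vec)) for row in weights]


def mlp_projector(tokens, w1, w2):
    """Map each fused visual token into the language model's embedding size."""
    projected = []
    for tok in tokens:
        hidden = [max(0.0, h) for h in linear(w1, tok)]  # ReLU (assumption)
        projected.append(linear(w2, hidden))
    return projected


def build_backbone_input(visual_embeds, text_embeds):
    """Place visual embeddings ahead of text embeddings in one sequence."""
    return visual_embeds + text_embeds


# Toy sizes, chosen only for illustration.
rng = random.Random(0)
num_patches, dino_dim, siglip_dim = 4, 3, 2
hidden_dim, model_dim, num_text_tokens = 8, 6, 5

dino = [[rng.random() for _ in range(dino_dim)] for _ in range(num_patches)]
siglip = [[rng.random() for _ in range(siglip_dim)] for _ in range(num_patches)]
text = [[rng.random() for _ in range(model_dim)] for _ in range(num_text_tokens)]

fused = fuse_features(dino, siglip)
w1 = make_linear(dino_dim + siglip_dim, hidden_dim, rng)
w2 = make_linear(hidden_dim, model_dim, rng)
visual = mlp_projector(fused, w1, w2)
sequence = build_backbone_input(visual, text)
```

In this toy run, `sequence` holds nine embeddings of size six: four projected visual tokens followed by five text tokens. A backbone would consume that sequence and generate output tokens from it.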

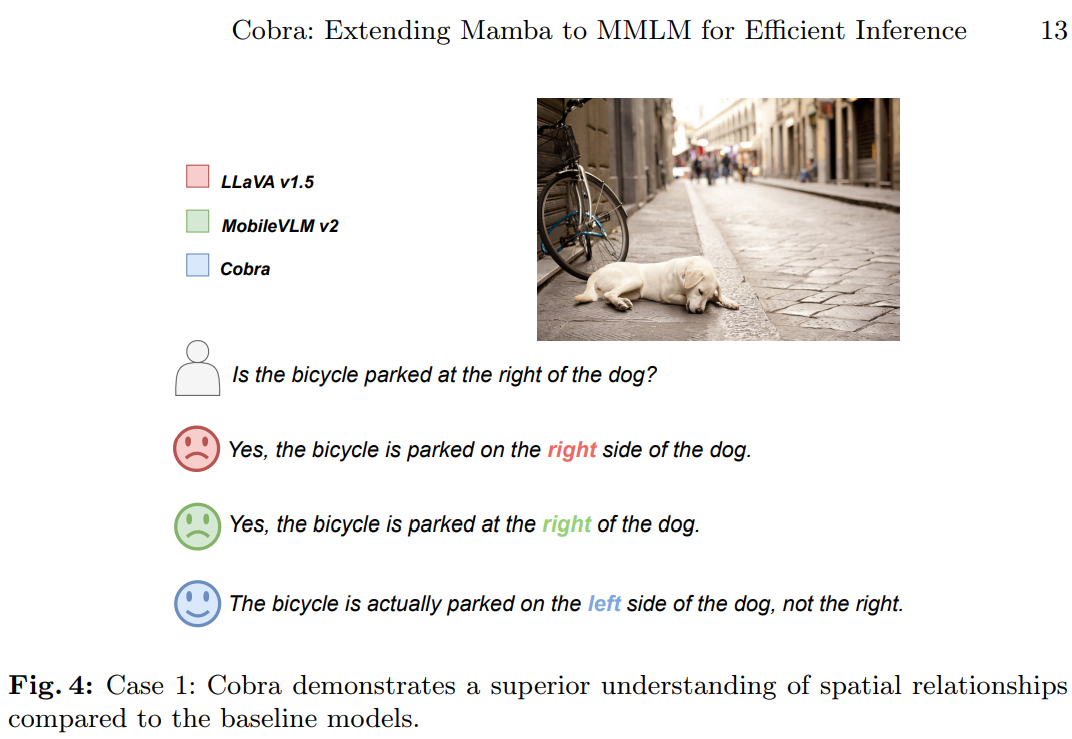

Cobra is evaluated on six benchmarks, covering spatial reasoning and visual question answering tasks. The results show that it performs competitively against models of similar and larger scale. Cobra also runs inference significantly faster than Transformer-based models, while ablation studies highlight the importance of design choices such as the vision encoders and the projector. Case studies further illustrate Cobra's strong grasp of spatial relationships and scene descriptions, underscoring its ability to process visual information and generate accurate natural language descriptions.

In conclusion, the study presents Cobra as a solution to the efficiency challenges faced by existing MLLMs built on Transformer networks. By pairing a language model of linear computational complexity with multimodal inputs, Cobra optimizes the fusion of visual and linguistic information within the Mamba language model. In extensive experiments, Cobra improves computational efficiency while achieving performance comparable to advanced models such as LLaVA, and it does particularly well at mitigating visual hallucinations and judging spatial relationships. These advances pave the way for deploying high-performance AI models in scenarios that require real-time visual information processing, such as image-based robotic feedback control systems.

Check out the Paper and Project. All credit for this research goes to the researchers of this project.


Sana Hassan, a consulting intern at Marktechpost and a dual degree student at IIT Madras, is passionate about applying technology and artificial intelligence to address real-world challenges. With a strong interest in solving practical problems, she brings a fresh perspective to the intersection of AI and real-life solutions.
