Progress in artificial intelligence is driven by the ambition to mirror and extend human cognitive capabilities through technology. This pursuit centers on building machines that understand language, process images, and interact with the world with near-human understanding.

The research team at 01.ai has introduced the Yi model family. Unlike its predecessors, Yi does not analyze text or images only in isolation. It combines these capabilities and shows a strong level of multimodal understanding. In doing so, Yi tackles the challenge of bridging human language and visual perception. Meeting that challenge calls for innovative model architectures and a rethinking of both how models are trained and the quality of the data they learn from. Previous models often struggle to understand context across long stretches of text or to derive meaning from a combination of textual and visual cues.

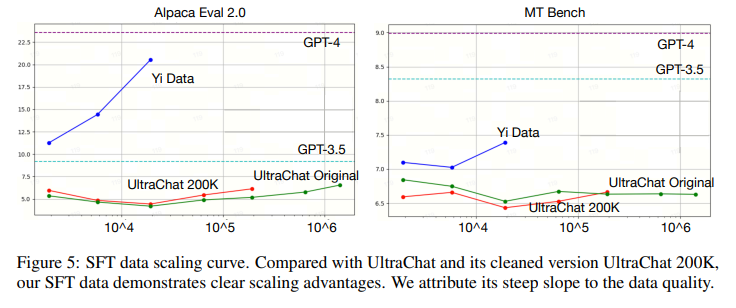

The series includes language models as well as vision-language models that process visual information alongside text. They are built on a modified Transformer architecture, and their training was designed with data quality in mind, a factor that significantly improves performance across several benchmarks. Yi's technical foundation follows a layered approach to building and training models. Starting from 6B and 34B base language models, the team extended them into chat models, long-context models, and depth-upscaled models. The base models were pretrained on a corpus refined through rigorous deduplication and filtering, so the training data was not only large but also of high quality.
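
The article does not spell out 01.ai's data pipeline, so the following is only a minimal sketch of the general idea. It assumes exact-duplicate removal by hashing normalized text, followed by a simple heuristic quality filter. The function names and thresholds (`min_words`, `max_symbol_ratio`) are hypothetical. Production pipelines typically add fuzzy deduplication (for example MinHash) and learned quality classifiers on top of steps like these.

```python
import hashlib
import re


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivially different copies hash identically."""
    return re.sub(r"\s+", " ", text.strip().lower())


def passes_quality_filter(text: str, min_words: int = 5, max_symbol_ratio: float = 0.3) -> bool:
    """Hypothetical heuristic filter: reject very short or symbol-heavy documents."""
    if len(text.split()) < min_words:
        return False
    symbols = sum(1 for ch in text if not (ch.isalnum() or ch.isspace()))
    return symbols / max(len(text), 1) <= max_symbol_ratio


def dedup_and_filter(docs: list[str]) -> list[str]:
    """Keep the first copy of each normalized document, then apply the quality filter."""
    seen: set[str] = set()
    kept: list[str] = []
    for doc in docs:
        # Exact deduplication: identical normalized text yields an identical SHA-256 digest.
        digest = hashlib.sha256(normalize(doc).encode("utf-8")).hexdigest()
        if digest in seen:
            continue
        seen.add(digest)
        if passes_quality_filter(doc):
            kept.append(doc)
    return kept


if __name__ == "__main__":
    corpus = [
        "The quick brown fox jumps over the lazy dog.",
        "the  quick brown fox jumps over the LAZY dog.",  # duplicate after normalization
        "Too short.",                                      # fails the length check
        "#### $$$$ %%%% @@@@ !!!! ^^^^",                   # fails the symbol-ratio check
        "Language models learn from carefully filtered text corpora.",
    ]
    for doc in dedup_and_filter(corpus):
        print(doc)
```

Running it keeps only the first and last documents. Deduplicating before filtering means each distinct document is scored once, which matters when a corpus contains many copies of the same page.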

The Yi-9B model was developed with a distinct training methodology. This two-stage process used a dataset of approximately 800 billion tokens, with particular emphasis on collecting and curating recent data to strengthen the model's performance on coding tasks. The model was trained with a constant learning rate while its batch size was progressively increased. It showed substantial gains across several benchmarks, including reasoning, cognition, coding, and mathematics. This methodology and the resulting gains highlight the potential of the Yi family for advanced AI applications.
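
To make the "constant learning rate, growing batch size" idea concrete, here is a minimal planning sketch. The stage split, batch sizes, sequence length, and learning rate below are illustrative assumptions, not values reported for Yi-9B. The only figure taken from the text is the roughly 800 billion token total. The `Stage` class and `plan_schedule` function are hypothetical helpers.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Stage:
    """A hypothetical training stage: how many tokens it consumes and at what batch size."""
    tokens: int      # token budget for this stage
    batch_size: int  # sequences per optimizer step


def plan_schedule(stages: list[Stage], lr: float, seq_len: int) -> list[dict]:
    """Return per-stage step counts for a constant-LR, growing-batch-size schedule.

    The learning rate is identical in every stage; only the batch size changes,
    so each stage's step count is its token budget divided by tokens per step.
    """
    plan = []
    for i, stage in enumerate(stages):
        if i > 0 and stage.batch_size < stages[i - 1].batch_size:
            raise ValueError("batch size is expected to grow from stage to stage")
        tokens_per_step = stage.batch_size * seq_len
        plan.append({
            "stage": i + 1,
            "lr": lr,  # constant across stages
            "batch_size": stage.batch_size,
            "steps": stage.tokens // tokens_per_step,
        })
    return plan


if __name__ == "__main__":
    # Illustrative numbers only: ~800B tokens split evenly over two stages,
    # with the batch size doubled in the second stage.
    stages = [Stage(tokens=400_000_000_000, batch_size=1024),
              Stage(tokens=400_000_000_000, batch_size=2048)]
    for row in plan_schedule(stages, lr=3e-5, seq_len=4096):
        print(row)
```

Doubling the batch size at a fixed learning rate halves the number of optimizer steps spent on the same token budget. One common rationale for this design is that a larger batch reduces gradient noise in a way that resembles decaying the learning rate.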

The Yi series is not only a theoretical advance but also a practical tool with a wide range of applications. Its main strengths lie in the balance between data quantity and quality and in its careful fine-tuning process. The Yi-34B model, for example, matches GPT-3.5 in performance while remaining deployable on consumer-grade hardware, thanks to effective quantization. This practicality makes the Yi series useful across many applications, from natural language processing to computer vision tasks.
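
The article does not say which quantization scheme Yi uses. As an assumption, the sketch below shows the simplest variant, symmetric per-tensor int8 quantization, together with weight-only memory arithmetic for a 34B-parameter model. Real deployments usually rely on per-channel or group-wise scales and optimized kernels. The helper names are hypothetical.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor int8 quantization: each weight w is stored as q with w ≈ scale * q."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0  # map the largest magnitude to ±127
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize_int8(q: list[int], scale: float) -> list[float]:
    """Recover approximate float weights from int8 codes and the shared scale."""
    return [scale * v for v in q]


def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Memory for the weights alone (no activations or KV cache), in gigabytes (1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


if __name__ == "__main__":
    weights = [0.42, -1.3, 0.07, 0.9, -0.55]
    q, scale = quantize_int8(weights)
    restored = dequantize_int8(q, scale)
    print("codes:", q)
    print("max abs error:", max(abs(a - b) for a, b in zip(weights, restored)))

    # Weight-only footprint of a 34B-parameter model at different precisions.
    for bits in (16, 8, 4):
        print(f"{bits:>2}-bit: {weight_memory_gb(34e9, bits):.0f} GB")
```

The arithmetic explains why quantization matters for consumer hardware. The weights of a 34B model take about 68 GB at 16 bits, 34 GB at 8 bits, and 17 GB at 4 bits. Only the 4-bit version fits within a single 24 GB consumer GPU, and it still needs headroom for activations and the KV cache.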

One of the most interesting aspects of the Yi series is its performance on vision-language tasks. By combining the chat language model with a Vision Transformer encoder, Yi aligns visual inputs with linguistic semantics. This allows the model to understand and respond to inputs that combine images and text. This capability opens up many possibilities for AI applications, from more capable interactive chatbots to analytics tools that can interpret complex visual and textual datasets.
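
The text gives no architectural detail beyond pairing a ViT encoder with the chat model. The sketch below therefore assumes a common LLaVA-style design. In that design, a small MLP projects each image patch feature into the language model's embedding space, and the resulting "image tokens" are placed before the text token embeddings. The `Projector` class, the toy dimensions, and the prepend ordering are all hypothetical choices. The weights are random and untrained, so the sketch only shows the data flow.

```python
import math
import random

Vector = list[float]
Matrix = list[list[float]]


def matvec(matrix: Matrix, vec: Vector) -> Vector:
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * v for m, v in zip(row, vec)) for row in matrix]


def gelu(x: float) -> float:
    """tanh approximation of the GELU activation."""
    return 0.5 * x * (1.0 + math.tanh(math.sqrt(2.0 / math.pi) * (x + 0.044715 * x ** 3)))


class Projector:
    """Hypothetical two-layer MLP (biases omitted) mapping vision features to LM embeddings."""

    def __init__(self, d_vis: int, d_hidden: int, d_lm: int, seed: int = 0) -> None:
        rng = random.Random(seed)
        # Random, untrained weights: in a real system these are learned on image-text pairs.
        self.w1 = [[rng.gauss(0.0, 0.02) for _ in range(d_vis)] for _ in range(d_hidden)]
        self.w2 = [[rng.gauss(0.0, 0.02) for _ in range(d_hidden)] for _ in range(d_lm)]

    def __call__(self, feature: Vector) -> Vector:
        return matvec(self.w2, [gelu(h) for h in matvec(self.w1, feature)])


def build_multimodal_sequence(patch_features: list[Vector], text_embeddings: list[Vector],
                              projector: Projector) -> list[Vector]:
    """Project each image patch into the LM embedding space and place it before the text tokens."""
    image_tokens = [projector(p) for p in patch_features]
    return image_tokens + text_embeddings


if __name__ == "__main__":
    d_vis, d_lm = 8, 4                                   # toy sizes, far smaller than real models
    patches = [[0.1 * i] * d_vis for i in range(3)]      # stand-in for ViT patch features
    text = [[0.0] * d_lm, [1.0] * d_lm]                  # stand-in for text token embeddings
    seq = build_multimodal_sequence(patches, text, Projector(d_vis, 16, d_lm))
    print(len(seq), "tokens of width", len(seq[0]))
```

Because the projected patches share the language model's embedding width, the chat model can attend over image and text positions as one sequence without changes to its own layers.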

In conclusion, 01.ai's Yi model family marks an important step forward in developing AI that can navigate the complexities of human language and vision. This progress rests on:

- A refined Transformer architecture optimized for language and vision tasks.

- An innovative approach to data processing that emphasizes the quality of the data used for training.

- Successful integration of language and vision models, enabling the understanding of multimodal inputs.

- Notable results on standard benchmarks and in user-preference evaluations, demonstrating the models' potential for a variety of applications.

Check out the Paper. All credit for this research goes to the researchers of this project.

Hello, my name is Adnan Hassan. I'm a consulting intern at Marktechpost and soon to be a management trainee at American Express. I am currently pursuing a dual degree at the Indian Institute of Technology, Kharagpur. I am passionate about technology and want to create new products that make a difference.
