The rise of large language models (LLMs) has transformed text generation and human-computer interaction. However, these models cannot guarantee content accuracy or compliance with specific formats such as JSON, and this remains a challenge. LLMs that handle data from various sources also find it difficult to maintain confidentiality and security, which is crucial in sectors such as healthcare and finance. Strategies such as constrained decoding and agent-based methods exist, but they present practical obstacles such as performance costs or complex model integration requirements.

LLMs demonstrate remarkable reasoning and text comprehension skills, as multiple studies have shown. Instruction tuning improves their performance on a variety of tasks, including unseen ones. However, problems such as toxicity and hallucinations persist. Conventional sampling methods, including nucleus (top-p), top-k, and temperature sampling, as well as search-based methods such as greedy or beam search, often pay too little attention to future costs.
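To make the sampling terminology concrete, here is a minimal sketch of temperature scaling, top-k filtering, and nucleus (top-p) filtering on a toy distribution. The logits and function names are illustrative assumptions, not taken from the AICI work or from any particular library.

```python
import math

def softmax(logits, temperature=1.0):
    """Convert logits to probabilities; a lower temperature sharpens the distribution."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def top_k_filter(probs, k):
    """Keep the k most probable token ids and renormalize."""
    keep = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    total = sum(probs[i] for i in keep)
    return {i: probs[i] / total for i in keep}

def nucleus_filter(probs, p):
    """Keep the smallest set of top tokens whose cumulative probability reaches p."""
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    keep, cumulative = [], 0.0
    for i in order:
        keep.append(i)
        cumulative += probs[i]
        if cumulative >= p:
            break
    total = sum(probs[i] for i in keep)
    return {i: probs[i] / total for i in keep}

# Toy logits for a four-token vocabulary (hypothetical values).
logits = [2.0, 1.0, 0.5, -1.0]
probs = softmax(logits, temperature=0.7)
top2 = top_k_filter(probs, k=2)
nucleus = nucleus_filter(probs, p=0.9)
```

All three methods look only at the distribution for the next token, which is one way to read the remark that they give little weight to what happens later in the sequence.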

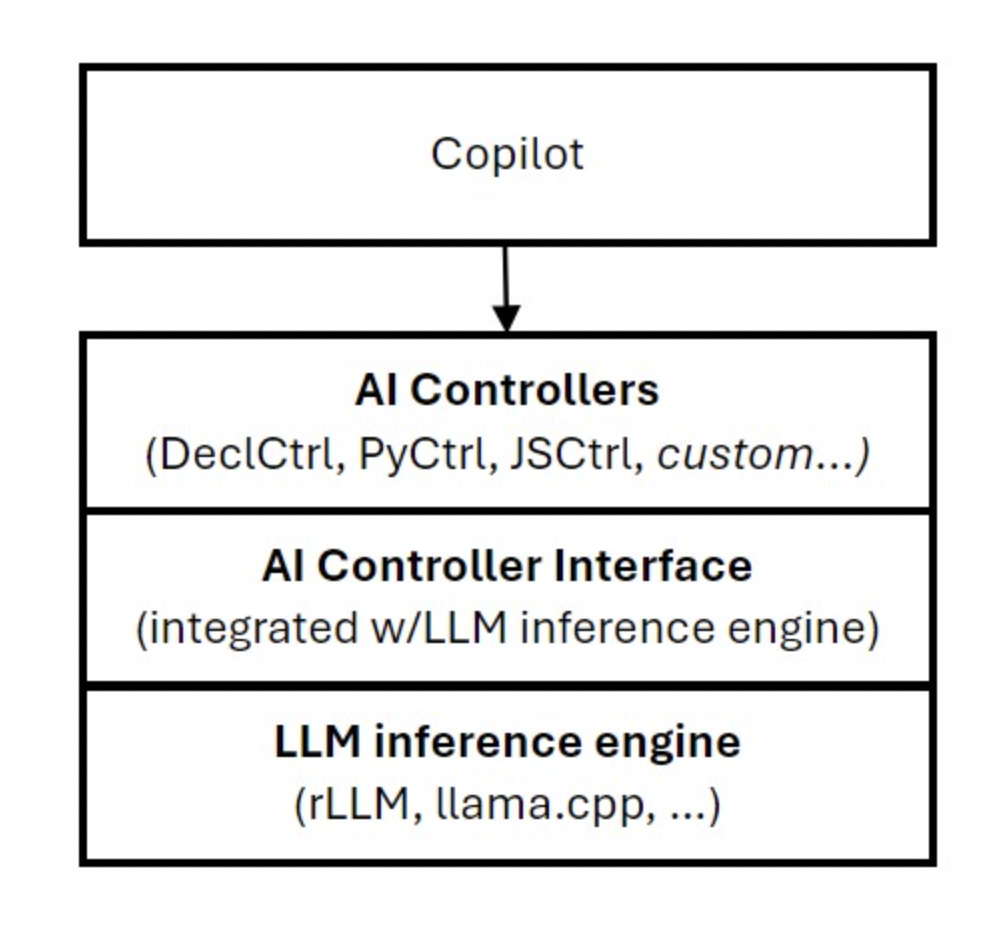

Microsoft researchers present the AI Controller Interface (AICI). AICI improves feasibility by offering a “prompt-as-program” interface that goes beyond the traditional text-based APIs of cloud tools. It seamlessly integrates user-level code with LLM output generation in the cloud. AICI supports security frameworks, application-specific functionality, and various strategies for accuracy, privacy, and format compliance. It grants granular access to generative AI infrastructure, on-premises or in the cloud, enabling customized control over LLM processing.

AICI is built around a lightweight virtual machine (VM), which allows agile and efficient interaction with LLMs. The AI controller, implemented as a WebAssembly virtual machine, runs alongside LLM processing, facilitating granular control over text generation. The process involves initiating the user request by specifying the AI controller and a JSON program, generating tokens with pre-, mid-, and post-process stages, and assembling the response. Developers use customizable interfaces to implement AI controller programs, ensuring that LLM results fit specific requirements. The architecture supports parallel execution, efficient memory usage, and multi-stage processing for optimal performance.
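The staged token loop described above can be pictured with a small sketch. Everything here is an assumption made for illustration: the `Controller` class, its method names, the toy vocabulary, and the stand-in model are hypothetical and do not reproduce AICI's actual interface. The sketch also runs the stages sequentially, whereas the article describes the controller running alongside LLM processing.

```python
from typing import List, Set

# Hypothetical toy vocabulary; a real LLM has tens of thousands of tokens.
VOCAB = ["{", "}", '"ok"', ":", "true", "hello", "<eos>"]

class Controller:
    """Hypothetical controller that forces output to follow a fixed token plan."""

    def __init__(self, plan: List[str]):
        self.plan = plan
        self.position = 0

    def pre_process(self) -> bool:
        # Decide whether another token should be generated at all.
        return self.position < len(self.plan)

    def mid_process(self) -> Set[int]:
        # Return the set of token ids permitted at this step.
        return {VOCAB.index(self.plan[self.position])}

    def post_process(self, token_id: int) -> None:
        # Observe the chosen token and advance internal state.
        self.position += 1

def toy_model_logits(step: int) -> List[float]:
    """Stand-in for an LLM forward pass: it always prefers 'hello'."""
    return [0.1, 0.1, 0.1, 0.1, 0.1, 5.0, 0.1]

def generate(controller: Controller) -> str:
    output = []
    step = 0
    while controller.pre_process():
        allowed = controller.mid_process()
        logits = toy_model_logits(step)
        # Restrict the choice to allowed tokens, then pick greedily among them.
        token_id = max(allowed, key=lambda i: logits[i])
        controller.post_process(token_id)
        output.append(VOCAB[token_id])
        step += 1
    return "".join(output)

result = generate(Controller(["{", '"ok"', ":", "true", "}"]))
```

Although the stand-in model would pick `hello` at every step if left alone, the controller's per-step constraint steers the output to a valid JSON object.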

The researchers also discuss different use cases. Rust-based AI controllers use efficient methods to enforce formatting rules during text generation, ensuring compliance through trie-based searches and pattern checking. These controllers support mandatory formatting requirements and are expected to offer more flexible guidance in future releases. Users can also control the flow of information, including the timing and form of prompts and background data. This enables selective influence over structured thought processes and pre-processing of data for LLM analysis, and it streamlines control over multiple LLM calls.
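One common way to enforce a format during generation is a prefix-tree (trie) lookup that decides which tokens may come next. The following is a simplified sketch under stated assumptions: the trie is built over a small set of permitted strings, the token list is invented, and the helper names are hypothetical. It is not the Rust implementation the article refers to.

```python
class TrieNode:
    def __init__(self):
        self.children = {}
        self.terminal = False

def build_trie(words):
    """Insert every permitted string into a character-level trie."""
    root = TrieNode()
    for word in words:
        node = root
        for ch in word:
            node = node.children.setdefault(ch, TrieNode())
        node.terminal = True
    return root

def walk(node, text):
    """Follow text from node; return the reached node, or None if it leaves the trie."""
    for ch in text:
        node = node.children.get(ch)
        if node is None:
            return None
    return node

def allowed_tokens(root, prefix, vocab):
    """Tokens that keep prefix + token inside the set of permitted strings."""
    node = walk(root, prefix)
    if node is None:
        return []
    return [tok for tok in vocab if tok and walk(node, tok) is not None]

# Permitted outputs and a hypothetical sub-word vocabulary.
permitted = build_trie(["true", "false", "null"])
vocab = ["t", "tr", "ue", "fal", "se", "nu", "ll", "x", "rue"]

first = allowed_tokens(permitted, "", vocab)
after_tr = allowed_tokens(permitted, "tr", vocab)
```

At each decoding step the sampler would be restricted to the returned tokens, so the finished text can only be one of the permitted strings.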

To conclude, Microsoft researchers proposed AICI to address content accuracy and privacy issues. AICI goes beyond traditional text-based APIs. It integrates user-level code with cloud-based LLM output generation, supporting security frameworks, application-specific capabilities, and various accuracy and privacy strategies. It offers granular access for customized control over LLM processing, on-premises or in the cloud. AICI can be used for different purposes, such as efficient constrained decoding, rapid compliance checking during text generation, controlling information flow, selectively influencing structured thought processes, and preprocessing background data for LLM analysis.


Asjad is an internal consultant at Marktechpost. He is pursuing a B.Tech in Mechanical Engineering at the Indian Institute of Technology, Kharagpur. Asjad is a machine learning and deep learning enthusiast who is always researching applications of machine learning in healthcare.
