In artificial intelligence, integrating multimodal inputs for video reasoning is a challenging but promising frontier. Researchers are increasingly focused on leveraging varied types of data, from visual frames and audio clips to more complex 3D point clouds, to enrich AI's understanding and interpretation of the world. The effort aims to mimic human sensory integration, and eventually to surpass it in depth and breadth, so that machines can make sense of complex environments and scenarios more clearly.

At the heart of this challenge is the problem of merging these varied modalities efficiently and effectively. Traditional approaches have often fallen short, either because they lack the flexibility to accommodate new types of data or because they demand prohibitive computational resources. The search, therefore, is for a solution that not only covers the diversity of sensory data but does so with agility and scalability.

Current methods in multimodal learning have shown promise but are hampered by their computational cost and inflexibility. These systems typically require substantial parameter updates or a dedicated module for each new modality, which makes integrating new data types cumbersome and resource-intensive. These limitations hinder the adaptability and scalability of AI systems in handling the richness of real-world inputs.

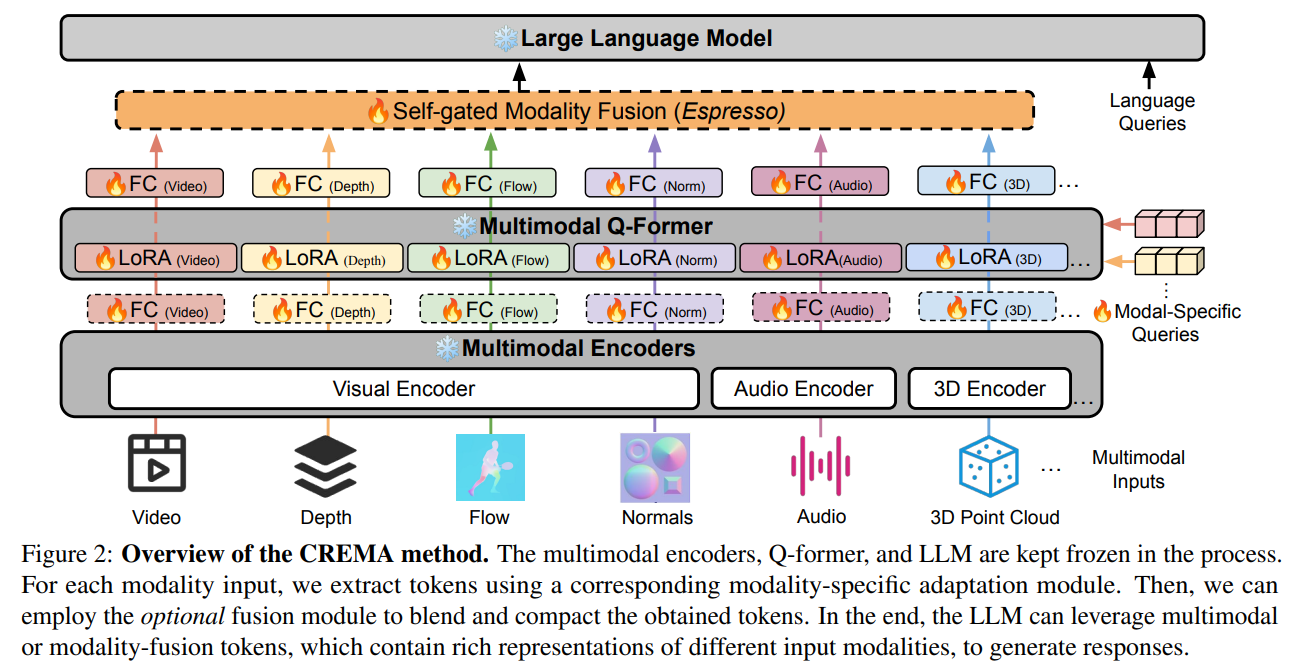

CREMA, a framework proposed by UNC-Chapel Hill researchers, is designed to change how AI systems handle multimodal inputs for video reasoning. It introduces an efficient, modular system for fusing different modalities, such as optical flow, 3D point clouds, and audio, without requiring extensive parameter updates or custom modules for each data type. At its core, CREMA uses a query transformer architecture to integrate diverse sensory data, paving the way for a more nuanced and comprehensive AI understanding of complex scenarios.

CREMA's methodology stands out for its efficiency and adaptability. A set of parameter-efficient modules projects features from multiple modalities into a common space, so that they can be fused without revising the underlying model architecture. This approach conserves computational resources and leaves the model ready to adapt to new modalities as they become relevant.
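To make the idea of per-modality projection into a shared space more concrete, here is a minimal NumPy sketch. It is not CREMA's actual implementation: the class `ModalityAdapter`, the function `fuse`, the dimensions, the single-head attention with learnable query tokens, and the use of plain concatenation as the fusion step are all assumptions chosen for illustration. It only shows how a small module per modality can map features of different widths to a fixed number of tokens in one common space.

```python
import numpy as np

rng = np.random.default_rng(0)

SHARED_DIM = 32   # hypothetical width of the common space
NUM_QUERIES = 4   # hypothetical number of query tokens per modality


def softmax(x, axis=-1):
    """Numerically stable softmax."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


class ModalityAdapter:
    """Hypothetical lightweight module; one instance per modality."""

    def __init__(self, feature_dim):
        scale = 1.0 / np.sqrt(feature_dim)
        # Projection from the modality's feature width to the shared width.
        self.proj = rng.normal(0.0, scale, size=(feature_dim, SHARED_DIM))
        # Query tokens that attend over the projected features.
        self.queries = rng.normal(0.0, 1.0, size=(NUM_QUERIES, SHARED_DIM))

    def num_params(self):
        return self.proj.size + self.queries.size

    def __call__(self, features):
        # features: (num_tokens, feature_dim)
        keys = features @ self.proj                          # (num_tokens, SHARED_DIM)
        scores = self.queries @ keys.T / np.sqrt(SHARED_DIM)
        attn = softmax(scores, axis=-1)                      # (NUM_QUERIES, num_tokens)
        return attn @ keys                                   # (NUM_QUERIES, SHARED_DIM)


def fuse(adapters, inputs):
    """Assumed fusion step: concatenate each modality's query outputs."""
    tokens = [adapters[name](feats) for name, feats in inputs.items()]
    return np.concatenate(tokens, axis=0)


# Random stand-ins for features from pretrained encoders (made-up shapes).
inputs = {
    "video": rng.normal(size=(10, 64)),
    "audio": rng.normal(size=(6, 16)),
    "point_cloud": rng.normal(size=(20, 8)),
}
adapters = {name: ModalityAdapter(feats.shape[1]) for name, feats in inputs.items()}

fused = fuse(adapters, inputs)
print(fused.shape)  # (12, 32): 3 modalities x 4 query tokens each

# Adding a modality only adds one small adapter; nothing else changes.
inputs["optical_flow"] = rng.normal(size=(10, 12))
adapters["optical_flow"] = ModalityAdapter(12)
fused_with_flow = fuse(adapters, inputs)
print(fused_with_flow.shape)  # (16, 32)
```

In this sketch, supporting a new modality means creating one more adapter, while everything downstream of `fuse` still receives tokens of the same width. That is the property the paragraph above describes, shown here under the stated assumptions rather than as the authors' code.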

CREMA has been validated on several benchmarks, where it matches or exceeds the results of existing multimodal learning models while using a fraction of the trainable parameters. This efficiency does not come at the cost of effectiveness: CREMA balances the inclusion of new modalities so that each contributes meaningfully to the reasoning process without overwhelming the system with redundant or irrelevant information.

In conclusion, CREMA represents an important advance in multimodal video reasoning. By fusing diverse data types into a coherent and efficient framework, it addresses the challenges of flexibility and computational cost and sets a reference point for future work in this field. The research promises to improve AI's ability to interpret and interact with the world in more nuanced and intelligent ways.

Check out the Paper. All credit for this research goes to the researchers of this project.

Sana Hassan, a consulting intern at Marktechpost and a dual degree student at IIT Madras, is passionate about applying technology and artificial intelligence to address real-world challenges. With a strong interest in solving practical problems, she brings a fresh perspective to the intersection of AI and real-life solutions.
