The emergence of multimodal large language models (MLLMs), such as GPT-4 and Gemini, has sparked significant interest in combining language understanding with other modalities such as vision. This fusion opens the door to diverse applications, from embodied intelligence to GUI agents. Despite the rapid development of open-source MLLMs such as BLIP and LLaMA-Adapter, their performance could still be improved with more training data and more model parameters. While some excel at understanding natural images, they struggle with tasks that require specialized knowledge. Additionally, current model sizes may not be suitable for mobile deployment, which calls for exploring a broader spectrum of model sizes, from compact architectures for mobile devices to larger, parameter-rich ones, for broader adoption and better performance.

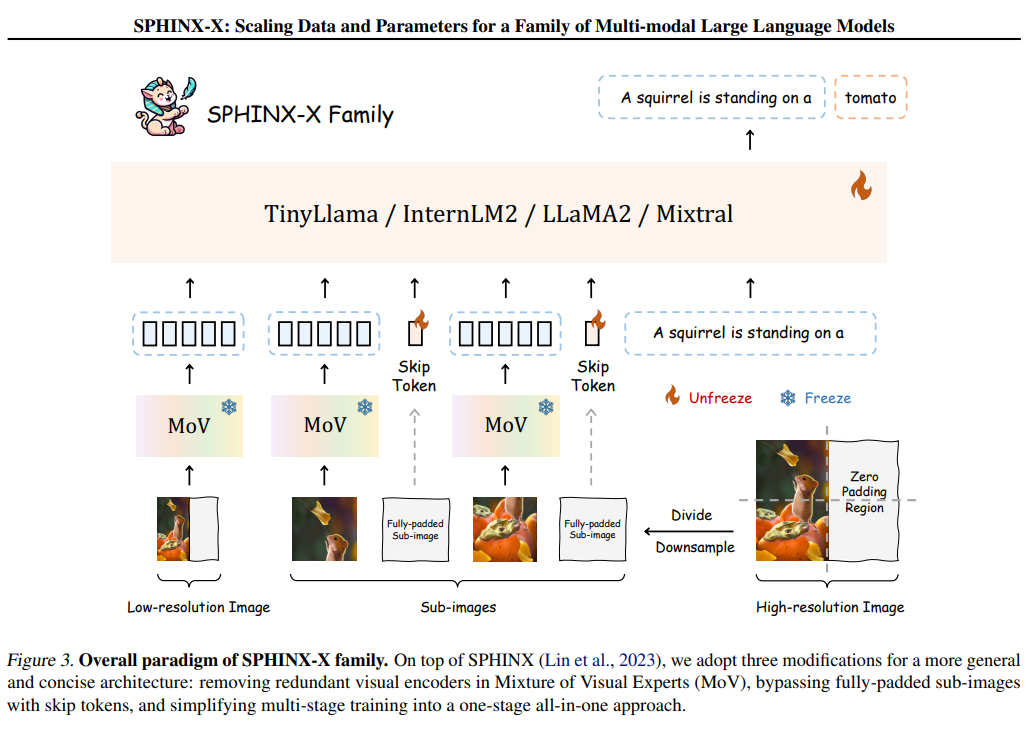

Researchers from Shanghai AI Lab, MMLab, CUHK, Rutgers University, and the University of California, Los Angeles have developed SPHINX-X, an advanced MLLM series built on the SPHINX framework. The improvements include streamlining the architecture by removing redundant visual encoders, improving training efficiency by replacing fully padded sub-images with skip tokens, and moving to a single-stage training paradigm. SPHINX-X leverages a diverse multimodal dataset, augmented with curated OCR and Set-of-Mark data, and is trained on several base LLMs, yielding models with a variety of parameter sizes and multilingual capabilities. Comparative results highlight the superior generalization of SPHINX-X across tasks, addressing limitations of previous MLLMs while keeping large-scale multimodal training efficient.

Recent advances in LLMs have built on the Transformer architecture, notably exemplified by GPT-3 with its 175B parameters. Other models such as PaLM, OPT, BLOOM, and LLaMA followed suit, with innovations such as Mistral's sliding window attention and Mixtral's sparse mixture-of-experts (MoE) layers. At the same time, bilingual LLMs such as Qwen and Baichuan have emerged, while TinyLlama and Phi-2 focus on reducing parameter counts for edge deployment. Meanwhile, MLLMs integrate non-text encoders for visual understanding, with the BLIP, Flamingo, and LLaMA-Adapter series pushing the boundaries of vision-language fusion. Fine-grained MLLMs such as Shikra and VisionLLM excel at specific tasks, while others extend LLMs to additional modalities.
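Mixtral-8×7B, which is also one of the base LLMs behind SPHINX-X, replaces dense feed-forward layers with sparse MoE layers in which a router sends each token to two of eight experts and mixes their outputs with softmax weights computed over the two selected logits. The plain-Python sketch below illustrates only that top-2 routing step, under simplifying assumptions: the router logits are passed in directly instead of being computed by a learned linear layer, and the experts are hypothetical toy scaling functions rather than real feed-forward networks.

```python
import math
from typing import Callable, List, Sequence

Vector = List[float]


def top2_moe(x: Vector, router_logits: Sequence[float],
             experts: Sequence[Callable[[Vector], Vector]]) -> Vector:
    """Route one token to its two highest-scoring experts and mix their outputs."""
    # Indices of the two largest router logits (ties keep the lower index first).
    top = sorted(range(len(router_logits)), key=lambda i: router_logits[i], reverse=True)[:2]
    # Softmax over the selected logits only (max-subtracted for numerical stability).
    m = max(router_logits[i] for i in top)
    exps = [math.exp(router_logits[i] - m) for i in top]
    total = sum(exps)
    weights = [e / total for e in exps]
    # Only the selected experts are evaluated; the rest are skipped entirely.
    out = [0.0] * len(x)
    for w, i in zip(weights, top):
        y = experts[i](x)
        out = [o + w * yi for o, yi in zip(out, y)]
    return out


# Hypothetical toy experts: expert k multiplies its input by k.
experts = [lambda v, k=k: [k * t for t in v] for k in range(8)]
logits = [0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 1.0, 1.0]  # experts 6 and 7 tie for the top
out = top2_moe([1.0, 2.0], logits, experts)
print(out)  # [6.5, 13.0]
```

Because only two of the eight experts run per token, the compute per token stays close to that of a much smaller dense model even though the total parameter count is large.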

The study revisits the design principles of SPHINX and proposes three improvements for SPHINX-X: a more concise set of visual encoders, learnable skip tokens that stand in for meaningless, fully padded sub-images, and simplified single-stage training. The researchers assemble a large-scale multimodal dataset covering language, vision, and vision-language tasks, and enrich it with curated Set-of-Mark and OCR-intensive datasets. The SPHINX-X family of MLLMs is trained on different base LLMs, including TinyLlama-1.1B, InternLM2-7B, LLaMA2-13B, and Mixtral-8×7B, to obtain a spectrum of MLLMs with different parameter sizes and multilingual capabilities.
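To make the skip-token idea concrete, here is a minimal plain-Python sketch under stated assumptions: the image is assumed to be already resized and placed top-left on a fixed square canvas of `grid × grid` sub-images, the visual encoder is replaced by a hypothetical `encode_subimage` placeholder that emits a fixed number of dummy tokens, and `SKIP_TOKEN` is a string standing in for what would be a learnable embedding in the real model. All names and parameters here are illustrative, not taken from the SPHINX-X codebase.

```python
from typing import List, Tuple

# Hypothetical stand-in: in the real model this would be a learnable embedding vector.
SKIP_TOKEN = "<skip>"

Image = List[List[int]]  # rows of pixel values


def split_on_canvas(image: Image, tile: int, grid: int,
                    pad_value: int = 0) -> List[Tuple[Image, bool]]:
    """Pad `image` (top-left aligned) onto a (grid*tile) x (grid*tile) canvas and cut it
    into grid*grid sub-images in row-major order. Each entry is (pixels, is_fully_padded)."""
    h, w = len(image), len(image[0])
    side = grid * tile
    assert h <= side and w <= side, "image must already be resized to fit the canvas"
    tiles = []
    for r0 in range(0, side, tile):
        for c0 in range(0, side, tile):
            pixels, fully_padded = [], True
            for r in range(r0, r0 + tile):
                row = []
                for c in range(c0, c0 + tile):
                    if r < h and c < w:
                        row.append(image[r][c])
                        fully_padded = False  # at least one real pixel in this sub-image
                    else:
                        row.append(pad_value)
                pixels.append(row)
            tiles.append((pixels, fully_padded))
    return tiles


def encode_subimage(pixels: Image, tokens_per_tile: int) -> List[str]:
    """Hypothetical placeholder for a visual encoder: emits dummy visual tokens."""
    return ["<vis>"] * tokens_per_tile


def visual_token_sequence(image: Image, tile: int, grid: int,
                          tokens_per_tile: int) -> List[str]:
    """Encode real sub-images normally; replace each fully padded one with one skip token."""
    seq: List[str] = []
    for pixels, fully_padded in split_on_canvas(image, tile, grid):
        if fully_padded:
            seq.append(SKIP_TOKEN)  # one token instead of `tokens_per_tile`
        else:
            seq.extend(encode_subimage(pixels, tokens_per_tile))
    return seq


wide = [[1] * 8 for _ in range(3)]  # a 3 x 8 image with a wide aspect ratio
seq = visual_token_sequence(wide, tile=4, grid=2, tokens_per_tile=16)
print(len(seq), seq.count(SKIP_TOKEN))  # 34 2
```

For the wide 3×8 image, the two bottom sub-images contain only padding, so the sequence shrinks from 64 to 34 tokens. This is the kind of saving skip tokens are meant to deliver for images with extreme aspect ratios, without spending encoder compute on empty regions.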

SPHINX-X MLLMs demonstrate state-of-the-art performance on various multimodal tasks, including mathematical reasoning, complex scene understanding, low-level vision tasks, visual quality assessment, and robustness to hallucinations and visual illusions. Comprehensive benchmarking reveals a strong correlation between the multimodal performance of MLLMs and the scale of their training data and parameters. The study also reports the performance of SPHINX-X on selected benchmarks such as HallusionBench, AesBench, ScreenSpot, and MMVP, covering language hallucination, visual illusion, aesthetic perception, GUI element localization, and visual understanding.

In conclusion, SPHINX-X significantly advances MLLM research, building on the SPHINX framework. Through improvements in architecture, training efficiency, and dataset enrichment, SPHINX-X exhibits superior performance and generalization compared to the original model, and scaling up its parameters further amplifies its multimodal understanding capabilities. The release of code and models on GitHub encourages replication and further research. With an optimized architecture and a comprehensive dataset, SPHINX-X offers a robust platform for multimodal, multi-purpose instruction tuning across a range of parameter scales, shedding light on future MLLM research.

Check out the Paper and GitHub. All credit for this research goes to the researchers of this project.

Sana Hassan, a consulting intern at Marktechpost and a dual degree student at IIT Madras, is passionate about applying technology and artificial intelligence to address real-world challenges. With a strong interest in solving practical problems, she brings a new perspective to the intersection of AI and real-life solutions.