Aligning language models with human preferences is the cornerstone for their effective application in many real-world scenarios. With advances in machine learning, the quest to refine these models for better alignment has led researchers to explore beyond traditional methods and delve into preference optimization. This field promises to harness human feedback more intuitively and effectively.

Recent developments have moved from conventional reinforcement learning from human feedback (RLHF) towards approaches such as Direct Preference Optimization (DPO) and SLiC. These methods optimize language models directly on pairwise human preference data, a technique that, while effective, covers only part of the space of possible optimization strategies. A study by researchers at Google Research and Google DeepMind presents the Listwise Preference Optimization (LiPO) framework, which reframes LM alignment as a listwise ranking problem, parallel to the established Learning-to-Rank (LTR) domain. This approach draws on LTR's rich tradition and expands the scope of preference optimization by leveraging listwise data, where several responses to the same prompt are ranked in a list to economize on the evaluation effort required.
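To make the pairwise setting concrete, here is a minimal Python sketch of the standard DPO-style loss for a single (preferred, rejected) response pair. The function names, the argument names, and the value of `beta` are illustrative assumptions, and the log-probabilities are made-up numbers rather than outputs of any real model.

```python
import math


def softplus(x):
    """Numerically stable log(1 + exp(x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def dpo_pair_loss(logp_w, logp_l, ref_logp_w, ref_logp_l, beta=0.1):
    """DPO-style loss for one preferred (w) / rejected (l) response pair.

    logp_* are sequence log-probabilities under the policy being trained,
    ref_logp_* are the same quantities under a frozen reference model.
    All names and the default beta are assumptions for illustration.
    """
    # Implicit reward of each response: beta * log(pi_theta / pi_ref).
    s_w = beta * (logp_w - ref_logp_w)
    s_l = beta * (logp_l - ref_logp_l)
    # -log(sigmoid(s_w - s_l)) equals softplus(-(s_w - s_l)).
    return softplus(-(s_w - s_l))


# Hypothetical log-probabilities, chosen only to exercise the function.
loss_at_init = dpo_pair_loss(-12.0, -15.0, -12.0, -15.0)
loss_improved = dpo_pair_loss(-10.0, -17.0, -12.0, -15.0)
print(loss_at_init, loss_improved)
```

When the policy equals the reference model the margin is zero and the loss is log 2; raising the preferred response's likelihood relative to the rejected one lowers it. Each training example carries only one comparison, which is the limitation listwise methods aim to address.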

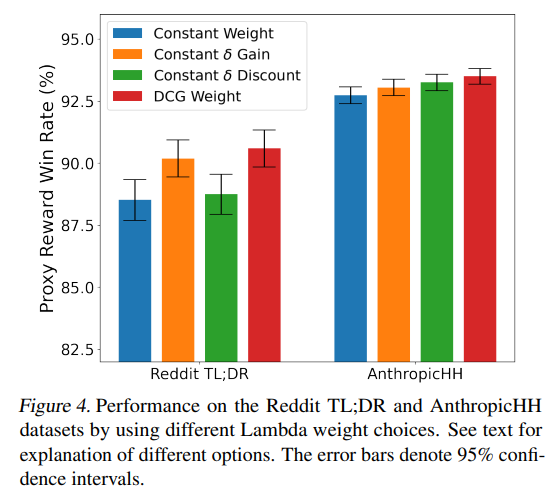

At the heart of LiPO is the recognition of the untapped potential of listwise preference data. Traditionally, human preference data is processed in pairs, a method that, although functional, does not fully exploit the information contained in ranked lists. LiPO addresses this limitation by proposing a framework that can learn more effectively from listwise preferences. Through an in-depth exploration of various ranking objectives within this framework, the study highlights LiPO-λ, which employs a state-of-the-art listwise ranking objective. By outperforming DPO and SLiC, LiPO-λ demonstrates the advantage of listwise optimization for aligning LMs with human preferences.
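The difference between pairwise and listwise objectives can be sketched in a few lines of Python. The function below is a LambdaLoss-style objective from the Learning-to-Rank literature: a pairwise logistic loss in which each pair is weighted by how much swapping the two items would change a DCG-like metric. It is not taken from the paper's code, and the exact LiPO-λ formulation may differ. The gain `2**label - 1`, the `log2` position discount, and all names are assumptions based on the conventional NDCG definition.

```python
import math


def softplus(x):
    """Numerically stable log(1 + exp(x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def lambda_weighted_loss(scores, labels):
    """LambdaLoss-style listwise loss over one list of responses.

    scores: model scores for each response (for example, implicit rewards).
    labels: preference labels for the same responses, higher is better.
    Gain and discount follow the usual NDCG convention (an assumption).
    """
    n = len(scores)
    # Rank position of each item (1 = highest score) under current scores.
    order = sorted(range(n), key=lambda i: -scores[i])
    rank = [0] * n
    for pos, idx in enumerate(order, start=1):
        rank[idx] = pos
    gain = [2.0 ** label - 1.0 for label in labels]
    inv_discount = [1.0 / math.log2(1.0 + r) for r in rank]

    loss = 0.0
    for i in range(n):
        for j in range(n):
            if labels[i] > labels[j]:
                # Weight: metric change if items i and j swapped positions.
                delta = abs(gain[i] - gain[j]) * abs(inv_discount[i] - inv_discount[j])
                # Logistic loss pushing score i above score j.
                loss += delta * softplus(-(scores[i] - scores[j]))
    return loss


# Hypothetical list of three responses with graded labels.
labels = [1.0, 0.5, 0.0]
loss_good = lambda_weighted_loss([2.0, 1.0, 0.0], labels)
loss_bad = lambda_weighted_loss([0.0, 1.0, 2.0], labels)
print(loss_good, loss_bad)
```

Because the weights depend on label gaps and rank positions, mistakes near the top of the list and between very different responses cost more than mistakes between near-ties, which is information a plain pairwise loss does not use.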

The main innovation of LiPO-λ lies in its use of data in list form. By conducting a comprehensive study of ranking objectives within the LiPO framework, the research highlights the effectiveness of listwise objectives, particularly those not previously explored in LM preference optimization, and establishes LiPO-λ as the reference method in this field. The superiority of this method is evident across several evaluation tasks, setting a new standard for aligning LMs with human preferences.

Delving deeper into the methodology, the study rigorously evaluates the performance of different ranking losses unified under the LiPO framework through comparative analysis and ablation studies. These experiments underscore the ability of LiPO-λ to leverage listwise preference data, providing a more efficient means of aligning LMs with human preferences. While existing pairwise methods also benefit from the inclusion of listwise data, LiPO-λ, with its inherently listwise approach, leverages this data more robustly, laying a solid foundation for future advances in LM training and alignment.

This research goes beyond simply presenting a new framework; it bridges the gap between LM preference optimization and the well-established domain of Learning-to-Rank. By introducing the LiPO framework, the study offers a new perspective on how to align LMs with human preferences and highlights the untapped potential of listwise data. The introduction of LiPO-λ as a powerful tool to improve LM performance opens new avenues for research and innovation, promising significant implications for the future of language model training and alignment.

In conclusion, this work achieves several key milestones:

- Introduces the listwise preference optimization framework, which redefines the alignment of language models with human preferences as a listwise ranking challenge.

- Demonstrates the LiPO-λ method, a powerful tool for leveraging listwise data to improve LM alignment and set new benchmarks in the field.

- Unites LM preference optimization with the rich tradition of Learning-to-Rank, offering novel insights and methodologies that promise to shape the future of language model development.

The success of LiPO-λ not only underscores the effectiveness of listwise approaches, but also heralds a new era of research at the intersection of LM training and Learning-to-Rank methodologies. This study advances the field by leveraging the nuanced complexity of human feedback. It sets the stage for future explorations to unlock the full potential of language models to meet human communicative needs.

Check out the Paper. All credit for this research goes to the researchers of this project.

Hello, my name is Adnan Hassan. I'm a consulting intern at Marktechpost and soon to be a management trainee at American Express. I am currently pursuing a dual degree at the Indian Institute of Technology, Kharagpur. I am passionate about technology and I want to create new products that make a difference.
