AI development is shifting from static, task-specific models to dynamic, adaptive agent-based systems suited to a wide range of applications. Building AI systems that gather sensory data and interact effectively with their environments is a long-standing research goal. One advantage of generalist AI is that a single neural model can be trained on multiple tasks and data types, an approach that scales well with data, compute, and model parameters.

However, challenges remain: large foundation models often hallucinate and infer incorrect information because they are insufficiently grounded in their training environments. Current multimodal approaches, which rely on frozen models pretrained separately for each modality, can perpetuate these errors because they lack cross-modal pretraining.

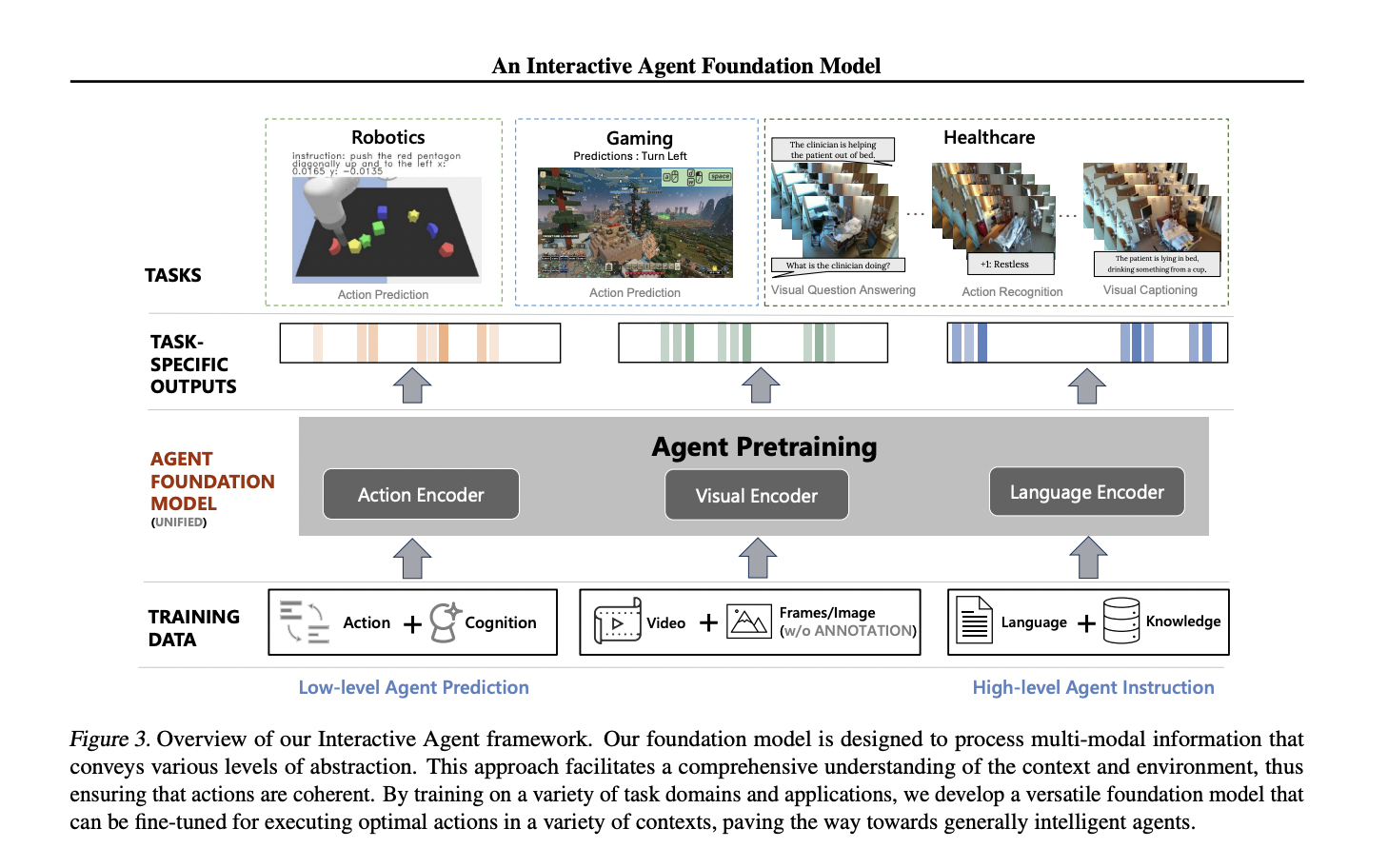

Researchers from Stanford University, Microsoft Research, Redmond, and the University of California, Los Angeles, have proposed the Interactive Agent Base Model, a unified pre-training framework that processes text, visual data, and actions, treating each as separate tokens. It builds on pre-trained language and visual-language models and is trained to predict masked tokens across all modalities. This lets it interact with humans and environments while incorporating visual-language understanding. With 277 million parameters pre-trained jointly across diverse domains, it engages effectively in multimodal settings across a range of virtual environments.
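To make the idea of masked-token prediction over a mixed sequence more concrete, here is a minimal sketch in plain Python. It only shows how text, visual, and action tokens might be interleaved and randomly masked to produce prediction targets. The token names, the `mask_ratio` value, and the `mask_tokens` helper are illustrative assumptions, not the researchers' actual tokenization or masking objective.

```python
import random

MASK = "<mask>"  # hypothetical placeholder symbol for a hidden token


def mask_tokens(tokens, mask_ratio=0.5, seed=0):
    """Hide a random subset of (modality, value) tokens.

    Returns the masked sequence and a dict mapping each masked
    position to the original token a model would be asked to predict.
    """
    rng = random.Random(seed)
    n_mask = max(1, int(len(tokens) * mask_ratio))
    positions = sorted(rng.sample(range(len(tokens)), n_mask))

    masked = list(tokens)
    targets = {}
    for pos in positions:
        modality, _ = tokens[pos]
        targets[pos] = tokens[pos]
        # Keep the modality tag so the model knows what kind of token is missing.
        masked[pos] = (modality, MASK)
    return masked, targets


# Hypothetical interleaved sequence of text, visual, and action tokens.
sequence = [
    ("text", "pick"),
    ("text", "up"),
    ("visual", "patch_0"),
    ("visual", "patch_1"),
    ("action", "move_left"),
    ("action", "grasp"),
]

masked, targets = mask_tokens(sequence, mask_ratio=0.5)
for original, hidden in zip(sequence, masked):
    print(original, "->", hidden)
```

In a real training loop, the model would receive `masked` as input and be scored on how well it recovers the entries in `targets`, regardless of which modality each hidden token belongs to.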

The Interactive Agent Base Model initializes its architecture with pre-trained CLIP ViT-B16 for visual encoding and OPT-125M for action and language modeling. Information is shared across modalities through a linear layer transformation. Because of memory limitations, only a sliding window of previous actions and visual frames is included as input. Sinusoidal positional embeddings are used when predicting masked visual tokens. Unlike previous models that rely on frozen submodules, the entire model is trained jointly during pre-training.
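Two of the ingredients mentioned above, the sliding window over past steps and sinusoidal positional embeddings, can be sketched in a few lines of standard-library Python. This is a generic illustration under stated assumptions: the window size, the embedding dimension, and the helper names `sliding_window` and `sinusoidal_embedding` are hypothetical, and the embedding uses the common Transformer formulation rather than anything confirmed to match the researchers' implementation.

```python
import math


def sliding_window(history, window_size):
    """Keep only the most recent `window_size` steps of the history."""
    if window_size <= 0:
        raise ValueError("window_size must be positive")
    return history[-window_size:]


def sinusoidal_embedding(position, dim):
    """Standard sinusoidal positional embedding for one position.

    Even indices hold sin(position / 10000^(i / dim)) and the
    following odd index holds the matching cosine.
    """
    embedding = []
    for i in range(0, dim, 2):
        freq = 1.0 / (10000 ** (i / dim))
        embedding.append(math.sin(position * freq))
        if i + 1 < dim:
            embedding.append(math.cos(position * freq))
    return embedding


# Hypothetical episode: each step pairs a visual frame with the action taken.
history = [(f"frame_{t}", f"action_{t}") for t in range(10)]

# Only the last 4 steps are fed to the model (window size is an assumption).
window = sliding_window(history, window_size=4)

# Attach a positional embedding to each step inside the window.
for pos, step in enumerate(window):
    print(step, [round(x, 3) for x in sinusoidal_embedding(pos, dim=4)])
```

Bounding the input to a fixed window keeps memory use constant no matter how long the episode runs, at the cost of discarding older context.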

Evaluation on robotics, gaming, and healthcare tasks demonstrates promising results. Although other models outperform it on certain tasks, which the authors attribute to its smaller amount of pre-training data, the method remains competitive, especially in robotics, where it significantly outperforms a comparison model. In gaming tasks, fine-tuning the pre-trained model is markedly more effective than training from scratch. In healthcare applications, the method outperforms several baselines that use CLIP and OPT for initialization, demonstrating the effectiveness of its diverse pre-training approach.

In conclusion, the researchers proposed the Interactive Agent Base Model, which is adept at processing text, actions, and visual inputs and demonstrates effectiveness across several domains. Pre-training on a combination of robotics and gaming data allows the model to model actions competently, and it even shows positive transfer to healthcare tasks during fine-tuning. Its broad applicability in decision-making contexts suggests potential for generalist agents in multimodal systems, opening new opportunities for the advancement of AI.

Check out the Paper. All credit for this research goes to the researchers of this project.

Asjad is an internal consultant at Marktechpost. He is pursuing a B.Tech in Mechanical Engineering at the Indian Institute of Technology, Kharagpur. Asjad is a machine learning and deep learning enthusiast who is always researching applications of machine learning in healthcare.
